Blog

Build a smart IVR for contact center automation with Python. A complete customer service voice AI tutorial with intelligent call routing.

Learn how to add voice control to smart TVs with Python. This tutorial walks through on-device voice search using a wake word, speech recognition, and a local LLM.

Create an on-device restaurant voice agent with Python for hands-free customer ordering, menu inquiries, and order management without cloud dependency.

Voice recognition is reshaping banking, healthcare, manufacturing, and more. Explore ten real-world use cases, each with a hands-on, on-device voice AI solution.

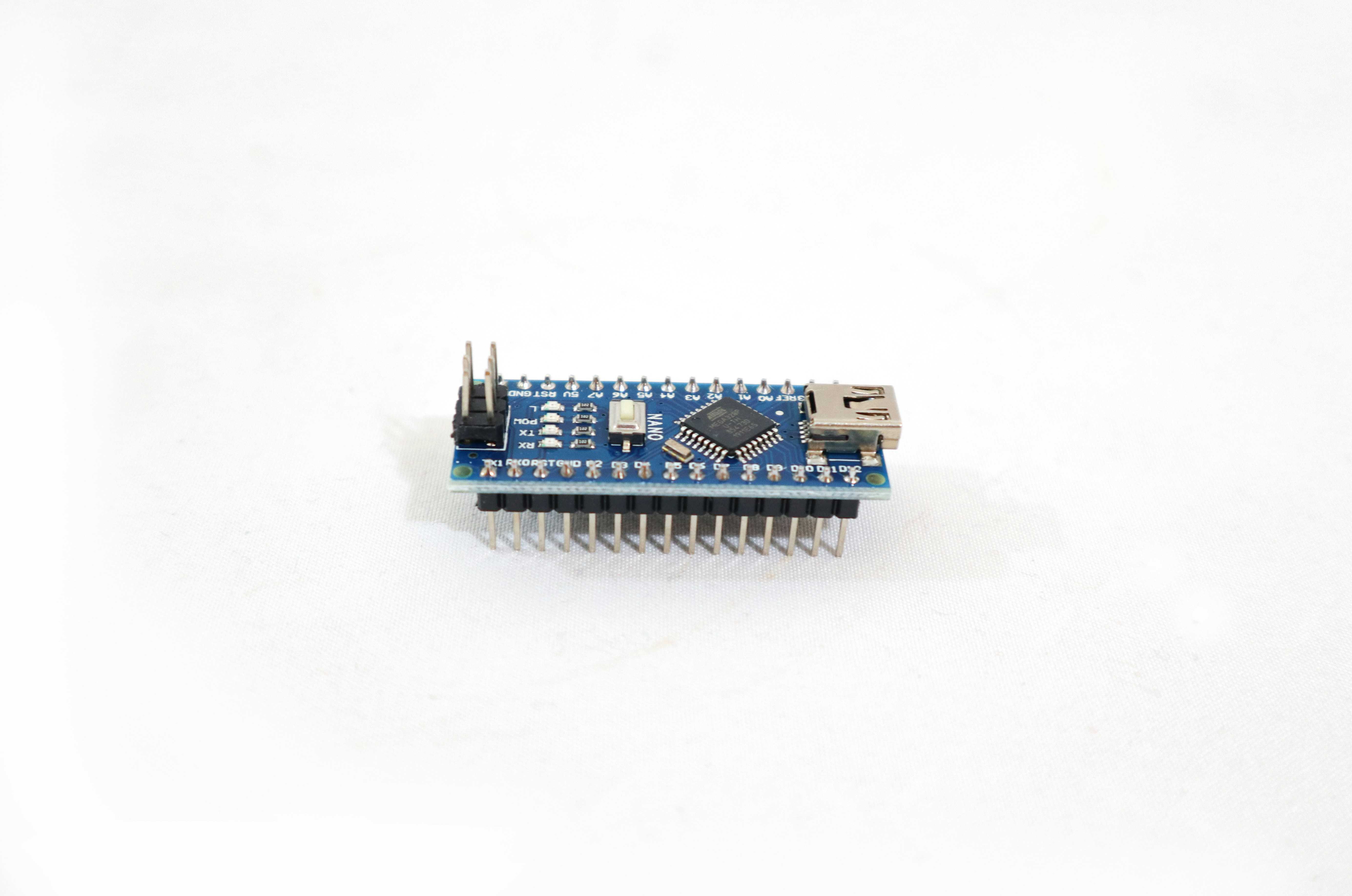

Add voice control to the Arduino Nano 33 BLE Sense. Train a custom wake word and a speech-to-intent model to process voice commands on the microcontroller.

Build a hands-free smart manufacturing voice agent with Python. Use local LLMs and on-device voice control to manage equipment and factory data.

Create a fully on-device hotel room voice assistant with Python for private, cloud-free room control, guest services, and smart IoT automation.

Medical Language Models (Medical LLMs or Healthcare LLMs) are AI systems specifically trained on clinical literature, medical records, and healthcare data to understand medical terminology, generate clinical documentation, and assist with diagnostic reasoning.

Real-time transcription converts speech to text as someone speaks, with less than one second of latency. It processes audio continuously, enabling use cases such as live captions, meeting transcription, voice assistants, and accessibility features across industries.

Integrate real-time noise suppression into your iOS app with the Koala iOS SDK. On-device noise suppression for real-time communication apps.

Build Android speech-to-speech translation with ML Kit and Kotlin. Complete guide using Cheetah STT, Google ML Kit Translation, and Orca TTS for on-device voice translation.

Complete guide to building a real-time meeting summarization tool in Python with streaming speech-to-text and AI summaries. Full code included.

Complete guide to building a voice note-taking app in Python with wake word activation, stop phrase control, and on-device transcription. Full code included.

Learn how to play audio in Python with PvSpeaker. Stream PCM audio output for text-to-speech, audio synthesis, and real-time audio playback on Windows, macOS, and Linux.

Learn how to record audio in React Native apps for Android and iOS. Capture PCM microphone input for speech recognition, voice commands, and real-time audio processing.

Learn how to enable automatic punctuation and correct casing in speech-to-text with Python. Get formatted transcripts with periods, commas, and capitalization.