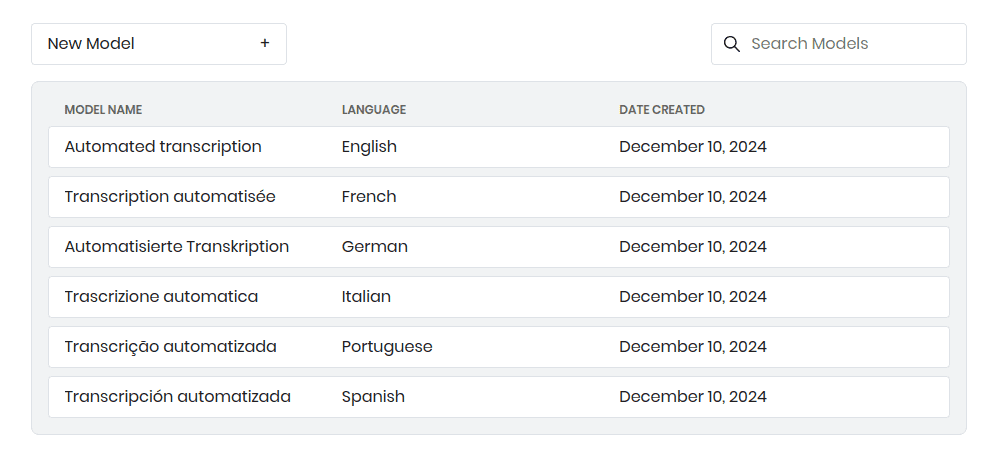

Offline voice recognition has a distinct advantage over cloud APIs: because audio is processed on the device, it offers privacy, no network latency, and cost-effectiveness at scale. Picovoice's on-device speech-to-text engines, Leopard Speech-to-Text and Cheetah Streaming Speech-to-Text, brought these benefits without sacrificing cloud-level accuracy. Leopard and Cheetah now offer more.

Today, Picovoice announces the public availability of new speech-to-text features: timestamps, word confidence, capitalization, punctuation, and diarization. They are available to all users, including those on the Forever-Free Plan.

Speaker Diarization

Speech-to-Text deals with "what is said." It converts speech into text without distinguishing speakers, i.e., without answering "who?". Speaker Diarization differentiates speakers, answering "who spoke when" without analyzing "what is said." Developers therefore use Speech-to-Text and Speaker Diarization together to identify "who said what and when."

Leopard Speech-to-Text now comes with an optimized version of Falcon Speaker Diarization built in!
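To illustrate how "who said what and when" can be assembled from diarized output, here is a minimal sketch. It assumes each recognized word carries a start time, an end time, and an integer speaker tag; the `Word` type, its field names, and the sample data are hypothetical stand-ins for illustration, not the engine's actual output.

```python
from itertools import groupby
from typing import NamedTuple


class Word(NamedTuple):
    # Hypothetical stand-in for one recognized word; field names are assumptions.
    word: str
    start_sec: float
    end_sec: float
    speaker_tag: int


def speaker_turns(words):
    """Merge consecutive words from the same speaker into (speaker, start, end, text) turns."""
    turns = []
    for tag, group in groupby(words, key=lambda w: w.speaker_tag):
        group = list(group)
        text = " ".join(w.word for w in group)
        turns.append((tag, group[0].start_sec, group[-1].end_sec, text))
    return turns


# Made-up sample data: two speakers, one turn each.
words = [
    Word("how", 0.0, 0.2, 1),
    Word("are", 0.25, 0.4, 1),
    Word("you", 0.45, 0.7, 1),
    Word("fine", 1.1, 1.5, 2),
    Word("thanks", 1.55, 2.0, 2),
]

for tag, start, end, text in speaker_turns(words):
    print(f"Speaker {tag} [{start:.2f}s - {end:.2f}s]: {text}")
```

Grouping only consecutive words keeps the turns in conversation order, so the same speaker can appear in several turns.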

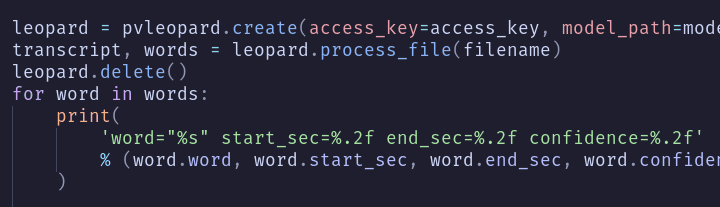

Timestamps

Automatic Speech Recognition with timestamps adds a start and end time to each recognized word or phrase. The output may look like:

"[00:07:20,191 - 00:07:20,447] don't you?"

Timestamps make it easy to jump from the transcript to the corresponding part of the original audio recording, or to add subtitles.
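As a rough sketch of how such a line could be produced, the snippet below formats a start and end time, assumed to be given in seconds, into the subtitle-style layout shown above. The helper names `format_timestamp` and `format_segment` are made up for this example.

```python
def format_timestamp(seconds: float) -> str:
    """Format a time offset in seconds as HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def format_segment(start_sec: float, end_sec: float, text: str) -> str:
    """Prefix a piece of transcript with its [start - end] time range."""
    return f"[{format_timestamp(start_sec)} - {format_timestamp(end_sec)}] {text}"


# 440.191 s is 7 minutes and 20.191 seconds into the recording.
print(format_segment(440.191, 440.447, "don't you?"))
```

Working in whole milliseconds before splitting into hours, minutes, and seconds avoids rounding surprises at the boundaries.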

Timestamps are widely used in speech analytics, media and entertainment applications, and the transcription of conversations such as interviews, panel discussions, and legal depositions. In speech analytics, someone analyzing a long audio file may want to jump to the beginning of a particular section. Timestamped transcriptions are likewise helpful when reviewing court transcripts or interviews. In media and entertainment, auto-generated subtitles with timestamps are used for news, podcasts, movies, and even YouTube videos. They improve accessibility and make the content discoverable, which in turn helps boost its search engine presence.

Word Confidence

Word confidence is also known as Word Confidence Estimation, or WCE for short. At its core, voice recognition technology uses prediction models and returns each output with a certain probability. Reporting that probability, i.e., the confidence level, for each recognized word is called "word confidence." The confidence level is a value between 0.0 and 1.0, with 0.0 being the lowest and 1.0 the highest. Word confidence is not the same as accuracy: it is the engine's own estimate for a single word, whereas accuracy is measured against a reference transcript. (Word Error Rate, WER, is the most commonly used metric for speech-to-text accuracy.)

A language-learning app such as Duolingo could serve as an example use case for WCE. When a user pronounces "bad", speech-to-text may return "bad", "dad", and "bed" with different probabilities. Based on these probabilities, the app can provide a score and feedback to the user. Open-domain voice assistants such as Siri and Alexa also benefit from WCE. A voice assistant can be designed to prompt the user with a question or alternatives when phrases are recognized with low confidence, instead of responding directly.
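The voice assistant case could be sketched as follows. This is a minimal illustration that assumes each recognized word comes with a confidence between 0.0 and 1.0; the `Word` type, the 0.5 threshold, and the sample values are assumptions chosen for the example, not recommended settings.

```python
from typing import NamedTuple


class Word(NamedTuple):
    # Hypothetical stand-in for one recognized word and its confidence.
    word: str
    confidence: float


def low_confidence_words(words, threshold=0.5):
    """Return the words whose confidence falls below the threshold."""
    return [w.word for w in words if w.confidence < threshold]


def respond(words, threshold=0.5):
    """Ask for confirmation when any word is doubtful, otherwise accept the command."""
    if low_confidence_words(words, threshold):
        heard = " ".join(w.word for w in words)
        return f'Did you say "{heard}"?'
    return "OK"


# Made-up recognition result: the last word is uncertain.
command = [Word("turn", 0.96), Word("on", 0.93), Word("the", 0.91), Word("lights", 0.38)]

print(low_confidence_words(command))
print(respond(command))
```

The right threshold depends on the application: a stricter value prompts the user more often but lets fewer misrecognitions through.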

Capitalization and Punctuation

Capitalization, also known as truecasing in Natural Language Processing (NLP), deals with capitalizing each word appropriately. Sentence-case capitalization (capitalizing the first word of a sentence) and proper-name capitalization are its most common uses. AI-powered capitalization is an important feature for speech-to-text software as it makes the text output more readable. Truecasing improves not only the text rEaDaBILiTY for humans but also the quality of the input for certain NLP tasks, where uncased text is otherwise considered too noisy. Along with capitalization, punctuation also contributes to the readability of machine-generated transcripts.

What's next?

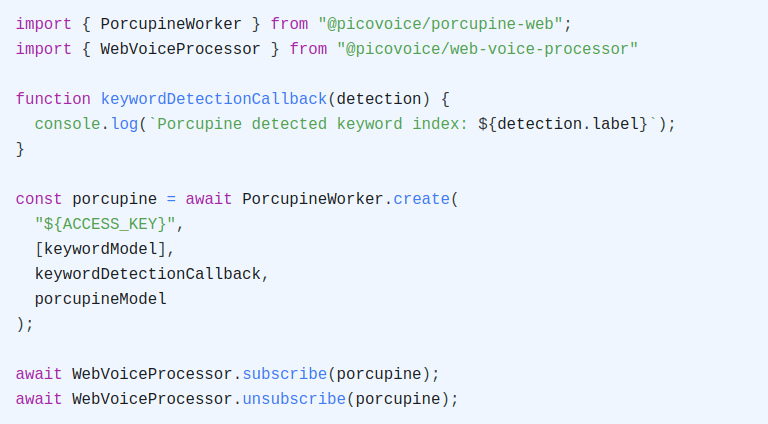

Start building with Leopard Speech-to-Text for recordings or Cheetah Streaming Speech-to-Text for real-time transcription, using your favourite SDK.

    import pvleopard

    # access_key is your Picovoice AccessKey; path points to an audio file
    o = pvleopard.create(access_key)
    transcript, words = o.process_file(path)