AI Assistants and AI Agents are changing the modern world in ways that once seemed like science fiction. Powered by Large Language Model (LLM) technology, the new generation of generative AI assistants has awed the public and put AI in the tool belt of even the techno-skeptic. By integrating voice capabilities, LLM-based assistants can offer a more intuitive, efficient, and user-friendly experience. With increasingly natural voice-to-voice interactions, Voice Agents are opening new possibilities in call centers and customer support. However, these Voice Assistants require voice AI to work in harmony with the LLM intelligence to provide a satisfactory customer experience.

The recent GPT-4o mobile demo proved that an LLM-powered voice assistant, done correctly, could create a truly futuristic experience. However, we must remember that OpenAI's ChatGPT app is not running inference on the mobile device. The mobile device streams the prompts to a large, server-powered, cloud-based model for inference, which in turn streams its response back. Using a phone for the demo is a handy illusion of optimization though, isn't it?

What if we could run a similar experience locally, without the privacy, latency, and connectivity concerns of a cloud-based approach? Picovoice's AI runs 100% on-device: voice processing and LLM inference are performed locally, without the user's request or response moving through a third-party API. Products built with Picovoice are private by design, compliant (GDPR, HIPAA, ...), and real-time, without unreliable network (API) latency.

First, Let's See It In Action

Before building an LLM-powered voice assistant, let's check out the experience. Picovoice SDKs run on both Android and iOS, allowing for cross-platform mobile experiences.

Picovoice also supports Linux, macOS, Windows, Raspberry Pi, and all modern web browsers (Chrome, Safari, Edge, and Firefox).

Running Phi-2 on Pixel 6a Android Phone

The video below shows Picovoice's LLM-powered voice assistant running Microsoft's Phi-2 model with voice-to-voice communication on a modest Pixel 6a Android phone. All AI processing is done on the device, but there is still enough CPU available for background phone activity.

Anatomy of an LLM Voice Assistant

An AI voice assistant powered by a local LLM must accomplish four key tasks:

- Detect when the user speaks the wake word.

- Understand the user's request, whether it's a question or a command.

- Generate a response to the request using the LLM.

- Convert the LLM's text response into synthesized speech that can be played back to the user.
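The four tasks above can be read as one hand-off chain. The sketch below is a minimal illustration of that chain, not Picovoice's API: the four interfaces (`WakeWordDetector`, `SpeechToText`, `LanguageModel`, `TextToSpeech`) and the toy lambdas in `demo()` are hypothetical stand-ins chosen only to show how each stage feeds the next.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of one wake-word-triggered interaction. Every interface here is a hypothetical stand-in. */
final class VoiceAssistantPipelineSketch {

    /** Task 1: reports whether a frame of audio contains the wake word. */
    interface WakeWordDetector { boolean detect(short[] frame); }

    /** Task 2: turns the audio of the request into text. */
    interface SpeechToText { String transcribe(List<short[]> requestFrames); }

    /** Task 3: generates a text response for the request. */
    interface LanguageModel { String respond(String prompt); }

    /** Task 4: turns the text response into audio samples. */
    interface TextToSpeech { short[] synthesize(String text); }

    /** Skips audio until the wake word, then runs the remaining frames through tasks 2 to 4. */
    static short[] handleInteraction(List<short[]> frames, WakeWordDetector wakeWord,
                                     SpeechToText stt, LanguageModel llm, TextToSpeech tts) {
        int i = 0;
        while (i < frames.size() && !wakeWord.detect(frames.get(i))) {
            i++;
        }
        if (i == frames.size()) {
            return new short[0]; // the wake word was never spoken, so nothing is played back
        }
        List<short[]> request = frames.subList(i + 1, frames.size());
        String prompt = stt.transcribe(request);
        String response = llm.respond(prompt);
        return tts.synthesize(response);
    }

    /** Toy run: a frame holding 1 is the "wake word"; the engines are trivial fakes. */
    static short[] demo() {
        List<short[]> frames = new ArrayList<>();
        for (short marker : new short[] {0, 0, 1, 5, 6}) {
            frames.add(new short[] {marker});
        }
        return handleInteraction(
                frames,
                frame -> frame[0] == 1,
                request -> "heard " + request.size() + " frames",
                prompt -> "You said: " + prompt,
                text -> new short[text.length()]); // one silent sample per character
    }

    public static void main(String[] args) {
        System.out.println("Synthesized samples: " + demo().length);
    }
}
```

This version waits for each stage to finish before starting the next. A low-latency assistant would instead stream between stages, as the following sections describe.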

1. Wake Word

A Wake Word Engine is voice AI software designed to recognize a specific phrase. Each time you say "Alexa," "Siri," or "Hey Google," you activate their wake word engine.

Wake Word Detection is also known as Keyword Spotting, Hotword Detection, and Voice Activation.

2. Streaming Speech-to-Text

Once the wake word is detected, we need to understand the user's request. This is achieved using a Speech-to-Text engine. For latency-sensitive applications, we use real-time Streaming Speech-to-Text. Unlike traditional speech-to-text (e.g., OpenAI's Whisper), which processes the entire utterance after the user finishes speaking, Streaming Speech-to-Text transcribes speech in real time as the user speaks.

Speech-to-Text (STT) is also referred to as Automatic Speech Recognition (ASR), and Streaming Speech-to-Text can also be called Real-Time Speech-to-Text.
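To make the streaming idea concrete, here is a minimal sketch in which the transcript grows frame by frame instead of appearing all at once at the end. The `StreamingTranscriber` interface, the `Partial` type, and the `ToyTranscriber` are hypothetical and exist only for illustration; a real engine exposes its own API.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of consuming a streaming speech-to-text engine. All types are hypothetical. */
final class StreamingTranscriptionSketch {

    /** Text recognized from the latest frame, plus whether the speaker has finished. */
    static final class Partial {
        final String text;
        final boolean endpoint;
        Partial(String text, boolean endpoint) {
            this.text = text;
            this.endpoint = endpoint;
        }
    }

    /** Hypothetical streaming engine: fed one frame at a time, flushed at the end. */
    interface StreamingTranscriber {
        Partial process(short[] frame);
        String flush();
    }

    /** Feeds frames as they arrive and records the transcript after every frame. */
    static List<String> transcribeLive(List<short[]> frames, StreamingTranscriber engine) {
        List<String> snapshots = new ArrayList<>();
        StringBuilder transcript = new StringBuilder();
        for (short[] frame : frames) {
            Partial partial = engine.process(frame);
            transcript.append(partial.text);
            if (partial.endpoint) {
                transcript.append(engine.flush()); // collect any text still buffered
                snapshots.add(transcript.toString());
                break;
            }
            snapshots.add(transcript.toString());
        }
        return snapshots;
    }

    /** Toy engine: frame value n > 0 "recognizes" word n; a zero frame is treated as silence. */
    static final class ToyTranscriber implements StreamingTranscriber {
        private static final String[] WORDS = {"what ", "time ", "is ", "it"};

        public Partial process(short[] frame) {
            int id = frame[0];
            if (id == 0) {
                return new Partial("", true);
            }
            return new Partial(WORDS[id - 1], false);
        }

        public String flush() {
            return "?";
        }
    }

    static List<String> demo() {
        List<short[]> frames = new ArrayList<>();
        for (short id : new short[] {1, 2, 3, 4, 0}) {
            frames.add(new short[] {id});
        }
        return transcribeLive(frames, new ToyTranscriber());
    }

    public static void main(String[] args) {
        for (String snapshot : demo()) {
            System.out.println(snapshot);
        }
    }
}
```

Each snapshot is usable the moment it is produced, which is what lets the rest of the pipeline start working before the user has finished speaking.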

3. LLM Inference

After converting the user's speech to text, we prompt the local LLM with the text of the request and let it generate the appropriate response. The LLM produces the response incrementally, token by token, which allows us to run speech synthesis simultaneously, reducing latency (more on this later). LLM inference is computationally intensive, requiring techniques to minimize memory and compute usage, such as quantization (compression) and platform-specific hardware acceleration.
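As a rough intuition for what quantization does, the sketch below stores each weight as a small signed integer plus one shared scale. This is plain symmetric uniform quantization, shown only as a generic illustration; it is not the picoLLM Compression algorithm, and the class and method names are made up for this example.

```java
/** Symmetric uniform quantization with one scale per tensor. Generic illustration only. */
final class UniformQuantizationSketch {

    /** Largest integer level for a signed representation, e.g. 7 for 4 bits. Requires bits >= 2. */
    static int maxLevel(int bits) {
        return (1 << (bits - 1)) - 1;
    }

    /** Chooses the scale so that the largest-magnitude weight maps to the largest level. */
    static float scaleFor(float[] weights, int bits) {
        float maxAbs = 0f;
        for (float w : weights) {
            maxAbs = Math.max(maxAbs, Math.abs(w));
        }
        return maxAbs == 0f ? 1f : maxAbs / maxLevel(bits);
    }

    /** Rounds each weight to the nearest integer level and clamps it to the representable range. */
    static int[] quantize(float[] weights, int bits, float scale) {
        int limit = maxLevel(bits);
        int[] levels = new int[weights.length];
        for (int i = 0; i < weights.length; i++) {
            int rounded = Math.round(weights[i] / scale);
            levels[i] = Math.max(-limit, Math.min(limit, rounded));
        }
        return levels;
    }

    /** Recovers approximate weights from the integer levels. */
    static float[] dequantize(int[] levels, float scale) {
        float[] weights = new float[levels.length];
        for (int i = 0; i < levels.length; i++) {
            weights[i] = levels[i] * scale;
        }
        return weights;
    }

    /** Largest absolute difference between the original and the reconstructed weights. */
    static float maxAbsError(float[] weights, int bits) {
        float scale = scaleFor(weights, bits);
        float[] restored = dequantize(quantize(weights, bits, scale), scale);
        float worst = 0f;
        for (int i = 0; i < weights.length; i++) {
            worst = Math.max(worst, Math.abs(weights[i] - restored[i]));
        }
        return worst;
    }

    public static void main(String[] args) {
        float[] weights = {0.7f, -0.3f, 0.0f, 0.12f};
        System.out.println("4-bit max error: " + maxAbsError(weights, 4));
        System.out.println("8-bit max error: " + maxAbsError(weights, 8));
    }
}
```

Fewer bits mean less memory per weight but a coarser grid, so the reconstruction error grows. Practical LLM quantization schemes are considerably more sophisticated about where they spend their bits.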

Are you a deep learning researcher? Learn how picoLLM Compression deeply quantizes LLMs while minimizing loss by optimally allocating bits across and within weights.

Are you a software engineer? Learn how picoLLM Inference Engine runs x-bit quantized Transformers on CPU and GPU across Linux, macOS, Windows, iOS, Android, Raspberry Pi, and Web.

4. Streaming Text-to-Speech

A Text-to-Speech (TTS) engine takes text input and synthesizes corresponding speech audio. Since LLMs produce responses token by token in a stream, we utilize a TTS engine capable of handling a continuous stream of text inputs to minimize latency. This is known as Streaming Text-to-Speech.

Make Your Own Local Voice Assistant for Android

The following guide will walk you through integrating each element of the end-to-end AI pipeline into your Android project. If you just want to skip right to the full app and start experimenting, check out the pico-cookbook repository on GitHub.

1. Voice Activation

Add the Picovoice Porcupine Wake Word Engine to your build.gradle file:

Then, you can create an instance of the wake word engine, and start processing audio in real time:

Replace $ACCESS_KEY with yours obtained from Picovoice Console and $KEYWORD_PATH with the absolute path to the keyword model file (.ppn) that you trained on Picovoice Console.

A remarkable feature of Porcupine is that it lets you train a custom wake word by just typing it in!

2. Speech Recognition

Add the Picovoice Cheetah Streaming Speech-to-Text Engine to your dependencies:

Then you can initialize an instance of the streaming speech-to-text engine, and start transcribing audio in real time:

Replace $ACCESS_KEY with your AccessKey, which you can obtain from Picovoice Console, and $MODEL_PATH with the path to the language model file (.pv). You can either customize and train a model on Picovoice Console or use the default version from the Cheetah GitHub Repository.

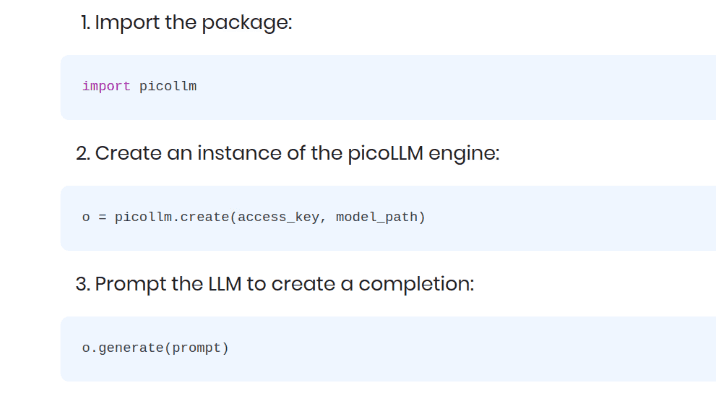

3. Response Generation

Add the picoLLM Inference Engine to your dependencies:

Initialize an instance of the LLM inference engine, create a dialog helper object, and start responding to the user's prompts:

Replace $ACCESS_KEY with yours obtained from Picovoice Console and $LLM_MODEL_PATH with the path to the picoLLM model (.pllm) downloaded from Picovoice Console.

picoLLM supports a variety of open-weight models such as Llama, Gemma, Mistral and Mixtral.

Note that the LLM's .generate function provides the response in pieces (i.e., token by token) through the callback supplied via setStreamCallback. We pass every token to the Streaming Text-to-Speech engine as it becomes available. When the .generate function returns, we notify the Streaming Text-to-Speech engine that there is no more text and finalize the speech synthesis.
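The hand-off described above can be sketched with stand-in types. `TokenStreamingLlm`, `StreamingSynthesizer`, and the toy implementations below are hypothetical and only mirror the shape of the interaction (stream tokens in, play audio out, flush at the end); they are not the picoLLM or Orca APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Sketch of piping streamed LLM tokens into a streaming synthesizer. All types are hypothetical. */
final class TokenToSpeechSketch {

    /** Hypothetical LLM: calls onToken for every token and returns the full completion. */
    interface TokenStreamingLlm {
        String generate(String prompt, Consumer<String> onToken);
    }

    /** Hypothetical synthesizer: may return no audio until it has buffered enough text. */
    interface StreamingSynthesizer {
        short[] synthesize(String textChunk);
        short[] flush();
    }

    /** Speaks the response while it is still being generated, then flushes what is left. */
    static String speakWhileGenerating(String prompt, TokenStreamingLlm llm,
                                       StreamingSynthesizer tts, Consumer<short[]> player) {
        String completion = llm.generate(prompt, token -> {
            short[] pcm = tts.synthesize(token);
            if (pcm.length > 0) {
                player.accept(pcm); // audio is available before generation has finished
            }
        });
        short[] tail = tts.flush(); // no more text is coming: synthesize the remainder
        if (tail.length > 0) {
            player.accept(tail);
        }
        return completion;
    }

    /** Toy synthesizer: emits one silent sample per character, but only up to the last space. */
    static final class ToySynthesizer implements StreamingSynthesizer {
        private final StringBuilder pending = new StringBuilder();

        public short[] synthesize(String textChunk) {
            pending.append(textChunk);
            int lastSpace = pending.lastIndexOf(" ");
            if (lastSpace < 0) {
                return new short[0];
            }
            short[] pcm = new short[lastSpace + 1];
            pending.delete(0, lastSpace + 1);
            return pcm;
        }

        public short[] flush() {
            short[] pcm = new short[pending.length()];
            pending.setLength(0);
            return pcm;
        }
    }

    /** Toy run: returns the size of every audio chunk handed to the player, in order. */
    static List<Integer> demo() {
        TokenStreamingLlm llm = (prompt, onToken) -> {
            StringBuilder completion = new StringBuilder();
            for (String token : new String[] {"It", " is", " noon", "."}) {
                onToken.accept(token);
                completion.append(token);
            }
            return completion.toString();
        };
        List<Integer> chunkSizes = new ArrayList<>();
        speakWhileGenerating("What time is it?", llm, new ToySynthesizer(),
                pcm -> chunkSizes.add(pcm.length));
        return chunkSizes;
    }

    public static void main(String[] args) {
        System.out.println("Audio chunk sizes: " + demo());
    }
}
```

The first chunk reaches the player after the second token rather than after the whole response, which is where the latency saving comes from.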

4. Speech Synthesis

Add Picovoice Orca Streaming Text-to-Speech Engine to your build.gradle dependencies:

Initialize an instance of Orca, and start synthesizing audio in real time:

Replace $ACCESS_KEY with yours obtained from Picovoice Console and $MODEL_PATH with the path to the voice model file (.pv) that you can pick from the Orca GitHub Repository.

What's Next?

The voice assistant we've created above is functional but basic. The Picovoice platform allows you to create more complex, multi-dimensional AI software products by mixing and matching our tools. For instance, we could add:

Personalization: We could let the AI assistant know not only what is being said, but also who is saying it. This would let us build a personal profile for each speaker, with a history of past interactions that informs how the assistant responds in future ones. We can achieve this with the Picovoice Eagle Speaker Recognition Engine.

Multi-Turn Conversations: While saying the wake word is a voice activation mechanism for long-running, always-on systems, it becomes cumbersome when we have to go back-and-forth with the assistant during a prolonged multi-turn conversation. We could switch to a different form of activation after the initial interaction, to smooth conversations out. Using the Picovoice Cobra Voice Activity Detection Engine, we could simply detect when the speaker is speaking and when they are waiting for a response.
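One way to picture voice-activity-based turn taking is sketched below, assuming the detector reports a per-frame probability that speech is present. The threshold and the number of silent frames that end a turn (`hangoverFrames`) are illustrative parameters invented for this example, not values taken from Cobra.

```java
import java.util.ArrayList;
import java.util.List;

/** Sketch of splitting a stream of voice probabilities into speaker turns. Hypothetical parameters. */
final class TurnDetectionSketch {

    /**
     * Returns turns as {startFrame, endFrameExclusive}. A turn starts at the first frame whose
     * probability reaches the threshold and ends once hangoverFrames consecutive frames fall below it.
     */
    static List<int[]> findTurns(float[] voiceProbabilities, float threshold, int hangoverFrames) {
        List<int[]> turns = new ArrayList<>();
        int start = -1;  // -1 means we are waiting for the user to start speaking
        int silent = 0;  // consecutive unvoiced frames inside the current turn
        for (int i = 0; i < voiceProbabilities.length; i++) {
            boolean voiced = voiceProbabilities[i] >= threshold;
            if (start < 0) {
                if (voiced) {
                    start = i;
                    silent = 0;
                }
            } else if (voiced) {
                silent = 0; // a short pause was bridged, the turn continues
            } else if (++silent >= hangoverFrames) {
                turns.add(new int[] {start, i - silent + 1}); // the user is now waiting for a response
                start = -1;
            }
        }
        if (start >= 0) {
            turns.add(new int[] {start, voiceProbabilities.length - silent});
        }
        return turns;
    }

    static List<int[]> demo() {
        float[] probabilities = {0.1f, 0.9f, 0.8f, 0.2f, 0.9f, 0.1f, 0.1f, 0.1f, 0.95f, 0.9f};
        return findTurns(probabilities, 0.5f, 2);
    }

    public static void main(String[] args) {
        for (int[] turn : demo()) {
            System.out.println("Turn from frame " + turn[0] + " to " + turn[1]);
        }
    }
}
```

The hangover count keeps a brief mid-sentence pause from ending the turn too early. Tuning it trades responsiveness against the risk of cutting the speaker off.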