Blog

As the demand for large language models (LLMs) continues to grow, so does the need for efficient and cost-effective deployment solutions....

Over the years, Large Language Models (LLMs) have dominated the scene. However, a notable shift is underway towards Small Language Models (S...

Learn how to convert a stream of text into audio in real-time, enabling AI voice assistants with no audio delay....

Learn how to run Llama 2 and Llama 3 on iOS with the picoLLM Inference Engine iOS SDK. Runs locally on an iOS device....

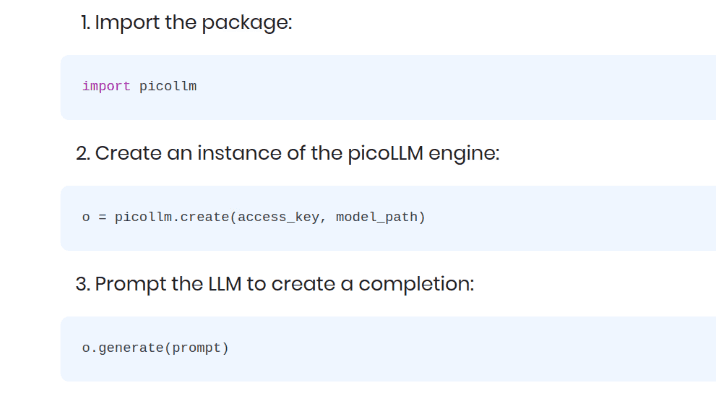

Learn how to run Llama 2 and Llama 3 in Python with the picoLLM Inference Engine Python SDK. Runs locally on Linux, macOS, Windows, and Rasp...

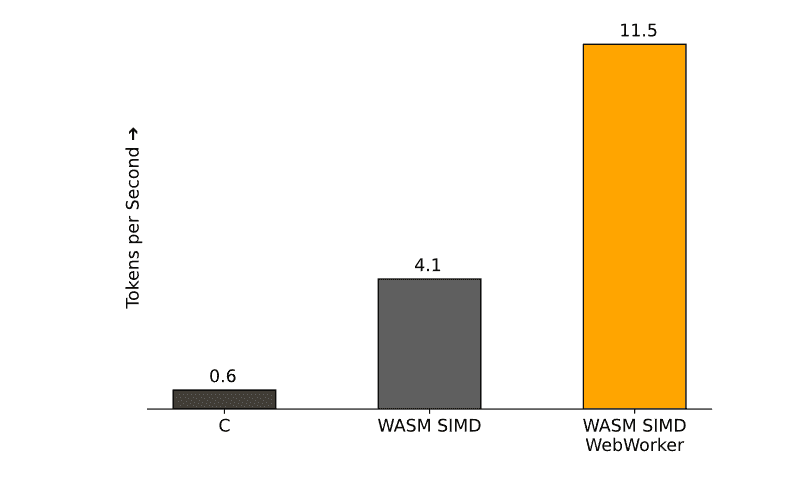

Run Llama Locally on Any Browser: GPU-Free Guide with picoLLM JavaScript SDK for Chrome, Edge, Firefox, & Safari...

Large Language Models (LLMs), such as Llama 2 and Llama 3, represent significant advancements in artificial intelligence, fundamentally chan...

Learn how to run Llama 2 and Llama 3 on Android with the picoLLM Inference Engine Android SDK. Runs locally on an Android device....

Dual Streaming Text-to-Speech allows you to synthesize an incoming stream of text into consistent audio in real time, making it ideal for latenc...

Create an on-device, LLM-powered Voice Assistant for iOS using Picovoice on-device voice AI and picoLLM local LLM platforms....

Create an on-device, LLM-powered Voice Assistant for Android using Picovoice on-device voice AI and picoLLM local LLM platforms....

Learn how to run LLMs locally using the picoLLM Inference Engine Python SDK. picoLLM performs LLM inference on-device, keeping your data pri...

Create a local LLM-powered Voice Assistant for Web Browsers using Picovoice on-device voice AI and picoLLM local LLM platforms....

Run local LLMs using Node.js with picoLLM, enabling AI assistants to run on-device, on-premises, and in private clouds without sacrificing a...

picoLLM is a cross-browser local LLM inference engine that runs on all major browsers, including Chrome, Safari, Edge, Firefox, and Opera....

Learn how to run local LLMs on macOS using picoLLM, enabling AI assistants to run on-device, on-premises, and in private clouds without sacr...