Today, we are announcing the launch of the picoLLM platform. picoLLM is the end-to-end local large language model (LLM) platform that enables AI assistants to run on-device, on-premises, and in the private cloud without sacrificing accuracy.

Developers can deploy compressed open-weight LLMs for free across embedded systems, mobile devices, web, workstations, on-prem, and private cloud using the picoLLM Inference Engine. picoLLM Compression is publicly available for enterprises to optimize custom in-house LLMs for local deployment. picoLLM GYM is available in private beta for select partners to enable compression-aware training of small language models (SLMs).

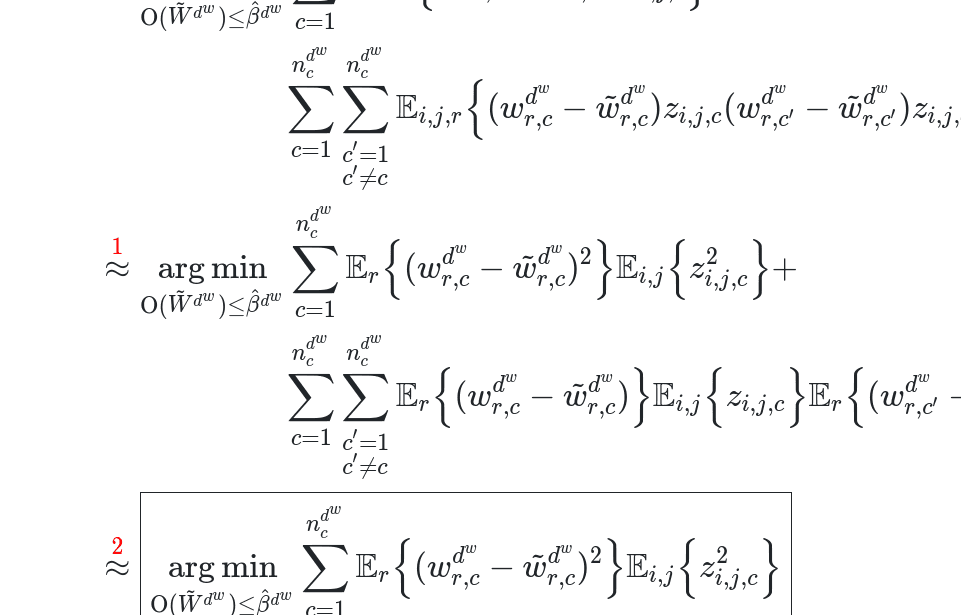

Are you a deep learning researcher? Learn how picoLLM Compression deeply quantizes LLMs while minimizing loss by optimally allocating bits across and within weights [🧑💻].

Are you a software engineer? Learn how picoLLM Inference Engine runs X-Bit Quantized Transformers on CPU and GPU across Linux, macOS, Windows, iOS, Android, Raspberry Pi, and Web [🧑💻].

Breaking New Ground

LLMs are the fastest-adopted technology in history. Yet their eye-watering compute requirements hinder widespread deployment beyond a few vendors' APIs, forcing enterprises to either relinquish their privacy, security, and independence or get left behind in the GenAI race. picoLLM-compressed AI agents can run anywhere on your terms, enabling use cases previously deemed unattainable:

- Embedded Systems: picoLLM fits into embedded systems, enabling use cases that can't afford reliance on connectivity, such as automotive. Learn how to run Microsoft's Phi model on a Raspberry Pi [🎥][🧑💻].
- Mobile Devices: picoLLM enables on-device LLM inference on mobile devices. OEMs can integrate GenAI without exposing themselves to unbounded API costs. Learn how to deploy LLM models locally into Android [🎥][🧑💻] or iOS [🎥][🧑💻].
- Web: picoLLM can run 100% offline within webpages and across all browsers. Learn why picoLLM is the only cross-browser local LLM inference engine that can run across all modern browsers, including Chrome, Safari, Edge, and Firefox [🧑💻].
- Workstations: Pinnacle LLMs demand data center GPU clusters with 100s GB of VRAM. picoLLM Compression busts this myth! Learn how we efficiently run Llama-3-70b on a consumer RTX GPU available on workstations and laptops [🎥][🧑💻].
- Serverless Computing: picoLLM enables serverless LLM inference for scalable and low-ops deployment on any cloud provider, including private clouds. Would you like to learn how we deployed Meta's Llama-3-8b on AWS Lambda? [🧑💻]
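As a rough back-of-the-envelope check on the workstation point above, the sketch below estimates how much memory the weights alone occupy at different bit widths. It assumes roughly 70 billion parameters for Llama-3-70b and ignores the KV cache, activations, and runtime overhead. The function name `weight_memory_gb` and the bit widths shown are illustrative choices, not picoLLM settings.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 70e9  # assumption: ~70 billion parameters
    for bits in (16, 8, 4, 2):
        # Weights only; real deployments also need memory for the KV cache.
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(params, bits):.1f} GB")
```

At 16 bits per weight the weights come to about 140 GB, which is why uncompressed 70B-class models are associated with multi-GPU servers. Fitting the same weights on a single card with, say, 24 GB of VRAM leaves room for fewer than 3 bits per weight on average.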

Are you building an LLM-powered Voice Assistant? picoLLM minimizes latency when integrated with Cheetah Streaming Speech-to-Text and Orca Streaming Text-to-Speech. Learn how to create a real-time LLM-powered Voice Agent in less than 400 lines of Python [🧑💻].

Start Building

Picovoice is founded and operated by engineers. We love developers who are not afraid to get their hands dirty and are itching to build. picoLLM is 💯 free for open-weight models. We promise never to make you talk to a salesperson or ask for a credit card. We currently support the Gemma 👧, Llama 🦙, Mistral ⛵, Mixtral 🍸, and Phi φ families of LLMs, and support for many more is underway.

    o = picollm.create(access_key, model_path)
    res = o.generate(prompt)
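The two calls above can be wrapped into a small script along the following lines. This is a minimal sketch, not an official sample: it assumes the `picollm` Python package is installed, that the object returned by `generate` exposes the text as `.completion`, and that the engine offers a `release()` method for cleanup, so check the SDK documentation for the exact interface. The environment variable names and the `complete` helper are hypothetical.

```python
import os


def complete(engine, prompt: str) -> str:
    """Run one prompt through an engine exposing generate() and return the text."""
    res = engine.generate(prompt)
    # Assumption: the result object exposes the generated text as `.completion`.
    return res.completion


def main() -> None:
    import picollm  # imported lazily so the helper above works without the SDK

    # Hypothetical environment variables holding the AccessKey and model file path.
    access_key = os.environ["PICOVOICE_ACCESS_KEY"]
    model_path = os.environ["PICOLLM_MODEL_PATH"]

    o = picollm.create(access_key, model_path)
    try:
        print(complete(o, "Suggest a name for a pet llama."))
    finally:
        # Assumption: release() frees the native resources held by the engine.
        o.release()


if __name__ == "__main__":
    if "PICOVOICE_ACCESS_KEY" in os.environ and "PICOLLM_MODEL_PATH" in os.environ:
        main()
    else:
        print("Set PICOVOICE_ACCESS_KEY and PICOLLM_MODEL_PATH to run the demo.")
```

Keeping the prompt handling in a helper that only depends on a `generate` method makes it easy to exercise the surrounding application logic with a stub engine before a model file is downloaded.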

picoLLM for Enterprises

Picovoice is a deep tech startup that invests heavily in creating bleeding-edge AI technology and making it readily accessible to enterprise developers. If you are interested in picoLLM and have technical resources, we highly recommend asking your engineers to use the Picovoice Forever-Free Plan to experiment, benchmark, and build a proof-of-concept. We would love the chance to earn your trust 🤝 organically and early on.

Once you've defined your problem with a clear scope 🔎, budget 💰, and timeline 🗓️, we recommend contacting our enterprise sales team [🤵].