Earlier this year, Meta announced the release of the Llama 3 family of AI language models. The largest in this family, Llama-3-70B, has 70 billion parameters and ranks among the most powerful LLMs available. It has often outperformed state-of-the-art models like Gemini-Pro 1.0 and Claude 3 Sonnet. However, running Llama-3-70B requires more than 140 GB of VRAM, which is beyond the capacity of most standard computers. Even on cloud platforms, GPUs with that much memory are uncommon and can be expensive.

Quantization is a potential solution for shrinking models. However, as indicated by several studies, common quantization techniques can noticeably degrade the output quality of Llama-3-70B, especially at the 2-bit depth needed to fit consumer-grade GPUs with 24 GB of VRAM, potentially rendering the quantized model ineffective.
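The memory figures above follow from simple arithmetic. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes 16-bit weights for the unquantized model, counts weights only (activations and the KV cache add to the total, which is presumably why the requirement is "more than" 140 GB), and uses decimal gigabytes.

```python
# Rough weight-only memory estimate for a 70-billion-parameter model.
# Assumptions: decimal gigabytes, no activation or KV-cache overhead.
PARAMS = 70e9   # parameter count of Llama-3-70B
GB = 1e9        # bytes per (decimal) gigabyte
VRAM_GB = 24    # memory of a consumer-grade GPU such as the RTX 4090


def weight_size_gb(num_params, bits_per_weight):
    """Return the size of the weights alone, in GB, at a given bit depth."""
    return num_params * bits_per_weight / 8 / GB


for bits in (16, 8, 4, 2):
    size = weight_size_gb(PARAMS, bits)
    verdict = "fits" if size < VRAM_GB else "does not fit"
    print(f"{bits:>2}-bit: {size:6.1f} GB ({verdict} in {VRAM_GB} GB)")

# Largest average bit depth whose weights still fit in the VRAM budget.
max_bits = VRAM_GB * GB * 8 / PARAMS
print(f"Average bit depth must stay below {max_bits:.2f} bits per weight")
```

Under these assumptions, even 4-bit weights exceed 24 GB, which is why bit depths around 2 bits come into play for this model.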

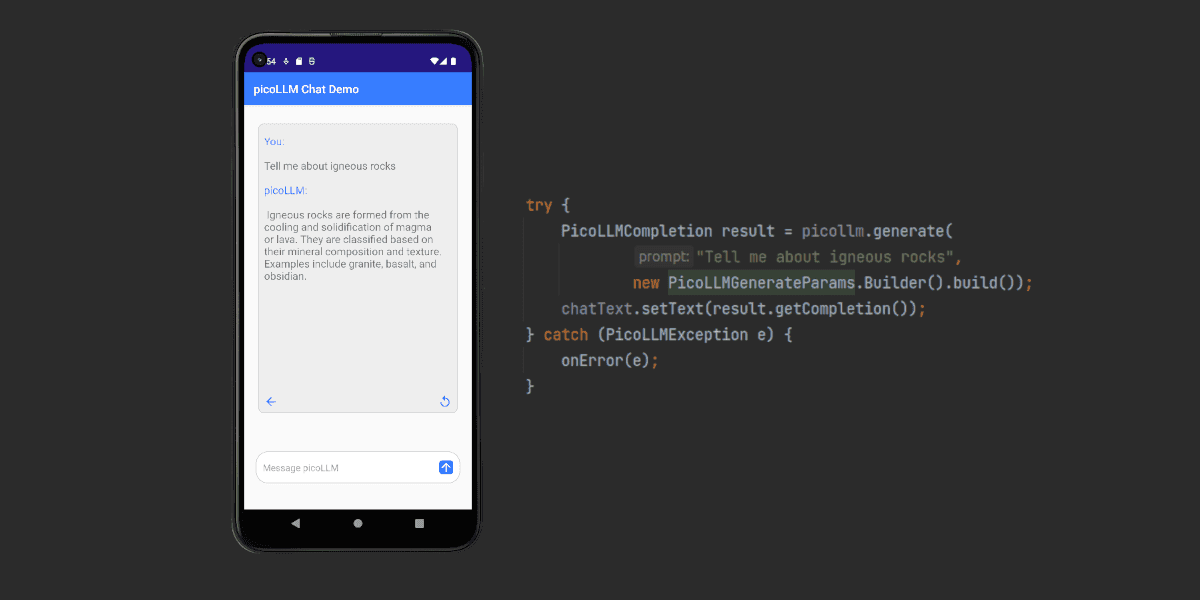

Picovoice's picoLLM offers a promising solution to this dilemma. By leveraging its optimal quantization algorithm, developers can now run Llama-3-70B on an everyday computer equipped with a single NVIDIA RTX 4090 GPU. The picoLLM algorithm shrinks the model to below 24 GB.

Our LLM benchmark illustrates the resilience of the picoLLM quantization algorithm across diverse models, bit depths, and sizes, consistently delivering superior performance compared to alternative quantization techniques.

Setup

Getting started with picoLLM is straightforward: install the picollmdemo package on your system (for example, with pip install picollmdemo). This package includes two demos: picollm_demo_completion for single-response tasks and picollm_demo_chat for interactive conversations. With these demos, you can run LLMs locally on your device and evaluate their performance. This article uses picollm_demo_completion.

Running the Demo

To see the options available for tailoring the text generation process, run picollm_demo_completion with the --help flag.

To begin the demo, you'll need to provide the following information:

- Your Picovoice Access Key (--access_key $ACCESS_KEY): Obtain your key from Picovoice Console.
- The Path to LLM Model (--model_path $MODEL_PATH): Download the LLM model file from Picovoice Console and provide its absolute path. Although we're using the Llama-3-70B model in this example, you can use any other accessible Llama-3 model.
- Prompt (--prompt $PROMPT): The text prompt to generate a completion for.

Once you have this information, start the demo by running picollm_demo_completion with the --access_key, --model_path, and --prompt options set as described above.

picoLLM automatically identifies your GPU, transfers the model to it, and proceeds to generate completions for the specified prompt.
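If you prefer to launch the demo from a script, the three options listed above can be assembled into a command line programmatically. The following is a minimal sketch: the helper names build_demo_command and run_demo are hypothetical, the key, path, and prompt values are placeholders, and it assumes the picollm_demo_completion executable installed by picollmdemo is on your PATH.

```python
import shlex
import subprocess

# Executable installed by the picollmdemo package (assumed to be on PATH).
DEMO_EXECUTABLE = "picollm_demo_completion"


def build_demo_command(access_key, model_path, prompt):
    """Return the argument list for the completion demo (hypothetical helper)."""
    return [
        DEMO_EXECUTABLE,
        "--access_key", access_key,
        "--model_path", model_path,
        "--prompt", prompt,
    ]


def run_demo(access_key, model_path, prompt):
    """Run the demo; raises CalledProcessError if it exits with an error."""
    subprocess.run(build_demo_command(access_key, model_path, prompt), check=True)


# Placeholder values: substitute your own AccessKey, model file, and prompt.
command = build_demo_command("YOUR_ACCESS_KEY", "/path/to/model_file", "Tell me a joke.")

# Print the shell-quoted command so it can be inspected or pasted into a terminal.
print(shlex.join(command))
```

Passing the arguments as a list rather than a single string avoids shell-quoting problems when the prompt contains spaces or punctuation. Call run_demo with real values to start the demo.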


Next Steps

To learn more about the picoLLM inference engine Python SDK and how to integrate it into your projects, refer to the picoLLM Python documentation.
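As a starting point, the snippet below is a minimal sketch of what calling the SDK from your own code might look like. It assumes the picollm package exposes create, generate, and release, and that the generation result carries a completion attribute, as the SDK documentation describes at the time of writing; check the names against the current documentation before relying on them. The create_engine parameter is a hypothetical addition that lets the function be exercised without the SDK installed.

```python
def generate_completion(access_key, model_path, prompt, create_engine=None):
    """Generate one completion and always release the engine afterwards.

    create_engine is a hypothetical hook for substituting a stand-in engine;
    by default the function uses picollm.create (assumed SDK entry point).
    """
    if create_engine is None:
        import picollm  # assumed to be installed separately: pip install picollm
        create_engine = picollm.create

    engine = create_engine(access_key=access_key, model_path=model_path)
    try:
        # The result object is assumed to expose the text as `.completion`.
        return engine.generate(prompt).completion
    finally:
        # Free the native resources held by the engine, even on errors.
        engine.release()
```

A call such as generate_completion(access_key, model_path, "Tell me a joke.") would then return the generated text, with the access key and model path obtained from Picovoice Console as described earlier.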