Speech Recognition is a broad term that is often associated solely with Speech-to-Text technology. However, Speech Recognition can also include technologies such as Wake Word Detection, Voice Command Recognition, and Voice Activity Detection (VAD).

This article is a thorough guide to integrating on-device Speech Recognition into JavaScript web apps. It covers the following engines:

- Cobra Voice Activity Detection

- Porcupine Wake Word

- Rhino Speech-to-Intent

- Cheetah Streaming Speech-to-Text

- Leopard Speech-to-Text

In addition to plain JavaScript, Picovoice's Speech Recognition engines are also available in different UI frameworks such as React, Angular, and Vue.

Cobra VAD

Cobra Voice Activity Detection is a VAD engine that can be used to detect the presence of human speech within an audio signal.

- Install the Web Voice Processor and Cobra Voice Activity Detection Web SDK packages using `npm`.

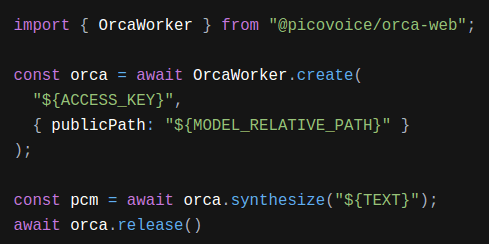

- Sign up for a free Picovoice Console account and copy your `AccessKey` from the main dashboard. The `AccessKey` is only required for authentication and authorization.

- Create an instance of `CobraWorker`.

- Subscribe `CobraWorker` to `WebVoiceProcessor` to start processing audio frames.
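The steps above can be sketched roughly as follows. This is a minimal sketch, not the article's original snippet: it assumes the packages are published as `@picovoice/cobra-web` and `@picovoice/web-voice-processor`, and that `CobraWorker.create` takes the `AccessKey` and a callback receiving a voice probability between 0 and 1. The helper `createVoiceTracker`, the function `startCobra`, and the 0.5 threshold are hypothetical names and values introduced only for illustration.

```javascript
// Assumed install command: npm install @picovoice/cobra-web @picovoice/web-voice-processor

// Hypothetical helper: wraps a callback so it only fires when speech starts or stops,
// instead of on every audio frame. The default threshold is an illustrative value.
function createVoiceTracker(onChange, threshold = 0.5) {
  let active = false;
  return (voiceProbability) => {
    const next = voiceProbability >= threshold;
    if (next !== active) {
      active = next;
      onChange(active);
    }
  };
}

// Creates a CobraWorker and subscribes it to WebVoiceProcessor.
// Must run in a browser with microphone access; accessKey comes from Picovoice Console.
// Dynamic imports are used here so the SDK loads only when needed; static imports work too.
async function startCobra(accessKey, onVoiceChange) {
  const { CobraWorker } = await import("@picovoice/cobra-web");
  const { WebVoiceProcessor } = await import("@picovoice/web-voice-processor");

  const cobra = await CobraWorker.create(
    accessKey,
    createVoiceTracker(onVoiceChange)
  );
  await WebVoiceProcessor.subscribe(cobra);
  return cobra;
}
```

A call such as `startCobra(accessKey, (speaking) => console.log(speaking))` would then log a value each time speech begins or ends, which is usually easier to act on than raw per-frame probabilities.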

For further details, visit the Cobra Voice Activity Detection product page or refer to the Cobra Web SDK quick start guide.

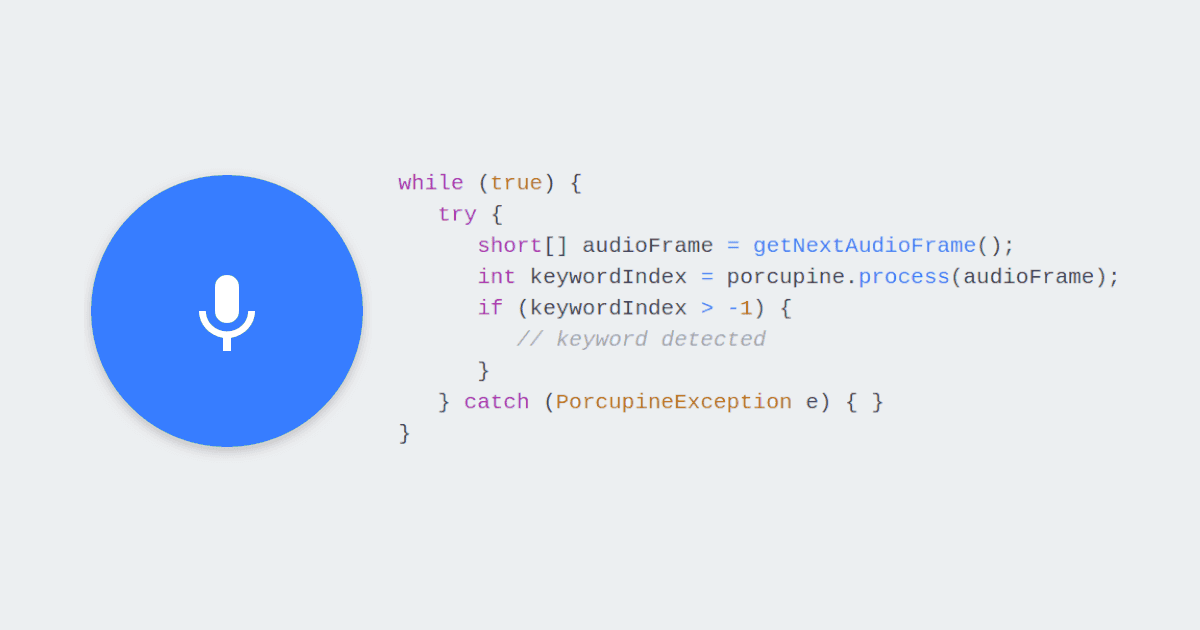

Porcupine Wake Word

Porcupine Wake Word is a wake word detection engine that can be used to listen for user-specified keywords and activate dormant applications when a keyword is detected.

- Install the Web Voice Processor and Porcupine Wake Word Web SDK packages using `npm`.

- Sign up for a free Picovoice Console account and copy your `AccessKey` from the main dashboard. The `AccessKey` is only required for authentication and authorization.

- Create and download a custom Wake Word model using Picovoice Console.

- Add the Porcupine model (`.pv`) for your language of choice and your custom Wake Word model (`.ppn`) created in the previous step to the project's public directory.

- Create objects containing the Porcupine model and Wake Word model options.

- Create an instance of `PorcupineWorker`.

- Subscribe `PorcupineWorker` to `WebVoiceProcessor` to start processing audio frames.
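A rough sketch of the steps above, under a few stated assumptions: the packages are `@picovoice/porcupine-web` and `@picovoice/web-voice-processor`, model options are plain objects with a `publicPath` (plus a `label` for the keyword), and the detection callback receives an object with a `label` field. The file names, the label, and the functions `describeDetection` and `startPorcupine` are hypothetical and should be replaced with your own.

```javascript
// Assumed install command: npm install @picovoice/porcupine-web @picovoice/web-voice-processor

// Model options; paths are relative to the public directory (file names are hypothetical).
const porcupineModel = { publicPath: "porcupine_params.pv" };
const keywordModel = { publicPath: "hey_app.ppn", label: "hey app" };

// Hypothetical helper: turns a detection object into a readable message.
function describeDetection(detection) {
  return `Detected keyword: ${detection.label}`;
}

// Creates a PorcupineWorker and subscribes it to WebVoiceProcessor.
// Must run in a browser with microphone access; accessKey comes from Picovoice Console.
async function startPorcupine(accessKey, onKeyword) {
  const { PorcupineWorker } = await import("@picovoice/porcupine-web");
  const { WebVoiceProcessor } = await import("@picovoice/web-voice-processor");

  const porcupine = await PorcupineWorker.create(
    accessKey,
    [keywordModel],
    (detection) => onKeyword(describeDetection(detection)),
    porcupineModel
  );
  await WebVoiceProcessor.subscribe(porcupine);
  return porcupine;
}
```

Passing the keyword as a one-element array leaves room to listen for several wake words later, each distinguished by its `label`.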

For further details, visit the Porcupine Wake Word product page or refer to the Porcupine Web SDK quick start guide.

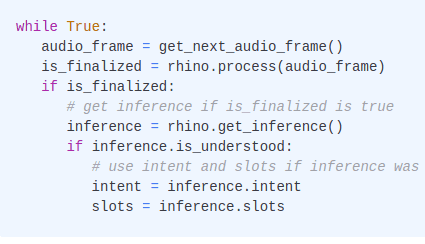

Rhino Speech-to-Intent

Rhino Speech-to-Intent is a voice command recognition engine that infers user intents from utterances, allowing users to interact with applications via voice.

- Install the Web Voice Processor and Rhino Speech-to-Intent Web SDK packages using `npm`.

- Sign up for a free Picovoice Console account and copy your `AccessKey` from the main dashboard. The `AccessKey` is only required for authentication and authorization.

- Create your Context using Picovoice Console.

- Add the Rhino Speech-to-Intent model (`.pv`) for your language of choice and the Context model (`.rhn`) created in the previous step to the project's public directory.

- Create an object containing the Rhino Speech-to-Intent model and Context model options.

- Create an instance of `RhinoWorker`.

- Subscribe `RhinoWorker` to `WebVoiceProcessor` to start processing audio frames.
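The steps above might look roughly like this. The sketch assumes the packages are `@picovoice/rhino-web` and `@picovoice/web-voice-processor`, and that the inference callback receives an object with `isFinalized`, `isUnderstood`, `intent`, and `slots` fields. The file names and the functions `describeInference` and `startRhino` are hypothetical, as are the intent and slot names in the usage note.

```javascript
// Assumed install command: npm install @picovoice/rhino-web @picovoice/web-voice-processor

// Model options; paths are relative to the public directory (file names are hypothetical).
const rhinoModel = { publicPath: "rhino_params.pv" };
const contextModel = { publicPath: "my_context.rhn" };

// Hypothetical helper: summarizes a finalized inference as a single string.
function describeInference(inference) {
  if (!inference.isUnderstood) {
    return "Command not understood";
  }
  const slots = Object.entries(inference.slots || {})
    .map(([name, value]) => `${name}=${value}`)
    .join(", ");
  return slots ? `${inference.intent} (${slots})` : `${inference.intent}`;
}

// Creates a RhinoWorker and subscribes it to WebVoiceProcessor.
// Must run in a browser with microphone access; accessKey comes from Picovoice Console.
async function startRhino(accessKey, onCommand) {
  const { RhinoWorker } = await import("@picovoice/rhino-web");
  const { WebVoiceProcessor } = await import("@picovoice/web-voice-processor");

  const rhino = await RhinoWorker.create(
    accessKey,
    contextModel,
    (inference) => {
      // Only act once Rhino has reached a conclusion about the utterance.
      if (inference.isFinalized) {
        onCommand(describeInference(inference));
      }
    },
    rhinoModel
  );
  await WebVoiceProcessor.subscribe(rhino);
  return rhino;
}
```

For an understood utterance with intent `orderBeverage` and a slot `size` set to `large`, `describeInference` returns `orderBeverage (size=large)`.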

For further details, visit the Rhino Speech-to-Intent product page or refer to the Rhino Web SDK quick start guide.

Cheetah Streaming Speech-to-Text

Cheetah Streaming Speech-to-Text is a speech-to-text engine that transcribes voice data in real time, synchronously with audio generation.

- Install the Web Voice Processor and Cheetah Streaming Speech-to-Text Web SDK packages using `npm`.

- Sign up for a free Picovoice Console account and copy your `AccessKey` from the main dashboard. The `AccessKey` is only required for authentication and authorization.

- Generate a custom Cheetah Streaming Speech-to-Text model (`.pv`) from Picovoice Console or download the default model (`.pv`).

- Add the model to the project's public directory.

- Create an object containing the model options.

- Create an instance of `CheetahWorker`.

- Subscribe `CheetahWorker` to `WebVoiceProcessor` to start processing audio frames.
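As a rough illustration of the steps above: the sketch assumes the packages are `@picovoice/cheetah-web` and `@picovoice/web-voice-processor`, and that the transcript callback receives an object whose `transcript` field holds the newly recognized text. The file name and the names `createTranscriptBuffer` and `startCheetah` are hypothetical.

```javascript
// Assumed install command: npm install @picovoice/cheetah-web @picovoice/web-voice-processor

// Model options; the path is relative to the public directory (file name is hypothetical).
const cheetahModel = { publicPath: "cheetah_params.pv" };

// Hypothetical helper: accumulates partial transcripts into one running string.
function createTranscriptBuffer() {
  let text = "";
  return {
    append(cheetahTranscript) {
      text += cheetahTranscript.transcript;
      return text;
    },
    get text() {
      return text;
    },
  };
}

// Creates a CheetahWorker and subscribes it to WebVoiceProcessor.
// Must run in a browser with microphone access; accessKey comes from Picovoice Console.
async function startCheetah(accessKey, onText) {
  const { CheetahWorker } = await import("@picovoice/cheetah-web");
  const { WebVoiceProcessor } = await import("@picovoice/web-voice-processor");

  const buffer = createTranscriptBuffer();
  const cheetah = await CheetahWorker.create(
    accessKey,
    (cheetahTranscript) => onText(buffer.append(cheetahTranscript)),
    cheetahModel
  );
  await WebVoiceProcessor.subscribe(cheetah);
  return cheetah;
}
```

Because Cheetah emits text incrementally, handing the full running string to `onText` lets a UI simply overwrite a text element on each update.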

For further details, visit the Cheetah Streaming Speech-to-Text product page or refer to the Cheetah Web SDK quick start guide.

Leopard Speech-to-Text

In contrast to Cheetah Streaming Speech-to-Text, Leopard Speech-to-Text waits for the spoken phrase to complete before providing a transcription, enabling higher accuracy and runtime efficiency.

- Install the Leopard Speech-to-Text Web SDK package using `npm`.

- Sign up for a free Picovoice Console account and copy your `AccessKey` from the main dashboard. The `AccessKey` is only required for authentication and authorization.

- Generate a custom Leopard Speech-to-Text model (`.pv`) from Picovoice Console or download a default model (`.pv`) for the language of your choice.

- Add the model to the project's public directory.

- Create an object containing the model options.

- Create an instance of `LeopardWorker`.

- Transcribe audio (sample rate of 16 kHz, 16-bit linearly encoded and 1 channel).
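The steps above can be sketched as follows, assuming the package is `@picovoice/leopard-web`, that `LeopardWorker.create` takes the `AccessKey` and the model options, and that `process` accepts an `Int16Array` and resolves to an object with a `transcript` field. The file name and the functions `floatTo16BitPCM` and `transcribe` are hypothetical. Decoding and resampling the audio to 16 kHz mono is not shown; the sketch only covers the conversion from floating-point samples to 16-bit linear PCM.

```javascript
// Assumed install command: npm install @picovoice/leopard-web

// Model options; the path is relative to the public directory (file name is hypothetical).
const leopardModel = { publicPath: "leopard_params.pv" };

// Hypothetical helper: converts floating-point samples in [-1, 1] to 16-bit linear PCM.
// Out-of-range samples are clamped before scaling.
function floatTo16BitPCM(samples) {
  const pcm = new Int16Array(samples.length);
  for (let i = 0; i < samples.length; i++) {
    const s = Math.max(-1, Math.min(1, samples[i]));
    pcm[i] = Math.round(s < 0 ? s * 0x8000 : s * 0x7fff);
  }
  return pcm;
}

// Creates a LeopardWorker and transcribes one complete recording.
// monoSamples16k is expected to be a Float32Array of mono audio already at 16 kHz.
async function transcribe(accessKey, monoSamples16k) {
  const { LeopardWorker } = await import("@picovoice/leopard-web");

  const leopard = await LeopardWorker.create(accessKey, leopardModel);
  const { transcript } = await leopard.process(floatTo16BitPCM(monoSamples16k));
  return transcript;
}
```

Unlike the other engines, no `WebVoiceProcessor` subscription is involved here, since Leopard works on a finished recording rather than a live stream of frames.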

For further details, visit the Leopard Speech-to-Text product page or refer to the Leopard Web SDK quick start guide.