When people talk about Speech Recognition on Raspberry Pi, they usually mean Speech-to-Text (STT), because it is the best-known and most widely used form of Speech Recognition. But it is almost always NOT the right tool, especially if you are building something on a Raspberry Pi. Let's look at common use cases and the best tool for each.

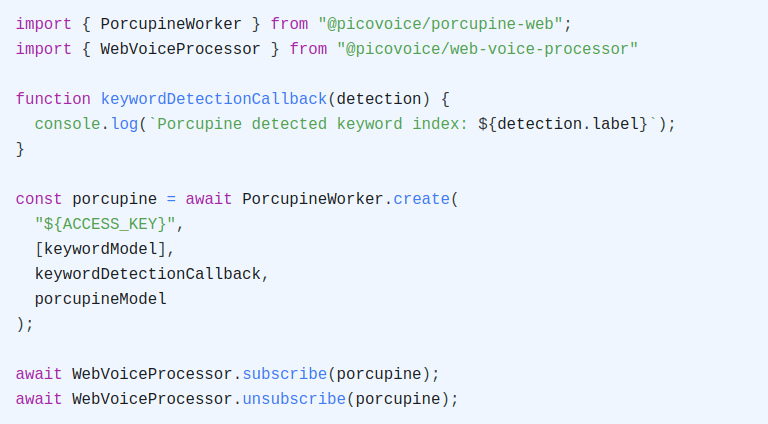

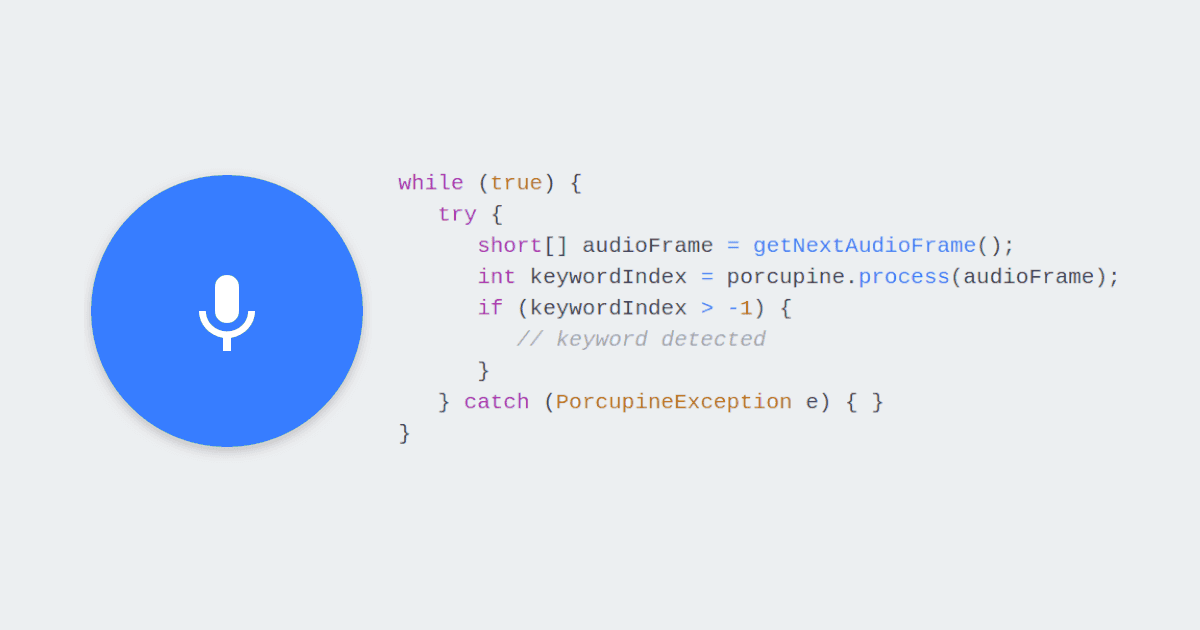

Wake Word Detection

A Wake Word Engine detects occurrences of a given phrase in audio. Alexa and Hey Google are examples of wake words. Picovoice Porcupine Wake Word Engine lets you train custom wake words (e.g. Jarvis) for Raspberry Pi. It is efficient enough to run in real time even on a Raspberry Pi Zero.

Wake Word Detection is also known as Trigger Word Detection, Keyword Spotting, Hotword Detection, Wake-up Word Detection, and Voice Activation.

You can use a Speech-to-Text engine for Wake Word Detection if you don't care that you are using much more power or, even worse, sending voice data off your device 24/7. The accuracy is also significantly lower than with the proper solution!

Why? A Wake Word Engine is optimized to do one thing well, so it is both smaller and more accurate.

Don't use Speech-to-Text when all you need is a Wake Word Engine!
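As a rough illustration of how light the on-device loop is, here is a minimal sketch. It assumes the `pvporcupine` and `pvrecorder` Python packages are installed and that you have a Picovoice AccessKey. The helper names `first_detection` and `listen_forever` are made up for this example, and `jarvis` is used on the assumption that it is available as a built-in keyword.

```python
from typing import Callable, Iterable, Sequence


def first_detection(frames: Iterable[Sequence[int]],
                    process: Callable[[Sequence[int]], int]) -> int:
    """Return the index of the first frame in which a wake word fires, or -1.

    `process` follows the convention of Porcupine's Python binding: it
    returns the index of the detected keyword, or -1 when nothing fired.
    """
    for i, frame in enumerate(frames):
        if process(frame) >= 0:
            return i
    return -1


def listen_forever(access_key: str) -> None:
    """Listen on the default microphone and report each detection."""
    # Imported lazily so the helper above works without the SDK installed.
    import pvporcupine
    from pvrecorder import PvRecorder

    porcupine = pvporcupine.create(access_key=access_key, keywords=["jarvis"])
    recorder = PvRecorder(frame_length=porcupine.frame_length, device_index=-1)
    try:
        recorder.start()
        while True:
            # One fixed-size frame of 16-bit samples per call.
            if porcupine.process(recorder.read()) >= 0:
                print("Wake word detected")
    finally:
        recorder.delete()
        porcupine.delete()
```

Calling `listen_forever("YOUR_ACCESS_KEY")` on the Pi blocks until interrupted. No audio leaves the device, and no transcript is ever produced, because the engine only answers the question "was the phrase spoken in this frame?".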

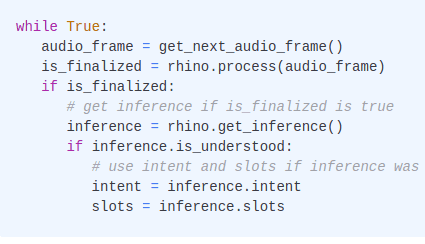

Voice Commands & Domain-Specific NLU

Intent inference (Intent Detection) from Voice Commands is at the core of any modern Voice User Interface (VUI). Typically, the spoken commands fall within a well-defined domain of interest, i.e. a Context. For example:

- Turn on the lights in the living room.

- Play Tom Sawyer album by Rush.

- Call John Smith on his cell phone.

The dominant approach for inferring users' intent from spoken commands is to run a Speech-to-Text engine and then parse its text output with a grammar-based or ML-based Natural Language Understanding (NLU) engine. The first shortcoming of this approach is low accuracy: Speech-to-Text introduces transcription errors that adversely affect the subsequent intent inference step.
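To see how a single transcription error can derail the second stage, consider this toy sketch of the grammar-based half of such a pipeline. The regular expression, the intent name `changeLightState`, and the misheard transcript are all invented for illustration. A real NLU grammar would be far richer, but it fails in the same way.

```python
import re
from typing import Optional

# A tiny grammar for one hypothetical "smart lighting" context.
LIGHT_COMMAND = re.compile(
    r"^turn (?P<state>on|off) the lights in the (?P<location>[a-z ]+)$"
)


def infer_intent(transcript: str) -> Optional[dict]:
    """Grammar-based NLU: map a transcript to an intent, or None if no rule matches."""
    # Normalize case and strip punctuation before matching.
    text = re.sub(r"[^a-z ]", "", transcript.lower()).strip()
    match = LIGHT_COMMAND.match(text)
    if match is None:
        return None
    return {"intent": "changeLightState", "slots": match.groupdict()}


# A correct transcript parses as expected.
print(infer_intent("Turn on the lights in the living room."))
# One misrecognized word ("lights" -> "likes") and the grammar no longer matches.
print(infer_intent("Turn on the likes in the living room."))
```

The second call prints `None`: the text stage has no way to know that "likes" was a mishearing of "lights", so the error made by the first stage is passed straight through.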

Picovoice Rhino Speech-to-Intent Engine fuses Speech-to-Text and NLU, jointly optimizing the transcription and inference steps to minimize the effect of these errors. The result? It is even more accurate than cloud-based APIs.

In terms of runtime, Rhino takes only a few MB of flash. It runs in real time, consuming only a single-digit percentage of one CPU core. It even runs on Raspberry Pi Zero.
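A Speech-to-Intent loop on the Pi might look like the sketch below. It assumes the `pvrhino` and `pvrecorder` Python packages, a Picovoice AccessKey, and a context file (`.rhn`) trained for your domain. The helper names, the context path you pass in, and the `changeLightState` intent are hypothetical.

```python
from types import SimpleNamespace
from typing import Optional


def describe(inference) -> Optional[str]:
    """Format a finalized inference, or return None if the command was not understood."""
    if not inference.is_understood:
        return None
    slots = ", ".join(f"{k}={v}" for k, v in sorted(inference.slots.items()))
    return f"{inference.intent}({slots})"


def listen_for_command(access_key: str, context_path: str) -> Optional[str]:
    """Record from the default microphone until Rhino finalizes one inference."""
    # Imported lazily so describe() can be tried without the SDK installed.
    import pvrhino
    from pvrecorder import PvRecorder

    rhino = pvrhino.create(access_key=access_key, context_path=context_path)
    recorder = PvRecorder(frame_length=rhino.frame_length, device_index=-1)
    try:
        recorder.start()
        # process() returns True once an inference has been finalized.
        while not rhino.process(recorder.read()):
            pass
        return describe(rhino.get_inference())
    finally:
        recorder.delete()
        rhino.delete()


# Stand-in object with the same fields a real inference exposes.
example = SimpleNamespace(
    is_understood=True,
    intent="changeLightState",
    slots={"state": "on", "location": "living room"},
)
print(describe(example))
```

Note that no transcript appears anywhere in this loop: audio frames go in, and a structured intent with its slots comes out.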

Open-Domain Transcription

If you want to transcribe speech to text in an open domain, i.e. users can say whatever they want, then you need a Speech-to-Text engine.

Picovoice Leopard Speech-to-Text and Cheetah Streaming Speech-to-Text engines run on Raspberry Pi 3, Raspberry Pi 4, and Raspberry Pi 5 and match the accuracy of cloud-based APIs (Google Speech-to-Text, Amazon Transcribe, IBM Watson Speech-to-Text, Azure Speech-to-Text).

They take only about 20 MB of flash and can run on a single CPU.
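The two engines suit different situations: Leopard works on a complete recording, while Cheetah consumes audio frame by frame. The sketch below shows both, assuming the `pvleopard` and `pvcheetah` Python packages and a Picovoice AccessKey. The helper names are invented for this example, and the PCM input is assumed to be 16-bit mono samples at the engine's sample rate.

```python
from typing import Iterator, List, Sequence


def split_frames(pcm: Sequence[int], frame_length: int) -> Iterator[Sequence[int]]:
    """Yield consecutive frames of exactly `frame_length` samples.

    A trailing partial frame is dropped, since streaming engines expect
    fixed-size frames.
    """
    for start in range(0, len(pcm) - frame_length + 1, frame_length):
        yield pcm[start:start + frame_length]


def transcribe_file(access_key: str, audio_path: str) -> str:
    """Batch transcription of a whole audio file with Leopard."""
    import pvleopard  # lazy import: only needed when actually transcribing

    leopard = pvleopard.create(access_key=access_key)
    try:
        transcript, _words = leopard.process_file(audio_path)
        return transcript
    finally:
        leopard.delete()


def transcribe_stream(access_key: str, pcm: Sequence[int]) -> str:
    """Streaming transcription with Cheetah, one frame at a time."""
    import pvcheetah  # lazy import, as above

    cheetah = pvcheetah.create(access_key=access_key)
    try:
        parts: List[str] = []
        for frame in split_frames(pcm, cheetah.frame_length):
            partial, _is_endpoint = cheetah.process(frame)
            parts.append(partial)
        # flush() returns any text still buffered inside the engine.
        parts.append(cheetah.flush())
        return "".join(parts)
    finally:
        cheetah.delete()
```

In a live application the frames would come from a microphone rather than a pre-recorded buffer, and the partial transcripts could be displayed as they arrive.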

Voice Activity Detection (VAD)

Do you want to know if someone is talking? You need a Voice Activity Detection (VAD) Engine such as Picovoice Cobra VAD.
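A VAD engine typically reports, for each audio frame, how likely it is that the frame contains speech. The sketch below assumes the `pvcobra` and `pvrecorder` Python packages and a Picovoice AccessKey. The helper names and the 0.5 threshold are arbitrary choices for illustration, not recommended settings.

```python
from typing import List, Sequence, Tuple


def speech_segments(probabilities: Sequence[float],
                    threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Return (start, end) frame index pairs, end exclusive, where voice was detected."""
    segments = []
    start = None
    for i, p in enumerate(probabilities):
        if p >= threshold and start is None:
            start = i  # speech begins
        elif p < threshold and start is not None:
            segments.append((start, i))  # speech ends
            start = None
    if start is not None:
        segments.append((start, len(probabilities)))
    return segments


def record_probabilities(access_key: str, num_frames: int) -> List[float]:
    """Collect per-frame voice probabilities from the default microphone."""
    # Imported lazily so speech_segments() works without the SDK installed.
    import pvcobra
    from pvrecorder import PvRecorder

    cobra = pvcobra.create(access_key=access_key)
    recorder = PvRecorder(frame_length=cobra.frame_length, device_index=-1)
    try:
        recorder.start()
        # process() returns a voice probability between 0 and 1 for each frame.
        return [cobra.process(recorder.read()) for _ in range(num_frames)]
    finally:
        recorder.delete()
        cobra.delete()
```

For example, `speech_segments([0.1, 0.9, 0.8, 0.2, 0.7])` returns `[(1, 3), (4, 5)]`. Raising the threshold trades missed speech for fewer false triggers.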

More Voice AI Tools on Raspberry Pi

All Picovoice engines support Raspberry Pi. Don’t forget to check out our docs to learn about Koala Noise Suppression, Falcon Speaker Diarization and Eagle Speaker Recognition on a Raspberry Pi.
