Combining a wake word with Voice ID enables personalized voice activation on smart devices, ensuring that only enrolled users can trigger commands. By integrating custom wake words with speaker verification, devices such as XR glasses, smart earbuds, smartwatches, and laptops can securely respond to the right person. This approach mirrors the evolution of Apple's "Personalized Hey Siri" and Amazon's "Alexa Voice ID", making personalized voice control a standard expectation for modern wearables.

This guide explains how other device manufacturers can add personalized voice activation to laptops, mobile phones, XR glasses, earbuds, and smartwatches just like Apple and Amazon, using sequential and integrated approaches.

Did you know you can train production-ready custom wake words on Picovoice Console in seconds?

Why Wake Words with Voice ID Matter

Voice User Interfaces bring convenience to mobile devices, helping users navigate small screens and multitask. The first step of any hands-free experience is wake word detection. But when someone says "Hey [Your Brand]," should the command activate all nearby devices or just their own?

For wearables worn in shared spaces, unauthorized activations create critical problems:

- Privacy concerns: Your AR glasses respond to a colleague who accidentally says your wake word during a meeting.

- Security risks: Anyone nearby can activate voice commands on your smartwatch or earbuds.

- User experience friction: Devices can't personalize responses without knowing who's speaking.

That's why combining custom wake words with voice ID becomes essential. Adding speaker recognition to wake word detection creates truly personalized experiences while ensuring activation only for authorized users, minimizing false alarms.

What is Voice ID?

Voice ID, also known as speaker recognition, speaker identification, or speaker verification, identifies who is speaking based on unique vocal characteristics such as pitch patterns, speaking rhythm, and acoustic signatures. It analyzes the voice itself, not the words being said.

When combined with wake word detection, systems can create two-stage authentication:

- Wake word detection: The engine detects "Hey [Brand]" (keyword spotting)

- Speaker verification: Voice ID confirms that the voice belongs to the enrolled user

This combination enables devices to know both what was said and who said it.

Have you tried Picovoice's Porcupine Wake Word and Eagle Speaker Recognition?

Real-World Use Cases for Wake Words with Voice ID

XR and AR Glasses

A user wearing a Meta Quest XR headset in a shared workspace says, "Hey Meta." The headset verifies it's the owner's voice before activating, ignoring the same command from others nearby.

Smart Earbuds

A professional uses earbuds for both personal calls and confidential work meetings. Voice ID ensures that sensitive voice commands like "read my messages" or "show my calendar" only activate for the authenticated user.

Smartwatches

A marathon runner says, "Hey Garmin, show my heart rate data" during a race. Voice ID verifies the runner's identity before displaying sensitive health information.

Approaches to Wake Word Detection with Speaker Verification: Sequential vs. Integrated

1. Integrated Audio Processing:

A single neural network with wake word and voice ID capabilities analyzes audio to simultaneously detect the wake word and verify the speaker before activating the software.

2. Sequential Audio Processing:

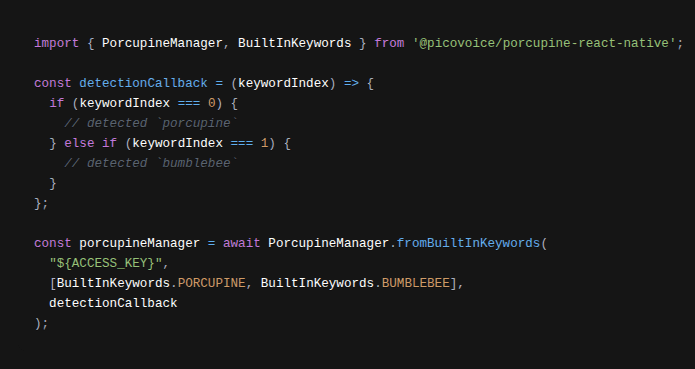

Sequential systems use a two-stage pipeline: first analyzing audio for the wake word, then running speaker recognition before activating the software.

Stage 1: Wake Word Detection (Always-On)

- Runs continuously on-device with minimal power consumption

- Uses a lightweight neural network optimized for edge devices

- Activates Stage 2 only when the wake word is detected

Stage 2: Voice ID Verification (On-Demand)

- Activates only after the wake word is detected

If voice ID runs in the cloud:

- Sends audio data to the cloud

- The cloud-based voice ID engine extracts the voice "embedding" (digital voiceprint)

- The engine compares the voice embedding against enrolled user profiles

- Returns verification results to the device

- Activates the software based on verification results

If voice ID runs locally on the device:

- Processes audio data using the embedded voice ID component to extract the voice "embedding" (digital voiceprint)

- Compares the voice embedding against enrolled user profiles stored on the device

- Activates the software based on verification results
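The two stages above can be sketched in a few lines. This is a minimal illustration rather than any vendor's API: `detect_wake_word` and `extract_embedding` are hypothetical callables standing in for a real wake word engine and a real voice ID engine, the 0.8 threshold is an arbitrary placeholder, and cosine similarity is one common way to compare embeddings, assumed here for simplicity.

```python
import math
from typing import Any, Callable, Dict, Iterable, Optional, Sequence

Embedding = Sequence[float]


def cosine_similarity(a: Embedding, b: Embedding) -> float:
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def verify_speaker(
    embedding: Embedding,
    enrolled: Dict[str, Embedding],
    threshold: float = 0.8,  # hypothetical value; tune on real data
) -> Optional[str]:
    """Return the best-matching enrolled user, or None if no score reaches the threshold."""
    best_user, best_score = None, threshold
    for user, profile in enrolled.items():
        score = cosine_similarity(embedding, profile)
        if score >= best_score:
            best_user, best_score = user, score
    return best_user


def sequential_pipeline(
    segments: Iterable[Any],
    detect_wake_word: Callable[[Any], bool],       # hypothetical Stage 1 engine
    extract_embedding: Callable[[Any], Embedding],  # hypothetical Stage 2 engine
    enrolled: Dict[str, Embedding],
) -> Optional[str]:
    """Run Stage 1 on every audio segment and Stage 2 only after a detection.

    Returns the first verified user, or None if the audio ends without one.
    """
    for segment in segments:
        if not detect_wake_word(segment):  # Stage 1: always-on, cheap
            continue
        user = verify_speaker(extract_embedding(segment), enrolled)  # Stage 2: on-demand
        if user is not None:
            return user  # activate the software for this user
        # Wake word heard from a non-enrolled voice: ignore it and keep listening.
    return None
```

In the cloud variant, `extract_embedding` and `verify_speaker` would run behind a network call instead of on the device; the control flow stays the same.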

Why is the Integrated Approach Better than the Sequential Approach to Enable Wake Words with Speaker Verification?

Fusing Wake Word and Speaker Recognition Eliminates Cascaded Errors

Sequential approaches suffer from cascaded errors: the accuracy of wake word detection with voice ID relies on two independently trained modules. When the wake word detection fails, speaker verification fails too. Integrated systems avoid this by jointly optimizing both tasks during training, improving overall accuracy.
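A rough way to see the effect of cascading: if the two stages err independently (a simplifying assumption), the rate at which the enrolled user successfully activates the device is the product of the per-stage rates. The rates below are hypothetical and chosen only for illustration.

```python
def cascaded_accept_rate(wake_word_detect_rate: float, speaker_accept_rate: float) -> float:
    """End-to-end activation rate for the enrolled user, assuming independent stage errors."""
    return wake_word_detect_rate * speaker_accept_rate


# Hypothetical per-stage rates: 95% wake word detection, 90% speaker acceptance.
rate = cascaded_accept_rate(0.95, 0.90)
print(f"{rate:.3f}")  # lower than either stage on its own
```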

Speaker Recognition Systems on the Market Are Not Built for Short Phrases

Speaker verification systems experience significant performance degradation when tasked with short-duration trial recordings. Research defines short utterances as containing 1 to 8 seconds of net-speech. While speaker verification can work with as little as 0.6–1 seconds, 2–3 seconds is the practical minimum, with accuracy improving significantly up to 8 seconds.

Wake words define "short" differently. Saying "Alexa" takes approximately 600 ms, "Siri" takes 400 ms, and "OK Google" takes 800 ms on average. Wake words fall at the lower boundary of what's usable and well below what's optimal for speaker verification, making accurate verification challenging.

With that said, sequential systems can work after certain optimizations. Apple used a sequential wake word and voice ID pipeline for Personalized Hey Siri, built from three components that all run on-device:

- A low-power wake word listener

- A high-accuracy checker

- A speaker recognition layer for personalization

Downsides of the Integrated Approach

Despite its advantages, the integrated approach faces significant data challenges.

The traditional approach to training wake word models is to gather data from thousands of users saying the wake word. For wake word models with speaker verification, this data requirement multiplies dramatically: hundreds of thousands of users are needed. Google, for example, used a training set of ~150M utterances from ~630K speakers, drawn from user queries. Without Google's scale, with millions of users and the ability to collect and store their data, most device manufacturers won't have access to such data.

Another challenge is processing this amount of data. If training a wake word from 15,000 utterances takes one week, training on 150 million utterances would take 10,000 weeks on the same computer, assuming training time scales linearly with the amount of data. Improved methods such as GE2E (Generalized End-to-End) can lower training time by up to 60%. Yet this isn't enough to make wake words with integrated speaker verification affordable and accessible to companies beyond Big Tech.
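The arithmetic can be checked with a short calculation. It assumes training time grows linearly with the number of utterances on the same hardware, which is a simplification; the function name is illustrative.

```python
def scaled_training_weeks(base_utterances: int, base_weeks: float, target_utterances: int) -> float:
    """Estimate training time under the assumption that it scales linearly with data size."""
    return base_weeks * target_utterances / base_utterances


# 15,000 utterances take one week; scale up to 150 million utterances.
weeks = scaled_training_weeks(15_000, 1.0, 150_000_000)

# Apply the best-case 60% reduction in training time.
reduced_weeks = weeks * (1 - 0.60)
reduced_years = reduced_weeks / 52

print(f"baseline: {weeks:,.0f} weeks")
print(f"with a 60% reduction: {reduced_weeks:,.0f} weeks (about {reduced_years:.0f} years)")
```

Even with the full 60% reduction, the single-machine estimate stays in the range of decades, which is why the data volume alone puts this approach out of reach without large-scale compute.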

Why This Matters in 2026

Voice ID for wake words is moving from "premium feature" to "table stakes" for next-generation wearables. Users increasingly expect:

- Devices that automatically recognize who they are

- Privacy-by-design voice interfaces

- Seamless multi-user experiences

- Frictionless security

For device manufacturers building mobile devices such as laptops, XR glasses, smart earbuds, or wearables in general, the question isn't whether to add personalized voice activation—it's how to implement it to stay competitive.

Ready to Add Personalized Wake Words to Your Device?

Consult an Expert

Discuss your specific use case and evaluate SDK options for your hardware platform. Our on-device voice AI experts understand hardware constraints and can help you plan your integration roadmap.

Contact us to explore how custom wake words with voice ID can differentiate your product and create truly personalized user experiences.
