Artificial intelligence is experiencing a major architectural transformation. While cloud-based AI has dominated the last decade of innovation, the future is increasingly on-device, where models run locally on smartphones, wearables, cars, and IoT devices instead of remote servers. For product managers, AI developers, and technology executives, understanding this shift is not optional; it's essential for competitive positioning.

Why On-Device AI Matters in 2025 and Beyond

Traditional AI models rely on cloud infrastructure: user data is sent to centralized servers for inference, which adds network latency and exposes that data in transit and at rest.

On-device AI, on the other hand, processes data locally, right where it's captured. This removes the network round trip, keeps data on the device, and avoids per-request cloud fees. That's why this transition isn't merely an optimization. It's a paradigm shift in how enterprises build, deploy, and experience AI products.

The Technical Foundation of On-Device AI

The rapid adoption of on-device AI has been made possible by two converging trends:

1. Hardware Innovation: Modern devices now ship with specialized accelerators, such as neural processing units (NPUs) and tensor processing units (TPUs), alongside general-purpose CPUs and GPUs. These chips are designed to run deep learning inference efficiently at the edge, enabling advanced AI workloads even on battery-powered devices.

2. Smarter AI Frameworks: Modern deep learning toolchains support techniques such as hardware-aware training and model compression (for example, quantization and pruning). These let developers train, compress, and optimize AI models for on-device environments with little or no loss in accuracy.
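As a rough illustration of what model compression means in practice, here is a minimal sketch of symmetric 8-bit quantization, one common compression technique. It is not taken from any particular framework; the function names and the sample weights are hypothetical. Storing each weight as an 8-bit integer instead of a 32-bit float cuts storage by 4x, at the cost of a small rounding error.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale (symmetric quantization)."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.0, 0.64]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(f"scale={scale:.5f} quantized={quantized} max_error={max_error:.5f}")
```

Real toolchains typically go further, for example with per-channel scales or quantization-aware training, but the trade-off between size and rounding error is the same.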

Strategic Advantages for Decision-Makers

- Cost Efficiency at Scale: On-device AI removes the recurring cloud compute, storage, and orchestration costs of inference, which can substantially lower total cost of ownership (TCO) as usage grows.

- Privacy and Compliance by Design: On-device AI processes sensitive information, such as biometric data, medical scans, and voice inputs, locally. Keeping data on the user's device reduces the exposure risks that regulations such as HIPAA, GDPR, and CCPA are designed to address.

- Real-Time Performance and Reliability: Efficient on-device AI returns results without a network round trip, so response time does not depend on connection speed or availability. For mission-critical applications, from healthcare diagnostics to industrial automation, this reliability profile is non-negotiable.
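The reliability point can be made concrete with a simple latency model. The sketch below is illustrative only: the function names and all timing values are hypothetical placeholders, not measurements. It assumes cloud response time is the network round trip plus server inference time, while on-device response time is the local inference time alone.

```python
def cloud_latency_ms(network_rtt_ms: float, server_inference_ms: float) -> float:
    """Cloud response time: network round trip plus server-side inference."""
    return network_rtt_ms + server_inference_ms


def on_device_latency_ms(local_inference_ms: float) -> float:
    """On-device response time: local inference only, no network term."""
    return local_inference_ms


# Hypothetical placeholder values; replace with your own measurements.
SERVER_INFERENCE_MS = 30.0
LOCAL_INFERENCE_MS = 60.0
NETWORK_RTT_MS = [20.0, 100.0, 400.0]  # good, average, and poor connections

rows = [
    (rtt, cloud_latency_ms(rtt, SERVER_INFERENCE_MS), on_device_latency_ms(LOCAL_INFERENCE_MS))
    for rtt in NETWORK_RTT_MS
]

for rtt, cloud, local in rows:
    print(f"rtt={rtt:>5.0f} ms  cloud={cloud:>5.0f} ms  on-device={local:>5.0f} ms")
```

With these placeholder numbers, the cloud path is faster on a very good connection, but its response time varies with the network while the on-device figure stays constant. That predictability is the property the bullet above refers to.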

Implementation Considerations: Successfully deploying on-device AI requires different expertise than cloud implementations. Enterprises need to evaluate their edge computing capabilities, because how a model is implemented and integrated on the device also affects its performance.

If enterprises choose to use open-source models or build their own, the development process may involve training models on large datasets, optimizing them, and then deploying the optimized versions to the devices' onboard memory.
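One practical check during the optimization step is whether the model fits in the target device's memory at all. The following is a minimal sketch under stated assumptions: the parameter count and memory budget are hypothetical, and the estimate covers weight storage only, ignoring activations and runtime overhead.

```python
def model_size_bytes(num_params: int, bits_per_weight: int) -> int:
    """Estimate weight storage: one value of `bits_per_weight` bits per parameter."""
    return num_params * bits_per_weight // 8


def fits_on_device(num_params: int, bits_per_weight: int, budget_bytes: int) -> bool:
    """Return True if the weights alone fit within the given memory budget."""
    return model_size_bytes(num_params, bits_per_weight) <= budget_bytes


# Hypothetical model and device, for illustration only.
NUM_PARAMS = 10_000_000
BUDGET_BYTES = 16 * 1024 * 1024  # 16 MiB reserved for the model

for bits in (32, 16, 8):
    size_mb = model_size_bytes(NUM_PARAMS, bits) / 1_000_000
    verdict = "fits" if fits_on_device(NUM_PARAMS, bits, BUDGET_BYTES) else "does not fit"
    print(f"{bits:>2}-bit weights: {size_mb:.0f} MB, {verdict}")
```

In this example the 32-bit and 16-bit versions exceed the budget and only the 8-bit version fits, which is why optimization usually has to happen before deployment rather than after.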

Learn about what matters in selecting the best neural network architecture for speech recognition.

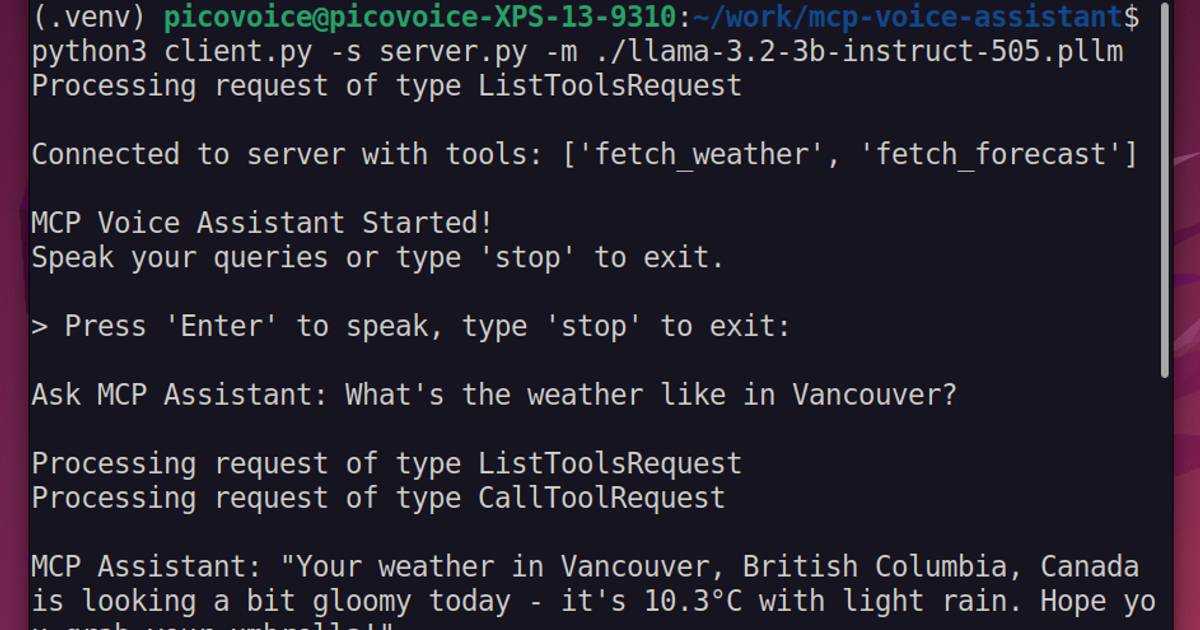

Achieving Cloud-Level Accuracy with On-Device AI: The Picovoice Example

Picovoice pioneered production-grade on-device voice AI before it became an industry imperative. Picovoice's on-device voice AI platform delivers cloud-level accuracy while running entirely on-device, addressing the fundamental tension between accuracy on one side and privacy, latency, and cost at scale on the other.

Picovoice has solved the core challenges of edge voice AI: wake word detection with minimal compute requirements, which enables cross-platform deployment from microcontrollers to mobile devices; speech-to-text that is faster and more accurate than Google's large models; and intent detection with industry-leading accuracy. Enterprises using Picovoice achieve the benefits of cloud repatriation without investing in their own data centers. But how?

What Makes Picovoice Different?

Most edge AI models are produced by applying post-training optimization to pre-trained models. Since these models were not designed for edge deployment in the first place, the potential optimizations are limited. Furthermore, they depend on general-purpose open-source runtimes like PyTorch or TensorFlow, which further limits performance improvements. As a result, achieving cloud-level accuracy on the edge remains a challenge.

Picovoice takes a different approach: end-to-end optimization. It builds and owns the entire process, from the data and training process to the inference engines. This allows on-device AI models to achieve:

- On-par or better accuracy than cloud-based alternatives

- Cross-platform deployment, from microcontrollers to mobile

- Faster inference and enhanced privacy, even without connectivity

This approach demonstrates that cloud-quality AI is achievable entirely on-device.

On-Device AI Is the Strategic Imperative

The shift to on-device AI represents more than a technical evolution. It's a strategic recalibration of how intelligent systems serve users. The question for product and technology leaders isn't whether to adopt on-device AI, but when and how. Early movers gain a competitive advantage through superior user privacy, performance that doesn't degrade with network conditions, and lower operational costs at scale. Late adopters will face mounting pressure from regulations, user expectations, and cloud cost structures that become untenable as usage grows.

Key Takeaways

- On-device AI is now foundational for privacy, performance, and cost efficiency.

- Cloud-quality AI is achievable on-device with the right architecture and frameworks.

- Voice AI is one of the most mature and production-ready edge AI applications today.

Next Steps

For teams evaluating AI deployment models, the calculus is increasingly clear. On-device AI isn't a constraint to work around, but the foundation to build upon. And for voice AI specifically, Picovoice has spent years ensuring that foundation is production-ready.

To explore how on-device AI can power your next-generation products, talk to our experts pioneering on-device AI.
