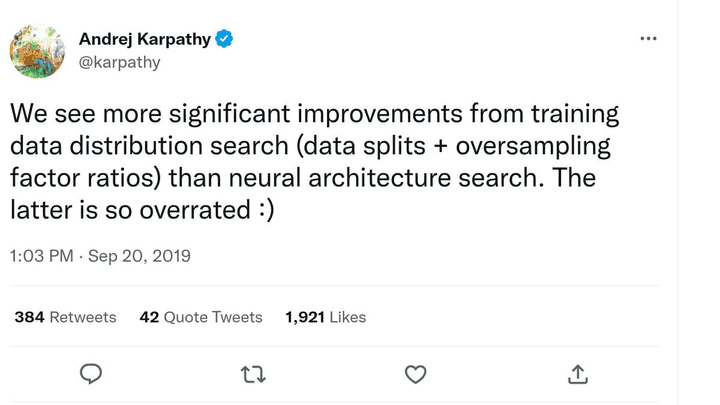

There is a widespread belief in the deep learning community that using the latest architecture always gives the best result. This line of thinking is especially pronounced among junior practitioners. In our experience, model architecture is not the crucial factor. It matters, just not as much as you think or hope! If you don't believe us, check this tweet from the head of autonomous driving at Tesla, Andrej Karpathy.

Why?

A Deep Neural Network (DNN) is a computing machine with numerous free parameters, i.e. weights. You can shape it (train it) to do what you want. The same applies to feed-forward DNNs, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Transformers, etc.

For any given problem, a subset of these models is presumed to perform better because we believe their internal architecture is better suited to the task. For example, RNNs bring internal recurrence and inherent state to time series problems, and they were the leading model in this space for many years. Yet, surprisingly, several talented folks surpassed RNN results in speech recognition using simple CNNs, contradicting popular belief. How did this happen?

What matters?

The main task of a deep learning practitioner is to simulate the real problem within the training loop, as closely as possible to the conditions the model will face in the field. Easier said than done. Accurate simulation is where we spend a good chunk of our time at Picovoice. It is tricky and requires patience, experimentation, and domain knowledge. Given an accurate simulation, the network at least has a chance to know what it needs to learn to succeed out there! The second task is to make the learning process easier and more efficient. Data preprocessing, feature augmentation, curriculum learning, and more advanced optimization techniques all play a role here.
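
To make "simulating reality inside the training loop" a little more concrete, here is a minimal sketch of one common approach for audio: mixing background noise into clean speech at a randomly chosen signal-to-noise ratio (SNR) each time a training example is drawn. This is an illustration only, not a description of Picovoice's actual pipeline. The function names (`rms`, `mix_at_snr`, `augment`) and the 0 to 20 dB SNR range are assumptions made for the example.

```python
import math
import random


def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so the speech-to-noise ratio equals `snr_db`, then add it.

    Assumes both inputs have the same length and that `noise` is not silent.
    """
    # Choose the gain so that 20 * log10(rms(speech) / (gain * rms(noise))) == snr_db.
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]


def augment(speech, noise, rng, snr_range_db=(0.0, 20.0)):
    """Draw a random SNR (hypothetical range) and return the noisy signal with it."""
    snr_db = rng.uniform(*snr_range_db)
    return mix_at_snr(speech, noise, snr_db), snr_db


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-ins for real recordings: a 440 Hz tone and white Gaussian noise.
    speech = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
    noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]
    noisy, snr_db = augment(speech, noise, rng)
    print(f"mixed {len(noisy)} samples at {snr_db:.1f} dB SNR")
```

Drawing a fresh SNR on every call means the network rarely sees the same clean recording under the same conditions twice, which is one simple way to bring the training distribution closer to what the model will encounter once deployed.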