There is no shortage of articles discussing which deep learning framework is the best.

In this article, we focus on a niche: which framework makes your life easier if your goal is on-device deployment? We also explore the controversial topic of building your own in-house on-device inference engine.

TensorFlow

TensorFlow comes with TensorFlow Lite for deployment on Android, iOS, and single-board computers (e.g. Raspberry Pi). TensorFlow Lite for Microcontrollers (often called TensorFlow Lite Micro) also supports several microcontrollers (MCUs). The level of support and maturity is high. You don't need to write Single Instruction Multiple Data (SIMD) code for these platforms yourself, and you can remain productive in Python. If your trained model runs on your target platform using TensorFlow Lite and that is all you need to deliver, TensorFlow should be your first choice.
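To give a feel for how little code the Python side needs, here is a minimal sketch of converting a Keras model to the TensorFlow Lite format. The tiny model, its layer sizes, and the `model.tflite` file name are placeholders chosen for illustration; a real project would convert its own trained model and may need different converter settings.

```python
import tensorflow as tf

# Hypothetical stand-in for a trained model: 16 input features, 4 output classes.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(16,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(4, activation="softmax"),
])

# Convert the Keras model into a TensorFlow Lite FlatBuffer.
converter = tf.lite.TFLiteConverter.from_keras_model(model)
# Optional: let the converter apply its default size/latency optimizations.
converter.optimizations = [tf.lite.Optimize.DEFAULT]
tflite_model = converter.convert()  # serialized model as bytes

# Write the file that the on-device runtime would load (file name is arbitrary).
with open("model.tflite", "wb") as f:
    f.write(tflite_model)
```

The resulting file is what gets bundled with the Android, iOS, or Linux application; the platform-specific kernels live in the runtime, not in your code.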

PyTorch

PyTorch has the reputation of being a researcher-friendly platform, and the cool kids on the block use PyTorch, period. Its dynamic (define-by-run) graph building and imperative interface are great for productivity and experimentation. PyTorch officially supports Android and iOS for edge deployment. Support for single-board computers (e.g. Arm-based Linux boxes) and microcontrollers is unofficial. We can tell you that semiconductor companies love adding support for PyTorch because they see the traction it has. Things might change fast here! As a side note, PyTorch is no longer governed by Meta alone: since 2022 the project has been hosted by the PyTorch Foundation, which is part of the Linux Foundation.
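For comparison, a mobile export in PyTorch can be sketched as follows. This uses the TorchScript-based PyTorch Mobile workflow (`torch.jit.trace`, `optimize_for_mobile`, and the lite-interpreter save method); the `TinyNet` model and the output file name are hypothetical, and newer PyTorch releases point to ExecuTorch for this job, so treat this as an illustration rather than the recommended path for every version.

```python
import os
import tempfile

import torch
from torch.utils.mobile_optimizer import optimize_for_mobile


class TinyNet(torch.nn.Module):
    """Hypothetical stand-in for a trained model (16 inputs, 4 outputs)."""

    def __init__(self):
        super().__init__()
        self.fc1 = torch.nn.Linear(16, 8)
        self.fc2 = torch.nn.Linear(8, 4)

    def forward(self, x):
        return self.fc2(torch.relu(self.fc1(x)))


model = TinyNet().eval()
example_input = torch.rand(1, 16)

# Record the operations executed for the example input as a TorchScript graph.
traced = torch.jit.trace(model, example_input)

# Apply mobile-oriented graph optimizations to the traced module.
mobile_module = optimize_for_mobile(traced)

# Save in the format loaded by the PyTorch Mobile lite interpreter.
export_path = os.path.join(tempfile.mkdtemp(), "tinynet.ptl")
mobile_module._save_for_lite_interpreter(export_path)
```

Note that tracing freezes the control flow seen for the example input, so models with data-dependent branches usually need `torch.jit.script` instead.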

In-House

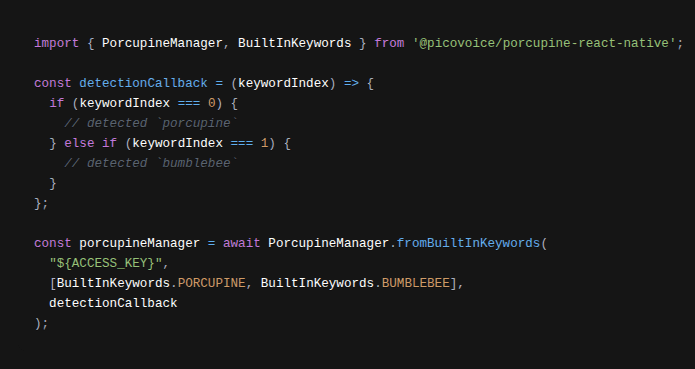

We underestimated how hard this can be. So why did we build our own? Because we wanted to accelerate the transition of voice recognition from the cloud to the Edge. In our charter, Edge was anything from a web browser to mobile, PC, single-board computers, and microcontrollers. There wasn't (and still isn't) any framework that supports this breadth. More importantly, we knew we would get at least 10X faster runtime if we trained our models with knowledge of the inference engine (that is, the instructions it executes). But this level of coupling and system-level optimization is not always required.
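The article does not say how this coupling between training and the inference engine was implemented. As one generic illustration (an assumption, not the authors' method), a model can be trained so that its weights and activations fit an 8-bit integer grid, which lets the engine use integer instructions in its inner loops. The sketch below shows only the inference-side arithmetic in plain Python; the function names and sample values are made up for the example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> (int8-range ints, scale)."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def int8_dot(q_a, q_b):
    """Integer-only dot product, the kind of loop integer SIMD speeds up."""
    return sum(a * b for a, b in zip(q_a, q_b))


# Illustrative values only.
weights = [0.5, -1.0, 0.25]
inputs = [1.0, 0.5, -2.0]

q_w, scale_w = quantize_int8(weights)
q_x, scale_x = quantize_int8(inputs)

# Accumulate in integers, then rescale once back to floating point.
approx = int8_dot(q_w, q_x) * scale_w * scale_x
exact = sum(w * x for w, x in zip(weights, inputs))
```

Here `approx` stays close to `exact`. A model trained with this rounding in the loop learns to tolerate the small error, which is the general idea behind training with the target instructions in mind.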