TLDR: This tutorial shows how to integrate a voice assistant into smart kitchen appliances using on-device voice SDKs in Python. We'll build a complete embedded kitchen voice interface that controls appliance functions such as timers, temperature adjustments, and device activation, all without relying on cloud APIs or streaming audio to external servers.

Smart kitchen appliances demand voice interfaces that are safe, fast, and GDPR/CCPA compliant. Cloud voice APIs stream customer audio to external servers and add network latency to every command. For appliance manufacturers building ovens, refrigerators, and robotic kitchen systems, on-device voice processing provides low latency, complete audio privacy, and custom branded wake words like "Hey Chef" instead of the generic wake word of a general-purpose voice assistant.

This smart kitchen voice assistant uses Porcupine Wake Word, Rhino Speech-to-Intent, and Orca Text-to-Speech for on-device voice processing. All audio is processed on the Raspberry Pi itself. This eliminates network latency and keeps voice data on the device, which supports privacy compliance for smart kitchen products.

This demo runs on a Raspberry Pi, but the same voice SDKs also support ARM Cortex-M, Linux, Android, iOS, and web browsers. Check out Picovoice's platform documentation to integrate voice AI into your own smart kitchen appliances.

Why Choose On-Device Voice Processing for Smart Kitchen Appliances

For smart kitchen products, on-device voice processing offers critical advantages:

Low-Latency Voice Processing: Cloud voice APIs send audio to remote servers and wait for responses. Depending on the network, this can add one to two seconds of round-trip delay to each voice command. On-device voice recognition processes commands directly on the kitchen appliance hardware as the audio arrives. Wake word detection, speech recognition, and voice synthesis all happen locally, without network delays.

Privacy & GDPR Compliance: All audio processing stays on-device. No voice data leaves the appliance, simplifying GDPR and CCPA compliance for smart kitchen manufacturers.

Simple SDK Integration: The voice SDKs embed directly into your existing appliance stack as Python packages: one per engine, plus two small audio I/O libraries. There is no cloud API management, no OAuth flow, and no webhooks. Because Rhino returns each command as a structured intent with slots, voice commands map directly to IoT device actions.
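To put "processes commands as the audio arrives" in numbers: Porcupine and Rhino consume audio in fixed-size frames. The sketch below assumes the values current Python releases report for `porcupine.frame_length` and `porcupine.sample_rate`, namely 512 samples at 16 kHz. In real code, read these from the engine objects instead of hard-coding them. Under that assumption, each frame holds 32 ms of audio, so the engines evaluate new speech every 32 ms rather than waiting on a network round trip.

```python
def frame_duration_ms(frame_length: int, sample_rate: int) -> float:
    """Duration of one audio frame in milliseconds."""
    return 1000.0 * frame_length / sample_rate


# Assumed values: 512-sample frames at 16 kHz, as reported by
# porcupine.frame_length and porcupine.sample_rate on typical builds.
FRAME_LENGTH = 512
SAMPLE_RATE = 16000

print(f"{frame_duration_ms(FRAME_LENGTH, SAMPLE_RATE):.1f} ms per frame")  # 32.0 ms per frame
print(f"{SAMPLE_RATE / FRAME_LENGTH:.2f} frames per second")  # 31.25 frames per second
```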

Voice AI Architecture for Smart Kitchen Appliances

The kitchen voice assistant combines three on-device SDKs:

- Porcupine Wake Word - Detects custom voice activation phrases

- Rhino Speech-to-Intent - Maps speech directly to structured commands

- Orca Text-to-Speech - Generates voice responses

Traditional pipelines first transcribe speech to text and then run natural language understanding on the transcript. Rhino Speech-to-Intent instead infers the user's intent and its parameters (or "slots") directly from speech in a single end-to-end step, achieving high accuracy for command-and-control applications.
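To make "intents and slots" concrete: the object returned by pvrhino's `get_inference()` exposes three fields:

- `is_understood`
- `intent`
- `slots`, a dict mapping slot names to values

The sketch below uses a stand-in dataclass with those same fields so it runs without the SDK. The `TimerControl` intent and `minutes` slot in the example are hypothetical placeholders for whatever your context defines.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Inference:
    """Stand-in mirroring the fields of pvrhino's inference result."""
    is_understood: bool
    intent: Optional[str] = None
    slots: Dict[str, str] = field(default_factory=dict)


def summarize(inference: Inference) -> str:
    """Render an inference as a one-line summary, e.g. for logging."""
    if not inference.is_understood:
        return "not understood"
    slot_text = ", ".join(f"{k}={v}" for k, v in sorted(inference.slots.items()))
    return f"{inference.intent}({slot_text})"


# After the wake word, "set a timer for ten minutes" might yield something like:
example = Inference(is_understood=True, intent="TimerControl", slots={"minutes": "10"})
print(summarize(example))                         # TimerControl(minutes=10)
print(summarize(Inference(is_understood=False)))  # not understood
```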

What You Need to Build a Smart Kitchen Voice Interface

Hardware:

- Raspberry Pi 3/4/5

- USB microphone

- Speaker (USB, Bluetooth, or 3.5mm)

Software:

- Python 3.9+

- Picovoice Console account

Estimated Time: ~45 minutes including setup

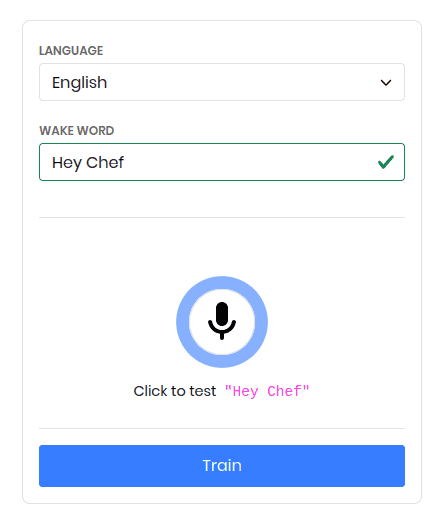

Step 1: Create a Custom Wake Word for the Kitchen Assistant

- To train the custom wake word model, sign in to the Picovoice Console (create an account if you don't have one yet).

- In the Porcupine section of the Console, train the custom wake phrase, such as "Hey Chef".

- Download the keyword file, choosing Raspberry Pi as the target platform.

You will now find a zipped folder in your downloads containing the .ppn file for the custom wake word.

For tips on choosing an effective wake word, see the Picovoice guide on choosing a wake word.

Step 2: Add Custom Voice Commands to the AI Kitchen Assistant

- In the Rhino section of the Picovoice Console, create an empty Rhino context for the AI voice assistant.

- Click the "Import YAML" button in the top-right corner of the Console. Paste the YAML provided below to add the intents and expressions for the voice assistant.

- Download the context file, choosing Raspberry Pi as the target platform.

You will now find a zipped folder in your downloads containing the .rhn file for the custom context.

You can refer to the Rhino Syntax Cheat Sheet for more details on how to build your custom context.

YAML Context for the Kitchen Assistant:

This context defines four main intents:

- TimerControl: Set and manage kitchen timers

- TemperatureControl: Adjust oven temperature

- ApplianceControl: Turn kitchen devices on/off

- AskState: Ask current appliance status

With these intents, the kitchen assistant can handle appliance-focused tasks. Because every command arrives as a structured intent, the same context lays the groundwork for integration with IoT platforms or embedded systems, enabling workflows where multiple devices coordinate in real time.
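The intent names in the YAML context and the handler code must match exactly. A small consistency check can catch a renamed or forgotten intent early. This is an illustrative sketch: `KITCHEN_INTENTS` mirrors the four intents listed above, and the handler table is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# Intent names from the Rhino context; they must match the YAML exactly.
KITCHEN_INTENTS = frozenset({"TimerControl", "TemperatureControl", "ApplianceControl", "AskState"})


def check_intent_coverage(handlers: Dict[str, Callable]) -> Tuple[List[str], List[str]]:
    """Return (context intents without a handler, handlers without a matching intent)."""
    handled = set(handlers)
    missing = sorted(KITCHEN_INTENTS - handled)
    extra = sorted(handled - KITCHEN_INTENTS)
    return missing, extra


# Hypothetical handler table covering only some of the intents so far.
handlers = {
    "TimerControl": lambda slots: "timer handled",
    "TemperatureControl": lambda slots: "temperature handled",
    "AskState": lambda slots: "state reported",
}
print(check_intent_coverage(handlers))  # (['ApplianceControl'], [])
```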

To see how Rhino Speech-to-Intent can be applied beyond appliances, take a look at the AI Cooking Companion in Python.

Step 3: Build the Smart Kitchen Voice Control Pipeline in Python

With the wake word and Rhino context ready, let's write the Python code that brings everything together.

Initialize the Voice AI Engines and Audio I/O
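A minimal initialization sketch. It assumes that the five packages listed in Step 4 are installed, and that `keyword_path` and `context_path` point to the `.ppn` and `.rhn` files from Steps 1 and 2. The function and parameter names (`create_voice_stack`, `release_voice_stack`, `mic_index`, `speaker_index`) are illustrative, not taken from the original script. The constructors it calls (`pvporcupine.create`, `pvrhino.create`, `pvorca.create`, `PvRecorder`, `PvSpeaker`) are the SDKs' public entry points.

```python
def create_voice_stack(access_key, keyword_path, context_path,
                       mic_index=-1, speaker_index=-1):
    """Create the wake word, intent, and TTS engines plus microphone and speaker."""
    # Imported inside the function so this file can be loaded without the SDKs.
    import pvorca
    import pvporcupine
    import pvrhino
    from pvrecorder import PvRecorder
    from pvspeaker import PvSpeaker

    porcupine = pvporcupine.create(access_key=access_key, keyword_paths=[keyword_path])
    rhino = pvrhino.create(access_key=access_key, context_path=context_path)
    orca = pvorca.create(access_key=access_key)

    # One recorder feeds both engines, so they must agree on the frame format.
    if (porcupine.frame_length, porcupine.sample_rate) != (rhino.frame_length, rhino.sample_rate):
        raise ValueError("Porcupine and Rhino expect different audio frame formats")

    # device_index=-1 selects the system default microphone / speaker.
    recorder = PvRecorder(frame_length=porcupine.frame_length, device_index=mic_index)
    # Orca produces 16-bit PCM at orca.sample_rate.
    speaker = PvSpeaker(sample_rate=orca.sample_rate, bits_per_sample=16,
                        device_index=speaker_index)
    return porcupine, rhino, orca, recorder, speaker


def release_voice_stack(porcupine, rhino, orca, recorder, speaker):
    """Free the native resources held by each engine and audio device."""
    for resource in (recorder, speaker, orca, rhino, porcupine):
        resource.delete()
```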

Detect the Custom Wake Word

Porcupine Wake Word continuously processes the microphone input to detect the predefined wake phrase. When the phrase is recognized, the system triggers the inference pipeline and generates an acknowledgment through Orca Text-to-Speech.
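A sketch of the wake word loop. To keep it testable without a microphone, it takes the frame source and the detector as plain callables. With the SDKs, you would pass `recorder.read` and `porcupine.process`. `porcupine.process` returns the index of the detected keyword, or -1 when no keyword is present in the frame, and that value is what the loop checks. The function name is illustrative.

```python
from typing import Callable, Sequence


def wait_for_wake_word(read_frame: Callable[[], Sequence[int]],
                       detect: Callable[[Sequence[int]], int]) -> int:
    """Feed audio frames to the wake word detector until it fires.

    Returns the number of frames consumed. With the real SDKs, pass
    recorder.read and porcupine.process; process() returns -1 when no
    keyword is detected and the keyword index otherwise.
    """
    frames = 0
    while True:
        pcm = read_frame()
        frames += 1
        if detect(pcm) >= 0:
            return frames
```

In the assistant, start the recorder first with `recorder.start()`. Then call `wait_for_wake_word(recorder.read, porcupine.process)`, and play an acknowledgment through Orca once it returns.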

Run On-Device Intent Recognition

Once activated, Rhino Speech-to-Intent processes the incoming audio in real time. When the user finishes speaking, it returns a structured inference containing the intent and any relevant slots.
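The intent step follows the same pattern, sketched here with callables so it runs without hardware. With the SDK, you would pass `recorder.read`, `rhino.process`, and `rhino.get_inference`. `rhino.process` returns `True` once Rhino has finalized the utterance, and only then is `get_inference()` called. The function name is illustrative.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def listen_for_intent(read_frame: Callable[[], Sequence[int]],
                      process: Callable[[Sequence[int]], bool],
                      get_inference: Callable[[], T]) -> T:
    """Stream frames into the intent engine until it finalizes, then return the inference.

    With the real SDK: listen_for_intent(recorder.read, rhino.process, rhino.get_inference).
    """
    # process() returns False while the user is still speaking.
    while not process(read_frame()):
        pass
    return get_inference()
```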

Execute Appliance Actions from User Intents

The execute_appliance_action function converts Rhino’s inference output into actions for the target device and generates a text response for playback.
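Here is a hypothetical version of `execute_appliance_action`. Its signature, the in-memory `KitchenState`, and the following slot names are all assumptions:

- slot names `minutes`, `temperature`, `appliance`, and `power`
- numeric slot values arriving as digit strings

Adjust them to match the slots in your YAML context. Replace the state updates with calls to your appliance firmware or IoT API.

```python
from typing import Dict, Optional


class KitchenState:
    """In-memory stand-in for real appliance state (hypothetical)."""

    def __init__(self) -> None:
        self.timer_minutes: Optional[int] = None
        self.oven_temperature: Optional[int] = None
        self.appliances: Dict[str, bool] = {}


def execute_appliance_action(intent: str, slots: Dict[str, str], state: KitchenState) -> str:
    """Apply one Rhino intent to the kitchen state and return the text to speak."""
    if intent == "TimerControl" and "minutes" in slots:
        state.timer_minutes = int(slots["minutes"])  # assumes a digit string
        return f"Timer set for {state.timer_minutes} minutes."
    if intent == "TemperatureControl" and "temperature" in slots:
        state.oven_temperature = int(slots["temperature"])
        return f"Oven set to {state.oven_temperature} degrees."
    if intent == "ApplianceControl" and "appliance" in slots:
        on = slots.get("power", "on") == "on"
        state.appliances[slots["appliance"]] = on
        return f"{slots['appliance'].capitalize()} turned {'on' if on else 'off'}."
    if intent == "AskState" and "appliance" in slots:
        status = "on" if state.appliances.get(slots["appliance"], False) else "off"
        return f"The {slots['appliance']} is {status}."
    # Unknown intent or missing slot: answer instead of failing silently.
    return "Sorry, I can't do that yet."
```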

Generate Voice Feedback

Orca Text-to-Speech synthesizes the response text into natural-sounding audio, completing the embedded conversational loop.
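A playback sketch with two assumptions:

- a recent `pvorca` release, in which `orca.synthesize(text)` returns a `(pcm, alignments)` tuple
- a `PvSpeaker` created with `orca.sample_rate` and 16 bits per sample

`PvSpeaker.write` accepts only as much audio as its buffer can currently hold and returns the number of samples it took. `write_all` therefore loops until the whole reply is queued, and `flush()` then blocks until playback finishes. The helper names are illustrative.

```python
from typing import Callable, Sequence


def write_all(write: Callable[[Sequence[int]], int], pcm: Sequence[int]) -> int:
    """Keep calling write() until every sample is queued; returns samples written."""
    written = 0
    while written < len(pcm):
        # write() may accept only part of what we pass and reports how much it took.
        written += write(pcm[written:])
    return written


def speak(orca, speaker, text: str) -> None:
    """Synthesize text with Orca and play it through PvSpeaker (blocking)."""
    pcm, _alignments = orca.synthesize(text)
    speaker.start()
    write_all(speaker.write, pcm)
    speaker.flush()  # block until the buffered audio has played
    speaker.stop()
```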

Complete Python Script

Here's the complete Python script integrating all components into an embedded voice AI stack for smart kitchen appliances:
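The condensed sketch below shows how such a script can wire the stages into one loop. It assumes the five Picovoice packages are installed and that `pvorca` is a recent release returning `(pcm, alignments)` from `synthesize`. `run_kitchen_assistant`, `default_handler`, and the spoken prompts are illustrative; pass your own `execute_appliance_action`-style function as `handle_intent`. The recorder is stopped while Orca speaks, so the assistant does not pick up its own voice.

```python
def default_handler(intent, slots):
    """Placeholder action handler: just echoes the recognized intent."""
    return f"Okay, {intent}."


def run_kitchen_assistant(access_key, keyword_path, context_path,
                          mic_index=-1, speaker_index=-1, handle_intent=default_handler):
    """Wake word -> intent -> action -> spoken reply, repeated until Ctrl+C."""
    import pvorca
    import pvporcupine
    import pvrhino
    from pvrecorder import PvRecorder
    from pvspeaker import PvSpeaker

    porcupine = pvporcupine.create(access_key=access_key, keyword_paths=[keyword_path])
    rhino = pvrhino.create(access_key=access_key, context_path=context_path)
    orca = pvorca.create(access_key=access_key)
    recorder = PvRecorder(frame_length=porcupine.frame_length, device_index=mic_index)
    speaker = PvSpeaker(sample_rate=orca.sample_rate, bits_per_sample=16,
                        device_index=speaker_index)

    def say(text):
        pcm, _alignments = orca.synthesize(text)
        speaker.start()
        written = 0
        while written < len(pcm):  # write() may accept only part of the audio
            written += speaker.write(pcm[written:])
        speaker.flush()  # wait for playback to finish
        speaker.stop()

    try:
        while True:
            # 1. Listen for the wake word.
            recorder.start()
            while porcupine.process(recorder.read()) < 0:
                pass
            recorder.stop()  # mute the mic while the assistant talks
            say("Yes?")

            # 2. Stream the command into Rhino until it finalizes.
            recorder.start()
            while not rhino.process(recorder.read()):
                pass
            recorder.stop()

            # 3. Act on the result and answer.
            inference = rhino.get_inference()
            if inference.is_understood:
                say(handle_intent(inference.intent, inference.slots))
            else:
                say("Sorry, I didn't catch that.")
    except KeyboardInterrupt:
        print("Stopping.")
    finally:
        for resource in (recorder, speaker, orca, rhino, porcupine):
            resource.delete()
```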

Step 4: Run the Voice Stack on Raspberry Pi

With the script and model files ready, it’s time to deploy the embedded voice AI pipeline on a Raspberry Pi. For this demo, we used a Raspberry Pi 4 with a USB microphone for input and a Bluetooth speaker for output. The same Python setup also runs on laptops and other single-board computers, and the underlying SDKs are available for web browsers as well.

Transfer your project to the Raspberry Pi

Transfer your script and model files to the Raspberry Pi with scp. Replace <your-pi-ip-address> with your Pi’s address and add the path to your project folder:

Connect and prepare the Raspberry Pi

Make sure your Raspberry Pi is connected to a microphone and speaker so it can capture voice and play spoken responses, then log in to the Pi to launch the application. Run the following in the Raspberry Pi’s terminal:

Install Dependencies on Raspberry Pi

Install all required Python SDKs and supporting libraries:

- pvporcupine: Porcupine Wake Word Python SDK

- pvrhino: Rhino Speech-to-Intent Python SDK

- pvorca: Orca Text-to-Speech Python SDK

- pvrecorder: Picovoice Python Recorder library

- pvspeaker: Picovoice Python Speaker library
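After installing, a quick check confirms that the interpreter you will run the assistant with can actually find each package. This is an illustrative helper, not part of the SDKs. It uses only the standard library's `importlib.util.find_spec`, and assumes each package's import name matches its pip name, as it does for the five listed above.

```python
import importlib.util
from typing import Iterable, List

# Import names of the packages listed above.
PICOVOICE_MODULES = ("pvporcupine", "pvrhino", "pvorca", "pvrecorder", "pvspeaker")


def missing_modules(names: Iterable[str] = PICOVOICE_MODULES) -> List[str]:
    """Return the module names this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print(missing_modules() or "All Picovoice packages found.")
```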

Run the Smart Appliance Stack

You will need the Picovoice AccessKey to use the SDKs. Copy it from the Picovoice Console.

Run the following commands in the Raspberry Pi’s terminal. Replace the placeholder values with your actual ACCESS_KEY and the paths to your model files. The audio device indices are optional; if you omit them, the system default devices are used.
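The sketch below shows one way a script can accept these values from the command line. The flag names (`--access_key`, `--keyword_path`, `--context_path`, `--mic_index`, `--speaker_index`, `--show_audio_devices`) are hypothetical. A default index of -1 lets PvRecorder and PvSpeaker fall back to the system default devices. `show_audio_devices` uses the libraries' `get_available_devices()` methods to print the indices you can pass in.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Command-line options for the kitchen assistant (flag names are hypothetical)."""
    parser = argparse.ArgumentParser(description="On-device smart kitchen voice assistant")
    parser.add_argument("--access_key", help="Picovoice AccessKey from the Console")
    parser.add_argument("--keyword_path", help="Path to the .ppn wake word file")
    parser.add_argument("--context_path", help="Path to the .rhn context file")
    # -1 asks PvRecorder / PvSpeaker for the system default device.
    parser.add_argument("--mic_index", type=int, default=-1, help="Microphone device index")
    parser.add_argument("--speaker_index", type=int, default=-1, help="Speaker device index")
    parser.add_argument("--show_audio_devices", action="store_true",
                        help="List audio devices and exit")
    args = parser.parse_args(argv)
    # Engine settings are only required when actually running the assistant.
    if not args.show_audio_devices:
        for name in ("access_key", "keyword_path", "context_path"):
            if getattr(args, name) is None:
                parser.error(f"--{name} is required")
    return args


def show_audio_devices() -> None:
    """Print the capture and playback devices PvRecorder and PvSpeaker can see."""
    # Imported lazily so parse_args works even without the SDKs installed.
    from pvrecorder import PvRecorder
    from pvspeaker import PvSpeaker

    for index, name in enumerate(PvRecorder.get_available_devices()):
        print(f"mic {index}: {name}")
    for index, name in enumerate(PvSpeaker.get_available_devices()):
        print(f"speaker {index}: {name}")
```

A `main()` could call `show_audio_devices()` when `args.show_audio_devices` is set. Otherwise it would pass `args.mic_index` and `args.speaker_index` to the recorder and speaker.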

The voice assistant is now running and listening for the wake word.

Next Steps for Smart Kitchen Appliance Deployment

Once the on-device kitchen voice assistant is running, here are some ways you can expand it:

- Integrate with Smart Kitchen IoT Devices: Move beyond the Raspberry Pi by connecting the AI voice assistant to real-world smart kitchen appliances.

- Multiple Languages: Picovoice engines support a wide range of languages, allowing you to adapt the voice assistant for different regions.

- Advanced Conversational Flows: Implement more complex dialogue management for multi-turn interactions.