Capturing real-time PCM audio in React Native is essential for speech recognition, wake word detection, and voice-controlled apps, yet many existing audio libraries do not expose low-level microphone frames. React Native Voice Processor addresses this gap as a cross-platform audio capture library that provides direct access to raw PCM audio frames on iOS and Android, optimized for low-latency, real-time speech processing. This tutorial explains how to capture and stream PCM audio in React Native using React Native Voice Processor for voice AI applications.

Quick Answer: How to Capture Real-time PCM Audio in React Native

React Native Voice Processor gives developers real-time access to microphone audio as raw PCM frames, ready for speech recognition. Install the npm package @picovoice/react-native-voice-processor, configure microphone permissions for iOS and Android, access the VoiceProcessor singleton instance, register frame listeners to receive audio data, and call start() with your frame length (e.g., 512 samples) and sample rate (e.g., 16 kHz) to begin recording. Audio frames stream continuously to your listeners until you call stop().

What you'll learn in this guide:

- Install and configure React Native Voice Processor in a React Native project

- Set up microphone permissions and handle permission requests automatically

- Capture continuous PCM audio frames at 16 kHz optimized for speech recognition

- Process the same audio stream with multiple simultaneous listeners

Requirements and Platform Support

Platforms

- Android 5.0+ (API 21+)

- iOS 11.0+

System Requirements

- Node.js (16+)

- Android SDK (21+)

- JDK (11+)

- Xcode (11+)

- CocoaPods

Record Real-time PCM Audio in React Native: Step-by-Step Implementation

Step 1: Install React Native Voice Processor for Audio Recording

Install the @picovoice/react-native-voice-processor package from npm (for example, with npm install @picovoice/react-native-voice-processor).

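This lightweight library provides cross-platform audio recording for both iOS and Android. On iOS, run pod install from your project's ios directory to install the native CocoaPods dependency. Beyond that and the standard microphone permissions, no native code configuration is required.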

Step 2: Configure Microphone Permissions for iOS and Android

iOS Microphone Permission Configuration

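Add the NSMicrophoneUsageDescription key to your Info.plist file, with a short string explaining why your app needs the microphone. iOS displays this string in the permission prompt.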

Android Microphone Permission Configuration

Add the android.permission.RECORD_AUDIO permission to your AndroidManifest.xml using a uses-permission element.

Step 3: Access the VoiceProcessor Instance

Import VoiceProcessor and access the singleton instance that manages audio recording:

The VoiceProcessor singleton ensures consistent audio capture across your application, allowing multiple components to register listeners for the same audio stream.

Step 4: Register Audio Frame and Error Listeners

Set up listeners to receive audio frames and handle any errors that occur during recording:

Frame listeners receive continuous PCM audio data that can be fed directly into speech recognition engines, voice activity detectors, or custom audio processing pipelines. Error listeners allow you to gracefully handle recording issues.
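To make the listener step concrete, here is a minimal sketch that registers one frame listener computing a signal level in dBFS (a simple custom processing step, useful for a level meter) and one error listener. It assumes the registration methods are named addFrameListener and addErrorListener and that each frame arrives as a number[] of 16-bit PCM samples; check both against the API documentation for your version. ListenerRegistry is a structural stand-in so the sketch runs without the native module; in an app you would pass the VoiceProcessor instance instead.

```typescript
// ASSUMPTION: callback shapes mirror VoiceProcessor's frame and error
// listeners, with frames delivered as arrays of 16-bit PCM sample values.
type FrameListener = (frame: number[]) => void;
type ErrorListener = (error: Error) => void;

// ASSUMPTION: method names mirror VoiceProcessor's listener registration API.
interface ListenerRegistry {
  addFrameListener(listener: FrameListener): void;
  addErrorListener(listener: ErrorListener): void;
}

// Root-mean-square level of a 16-bit PCM frame in dBFS (0 dBFS = full scale).
function frameLevelDbfs(frame: number[]): number {
  if (frame.length === 0) return -Infinity;
  let sumSquares = 0;
  for (const sample of frame) {
    const normalized = sample / 32768; // map the Int16 range to [-1, 1)
    sumSquares += normalized * normalized;
  }
  const rms = Math.sqrt(sumSquares / frame.length);
  return rms > 0 ? 20 * Math.log10(rms) : -Infinity;
}

// Registers a level-meter frame listener and an error listener.
function registerListeners(
  processor: ListenerRegistry,
  onLevel: (dbfs: number) => void,
  onError: (message: string) => void,
): void {
  processor.addFrameListener((frame) => onLevel(frameLevelDbfs(frame)));
  processor.addErrorListener((error) => onError(error.message));
}

// Assumed usage in an app:
// registerListeners(VoiceProcessor.instance, setLevel, setErrorMessage);
```

Computing a per-frame level is cheap (one pass over 512 samples), so it is safe to run inside the listener on every frame.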

Step 5: Request Microphone Permissions and Start Recording

Check whether microphone access has been granted, then start recording with your chosen frame length and sample rate. Use the values your speech engine expects; Picovoice's Porcupine wake word engine, for example, processes 512-sample frames of 16 kHz audio, which is 32 ms of audio per frame:

The hasRecordAudioPermission() method automatically prompts the user for permission if not already granted. Once started, all registered frame listeners will begin receiving audio data at the specified frame length and sample rate.
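The permission check and start call can be sketched as below, together with the frame-duration arithmetic (frame length times 1000 divided by sample rate). Recorder is a structural stand-in assumed to match VoiceProcessor's hasRecordAudioPermission() and start(frameLength, sampleRate); its union return types accept either synchronous or promise-returning implementations. startIfPermitted is a hypothetical helper name, not part of the library.

```typescript
// ASSUMPTION: mirrors VoiceProcessor's hasRecordAudioPermission() and
// start(frameLength, sampleRate). The union return types let the sketch work
// whether the real methods return promises or plain values.
interface Recorder {
  hasRecordAudioPermission(): Promise<boolean> | boolean;
  start(frameLength: number, sampleRate: number): Promise<void> | void;
}

const FRAME_LENGTH = 512; // samples per frame
const SAMPLE_RATE = 16000; // samples per second (16 kHz)

// Duration of one frame: 512 * 1000 / 16000 = 32 ms.
function frameDurationMs(frameLength: number, sampleRate: number): number {
  return (frameLength * 1000) / sampleRate;
}

// Hypothetical helper: starts capture only if microphone access is granted.
// Returns false when permission is denied; errors thrown by start() propagate.
async function startIfPermitted(recorder: Recorder): Promise<boolean> {
  const granted = await recorder.hasRecordAudioPermission();
  if (!granted) {
    return false;
  }
  await recorder.start(FRAME_LENGTH, SAMPLE_RATE);
  return true;
}

// Assumed usage: await startIfPermitted(VoiceProcessor.instance);
```

Because errors from start() propagate to the caller, wrap the call in try/catch in production code.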

Step 6: Stop Recording and Clean Up Resources

When audio recording is complete, stop VoiceProcessor to release system resources:
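Because VoiceProcessor is a shared singleton, a component that stops recording may also want to detach its own listeners so they are not called the next time any component starts capture. The sketch below assumes removeFrameListener and removeErrorListener exist as counterparts to the add methods (verify this in the API reference). It uses finally so the listeners are detached even if stop() throws.

```typescript
type FrameListener = (frame: number[]) => void;
type ErrorListener = (error: Error) => void;

// ASSUMPTION: stop() mirrors the method described above; removeFrameListener
// and removeErrorListener are assumed counterparts of the add* methods.
interface StoppableProcessor {
  stop(): Promise<void> | void;
  removeFrameListener(listener: FrameListener): void;
  removeErrorListener(listener: ErrorListener): void;
}

// Stops capture, then detaches this component's listeners even if stop()
// throws; the original error (if any) still propagates to the caller.
async function stopAndCleanUp(
  processor: StoppableProcessor,
  frameListener: FrameListener,
  errorListener: ErrorListener,
): Promise<void> {
  try {
    await processor.stop();
  } finally {
    processor.removeFrameListener(frameListener);
    processor.removeErrorListener(errorListener);
  }
}
```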

Working with Multiple Audio Listeners

The VoiceProcessor instance supports multiple listeners, allowing different parts of your application to process the same audio stream simultaneously. However, only one audio configuration (frame length and sample rate) can be active at a time:

This pattern is useful when multiple features (such as wake word detection and speech transcription) need to process the same audio input concurrently.
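Since all listeners share one frame length, a feature that needs larger analysis windows can regroup frames inside its own listener. The hypothetical makeRechunker helper below is one way to do that; the 1-second chunk size in the usage comment is only an illustration, and wakeWordListener and onSecond are placeholder names.

```typescript
type FrameListener = (frame: number[]) => void;

// Hypothetical helper: builds a frame listener that regroups incoming frames
// into fixed-size chunks, for a consumer that needs a different window size
// than the single frame length VoiceProcessor was started with.
function makeRechunker(
  chunkLength: number,
  onChunk: (chunk: number[]) => void,
): FrameListener {
  if (!Number.isInteger(chunkLength) || chunkLength <= 0) {
    throw new RangeError("chunkLength must be a positive integer");
  }
  let pending: number[] = [];
  return (frame) => {
    for (const sample of frame) {
      pending.push(sample);
      if (pending.length === chunkLength) {
        onChunk(pending);
        pending = []; // start a fresh buffer; the emitted chunk is not reused
      }
    }
  };
}

// Assumed usage, with both listeners on the same 512-sample / 16 kHz stream:
// voiceProcessor.addFrameListener(wakeWordListener);               // 512-sample frames
// voiceProcessor.addFrameListener(makeRechunker(16000, onSecond)); // 1 s chunks
```

Samples left over at the end of a session stay in the pending buffer; a consumer that needs them can add an explicit flush.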

Checking Recording Status

You can check whether audio recording is currently active at any time using the isRecording() method:

This is helpful for updating UI states, preventing duplicate start calls, or coordinating audio capture with other app features.
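One way to use isRecording() to prevent duplicate start calls is a toggle that checks the current state first, plus a small in-flight flag so two rapid taps cannot both call start() before the first call finishes. RecordingControl is a structural stand-in for the VoiceProcessor methods named in this guide, and makeToggle is a hypothetical helper.

```typescript
// ASSUMPTION: structural stand-in for the VoiceProcessor methods this guide
// names; union return types accept sync or async implementations.
interface RecordingControl {
  isRecording(): Promise<boolean> | boolean;
  start(frameLength: number, sampleRate: number): Promise<void> | void;
  stop(): Promise<void> | void;
}

// Hypothetical helper: returns a toggle that stops capture if it is running and
// starts it otherwise. Resolves to the new recording state, or null if the call
// was ignored because a previous toggle had not finished yet.
function makeToggle(
  control: RecordingControl,
  frameLength = 512,
  sampleRate = 16000,
): () => Promise<boolean | null> {
  let busy = false;
  return async () => {
    if (busy) {
      return null;
    }
    busy = true; // set before the first await so overlapping calls see it
    try {
      if (await control.isRecording()) {
        await control.stop();
        return false;
      }
      await control.start(frameLength, sampleRate);
      return true;
    } finally {
      busy = false;
    }
  };
}
```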

Complete Example Code: Ready-to-use Component

Here is a complete working component that you can copy into your project to add audio capture with real-time access to PCM frames:

AudioRecorder.tsx

This is a simplified example but contains all the necessary code to get you started. For a complete demo application, refer to the React Native Voice Processor Example Demo on GitHub.
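As an illustration only, and not a reproduction of AudioRecorder.tsx, the core logic a recorder component needs can be kept separate from the UI in a framework-agnostic controller class that the component creates and drives from its buttons. VoiceProcessorLike is a structural stand-in; removeFrameListener and removeErrorListener are assumed to exist, and RecorderController is a hypothetical name.

```typescript
type FrameListener = (frame: number[]) => void;
type ErrorListener = (error: Error) => void;

// ASSUMPTION: structural subset of VoiceProcessor; the remove* methods are
// assumed counterparts of add* (check the API reference for your version).
interface VoiceProcessorLike {
  addFrameListener(listener: FrameListener): void;
  removeFrameListener(listener: FrameListener): void;
  addErrorListener(listener: ErrorListener): void;
  removeErrorListener(listener: ErrorListener): void;
  hasRecordAudioPermission(): Promise<boolean> | boolean;
  start(frameLength: number, sampleRate: number): Promise<void> | void;
  stop(): Promise<void> | void;
}

interface RecorderState {
  recording: boolean;
  error: string | null;
}

// Hypothetical controller: wires listeners, checks permission, starts and stops
// capture, and reports state changes (e.g. to a component's state setter).
class RecorderController {
  private state: RecorderState = { recording: false, error: null };
  private readonly processor: VoiceProcessorLike;
  private readonly frameListener: FrameListener;
  private readonly onStateChange: (state: RecorderState) => void;
  private readonly errorListener: ErrorListener = (error) => {
    this.update({ error: error.message });
  };

  constructor(
    processor: VoiceProcessorLike,
    onFrame: FrameListener,
    onStateChange: (state: RecorderState) => void,
  ) {
    this.processor = processor;
    this.frameListener = onFrame; // frames go straight to the caller, not into state
    this.onStateChange = onStateChange;
  }

  async start(frameLength = 512, sampleRate = 16000): Promise<void> {
    if (this.state.recording) return; // ignore duplicate start requests
    if (!(await this.processor.hasRecordAudioPermission())) {
      this.update({ error: "Microphone permission not granted" });
      return;
    }
    this.processor.addFrameListener(this.frameListener);
    this.processor.addErrorListener(this.errorListener);
    try {
      await this.processor.start(frameLength, sampleRate);
      this.update({ recording: true, error: null });
    } catch (e) {
      this.detach();
      this.update({ error: e instanceof Error ? e.message : String(e) });
    }
  }

  async stop(): Promise<void> {
    if (!this.state.recording) return;
    try {
      await this.processor.stop();
    } finally {
      this.detach();
      this.update({ recording: false });
    }
  }

  private detach(): void {
    this.processor.removeFrameListener(this.frameListener);
    this.processor.removeErrorListener(this.errorListener);
  }

  private update(patch: Partial<RecorderState>): void {
    this.state = { ...this.state, ...patch };
    this.onStateChange(this.state);
  }
}
```

In a React component you might create one controller per mount, pass a state setter as onStateChange, and call stop() in an effect cleanup. Frames are forwarded to onFrame rather than stored in state, which avoids re-rendering about 31 times per second at 512 samples and 16 kHz.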

For more comprehensive implementation details, refer to the official React Native Voice Processor Quick Start Documentation, the React Native Voice Processor API Documentation, or explore the React Native Voice Processor repository on GitHub.

Next Steps: Implement Voice AI Features

Now that you can capture microphone audio in React Native, you can implement advanced voice AI capabilities:

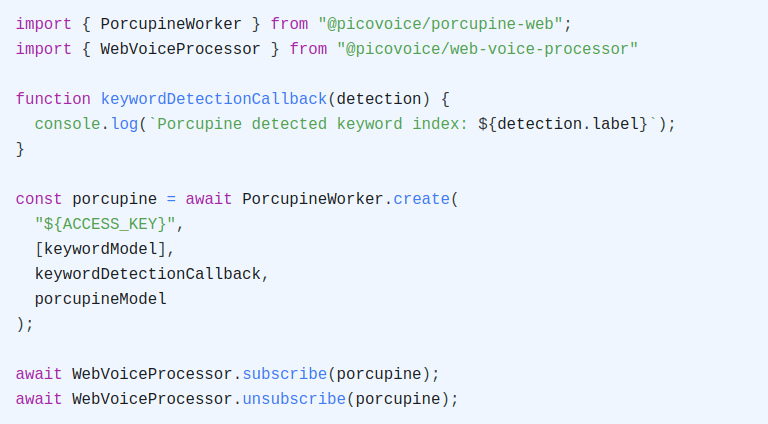

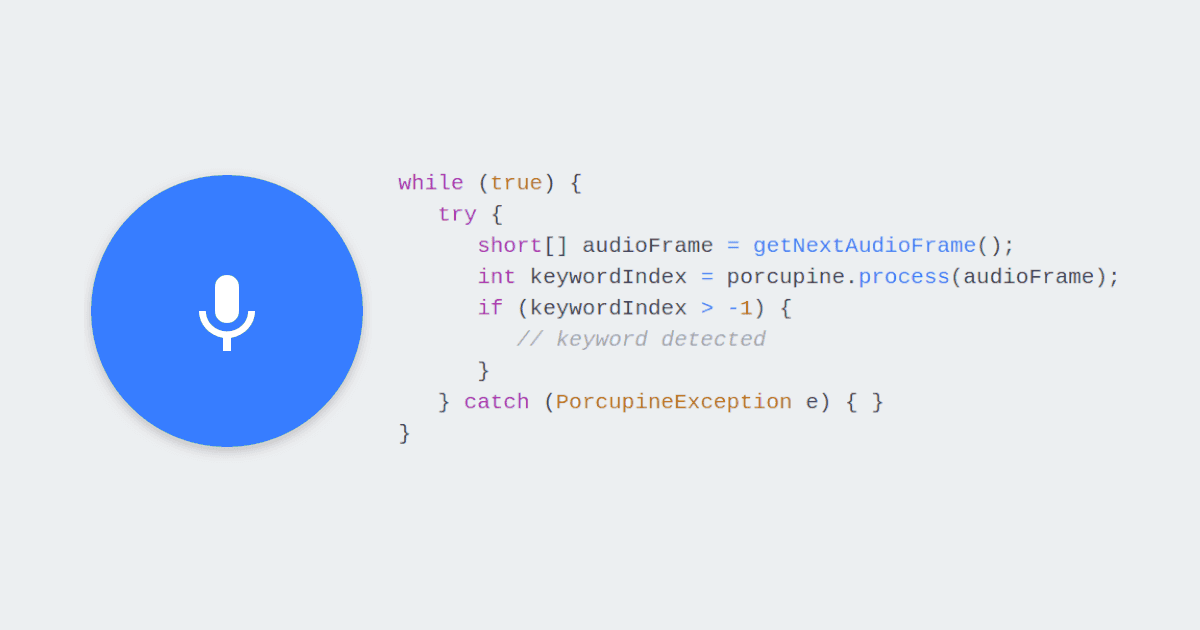

- Wake Word Detection in React Native: Use Porcupine Wake Word to trigger actions in your React Native app with built-in wake words such as "Alexa" or "Hey Siri", or with your own custom wake words.

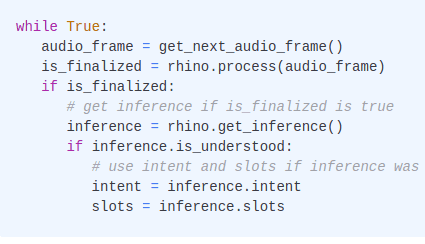

- Speech-to-Intent in React Native: Implement Rhino Speech-to-Intent to understand user voice commands without sending audio to the cloud.

- React Native Speech-to-Text: Add real-time speech transcription with Cheetah Streaming Speech-to-Text for live captioning or voice input.

All these speech recognition features work with the PCM audio frames captured by React Native Voice Processor, enabling fully offline voice AI on iOS and Android.
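Speech engines often expose an asynchronous per-frame processing call, while frames keep arriving every 32 ms at 512 samples and 16 kHz. A frame listener can serialize those calls so frames are processed in order and never concurrently. AsyncFrameEngine and makeSerialFeeder are hypothetical names; the real Porcupine, Rhino, and Cheetah React Native APIs differ, so adapt the process call to the engine you use.

```typescript
type FrameListener = (frame: number[]) => void;

// HYPOTHETICAL engine shape: one asynchronous call per audio frame.
interface AsyncFrameEngine {
  process(frame: number[]): Promise<unknown>;
}

// Builds a frame listener that feeds frames to the engine strictly in arrival
// order, with at most one process() call in flight. A failed frame is reported
// via onError and does not stop later frames from being processed.
function makeSerialFeeder(
  engine: AsyncFrameEngine,
  onError: (error: unknown) => void,
): { listener: FrameListener; idle: () => Promise<void> } {
  let tail: Promise<void> = Promise.resolve();
  const listener: FrameListener = (frame) => {
    tail = tail
      .then(async () => {
        await engine.process(frame);
      })
      .catch(onError);
  };
  // Resolves once every frame queued so far has been processed.
  return { listener, idle: () => tail };
}

// Assumed usage:
// const feeder = makeSerialFeeder(myEngine, console.error);
// voiceProcessor.addFrameListener(feeder.listener);
```

The queue is unbounded, so an engine that is consistently slower than real time will fall further behind. Awaiting idle() before releasing the engine ensures no process() call is still pending.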
