TLDR: This guide explains how to evaluate AI-powered text summarization APIs and SDKs for enterprise-grade applications. You'll learn:

- Key evaluation factors — accuracy, latency, scalability, domain adaptation, and deployment models.

- When to choose on-device summarization vs. cloud-based APIs.

- How quantization and LLM compression enable fast, private, and cost-efficient summarization.

- Top open-source models and commercial APIs, including BART, Pegasus, T5, GPT-4, Claude, Cohere, and Mistral.

What Is AI-Powered Summarization?

AI-powered summarization uses machine learning models to condense lengthy documents or speech transcripts into concise, context-aware summaries.

There are two primary approaches:

- Extractive: selecting key sentences directly from the text

- Abstractive: generating rephrased summaries that capture the intent of the source material
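To make the extractive approach concrete, here is a minimal sketch of classic frequency-based sentence scoring in Python. It is an illustration under simple assumptions (a regex sentence splitter and a tiny, hypothetical stopword list), not how any particular API works. Each sentence is scored by how often its content words appear across the whole document, and the top sentences are returned verbatim in their original order.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption); real systems use a fuller list.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for",
             "is", "are", "was", "were", "it", "this", "that", "with", "as", "by"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def content_words(text: str) -> list[str]:
    """Lowercase alphabetic tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Return the highest-scoring sentences, copied verbatim, in document order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))  # document-level word frequencies

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not automatically favored.
        return sum(freq[t] for t in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original order
    return " ".join(sentences[i] for i in keep)
```

Because every output sentence is copied from the input, this style cannot invent facts, although it can still pick unrepresentative sentences.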

If you're new to the subject, see our introductory articles on Natural Language Processing, Large Language Models, and Transformer Models.

Why Enterprises Use Summarization

Enterprise developers use text summarization tools to handle information overload, accelerate decision-making, and maintain consistency across large datasets.

Common enterprise use cases:

- Customer support: Summarize thousands of support tickets daily.

- Research and analysis: Extract insights from lengthy reports.

- Meetings and calls: Generate action-item summaries automatically.

- Legal and compliance: Condense long case files or regulatory documents.

AI summarization APIs allow teams to gain real-time insights without reading or transcribing every word.

What Matters Most When Choosing a Summarization API

1. Accuracy and Hallucination Risks

When evaluating summarization APIs, test accuracy and reliability first. Abstractive summarization reads more naturally but can hallucinate facts. Extractive summarization only reuses sentences from the source text, so it can be safer for regulated industries.

Summarization API Evaluation Tip: Always test summarization output against real company documents and verify entity accuracy (names, dates, numbers).
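Part of this check can be automated by flagging numbers and names that appear in a summary but never in the source. The sketch below is a heuristic under stated assumptions: the regexes and the `unsupported_entities` helper are hypothetical, numbers are compared exactly, and names are approximated as runs of two or more capitalized words. A production pipeline would more likely use a proper named-entity recognizer.

```python
import re

# Heuristic patterns (assumptions, not a standard): numbers such as 1,200 / 3.5 / 40%,
# and names approximated as two or more consecutive capitalized words.
NUMBER_RE = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?%?")
NAME_RE = re.compile(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b")


def unsupported_entities(source: str, summary: str) -> dict[str, list[str]]:
    """Numbers and names in the summary that do not occur in the source:
    candidates for hallucinated facts."""
    source_numbers = set(NUMBER_RE.findall(source))
    missing_numbers = [n for n in NUMBER_RE.findall(summary) if n not in source_numbers]
    missing_names = [n for n in NAME_RE.findall(summary) if n not in source]
    return {"numbers": missing_numbers, "names": missing_names}
```

Anything returned is a candidate hallucination to route to human review; an empty result does not prove the summary is correct.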

For Large Language Model evaluation frameworks, see Evaluating Large Language Models (LLMs).

2. Domain Adaptation

Generic summarization models often fail in specialized domains and subjects, such as:

- Healthcare

- Legal

- Finance

One of the most downloaded summarization models on Hugging Face is falconsai/medical_summarization, which performs better on clinical text than general-purpose summarizers.

Summarization API checklist for domain adaptation:

- Do you need domain adaptation for your use case?

- Was the model trained or fine-tuned on data from your domain?

- Does the vendor support domain-specific adaptation?
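A practical way to answer these questions is to score candidate models on a small set of your own documents paired with human-written reference summaries. The sketch below uses a simplified ROUGE-1 F1 (unigram overlap with lowercase word tokenization) rather than the official `rouge-score` package; `compare_on_domain` and the model callables are hypothetical stand-ins for whichever APIs or local models you are testing.

```python
import re
from collections import Counter
from typing import Callable


def rouge1_f1(reference: str, candidate: str) -> float:
    """Simplified ROUGE-1: F1 of clipped unigram overlap."""
    ref = Counter(re.findall(r"\w+", reference.lower()))
    cand = Counter(re.findall(r"\w+", candidate.lower()))
    overlap = sum((ref & cand).values())  # each word counted at most min(ref, cand) times
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def compare_on_domain(
    models: dict[str, Callable[[str], str]],
    samples: list[tuple[str, str]],
) -> dict[str, float]:
    """Mean ROUGE-1 F1 per model over (document, reference_summary) pairs from your domain."""
    return {
        name: sum(rouge1_f1(ref, summarize(doc)) for doc, ref in samples) / len(samples)
        for name, summarize in models.items()
    }
```

Overlap metrics reward wording similarity, not factual correctness, so pair them with the entity check and a human review of a sample.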

3. Latency: Real-Time vs. Batch Processing

Real-time call summarization is a very different use case from batch processing of archived documents, and each use case's latency requirements dictate the architecture.

Measure:

- Latency: 95th-percentile (p95) response time for a typical document size

- Throughput: how many summaries per minute/hour can be processed
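A minimal sketch of both measurements in Python, assuming `summarize` is a hypothetical callable wrapping whichever API or local model you are testing. It sends documents one at a time, so the throughput figure reflects a single sequential client; for cloud APIs you would also measure under concurrent load. The percentile uses the nearest-rank method.

```python
import math
import time
from typing import Callable


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def benchmark(summarize: Callable[[str], str], documents: list[str]) -> dict[str, float]:
    """Summarize each document sequentially and record wall-clock latency per call."""
    latencies = []
    start = time.perf_counter()
    for doc in documents:
        t0 = time.perf_counter()
        summarize(doc)
        latencies.append(time.perf_counter() - t0)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero-length timer reading
    return {
        "p95_latency_s": percentile(latencies, 95),
        "throughput_per_min": len(documents) / elapsed * 60,
    }
```

Use documents of realistic length from your own workload; latency for abstractive models grows with both input and output length.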

For real-time applications, avoid cloud APIs with inconsistent response times.

4. Cost at Scale

Evaluate the total cost of ownership by calculating:

- Compute costs for self-hosted or on-device inference vs. API pricing

- Engineering time for maintenance and updates

- Licensing costs at your expected volume

Guideline: Use cloud APIs for quick and easy MVPs. Consider on-device for real-time apps and on-prem for batch processing at scale.
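The API-versus-self-hosting trade-off can be framed as a break-even volume: a fixed monthly cost for self-hosting against a per-document API price. The sketch below is a simplified model with hypothetical inputs (the `CostModel` class and all example prices are assumptions, not vendor quotes), and it does not model on-device deployment, where inference runs on hardware your users already own.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    """Monthly cost inputs; replace every number with real quotes and estimates."""
    api_price_per_1k_docs: float   # per-call API pricing
    selfhost_fixed_monthly: float  # servers, licenses, engineering time for maintenance
    selfhost_per_1k_docs: float    # marginal compute per 1,000 docs when self-hosting

    def api_cost(self, docs_per_month: int) -> float:
        return docs_per_month / 1000 * self.api_price_per_1k_docs

    def selfhost_cost(self, docs_per_month: int) -> float:
        return self.selfhost_fixed_monthly + docs_per_month / 1000 * self.selfhost_per_1k_docs

    def break_even_docs(self) -> float:
        """Monthly volume above which self-hosting is cheaper than the API."""
        margin = self.api_price_per_1k_docs - self.selfhost_per_1k_docs
        return float("inf") if margin <= 0 else self.selfhost_fixed_monthly / margin * 1000


model = CostModel(api_price_per_1k_docs=2.0, selfhost_fixed_monthly=3000.0, selfhost_per_1k_docs=0.5)
```

With these made-up numbers ($2 per 1,000 documents via API versus $3,000 per month fixed plus $0.50 per 1,000 documents self-hosted), the API is cheaper below 2 million documents per month and self-hosting is cheaper above it.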

5. On-Device vs. Cloud: The Deployment Decision

This decision often gets overlooked until teams hit real-world constraints: poor reception in remote locations, data privacy regulations, or inference costs spiraling out of control.

Key concept: Quantization

Quantization compresses large language models (LLMs) by lowering the numerical precision of their weights, which reduces model size, ideally with no accuracy loss. Smaller model footprints result in faster inference and lower power usage. Prioritize on-device summarization if you're building medical devices, automotive systems, industrial equipment, or privacy-sensitive applications, such as transcribing meetings that involve trade secrets.

Popular quantization algorithms from the research community include GPTQ, AWQ, LLM.int8(), and SqueezeLLM; see also Picovoice's approach in Towards Optimal LLM Quantization.
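To show what lowering precision means mechanically, here is a minimal sketch of naive per-tensor, symmetric round-to-nearest quantization in plain Python. It is deliberately the simple baseline, not GPTQ, AWQ, LLM.int8(), SqueezeLLM, or Picovoice's method, and the helper names are hypothetical. Each weight is divided by a shared scale and rounded to a small signed integer; at inference time the integer is multiplied back by the scale.

```python
def quantize_symmetric(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate the original floats from the integers and the scale."""
    return [v * scale for v in q]


# Typical weights: reconstruction error stays within half a quantization step.
weights = [0.12, -0.5, 0.33, 0.01, -0.07, 0.49]
q, scale = quantize_symmetric(weights, bits=4)
restored = dequantize(q, scale)

# One large outlier stretches the shared scale, so the small weights collapse to zero.
q_outlier, _ = quantize_symmetric([0.01, -0.02, 0.03, 10.0], bits=4)
```

The outlier case illustrates why naive quantization can hurt accuracy; the algorithms listed above exist largely to handle outliers and sensitive weights more carefully.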

Summarization AI Model Landscape

Open Source Models

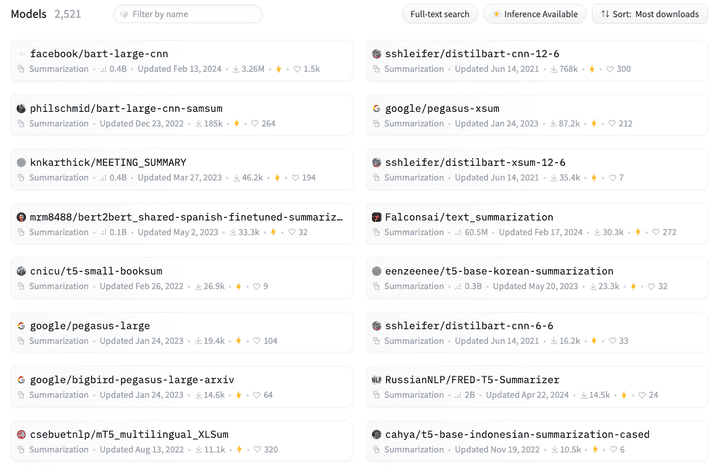

At the time of writing, there are over 2,500 summarization models available on Hugging Face, 16 of which have been downloaded more than 10,000 times. The most popular ones:

- BART (facebook/bart-large-cnn) - Downloaded over 3.25M times as of the end of October 2025. It's the BART-large model fine-tuned on the CNN/Daily Mail dataset, and it's solid for news articles and general documents. The PyTorch and TensorFlow weights are ~1.6GB at full precision (FP32) but can be quantized significantly.

- Pegasus (google/pegasus-large, google/pegasus-xsum, google/bigbird-pegasus-large-arxiv, google/pegasus-cnn_dailymail) - Pegasus is Google's transformer model for abstractive summarization. Besides the base model, variants fine-tuned on news (CNN/Daily Mail, the same dataset as BART), scientific papers from arXiv, and XSum (Extreme Summarization) are popular choices among machine learning practitioners.

- T5 (google/flan-t5-base) - T5 is another Google model. As a general text-to-text model, it is more flexible than BART but requires more tuning for summarization. The base model is ~900MB, and the "small" version (~250MB) is popular for resource-constrained environments.
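For a quick start with any of these models, the Hugging Face `transformers` library provides a `pipeline("summarization", ...)` helper; the generation settings below mirror the example on the facebook/bart-large-cnn model card. BART accepts at most 1,024 input tokens, so long documents need chunking. This is a minimal sketch: `chunk_words` and `summarize_long` are hypothetical helpers, the 600-word chunk size is an assumed conservative budget rather than an exact token count, and summarizing chunks independently loses cross-chunk context.

```python
def chunk_words(text: str, max_words: int = 600) -> list[str]:
    """Split text into word-bounded chunks. 600 words is an assumed budget meant to
    stay under BART's 1,024-token limit; verify it with the model's tokenizer."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, model: str = "facebook/bart-large-cnn") -> str:
    """Summarize each chunk with a Hugging Face pipeline and join the partial summaries."""
    # Imported lazily so chunk_words works without it; requires `pip install transformers torch`.
    from transformers import pipeline

    summarizer = pipeline("summarization", model=model)
    # A list input returns one {"summary_text": ...} dict per chunk.
    outputs = summarizer(chunk_words(text), max_length=130, min_length=30, do_sample=False)
    return " ".join(o["summary_text"] for o in outputs)
```

Swapping `model` for a Pegasus or FLAN-T5 checkpoint works the same way, although each model has its own input limit and may need different generation settings.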

For a broad view of open-source LLM ecosystems, see Best Open Source Language Models.

The Quantization Factor for Summarization Models: Running Models On-Device

The popular Hugging Face models above are distributed as full-precision (FP32) weights. For cloud inference that's workable, although at high volume the compute cost and energy use add up. For on-device deployment on mobile apps, embedded systems, and IoT devices, full-precision models are impractical. LLM quantization solves this by reducing the precision of model weights from 32-bit floating point to lower-bit representations. Done correctly, large summarization models can be compressed to below 4 bits per weight with negligible accuracy loss, an 8x or greater size reduction.
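The size arithmetic behind the 8x figure is simple: weight storage is roughly the parameter count times bits per weight, divided by 8 bits per byte. The sketch below assumes about 400M parameters, which is consistent with the ~1.6GB FP32 figure for BART above. Real quantized files are somewhat larger, because they also store scales and often keep some layers at higher precision; `model_size_mb` is a hypothetical helper.

```python
def model_size_mb(num_params: int, bits_per_weight: float) -> float:
    """Approximate weight storage in MB (1 MB = 1e6 bytes), ignoring metadata,
    quantization scales, and layers kept at higher precision."""
    return num_params * bits_per_weight / 8 / 1e6


# Roughly BART-large-sized model (assumed ~400M parameters).
for bits in (32, 8, 4, 3):
    print(f"{bits:>2}-bit: {model_size_mb(400_000_000, bits):,.0f} MB")
```

Going from 32 to 4 bits divides storage by 32 / 4 = 8; anything below 4 bits goes further.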

The catch: Not all quantization is created equal. Naive quantization can destroy model accuracy.

When on-device deployment matters, evaluate the compression algorithm alongside the summarization model. Typical use cases:

- Medical devices: Summarizing patient data locally for HIPAA compliance

- Automotive: Real-time voice command summarization without cellular connectivity

- Industrial IoT: Equipment maintenance logs summarized at the edge

- Mobile apps: Meeting note summaries without draining battery or data plans

- Privacy-sensitive applications: Financial documents, legal briefs, confidential business data

Commercial Text Summarization APIs

- OpenAI (Chat Completions API) - GPT-4 and GPT-3.5 with prompt engineering. Handles any summarization style and is strong at complex reasoning tasks. Use Structured Outputs for consistent formatting.

- Anthropic Claude - Claude Sonnet 4 via API. Handles long documents (200K-token context), follows detailed instructions well, and is good at extracting specific types of information.

- Cohere Summarize - Dedicated summarization endpoint. Designed specifically for this task, multiple summary lengths, extractive and abstractive modes available.

- Mistral API - Cost-effective European alternative. Good performance-to-price ratio, strong multilingual capabilities, GDPR-friendly infrastructure.
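As an example of prompt-based summarization with a general-purpose LLM API, here is a minimal sketch using the official `openai` Python SDK's Chat Completions call. The prompt wording, the `build_messages` and `summarize_with_openai` helpers, and the bullet-list format are illustrative assumptions; pass whichever model name your account offers. Other providers' chat APIs accept similar role-tagged messages, though details differ (Anthropic's Messages API, for example, takes the system prompt as a separate parameter).

```python
def build_messages(document: str, max_bullets: int = 5) -> list[dict[str, str]]:
    """Role-tagged chat messages asking for a short summary grounded in the document."""
    system = (
        "You summarize documents. Use only facts stated in the document. "
        f"Return at most {max_bullets} bullet points, each starting with '- '."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": document},
    ]


def summarize_with_openai(document: str, model: str) -> str:
    """Send the messages to the Chat Completions API and return the summary text."""
    # Lazy import: requires `pip install openai` and the OPENAI_API_KEY environment variable.
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=build_messages(document))
    return response.choices[0].message.content
```

Keeping the prompt in one builder function makes it easy to run the same instructions against several vendors during evaluation.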

See Retrieval-Augmented Generation to learn how these APIs can incorporate external context.

Decision Framework for Selecting a Summarization API

To choose the right summarization API, ask:

- Where does inference happen? (Cloud or device)

- What's your monthly volume?

- What's your data sensitivity level?

- Do you need domain-specific summarization?

- What are your latency and cost constraints?
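The deployment questions above can be encoded as a first-pass rule of thumb. This sketch follows the guidelines given earlier in this article (cloud APIs for MVPs, on-device for real-time, offline, or privacy-sensitive work, on-prem for batch processing at scale). The `Requirements` fields and the one-million-documents threshold are hypothetical placeholders, and domain fit still needs to be checked separately.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    needs_offline: bool     # poor connectivity: vehicles, field equipment, remote sites
    sensitive_data: bool    # e.g. patient data, trade secrets, legal briefs
    real_time: bool         # live calls or meetings rather than archived batches
    monthly_docs: int
    high_volume_threshold: int = 1_000_000  # assumed cut-off; derive it from your cost model


def recommend_deployment(req: Requirements) -> str:
    """Rough mapping from requirements to a deployment model."""
    if req.needs_offline or req.sensitive_data or req.real_time:
        return "on-device"
    if req.monthly_docs >= req.high_volume_threshold:
        return "on-prem"  # batch processing at scale
    return "cloud API"    # quick MVPs and moderate volume
```

Treat the output as a starting point for discussion, not a final architecture decision.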

Conclusion

Hugging Face download numbers tell a story. BART and Pegasus are still trending among open-source enthusiasts because they're well-documented and "good enough" for many use cases. The specialized models (medical, meeting notes) show that domain adaptation matters more than raw model size. But update dates matter too: these models date from 2021-2022, the pre-ChatGPT era. The jump in quality from modern LLMs is substantial, especially for complex reasoning and instruction-following.

The future points toward on-device summarization, which balances speed, privacy, and scalability. With quantization, even small devices can run sophisticated summarizers that outperform cloud APIs in latency-sensitive environments. If you're exploring on-device deployment, reach out to Picovoice's on-device AI experts for fine-tuned, compressed, edge-optimized models.
