Voice Activity Detection (VAD) is a core building block for speech and audio systems, used to determine when human speech is present in an audio stream. In low-level and embedded environments, developers often need a real-time, offline, on-device voice detection solution implemented in C that works reliably across operating systems (Linux, Windows, macOS, and Raspberry Pi) and hardware targets.

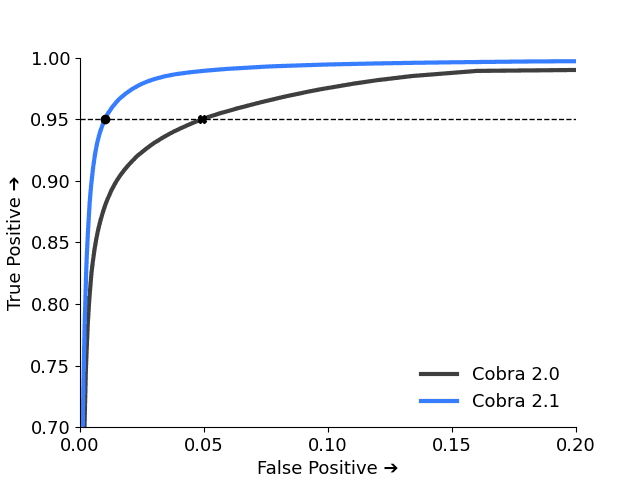

Many developers evaluate existing solutions such as WebRTC VAD, Silero VAD, or cloud-based speech detection services, each with trade-offs in accuracy, performance, and deployment complexity. This tutorial focuses on Cobra Voice Activity Detection, an on-device VAD engine with published benchmark results for accuracy and real-time performance, and a C API designed for cross-platform deployment.

You will learn how to capture microphone audio, process fixed-size PCM frames, and compute per-frame voice probability in real time with Cobra VAD. The result is a portable voice detection C application suitable for embedded systems, desktop applications, and edge deployments.

Important: This guide builds on How to Record Audio in C. If you haven't completed that setup yet, start with that tutorial to get your recording environment in place.

Prerequisites

- C99-compatible compiler

- Windows: MinGW

Supported Platforms

- Linux (x86_64)

- macOS (x86_64, arm64)

- Windows (x86_64, arm64)

- Raspberry Pi (Zero, 3, 4, 5)

Part 1. Set Up Your C VAD Project Structure

This is the folder structure used in this tutorial. You can organize your files differently if you like, but make sure to update the paths in the examples accordingly:

To set up audio capture, refer to: How to Record Audio in C.

Add Cobra library files

- Create a folder named cobra/.

- Download the Cobra header files from GitHub and place them in:

- Download a Cobra model file and the correct library file for your platform and place them in:

Part 2. Cross-Platform Dynamic Library Loading for VAD in C

Cobra VAD distributes pre-built platform libraries, meaning:

- the shared library (.so, .dylib, .dll) is not linked at compile time

- the program loads it at runtime

- functions must be retrieved by name

So, we need to write small helper functions to:

- open the shared library

- look up function pointers

- close the shared library

Include platform-specific headers

Understanding the headers:

- On Windows systems, windows.h provides the LoadLibrary function to load a shared library and GetProcAddress to retrieve individual function pointers.

- On Unix-based systems, dlopen and dlsym from the dlfcn.h header provide the same functionality.

- signal.h allows us to handle Ctrl-C later in this example.
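As a rough illustration of the Ctrl-C handling that signal.h makes possible, the sketch below installs a SIGINT handler that only sets a flag, which the audio loop can poll between frames. The names is_interrupted, interrupt_handler, install_interrupt_handler, and should_stop are hypothetical and not taken from the Cobra demo.

```c
#include <signal.h>
#include <stdbool.h>

/* Written from the signal handler. volatile sig_atomic_t is the object type
 * the C standard guarantees can be safely assigned inside a handler. */
static volatile sig_atomic_t is_interrupted = 0;

/* Hypothetical handler: records that Ctrl-C was pressed and does nothing else. */
void interrupt_handler(int signum) {
    (void) signum;
    is_interrupted = 1;
}

/* Installs the handler for SIGINT; returns false if signal() reports an error. */
bool install_interrupt_handler(void) {
    return signal(SIGINT, interrupt_handler) != SIG_ERR;
}

/* The processing loop can call this between frames to decide when to exit. */
bool should_stop(void) {
    return is_interrupted != 0;
}
```

Keeping the handler down to a single assignment leaves cleanup (stopping the recorder, releasing the engine, closing the library) to the main loop, where it is safe to call arbitrary functions.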

Define dynamic loading helper functions

Open the shared library

Load function symbols

Close the dynamic library

Print platform-correct errors
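The four helpers described in this part could look roughly like the following. This is a minimal sketch, not the tutorial's original listing: the function names open_dl, load_symbol, close_dl, and print_dl_error are assumptions chosen for illustration, and only the standard LoadLibrary/GetProcAddress/FreeLibrary and dlopen/dlsym/dlclose/dlerror calls are relied on.

```c
#include <stdio.h>

#if defined(_WIN32) || defined(_WIN64)
#include <windows.h>
#else
#include <dlfcn.h>
#endif

/* Opens a shared library by path; returns NULL on failure. */
void *open_dl(const char *path) {
#if defined(_WIN32) || defined(_WIN64)
    return (void *) LoadLibraryA(path);
#else
    return dlopen(path, RTLD_NOW);
#endif
}

/* Looks up an exported symbol by name; returns NULL if it is not found. */
void *load_symbol(void *handle, const char *symbol) {
#if defined(_WIN32) || defined(_WIN64)
    return (void *) GetProcAddress((HMODULE) handle, symbol);
#else
    return dlsym(handle, symbol);
#endif
}

/* Releases a handle returned by open_dl; a NULL handle is ignored. */
void close_dl(void *handle) {
    if (handle == NULL) {
        return;
    }
#if defined(_WIN32) || defined(_WIN64)
    FreeLibrary((HMODULE) handle);
#else
    dlclose(handle);
#endif
}

/* Prints the most recent loader error in the form each platform provides:
 * a numeric code on Windows, a message string on Unix-like systems. */
void print_dl_error(const char *message) {
#if defined(_WIN32) || defined(_WIN64)
    fprintf(stderr, "%s (error code %lu)\n", message, (unsigned long) GetLastError());
#else
    const char *reason = dlerror();
    fprintf(stderr, "%s: %s\n", message, reason != NULL ? reason : "unknown error");
#endif
}
```

On Linux systems with an older C library, the dlopen family may live in a separate library, in which case the build needs -ldl.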

Load the library file

Download the correct library file for your platform and point library_path to it.

Load dynamic library functions

Load all required function symbols for Cobra Voice Activity Detection:
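One way to keep this step tidy is to collect each lookup result next to its name and check the whole set at once, so a missing export is reported by name before anything is called. The sketch below assumes a hypothetical resolved_symbol struct and first_missing_symbol helper. The symbol names in the comment include pv_cobra_delete and pv_status_to_string, which are assumed here rather than named in this tutorial, and load_symbol stands for whatever lookup helper you defined earlier.

```c
#include <stddef.h>

/* A symbol name paired with the address the loader returned for it
 * (NULL when the lookup failed). */
typedef struct {
    const char *name;
    void *address;
} resolved_symbol;

/* Returns the name of the first symbol that failed to resolve,
 * or NULL if every entry has an address. */
const char *first_missing_symbol(const resolved_symbol *symbols, size_t count) {
    for (size_t i = 0; i < count; i++) {
        if (symbols[i].address == NULL) {
            return symbols[i].name;
        }
    }
    return NULL;
}

/* Intended use (names are assumptions, see the paragraph above):
 *
 *   resolved_symbol symbols[] = {
 *       {"pv_cobra_init",         load_symbol(lib, "pv_cobra_init")},
 *       {"pv_cobra_process",      load_symbol(lib, "pv_cobra_process")},
 *       {"pv_cobra_frame_length", load_symbol(lib, "pv_cobra_frame_length")},
 *       {"pv_cobra_delete",       load_symbol(lib, "pv_cobra_delete")},
 *       {"pv_status_to_string",   load_symbol(lib, "pv_status_to_string")},
 *   };
 *   const char *missing = first_missing_symbol(symbols, 5);
 *   if (missing != NULL) { report the name and exit }
 */
```

After the check passes, each address is cast to a function pointer type matching the declaration in the Cobra header before it is called.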

Part 3. Implement Real-Time Voice Activity Detection (VAD) in C

Now that we've set up dynamic loading, we can implement the Cobra Voice Activity Detection API.

Initialize Cobra VAD

- Sign up for an account on Picovoice Console for free and obtain your AccessKey

- Replace ${ACCESS_KEY} with your AccessKey

Call pv_cobra_init to create a Cobra VAD instance:

The device parameter lets you choose what hardware the engine runs on.

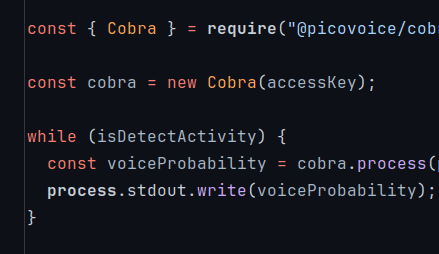

Detect voice activity

Capture audio with PvRecorder and pass the recorded audio frames to Cobra VAD for voice detection:

Explanation:

- pv_cobra_frame_length: Required number of samples per frame.

- pv_cobra_process: Analyzes an audio frame and returns a voice probability score between 0.0 and 1.0.

- voice_probability: A value closer to 1.0 indicates high confidence that voice is present; closer to 0.0 indicates silence or non-speech audio.
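To make the per-frame score easier to watch in a terminal, the probability can be rendered as a small text bar. The helper below is a hypothetical illustration (format_voice_meter is not part of the Cobra API). It only assumes a probability in the 0.0 to 1.0 range described above and clamps anything outside it.

```c
#include <stddef.h>

/* Fills `out` with a bar such as "[#####     ]" for a probability in [0, 1].
 * `out` must have room for at least width + 3 bytes.
 * Values outside the range (including NaN) are clamped. */
void format_voice_meter(float voice_probability, size_t width, char *out) {
    if (!(voice_probability >= 0.0f)) {
        voice_probability = 0.0f; /* also catches NaN */
    }
    if (voice_probability > 1.0f) {
        voice_probability = 1.0f;
    }

    /* Round to the nearest whole number of filled cells. */
    size_t filled = (size_t) (voice_probability * (float) width + 0.5f);

    out[0] = '[';
    for (size_t i = 0; i < width; i++) {
        out[1 + i] = (i < filled) ? '#' : ' ';
    }
    out[width + 1] = ']';
    out[width + 2] = '\0';
}
```

Printing the bar with a leading carriage return, for example with printf("\r%s", bar) followed by fflush(stdout), redraws it in place as each new frame is scored.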

Cleanup Resources

Full Working Example: C Voice Detection Application Code

Here is the complete cobra_tutorial.c file you can copy, build, and run (complete with error handling and PvRecorder implementation):

cobra_tutorial.c

Before running, update:

- COBRA_LIB_PATH to point to the correct Cobra VAD library for your platform

- PVRECORDER_LIB_PATH to point to the correct PvRecorder library for your platform

- PICOVOICE_ACCESS_KEY with your AccessKey from Picovoice Console

This is a simplified example but includes all the necessary components to get started. Check out the Cobra C demo on GitHub for a complete demo application.

Compile and Run Your C Voice Activity Detection Application

Build and run the application:

Linux (gcc) and Raspberry Pi (gcc)

macOS (clang)

Windows (MinGW)

Conclusion

You now have a complete cross-platform voice activity detection implementation in C that works on Linux, Windows, macOS, and Raspberry Pi. The dynamic loading approach gives you flexibility to deploy the same codebase across all supported platforms without modification.

This tutorial covered the fundamentals: setting up the Cobra VAD library, implementing platform-specific dynamic loading, processing audio frames for voice detection, and interpreting probability scores. You can build on this foundation by integrating Cobra into:

- Voice-controlled applications that respond only when someone is speaking

- Real-time speech-to-text systems (or use Cheetah Streaming Speech-to-Text, which already includes built-in voice activity detection)

- Noise suppression tools that clean only speech segments

- Other efficient speech-processing pipelines that activate exclusively when voice is detected

Troubleshooting Common VAD Issues

VAD consistently reports low voice probability

Make sure the input audio matches the format required by the VAD engine. Cobra VAD expects 16 kHz, 16-bit linear PCM, with frames exactly the length returned by pv_cobra_frame_length. Using a different sample rate or incorrect frame size often results in poor detection performance.
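A quick sanity check on the input format can rule out the most common causes before you dig deeper. The sketch below uses a hypothetical audio_format struct and helper names. The single-channel check is an assumption added here (this section only states the sample rate and bit depth), and the required sample rate is passed in rather than hard-coded.

```c
#include <stddef.h>
#include <stdint.h>

/* Description of the audio you are about to feed to the engine. */
typedef struct {
    int32_t sample_rate;     /* in Hz */
    int32_t bits_per_sample; /* bit depth of each sample */
    int32_t num_channels;    /* 1 for mono */
} audio_format;

/* Returns NULL when the format looks right, otherwise a short description
 * of the first mismatch found. */
const char *describe_format_problem(const audio_format *format, int32_t required_sample_rate) {
    if (format->sample_rate != required_sample_rate) {
        return "sample rate does not match the engine";
    }
    if (format->bits_per_sample != 16) {
        return "samples are not 16-bit PCM";
    }
    if (format->num_channels != 1) {
        return "audio is not single-channel"; /* assumption, see lead-in */
    }
    return NULL;
}

/* Number of complete frames in a buffer. Trailing samples that do not fill
 * a whole frame are not counted and should not be passed to the engine. */
int32_t count_full_frames(int32_t num_samples, int32_t frame_length) {
    if (num_samples < 0 || frame_length <= 0) {
        return 0;
    }
    return num_samples / frame_length;
}
```

In a real program, frame_length would come from pv_cobra_frame_length, and the format fields from your recorder settings or the header of the file being read.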

Compilation fails due to missing headers

Check that all include paths in your compiler command correspond to your folder structure. The -I flags should reference the directories that contain the header files, not the files themselves. Also, confirm that all required headers have been downloaded and placed correctly.

Access key authentication errors

If initialization fails with an authentication error, ensure that you've replaced the placeholder AccessKey with your actual key from Picovoice Console.

Frequently Asked Questions

Can Cobra VAD process pre-recorded audio files?

Yes. Read the audio file in fixed-size frames and process each frame sequentially, just as you would with live microphone input.
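A minimal sketch of the file-reading side, assuming raw headerless 16-bit PCM stored in the machine's native byte order: read_pcm_frame is a hypothetical helper, and a WAV file would need its header parsed or skipped first.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Reads exactly one frame of 16-bit samples from `file` into `frame`.
 * Returns 1 when a full frame was read, and 0 at end of data or when fewer
 * than frame_length samples remain (the partial frame is discarded). */
int read_pcm_frame(FILE *file, int16_t *frame, size_t frame_length) {
    return fread(frame, sizeof(int16_t), frame_length, file) == frame_length;
}

/* Intended use, with frame_length taken from pv_cobra_frame_length:
 *
 *   while (read_pcm_frame(file, frame, frame_length)) {
 *       pass `frame` to pv_cobra_process and record the probability
 *   }
 */
```

Dropping the final partial frame keeps every call at the exact frame length the engine requires. Padding the tail with zeros is an alternative if the last few milliseconds matter.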

How do I turn the voice probability into a speech or no-speech decision?

A common approach is to treat the VAD output as a probability and apply a threshold (for example, 0.5) based on your tolerance for false positives versus missed speech.
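The thresholding step itself is a one-line comparison. The sketch below adds a small counting helper that can be run over recorded probabilities to see how many frames different thresholds would accept. Both function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* A frame counts as voiced when its probability reaches the threshold. */
bool is_voiced(float voice_probability, float threshold) {
    return voice_probability >= threshold;
}

/* Counts how many recorded probabilities would be classified as voiced.
 * Running this with several thresholds over the same recording shows how
 * the choice trades false positives against missed speech. */
size_t count_voiced_frames(const float *probabilities, size_t count, float threshold) {
    size_t voiced = 0;
    for (size_t i = 0; i < count; i++) {
        if (is_voiced(probabilities[i], threshold)) {
            voiced++;
        }
    }
    return voiced;
}
```

A lower threshold accepts more frames (fewer missed words, more noise let through), while a higher one does the opposite.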

How do I use Cobra VAD together with a speech-to-text engine?

Use Cobra to detect voice activity, then only send audio segments with detected speech to your STT engine. This reduces processing costs and improves efficiency. You can combine Cobra with Cheetah Streaming Speech-to-Text for a complete voice processing pipeline.
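One possible shape for such a gate is sketched below. It forwards a frame when its probability reaches the threshold and keeps forwarding for a few frames afterwards, so word endings and short pauses are not cut off. The hangover period is an addition made for this illustration, not something this tutorial prescribes, and speech_gate and its functions are hypothetical names.

```c
#include <stdbool.h>
#include <stdint.h>

typedef struct {
    float threshold;          /* probability at or above which a frame counts as speech */
    int32_t hangover_frames;  /* extra frames to forward after speech drops below threshold */
    int32_t frames_remaining; /* countdown of hangover frames still to forward */
} speech_gate;

/* Prepares a gate that starts closed. */
void speech_gate_init(speech_gate *gate, float threshold, int32_t hangover_frames) {
    gate->threshold = threshold;
    gate->hangover_frames = hangover_frames;
    gate->frames_remaining = 0;
}

/* Call once per frame with that frame's voice probability.
 * Returns true if the frame should be sent on to the STT engine. */
bool speech_gate_update(speech_gate *gate, float voice_probability) {
    if (voice_probability >= gate->threshold) {
        /* Speech detected: forward and restart the hangover countdown. */
        gate->frames_remaining = gate->hangover_frames;
        return true;
    }
    if (gate->frames_remaining > 0) {
        /* Below threshold, but still inside the hangover window. */
        gate->frames_remaining--;
        return true;
    }
    return false;
}
```

With a hangover of 0 the gate reduces to a plain per-frame threshold. Larger values trade a little extra audio sent to the STT engine for fewer clipped words.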