TLDR: When evaluating wake word accuracy and wake word benchmarks, raw "accuracy" numbers are meaningless without context. Always examine the False Rejection Rate (FRR) at a fixed False Acceptance Rate (FAR), such as one false activation per hour, along with the test methodology. Reliable vendors provide transparent, reproducible benchmark data, including methodology and test conditions, instead of cherry-picked observations.

Why Wake Word Benchmarks Matter

Vendors often advertise "best-in-class" wake word accuracy, but these claims rarely reflect real-world performance, which is one reason voice AI demos that look flawless often fail in production. Many factors, such as audio quality, environmental noise, microphone distance, and wake word choice, significantly affect performance, and each one gives vendors an opportunity to tilt results in their favor. Understanding wake word benchmarks helps you separate marketing hype from actual performance.

Many factors affect wake word engine performance: audio quality, data diversity, environment, methodology, and wake word choice.

This guide breaks down the most common tricks vendors use to inflate their accuracy scores and teaches you exactly what questions to ask before you trust their claims.

Table of Contents

- The "99% Accurate" Trap

- Common Vendor Tricks (and How to Spot Them)

- 5 Simple Questions to Ask to Verify Vendor Claims

- Signs to Watch for

- What You Can Do

1. The "99% Accurate" Trap

Let's start with the most common misleading claim: "99% accurate."

What does this even mean?

When a vendor says their wake word engine is "99% accurate," they could mean:

- 99% of wake word utterances are correctly detected (1% False Rejection Rate)

- 99% of non-wake-word utterances don't trigger activation (1% False Acceptance Rate)

- 99% of all audio samples (wake word + non-wake-word) are classified correctly

- Something else entirely that sounds good when used in marketing

These are completely different metrics with vastly different implications. Consider two engines that could both be marketed as "99% accurate."

Engine A with more false activations and fewer misses:

- False Rejection Rate: 1% (misses 1 out of 100 wake word utterances)

- False Acceptance Rate: 5 per hour (activates 5 times per hour when it shouldn't)

Engine B with fewer false activations and more misses:

- False Rejection Rate: 10% (misses 10 out of 100 wake word utterances)

- False Acceptance Rate: 0.1 per hour (activates incorrectly once every 10 hours)

Both can technically claim "99% accuracy" depending on how they measure it. But their real-world performance is drastically different.
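To see how both engines can land above "99%", here is a rough sketch. It assumes a hypothetical scoring scheme in which audio is cut into one-second windows and every window counts as one classification. The figure of 100 wake word utterances per hour is also an assumption for illustration, not a number from any benchmark.

```python
def window_accuracy(hours, utterances_per_hour, frr, false_alarms_per_hour):
    """Share of one-second windows classified correctly (hypothetical scoring scheme)."""
    windows = hours * 3600                       # one decision per second of audio
    misses = utterances_per_hour * hours * frr   # wake words that were not detected
    false_alarms = false_alarms_per_hour * hours # activations that should not have happened
    return 1 - (misses + false_alarms) / windows


# Engine A: 1% FRR, 5 false activations per hour
engine_a = window_accuracy(hours=10, utterances_per_hour=100, frr=0.01, false_alarms_per_hour=5)
# Engine B: 10% FRR, 0.1 false activations per hour
engine_b = window_accuracy(hours=10, utterances_per_hour=100, frr=0.10, false_alarms_per_hour=0.1)

print(f"Engine A: {engine_a:.2%}")
print(f"Engine B: {engine_b:.2%}")
```

Under these assumptions both engines score above 99%, because the thousands of silent windows that any engine gets right swamp the handful of errors that actually matter to users.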

Which wake word engine is better?

It depends on your application. In a noisy factory, missed activations reduce productivity, making Engine A preferable. In automotive applications, false triggers erode user trust, making Engine B the better choice.

Key takeaway: "Accuracy" without context is meaningless.

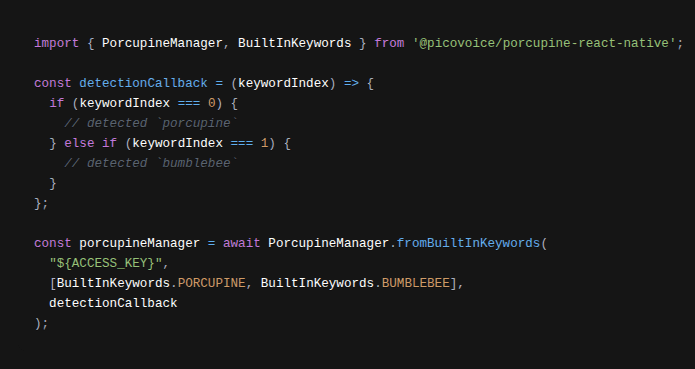

See the Porcupine Wake Word API documentation to learn how to adjust the sensitivity, which sets the trade-off between False Rejection Rate (FRR) and False Acceptance Rate (FAR).

2. Common Vendor Tricks (and How to Spot Them)

1. Testing in Unrealistic Conditions

Testing in quiet studios with clean audio or at an ideal microphone distance inflates wake word detection benchmarks. Even when vendors claim they add "noise", they may not disclose the SNR (Signal-to-Noise Ratio). An SNR of 25-30 dB is good for speech, while anything below 20 dB is considered a noisy environment. An engine does not perform the same at 10 dB SNR as it does at 19 dB SNR, even though both count as noisy.
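If you run your own tests, you can pin the SNR down instead of guessing. The sketch below rescales a noise signal so that it mixes with speech at a chosen SNR. The sine wave and Gaussian noise are synthetic stand-ins for real recordings, and the function names are illustrative rather than taken from any benchmark framework.

```python
import math
import random


def mean_power(samples):
    """Average power of a signal given as a list of float samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech, noise):
    """Signal-to-noise ratio in decibels."""
    return 10 * math.log10(mean_power(speech) / mean_power(noise))


def scale_noise_to_snr(speech, noise, target_snr_db):
    """Return the noise rescaled so the speech-to-noise ratio equals target_snr_db."""
    wanted_noise_power = mean_power(speech) / (10 ** (target_snr_db / 10))
    gain = math.sqrt(wanted_noise_power / mean_power(noise))
    return [n * gain for n in noise]


# Synthetic stand-ins for real recordings (assumption: 16 kHz, one second each)
random.seed(0)
speech = [math.sin(2 * math.pi * 220 * t / 16000) for t in range(16000)]
noise = [random.gauss(0, 1) for _ in range(16000)]

for target in (19, 10):
    scaled = scale_noise_to_snr(speech, noise, target)
    mixed = [s + n for s, n in zip(speech, scaled)]  # what the engine would hear
    print(f"target {target} dB -> measured {snr_db(speech, scaled):.1f} dB")
```

The 9 dB gap between the two settings corresponds to roughly eight times more noise power, which is why "we tested in noise" says little without the SNR.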

Key takeaway: Real-world accuracy degrades significantly with increased noise, distance, and reverberation. Always ask vendors to disclose test conditions.

2. Cherry-Picking Test Data

Some common tactics to manipulate test data:

- Excluding non-native or accented speakers

- Using the same speakers in training and testing (data leakage that hides overfitting)

- Removing "bad" runs before reporting results

Tip: Test data composition dramatically affects reported accuracy. If test data is not disclosed, question the results.
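One way to rule out speaker overlap in your own evaluation is to split by speaker rather than by clip. This is a minimal sketch; the `(speaker_id, clip)` layout and the file names are hypothetical, so adapt them to however your dataset labels speakers.

```python
import random


def split_by_speaker(samples, test_fraction=0.2, seed=0):
    """Split (speaker_id, clip) pairs so that no speaker appears in both sets."""
    speakers = sorted({speaker for speaker, _ in samples})
    random.Random(seed).shuffle(speakers)
    n_test = max(1, round(len(speakers) * test_fraction))
    held_out = set(speakers[:n_test])  # these speakers are used for testing only
    train = [s for s in samples if s[0] not in held_out]
    test = [s for s in samples if s[0] in held_out]
    return train, test


# Hypothetical dataset: 10 speakers, 3 clips each
samples = [(f"speaker_{i:02d}", f"clip_{i:02d}_{j}.wav") for i in range(10) for j in range(3)]
train, test = split_by_speaker(samples)

train_speakers = {speaker for speaker, _ in train}
test_speakers = {speaker for speaker, _ in test}
print(len(train), len(test), train_speakers & test_speakers)
```

A random split over clips would almost certainly put some of each speaker's clips on both sides, which is exactly the overlap this tactic relies on.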

3. Hiding the Methodology

Some vendors never reveal:

- How accuracy was calculated

- The detection threshold used

- The FRR/FAR trade-off point (i.e., threshold)

- Test duration (at least 10 hours of audio is required for a meaningful FAR measurement)

Transparency equals credibility. If the test setup isn't reproducible, it's fair to assume it was optimized for marketing.
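Test duration matters because a short test cannot rule out a high FAR. As a rough sketch, assume false activations arrive independently at a constant rate (a Poisson process, which is a simplification). If a test of T hours shows zero false activations, the 95% upper bound on the true rate is about 3/T per hour, often called the "rule of three." The function name below is illustrative.

```python
import math


def zero_alarm_far_upper_bound(hours, confidence=0.95):
    """Upper bound on false alarms per hour when a test of `hours` saw none.

    Assumes false alarms follow a Poisson process: P(0 alarms) = exp(-rate * hours).
    Solving exp(-rate * hours) = 1 - confidence for rate gives the bound.
    """
    return -math.log(1 - confidence) / hours


for hours in (1, 10, 100):
    bound = zero_alarm_far_upper_bound(hours)
    print(f"{hours:>3} h with 0 false alarms -> true FAR could still be {bound:.2f} per hour")
```

Under this assumption, a clean one-hour test is still consistent with about three false activations per hour, while a clean ten-hour test narrows that to roughly 0.3 per hour.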

4. Mixing Metrics

False Rejection Rate (FRR) and False Acceptance Rate (FAR) are reported in different units: FRR as a percentage of missed wake word utterances, and FAR as a rate of false activations per hour. Vendors may cherry-pick whichever metric looks better.

Tip: If a vendor doesn't disclose the FRR at a specific FAR or publish the ROC curve, question the results.
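Reporting "FRR at a fixed FAR" amounts to picking the most permissive detection threshold that still meets the FAR target, then counting misses at that threshold. The sketch below assumes you have already collected a detection score per wake word utterance and a peak score per false-alarm candidate in wake-word-free audio. The scores are made up for illustration, and the function is not part of any particular engine's API.

```python
def frr_at_far(positive_scores, false_alarm_scores, negative_hours, target_far_per_hour):
    """Return (threshold, frr, far) at the lowest threshold that meets the FAR target.

    positive_scores: best detection score for each wake word utterance.
    false_alarm_scores: peak score of each candidate event in wake-word-free audio.
    A detection fires when score >= threshold.
    """
    candidates = sorted(set(positive_scores) | set(false_alarm_scores))
    candidates.append(float("inf"))  # fallback threshold that rejects everything
    for threshold in candidates:     # ascending, so the first match has the lowest FRR
        far = sum(s >= threshold for s in false_alarm_scores) / negative_hours
        if far <= target_far_per_hour:
            frr = sum(s < threshold for s in positive_scores) / len(positive_scores)
            return threshold, frr, far


# Made-up scores: 10 wake word utterances and 2 hours of wake-word-free audio
positives = [0.95, 0.9, 0.85, 0.8, 0.75, 0.7, 0.6, 0.55, 0.4, 0.3]
false_alarms = [0.65, 0.5, 0.45, 0.35, 0.2]

threshold, frr, far = frr_at_far(positives, false_alarms, negative_hours=2, target_far_per_hour=1)
print(f"threshold={threshold}, FRR={frr:.0%}, FAR={far} per hour")
```

Running the same function over a range of FAR targets gives the points of an ROC curve, which is why a single FRR or FAR number in isolation tells you so little.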

5. Comparing Apples to Oranges

Vendors may use shady comparison tactics:

- Comparing their best results against competitors' worst

- Using different wake words (wake word choice affects performance)

- Using different datasets

- Testing in different noise environments

Key takeaway: If you cannot reproduce a vendor's comparison, demand an explanation.

6. Hiding Behind "Contact Us"

If vendors require you to contact them or sign an NDA before sharing basic accuracy metrics, you should treat it as a red flag. Genuine confidence comes from published, verifiable data—not gated PDFs or private demos.

3. 5 Simple Questions to Ask to Verify Vendor Claims

Picovoice has published an open-source wake word benchmark that has been used by researchers in industry and academia. Yet Picovoice is the only vendor providing this level of transparency. That's why we prepared a list of questions to help you navigate accuracy discussions with any vendor.

- What is your FRR at 1 FAR per hour?

- Can you share the ROC curve showing FRR vs FAR trade-offs?

- How do you define "accuracy"?

- Is test data separate from training data?

- Can your results be reproduced with shared methodology and datasets?

These questions push vendors toward transparent wake word (hotword) benchmarking and make fair comparisons across engines possible.

4. Signs to Watch for

When vendors claim "99% accuracy" or "industry-leading performance," stay skeptical. Many accuracy claims are designed to impress rather than inform. By asking the right questions and demanding transparent benchmarks, you'll find a solution that truly fits your needs.

- Request proof of methodology, dataset, and reproducibility.

- Run your own tests without relying on vendor numbers.

- Watch out for red flags and warning signs.

Critical Red Flags 🚩

- Results that can't be reproduced

- No FRR/FAR data

- Vague "accuracy" definitions

- Hidden or missing methodology

- Inconsistent claims across materials

Warning Signs

- Only "quiet room" testing disclosed

- Comparisons that cite "details" but cannot be reproduced

- "Best in class" claims without data

- Requires email/NDA for benchmarks

- FRR shared without FAR

- Short (<1 hour) test durations

5. What You Can Do

Picovoice provides free and open-source resources for enterprises, researchers, and developers to run independent tests:

- Wake Word Benchmark Documentation

- Open-Source Benchmark Framework

- Benchmarking a Wake Word Engine

- Wake Word Detection Complete Guide

- Open-Source Keyword Spotting Speech Corpora

Accuracy isn't the only metric to evaluate when choosing a wake word system. Depending on your application, speed, efficiency (resource utilization), platform support, and language support can be equally important.

Picovoice owns its entire stack—data pipelines, training mechanisms, and inference engines—rather than relying on open-source frameworks like PyTorch or TensorFlow. This vertical integration enables fine-tuning of both the out-of-the-box wake word models and the inference engine, optimizing accuracy and performance for specific keywords on target platforms.

Start your free trial and test out-of-the-box capabilities of Porcupine Wake Word on all the metrics you care about!
