Wake word detection is part of almost every successful Voice User Interface (VUI).

Hey Siri, Hey Google (OK Google), and Alexa are proof of this. Although the wake word's functionality is simple, implementing a robust wake word algorithm is surprisingly complex. Apple, Google, and Amazon have teams of scientists working on wake word models.

The performance of any VUI heavily relies on its wake word robustness, calling for a rigorous technology selection process. A recognition failure adversely affects user experience, and a false recognition can damage user trust. Below we present metrics and frameworks to quantify and benchmark the accuracy of wake word engines.

False Rejection & False Alarm

Any decision-making algorithm with a yes/no output is called a binary classifier. The accuracy of a binary classifier, such as a wake word detector, is measured by two parameters:

- False Rejection Rate (FRR)

- False Acceptance Rate (FAR)

The FRR for a wake word model is the probability of the wake word engine missing the wake-up phrase. Ideally, we want FRR to be zero. The FAR for a wake word model is usually expressed as FAR per hour: the number of times a wake word engine incorrectly detects the wake phrase in an hour. Ideally, we want FAR per hour to be zero as well.
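As a minimal sketch of the two definitions above, both metrics can be computed from raw test counts. The function names and the numbers in the example run are hypothetical and chosen only for illustration; they are not taken from any benchmark.

```python
def false_rejection_rate(missed: int, total_utterances: int) -> float:
    """Fraction of wake-phrase utterances the engine failed to detect."""
    if total_utterances <= 0:
        raise ValueError("total_utterances must be positive")
    return missed / total_utterances


def false_alarms_per_hour(false_alarms: int, background_seconds: float) -> float:
    """Number of incorrect detections per hour of audio without the wake phrase."""
    if background_seconds <= 0:
        raise ValueError("background_seconds must be positive")
    return false_alarms / (background_seconds / 3600.0)


# Hypothetical test run: 1,000 wake-phrase utterances with 50 misses,
# and 10 hours of background audio that triggered 2 false detections.
frr = false_rejection_rate(missed=50, total_utterances=1000)
far = false_alarms_per_hour(false_alarms=2, background_seconds=10 * 3600)
print(f"FRR = {frr:.1%}, FAR = {far:.2f} per hour")
```

Note that the two metrics are measured on different data: FRR on audio that contains the wake phrase, and FAR per hour on audio that does not.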

The Devil is in the Details

If you are looking to procure wake word detection software, you want to ensure you are comparing apples to apples. Here are a few real-life examples:

- A vendor claims their wake word engine is 99% accurate: Clarify whether they mean their FRR is 1%. Ask about their FAR per hour. Any engine can achieve 100% accuracy if a high FAR is tolerated.

- A company says their wake word model's FAR is less than 1%: Ask for FAR per hour. Percentage is not a sensible unit of measurement for FAR. What does false alarming 1% of the time mean for your product?

- A vendor shows great FRR/FAR and claims their wake word technology is the best in the market: Ask for a detailed benchmarking methodology. Which speech corpus is used? Does the corpus include accented utterances? What is the acoustic environment? Is the test performed in a noisy, reverberant environment? What kind of noise and reverberation?
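To see why a percentage is an ambiguous unit for FAR, consider one possible reading: the engine makes a fixed number of detection decisions per second, and 1% of those decisions are false alarms. The sketch below assumes that reading; the function name and the decision rates are hypothetical, and a vendor may well mean something else entirely.

```python
def false_alarms_per_hour_from_percent(far_percent: float,
                                       decisions_per_second: float) -> float:
    """Convert a per-decision false alarm percentage into false alarms per hour.

    Assumes (hypothetically) that the percentage applies to each detection
    decision and that decisions are made at a constant rate.
    """
    decisions_per_hour = decisions_per_second * 3600
    return far_percent / 100.0 * decisions_per_hour


# The same "1% FAR" claim under three hypothetical decision rates.
for rate in (1, 10, 100):
    per_hour = false_alarms_per_hour_from_percent(1.0, rate)
    print(f"{rate:>3} decisions/s -> {per_hour:.0f} false alarms per hour")
```

Under this reading, the same 1% figure corresponds to very different false alarm counts depending on a decision rate the claim does not state, which is why FAR per hour is the more useful number to ask for.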

Open Access & Benchmark

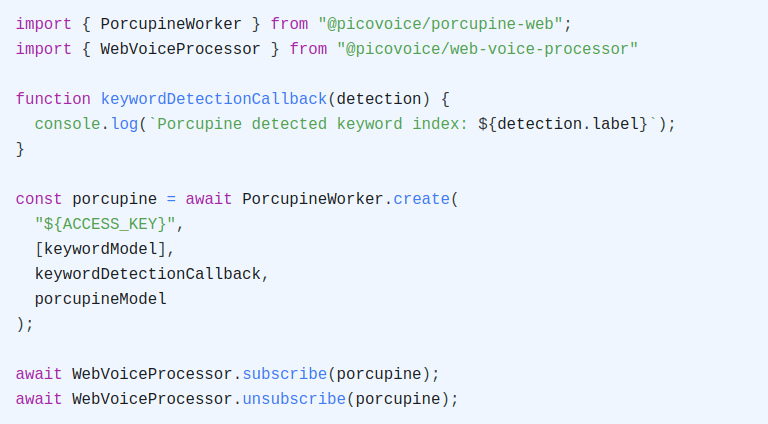

Comparing wake word models the right way is complex. It requires time, investment, and expertise. Many vendors make you go through a lengthy sales process before providing (possibly paid) access to their technology for evaluation. Picovoice, by contrast, offers a self-service Free Plan.

In response to this pain point, we have open-sourced our internal wake word benchmark framework to enable customers to inspect all data and algorithms used. Even better, you can plug in your own data to measure the accuracy of the Picovoice Porcupine Wake Word Engine on what matters to your use case. Below is the result taken from this benchmark:

Inspect Deeper with ROC Curve

Tuning the detection threshold of a binary classifier balances FRR against FAR. A lower detection threshold yields higher sensitivity, and a highly sensitive classifier has a high FAR and a low FRR. A receiver operating characteristic (ROC) curve plots FRR against FAR for varying sensitivity values.
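The threshold sweep behind such a curve can be sketched as follows. This is an illustration under stated assumptions, not how any particular engine or benchmark works: it assumes the engine exposes a confidence score per candidate detection, and the scores, the two hours of background audio, and the function name `roc_points` are all hypothetical.

```python
def roc_points(wake_scores, background_scores, background_hours, thresholds):
    """Return one (FAR per hour, FRR) point per detection threshold.

    wake_scores: scores for utterances that contain the wake phrase.
    background_scores: scores for candidate detections in audio that does
        not contain the wake phrase.
    A detection is accepted when its score is >= the threshold.
    """
    points = []
    for t in thresholds:
        missed = sum(1 for s in wake_scores if s < t)
        false_alarms = sum(1 for s in background_scores if s >= t)
        points.append((false_alarms / background_hours,
                       missed / len(wake_scores)))
    return points


# Hypothetical scores, for illustration only.
wake_scores = [0.95, 0.90, 0.80, 0.60, 0.40]
background_scores = [0.70, 0.50, 0.30, 0.20]

points = roc_points(wake_scores, background_scores,
                    background_hours=2.0,
                    thresholds=[0.25, 0.45, 0.65, 0.85])
for far_per_hour, frr in points:
    print(f"FAR/hour = {far_per_hour:.1f}, FRR = {frr:.0%}")
```

With these made-up scores, the lowest threshold misses nothing but raises the most false alarms, and the highest threshold does the opposite, which is the trade-off the curve makes visible.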

A superior algorithm has a lower FRR for any given FAR. To combine these two metrics, ROC curves are sometimes compared by their Area Under Curve (AUC).

Because the curve here plots FRR (an error rate) rather than the detection rate, a smaller AUC indicates superior performance. In the figure below, algorithm C has better performance (and lower AUC) than B, and B is better than A.
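As a rough sketch of the comparison, the area under an FRR-versus-FAR curve can be approximated with the trapezoidal rule. The three curves below are hypothetical points invented for illustration; they are not read from the figure, and the labels A, B, and C are reused only to mirror the ordering described above.

```python
def area_under_curve(points):
    """Approximate the area under (FAR per hour, FRR) points with trapezoids."""
    pts = sorted(points)  # order by FAR so segments run left to right
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


# Hypothetical curves sampled at the same FAR-per-hour values.
curves = {
    "A": [(0.0, 0.60), (0.5, 0.40), (1.0, 0.30)],
    "B": [(0.0, 0.40), (0.5, 0.20), (1.0, 0.10)],
    "C": [(0.0, 0.20), (0.5, 0.05), (1.0, 0.02)],
}

aucs = {name: area_under_curve(pts) for name, pts in curves.items()}
ranking = sorted(aucs, key=aucs.get)  # smallest area (best) first
for name in ranking:
    print(f"{name}: AUC = {aucs[name]:.3f}")
```

The areas are only comparable when every curve covers the same FAR range, so it helps to sample all engines at the same operating points before comparing them.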