Voice recognition has flourished with the growth of cloud-based speech services. Yet despite the ubiquity of voice-enabled products, processing speech in the cloud raises privacy concerns about how personal voice data is uploaded and handled. Cloud speech recognition also has fundamental limitations in cost-effectiveness, latency, and reliability.

Offline speech recognition has the potential to address these drawbacks by eliminating the need for connectivity and tapping into the compute resources readily available on billions of devices. Historically, however, the computational cost of speech recognition algorithms made it impossible to achieve comparable accuracy on commodity edge devices.

Picovoice has developed deep learning technology specifically designed to perform large-vocabulary speech-to-text efficiently on edge devices. Picovoice software runs on commodity hardware with constrained compute resources. This bespoke voice AI technology enables speech-to-text even on a Raspberry Pi, recognizing more than 300,000 words in real time. The Picovoice offline option lowers cost and latency while matching the accuracy of cloud voice services.

Accuracy

Picovoice has benchmarked the accuracy of its local speech-to-text engine against widely used speech-to-text APIs: Google Speech-to-Text, Amazon Transcribe, Azure Speech-to-Text, and IBM Watson Speech-to-Text. Details of the benchmark are available on the Leopard Speech-to-Text benchmark page, including a link to the open-source repository. The figure below shows that Picovoice achieves accuracy comparable to cloud-based services.
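The text here does not say which metric the benchmark reports. Speech-to-text accuracy is commonly measured as word error rate (WER), so the sketch below assumes that metric to make "comparable accuracy" concrete. The function name and the lowercase-and-split normalization are illustrative choices, not the benchmark's actual code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return the word-level edit distance divided by the reference length.

    Assumption: text is normalized only by lowercasing and whitespace
    splitting; a real benchmark may also strip punctuation, expand numbers, etc.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Levenshtein distance over words, keeping only the previous DP row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return prev[-1] / len(ref)
```

For example, scoring the hypothesis "turn off the light" against the reference "turn on the lights" counts two substitutions over four reference words. Lower values mean better accuracy.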

Cost-Effectiveness

The figure below compares the operational cost of voice recognition engines. Picovoice does not offer a merely fractional cost saving: it is an order of magnitude more cost-efficient than API-based offerings.

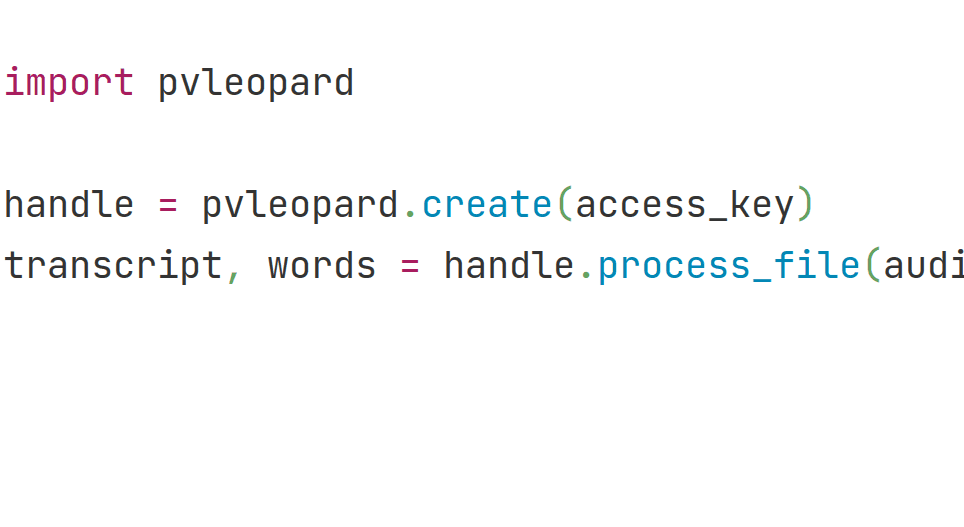

Start Building

Start building with Picovoice's local speech-to-text engine, Leopard, by creating a Picovoice Console account.

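    import pvleopard

    leopard = pvleopard.create(access_key=access_key)  # AccessKey from Picovoice Console
    transcript, words = leopard.process_file(path)     # path to the audio file to transcribe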
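The snippet above returns both a transcript and a list of per-word results. The sketch below shows one way that output might be consumed. It assumes each word carries `word`, `start_sec`, `end_sec`, and `confidence` fields; the local `Word` type is a stand-in for illustration, not the SDK's own class. The helper names, the `PICOVOICE_ACCESS_KEY` environment variable, and the `audio.wav` path are hypothetical.

```python
import os
from typing import List, NamedTuple, Sequence, Tuple


class Word(NamedTuple):
    """Illustrative stand-in for per-word metadata (field names are assumed)."""
    word: str
    start_sec: float
    end_sec: float
    confidence: float


def format_timeline(words: Sequence) -> List[str]:
    """Render each word with its start and end time in seconds."""
    return [f"[{w.start_sec:.2f}s - {w.end_sec:.2f}s] {w.word}" for w in words]


def low_confidence_words(words: Sequence, threshold: float = 0.5) -> List:
    """Return the words whose confidence falls below the threshold."""
    return [w for w in words if w.confidence < threshold]


def transcribe(engine, path: str) -> Tuple[str, List[str], List]:
    """Run any engine exposing process_file(path) -> (transcript, words)."""
    transcript, words = engine.process_file(path)
    return transcript, format_timeline(words), low_confidence_words(words)


def main() -> None:
    # Imported here so the helpers above work without the SDK installed.
    import pvleopard  # pip install pvleopard

    # Hypothetical environment variable holding the Picovoice Console AccessKey.
    leopard = pvleopard.create(access_key=os.environ["PICOVOICE_ACCESS_KEY"])
    try:
        transcript, timeline, uncertain = transcribe(leopard, "audio.wav")  # hypothetical path
        print(transcript)
        print("\n".join(timeline))
        print("Low-confidence words:", [w.word for w in uncertain])
    finally:
        leopard.delete()  # release the engine's native resources
```

Calling `main()` runs the full flow on a real file. Because `transcribe` accepts any object with a `process_file` method, the formatting helpers can be exercised without the engine or an access key.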