The only all-in-one on-device voice AI already deployed at scale

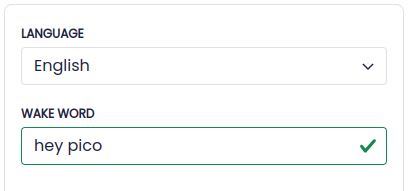

Wake word, speech-to-text, LLM, text-to-speech, and more.

All on-device. Runs across mobile, web, desktop, and embedded.

Built for forward-thinking enterprises ready to deploy, not just experiment.

Voice AI Agents Across Platforms

Picovoice's modular voice AI platform is engineered for on-device deployment, empowering enterprises to deliver customized, cross-platform voice solutions without sacrificing performance, latency, or privacy.

Develop smarter products with no compromises

Accurate and lightweight on-device AI engines at your fingertips.

Why Picovoice?

Bringing cloud performance and convenience to the edge with no compromises.

Serious AI built for real-world scale

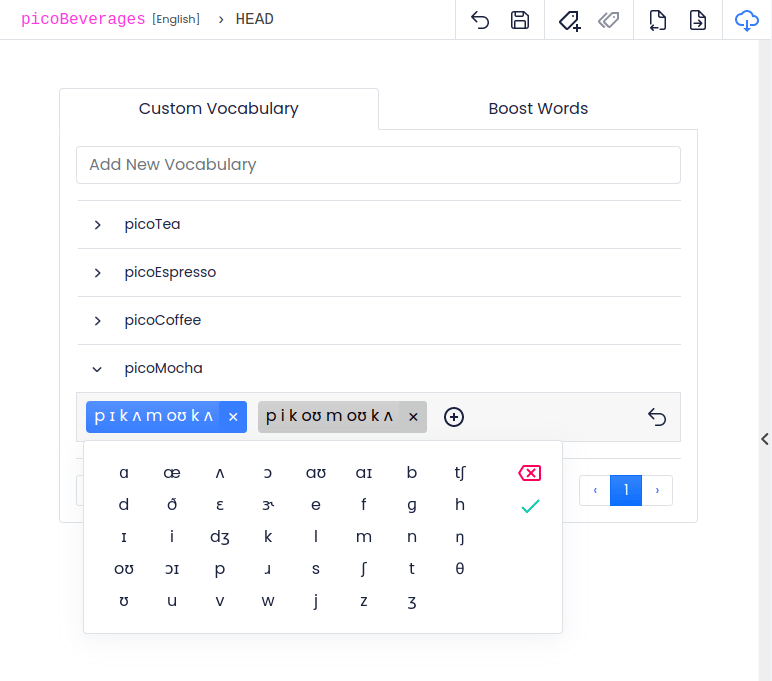

Enterprise-grade, fully customizable on-device voice AI without the cost or complexity of building it in-house.

Download custom wake words, voice commands, speech-to-text, and compressed large language models in seconds. Deploy across mobile, web, or embedded devices using Picovoice's cross-platform SDKs. No prior AI/ML expertise required. Picovoice transforms your ideas into voice-powered experiences, effortlessly.
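Picovoice's streaming speech-to-text engine, Cheetah, works frame by frame. Each call to `process()` takes one audio frame and returns a partial transcript plus an endpoint flag. The following is a minimal sketch of how an application might stitch those partial results into whole utterances.

It rests on a few assumptions. `StreamingSTT`, `transcribe_stream`, and `ScriptedEngine` are hypothetical names. Calling `flush()` at each endpoint mirrors the pattern in the Cheetah Python demo, but verify it against the SDK documentation. `ScriptedEngine` replays canned results so the code runs without an AccessKey or a microphone.

```python
from typing import Iterable, List, Protocol, Sequence, Tuple


class StreamingSTT(Protocol):
    """Hypothetical interface modeled on the per-frame process() contract."""

    def process(self, pcm: Sequence[int]) -> Tuple[str, bool]: ...

    def flush(self) -> str: ...


def transcribe_stream(engine: StreamingSTT, frames: Iterable[Sequence[int]]) -> List[str]:
    """Assemble partial transcripts into one string per detected utterance."""
    utterances: List[str] = []
    current = ""
    for frame in frames:
        partial, is_endpoint = engine.process(frame)
        current += partial
        if is_endpoint:
            # End of utterance: flush any buffered text, then start a new one.
            current += engine.flush()
            utterances.append(current.strip())
            current = ""
    # The stream ended mid-utterance: flush whatever the engine still holds.
    current += engine.flush()
    if current.strip():
        utterances.append(current.strip())
    return utterances


class ScriptedEngine:
    """Hypothetical stand-in that replays canned results (no AccessKey or audio needed)."""

    def __init__(self, script: List[Tuple[str, bool]], flushes: List[str]) -> None:
        self._script = iter(script)
        self._flushes = iter(flushes)

    def process(self, pcm: Sequence[int]) -> Tuple[str, bool]:
        return next(self._script)  # the frame contents are ignored by this stub

    def flush(self) -> str:
        return next(self._flushes, "")


if __name__ == "__main__":
    engine = ScriptedEngine(
        [("turn on", False), (" the", False), (" lights", True), (" and", False)],
        flushes=["", " music"],
    )
    frames = [[0] * 512 for _ in range(4)]  # placeholder frames of silence
    print(transcribe_stream(engine, frames))  # ['turn on the lights', 'and music']
```

In a real application, `engine` would be the object returned by `pvcheetah.create(...)`, and `frames` would come from a microphone source that delivers frames of `frame_length` samples. Check the SDK documentation for the exact frame size and sample rate.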

Streaming speech-to-text with Cheetah takes only a few lines on each platform:

Python:

```python
import pvcheetah

o = pvcheetah.create(access_key)

partial_transcript, is_endpoint = o.process(get_next_audio_frame())
```

Node.js:

```javascript
const { Cheetah } = require("@picovoice/cheetah-node");

const o = new Cheetah(accessKey);

const [partialTranscript, isEndpoint] = o.process(audioFrame);
```

Android:

```java
Cheetah o = new Cheetah.Builder()
    .setAccessKey(accessKey)
    .setModelPath(modelPath)
    .build(appContext);

CheetahTranscript partialResult = o.process(getNextAudioFrame());
```

iOS:

```swift
let cheetah = try Cheetah(
    accessKey: accessKey,
    modelPath: modelPath)

// process() returns a tuple, so destructure it with parentheses
let (partialTranscript, isEndpoint) = try cheetah.process(getNextAudioFrame())
```

Java:

```java
Cheetah o = new Cheetah.Builder()
    .setAccessKey(accessKey)
    .build();

CheetahTranscript r = o.process(getNextAudioFrame());
```

.NET:

```csharp
Cheetah o = Cheetah.Create(accessKey);

CheetahTranscript partialResult = o.Process(GetNextAudioFrame());
```

React:

```javascript
const {
  result,
  isLoaded,
  isListening,
  error,
  init,
  start,
  stop,
  release,
} = useCheetah();

await init(
  accessKey,
  model
);

await start();
await stop();

useEffect(() => {
  if (result !== null) {
    // Handle transcript
  }
}, [result]);
```

Flutter:

```dart
_cheetah = await Cheetah.create(
    accessKey,
    modelPath);

CheetahTranscript partialResult =
    await _cheetah.process(getAudioFrame());
```

React Native:

```javascript
const cheetah = await Cheetah.create(
  accessKey,
  modelPath);

const partialResult = await cheetah.process(getAudioFrame());
```

C:

```c
#include <stdbool.h>
#include <stdint.h>

#include "pv_cheetah.h"

pv_cheetah_t *cheetah = NULL;
pv_cheetah_init(
    access_key,
    model_file_path,
    endpoint_duration_sec,
    enable_automatic_punctuation,
    &cheetah);

const int16_t *pcm = get_next_audio_frame();

char *partial_transcript = NULL;
bool is_endpoint = false;
const pv_status_t status = pv_cheetah_process(
    cheetah,
    pcm,
    &partial_transcript,
    &is_endpoint);
```

Web:

```javascript
const cheetah = await CheetahWorker.create(
  accessKey,
  (cheetahTranscript) => {
    // callback
  },
  {
    base64: cheetahParams,
    // or
    publicPath: modelPath,
  }
);

WebVoiceProcessor.subscribe(cheetah);
```

Go into production with enterprise-grade on-device voice AI. Picovoice powers real-world, high-scale products, not proofs of concept that never see the light of day. Beyond cutting-edge voice AI technology, Picovoice delivers the strategic guidance and hands-on support your team needs to launch successfully, from rapid troubleshooting to long-term partnership.

Traditional voice AI deployment forces enterprises into an uncomfortable choice: Build or Buy. Building in-house requires massive R&D investment. Buying off the shelf compromises performance, flexibility, and control. By owning the entire voice AI pipeline, Picovoice can deliver customized solutions tailored to specific enterprise requirements.