Voice Activity Detection (VAD), also known as speech detection, speech activity detection (SAD), or simply voice detection, is the invisible technology that lets machines know when someone is speaking and when they are not. While the concept sounds simple, implementing accurate and efficient VAD in real-world conditions is surprisingly complex.

VAD powers modern voice applications - whether you're building a voice assistant, transcription service, or real-time communication app. An accurate VAD is the foundation of a smooth voice user experience (VUX).

This guide covers everything you need to know about VAD, from fundamental concepts to production implementation.

Table of Contents

- What is Voice Activity Detection (VAD)?

- How Voice Activity Detection Works

- Traditional VAD vs. Deep Learning VAD

- Why Voice Activity Detection Matters

- VAD Use Cases and Applications

- Choosing a VAD Solution

- Implementation Guide

- Platform-Specific Tutorials

- Production Best Practices

- Frequently Asked Questions

- Getting Started with Cobra Voice Activity Detection

- Additional Resources

- Conclusion

- Key Takeaways

What is Voice Activity Detection (VAD)?

Voice Activity Detection is a signal-processing technique that distinguishes speech from silence or background noise in an audio stream. At its core, Voice Activity Detection answers one simple question: "Is someone speaking right now?"

It outputs a binary decision for each audio frame:

- 1 → Voice detected (speech is present)

- 0 → No voice detected (silence or non-speech sounds)

Why VAD is Challenging

Distinguishing speech from non-speech sound may sound simple, but real-world conditions make it surprisingly difficult:

- Noise - HVAC systems, traffic, music, and other noises in the background

- Voice-like sounds - Coughing, laughter, mouth noises

- Distance and reverberation - Far-field audio, echo, room acoustics

- Audio quality - Compression, low sample rates, poor microphones

- Efficiency - Engines must stay lightweight to keep compute latency low

With a quiet environment, clean audio, and ample compute power, VAD is trivial. In real-world environments, however, it becomes a complex machine learning problem.

How Voice Activity Detection Works

VAD systems analyze audio in real-time, processing small chunks (frames) continuously to detect speech activity.

The VAD Pipeline

- Audio Capture - Microphone records a continuous audio stream

- Frame Processing - Divides audio into small frames, typically tens of milliseconds each

- Feature Extraction - Converts raw audio to meaningful features (translates sound waves into numbers the algorithm can understand)

- Classification - Determines if the frame contains speech

- Probability Output - Assigns a confidence score [0,1]
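
As a rough illustration of these stages, the sketch below runs synthetic audio through framing, a single feature, and a toy scoring function. It is not how any particular engine works: the energy-only feature, the `noise_floor` constant, the 512-sample frame, and all function names are assumptions chosen for illustration.

```python
import math

def split_into_frames(samples, frame_length):
    """Frame Processing: cut the stream into fixed-size, non-overlapping frames."""
    usable = len(samples) - len(samples) % frame_length
    return [samples[i:i + frame_length] for i in range(0, usable, frame_length)]

def rms_energy(frame):
    """Feature Extraction: root-mean-square amplitude of one frame."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))

def toy_score(energy, noise_floor=0.05):
    """Classification + Probability Output: squash energy into the range [0, 1)."""
    return energy / (energy + noise_floor)

def run_pipeline(samples, frame_length=512):
    """Return one score per complete frame."""
    return [toy_score(rms_energy(f)) for f in split_into_frames(samples, frame_length)]

# Synthetic "capture": one frame of silence, then one frame of a 200 Hz tone at 16 kHz
silence = [0.0] * 512
tone = [0.5 * math.sin(2 * math.pi * 200 * n / 16000) for n in range(512)]
scores = run_pipeline(silence + tone)
```

The silent frame scores exactly zero and the tone frame scores well above it. A real VAD replaces `toy_score` with a trained classifier, but the frame-by-frame shape of the loop stays the same.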

Traditional VAD vs. Deep Learning VAD

Traditional VAD (Signal Processing)

Traditional VAD systems use hand-crafted features based on acoustic properties: energy levels, zero-crossing rates, and heuristic thresholds.

- Energy levels - Speech is louder than silence.

- Zero-crossing rate - Voiced speech crosses zero less often than noise-like sounds.

- Spectral features - Human speech occupies specific frequency ranges.

- Pitch detection - Speech has pitch, noise typically doesn't.

- Signal-to-noise ratio (SNR) - Speech has a louder signal compared to background noise.
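
To make two of these features concrete, here is a minimal sketch of short-time energy and zero-crossing rate combined into a hand-tuned rule. The threshold values and function names are illustrative assumptions, not values taken from any specific VAD.

```python
import math

def short_time_energy(frame):
    """Mean squared amplitude of the frame."""
    return sum(s * s for s in frame) / len(frame)

def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return crossings / (len(frame) - 1)

def rule_based_vad(frame, energy_threshold=0.01, zcr_threshold=0.25):
    """Hand-tuned rule: the frame is loud enough and not noise-like."""
    return (short_time_energy(frame) > energy_threshold
            and zero_crossing_rate(frame) < zcr_threshold)

# Three synthetic 400-sample frames at 16 kHz
voiced_like = [0.5 * math.sin(2 * math.pi * 150 * n / 16000) for n in range(400)]
noise_like = [0.5 if n % 2 == 0 else -0.5 for n in range(400)]  # sign flips every sample
quiet = [0.001] * 400
```

Only the low-frequency tone passes both checks. The alternating signal is loud but fails the zero-crossing test, and the quiet frame fails the energy test.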

Limitations of Traditional VAD: These features are defined by engineers, which is why traditional VAD is also described as hand-tuned or hand-crafted. Fixed rules can miss the subtle patterns that distinguish speech from voice-like noise.

Deep Learning VAD

Modern VAD systems use neural networks, which learn complex speech-noise patterns from large datasets, improving robustness to background sounds and varied accents. Engineers supply at most a generic input representation, and the network learns which patterns in it matter:

- Learned representations - Neural networks discover patterns humans might miss

- Mel-frequency cepstral coefficients (MFCCs) - Audio features that mimic how humans perceive sound

- Spectrograms - Neural networks "see" the visual representation of frequencies over time

Advantage of Deep Learning VAD: The network learns which features matter most from thousands of hours of real-world audio, making it more robust to complex noise conditions.

Picovoice's VAD, Cobra Voice Activity Detection, leverages the latest advances in neural networks.

VAD Classification Approaches

Traditional Methods:

- Use statistical models, such as Gaussian Mixture Models (GMM) and Hidden Markov Models (HMM)

- Apply energy-based thresholds

- Follow hand-crafted rules

- Fast but less accurate in noise

Modern (Deep Learning powered) Methods:

- Use neural networks (CNN, RNN, or hybrid architectures)

- Learn patterns from training data

- Adapt to complex acoustic conditions

- More accurate than traditional VAD

Deep learning approaches significantly outperform traditional methods in noisy conditions but may require more computational resources.

Deep Learning VAD Resource Efficiency Misconception

Traditional VAD models are generally much lighter than deep learning VAD models. This has led many developers to assume that every deep learning VAD is computationally heavy. In practice, efficient deep learning VAD models do not require powerful computers.

Real-time Factor (RTF) is the ratio of processing time to audio duration. For example, Silero VAD (Python) measured an RTF of about 0.004 on an AMD CPU: it takes 15.4 seconds to process one hour of audio, or 0.43% of the CPU to keep up in real time, which is negligible. Cobra VAD is more efficient still, achieving an RTF as low as 0.0005 on the same AMD CPU, which lowers CPU usage to 0.05% and makes it 8.6 times faster than Silero VAD.
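
The arithmetic behind these figures can be checked in a few lines. The sketch uses only the numbers quoted in the text (15.4 seconds per hour of audio, and 0.05% CPU); the function names are illustrative.

```python
def real_time_factor(processing_seconds, audio_seconds):
    """RTF = time spent processing / duration of the audio processed."""
    return processing_seconds / audio_seconds

def cpu_share_percent(rtf):
    """Approximate share of a CPU needed to keep up with a live stream."""
    return rtf * 100.0

# 15.4 s to process one hour of audio
silero_rtf = real_time_factor(15.4, 3600.0)

# A 0.05% CPU share corresponds to an RTF of 0.0005
cobra_rtf = 0.05 / 100.0

# Ratio of the two RTFs
speedup = silero_rtf / cobra_rtf
```

Rounded, `silero_rtf` is 0.0043 (a 0.43% CPU share) and `speedup` is 8.6, which matches the comparison above.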

Result: Deep learning accuracy with traditional VAD resource footprint.

Why Voice Activity Detection Matters

VAD is a critical component in speech processing. From speech-to-text to speaker diarization and real-time communication apps, accurate VAD is essential:

1. Efficiency and Resource Saving

VAD helps systems process only the parts of audio that contain speech.

- In telecommunication, it reduces bandwidth by not transmitting silence.

- In speech recognition, it shortens processing time by skipping silent segments.

- In recording devices, it saves storage and energy.

Processing audio, especially in the cloud, is expensive both computationally and financially. VAD ensures you only process what matters.

- Cloud costs for Transcription APIs: Only send voiced segments to cloud APIs (reduce data transfer and API calls)

- CPU usage for On-device Transcription: Skip processing during silence (save battery on mobile devices), use resources more efficiently (don't store/buffer non-speech audio)

Example: A 1-hour recording might contain only 10-15 minutes of actual speech. In that case, VAD can cut processing costs by 75% or more.
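
One way to act on this is to merge per-frame decisions into voiced segments and forward only those. The sketch below assumes the VAD has already produced a list of 0/1 decisions; the function names are hypothetical.

```python
def voiced_segments(decisions, frame_seconds):
    """Merge consecutive voiced frames into (start, end) spans in seconds."""
    segments, start = [], None
    for i, voiced in enumerate(decisions):
        if voiced and start is None:
            start = i  # a voiced run begins
        elif not voiced and start is not None:
            segments.append((start * frame_seconds, i * frame_seconds))
            start = None
    if start is not None:  # audio ended while still in speech
        segments.append((start * frame_seconds, len(decisions) * frame_seconds))
    return segments

def speech_fraction(decisions):
    """Share of frames marked as speech."""
    return sum(decisions) / len(decisions)

# The example above: 15 minutes of speech in a 60-minute recording
hour_speech_fraction = 15 / 60
savings = 1 - hour_speech_fraction  # share of audio that never needs processing
```

Only the spans returned by `voiced_segments` need to be sent to a transcription API or passed to an on-device model.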

2. Improved Speech Recognition Accuracy

By filtering out non-speech segments, VAD ensures that automatic speech recognition (ASR) models focus only on relevant audio. This:

- Reduces the likelihood of transcription errors from background noise.

- Improves word boundary detection.

- Enables real-time ASR in noisy environments.

3. Better User Experience in Voice Interfaces

Users expect systems to respond intelligently to their speech:

- Video conferencing: Only transmit when speaking (reduce bandwidth)

- Voice assistants: Know when commands are complete

- Call centers: Detect when customers start and stop speaking

Poor VAD leads to:

- Cut-off speech (premature end detection)

- Long delays (waiting for timeout instead of detecting silence)

- Wasted bandwidth (transmitting silence/noise)

- False activations (processing non-speech as speech)
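
Cut-off speech and long delays usually come from how per-frame probabilities are turned into start and end events. A common remedy is a small state machine that requires a few consecutive voiced frames to start and a "hangover" of consecutive unvoiced frames to stop. The class below is a minimal sketch with assumed default values, not the logic of any particular product.

```python
class Endpointer:
    """Turn per-frame voice probabilities into 'start' and 'end' events."""

    def __init__(self, threshold=0.5, min_speech_frames=3, hangover_frames=10):
        self.threshold = threshold
        self.min_speech_frames = min_speech_frames  # guards against false activations
        self.hangover_frames = hangover_frames      # guards against cut-off speech
        self.in_speech = False
        self._run = 0  # consecutive frames pointing toward a state change

    def update(self, probability):
        """Feed one frame's probability; return 'start', 'end', or None."""
        voiced = probability >= self.threshold
        if not self.in_speech:
            self._run = self._run + 1 if voiced else 0
            if self._run >= self.min_speech_frames:
                self.in_speech, self._run = True, 0
                return "start"
        else:
            self._run = self._run + 1 if not voiced else 0
            if self._run >= self.hangover_frames:
                self.in_speech, self._run = False, 0
                return "end"
        return None
```

A longer hangover tolerates pauses inside a sentence at the cost of a slower end-of-speech decision, so the value is a product decision as much as a technical one.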

VAD Use Cases and Applications

Call Centers:

During calls, people pause often. On average, half of a conversation is silence. Without VAD, the app would continuously transmit audio packets, even when no one is talking. Silence can also be powerful, letting others think and speak. The ratio of silence and speech is a common metric tracked in speech analytics, real-time agent assistance, and call center operations.

Voice Assistants:

These devices are always listening for a wake word ("Hey Alexa," "OK Google"). After activation, they rely on VAD to detect when the user starts and stops speaking a command, and stop listening immediately when the user is done, reducing latency and power consumption.

Enhancing Speech Processing Pipelines:

VAD is a fundamental building block of speech processing pipelines. Speaker diarization, which identifies who spoke when in a meeting with multiple participants, is one example. VAD first segments the audio into speech and non-speech blocks. The speech blocks are then passed to speaker embedding models to label speakers. Without VAD, the system would waste computation on silence or, worse, assign noise to a speaker.

Choosing a VAD Solution

Several factors affect the choice of VAD. Latency and resource efficiency are critical for some applications and secondary for others. Below are the common metrics used to evaluate VAD solutions. Prioritize what matters most for your application.

1. Accuracy

- True Positive Rate (detection rate)

- False Positive Rate (false alarms)

- Performance in noisy conditions

- Robustness to different speakers/accents

2. Latency

- Real-time processing capability

- Compute Latency

- Network Latency

3. Resource Efficiency

- Model size

- CPU usage

- Memory footprint

- Battery consumption (for mobile)

4. Cross-Platform Support

- Web, mobile, desktop, embedded

- Operating systems supported

- Hardware compatibility

5. Ease of Integration

- SDK availability

- Documentation quality

- Code examples

- Community support

6. Production Readiness

- Reliability and stability

- Enterprise support

- Update frequency

- Licensing terms

If you're deciding whether to build, open-source, or buy, don't forget to check out the common pitfalls in Voice AI projects. Open-Source Overconfidence (The "Free Lunch" Fallacy) is more common than you think.

Measuring VAD Accuracy

VAD is a binary classifier, so standard classification metrics are used to measure the accuracy.

True Positive Rate (TPR): Percentage of speech frames correctly identified as speech. It answers the question of how good the VAD is at detecting actual speech. Ideally, TPR should be 100%, meaning it never misses speech.

False Positive Rate (FPR): Percentage of non-speech frames incorrectly identified as speech. It answers the question of how often VAD incorrectly triggers on non-speech. Ideally, FPR should be 0%, meaning no false alarms.
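
In terms of frame counts, TPR = TP / (TP + FN) and FPR = FP / (FP + TN). The sketch below computes both from ground-truth labels and VAD decisions, where 1 means speech and 0 means non-speech. The function names are illustrative.

```python
def confusion_counts(labels, predictions):
    """Count frame-level outcomes; 1 = speech, 0 = non-speech."""
    counts = {"tp": 0, "fn": 0, "fp": 0, "tn": 0}
    for truth, predicted in zip(labels, predictions):
        if truth == 1:
            counts["tp" if predicted == 1 else "fn"] += 1
        else:
            counts["fp" if predicted == 1 else "tn"] += 1
    return counts

def tpr_fpr(labels, predictions):
    """Return (TPR, FPR); a rate is 0.0 when its denominator is empty."""
    c = confusion_counts(labels, predictions)
    speech, non_speech = c["tp"] + c["fn"], c["fp"] + c["tn"]
    tpr = c["tp"] / speech if speech else 0.0
    fpr = c["fp"] / non_speech if non_speech else 0.0
    return tpr, fpr

labels      = [1, 1, 1, 1, 0, 0, 0, 0]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
rates = tpr_fpr(labels, predictions)
```

Here one of four speech frames is missed and one of four non-speech frames triggers a false alarm.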

The Accuracy Trade-Off

There is a trade-off between TPR and FPR: moving the threshold changes both in the same direction, so catching more speech also means accepting more false alarms.

- Lower threshold → More sensitive → Higher TPR, Higher FPR

- Higher threshold → Less sensitive → Lower TPR, Lower FPR
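
A small threshold sweep shows the effect. The labels and scores below are made-up toy data, and the function name is illustrative.

```python
def rates_at_threshold(labels, scores, threshold):
    """Return (TPR, FPR) when frames scoring >= threshold count as speech."""
    positives = sum(labels)
    negatives = len(labels) - positives
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / positives, fp / negatives

# Toy data: 1 = speech frame, 0 = non-speech frame
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]

sweep = {t: rates_at_threshold(labels, scores, t) for t in (0.25, 0.5, 0.75)}
```

On this data, raising the threshold from 0.25 to 0.75 lowers TPR from 1.0 to 0.5 and FPR from 0.5 to 0.0.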

ROC Curves: The Standard Evaluation Tool

Receiver Operating Characteristic (ROC) curves plot TPR against FPR across all possible thresholds. This provides a complete picture of VAD performance considering the trade-off. Below is the ROC curve showing the performance of WebRTC VAD, Silero VAD, and Cobra VAD.

How to read ROC curves:

- Each point represents a different threshold setting

- Top-left corner is perfect (100% TPR, 0% FPR)

- Higher curve = better performance overall

- Area Under Curve (AUC) summarizes performance (the larger the area, the better).
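
AUC can be approximated from a handful of measured (FPR, TPR) points with the trapezoidal rule. This is a minimal sketch: the function name is illustrative, and it assumes the points come from a curve that rises monotonically.

```python
def auc_trapezoid(points):
    """Area under an ROC curve given (fpr, tpr) pairs.

    The endpoints (0, 0) and (1, 1) are added automatically.
    """
    pts = sorted(set(points) | {(0.0, 0.0), (1.0, 1.0)})
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0  # trapezoid between neighbouring points
    return area

chance = auc_trapezoid([])              # diagonal line: no better than guessing
perfect = auc_trapezoid([(0.0, 1.0)])   # curve passes through the top-left corner
example = auc_trapezoid([(0.0, 0.5), (0.25, 0.75), (0.5, 1.0)])
```

A classifier that guesses at random has an AUC of 0.5 and a perfect one has an AUC of 1.0, which is why a larger area indicates a better VAD.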

Cobra VAD has the largest AUC, followed by Silero VAD and WebRTC VAD, showing that Cobra VAD is more accurate than both Silero VAD and WebRTC VAD.

Comparing Popular VAD Solutions (2026)

Although we have already established that Cobra VAD is more accurate than Silero VAD and WebRTC VAD, there is more to consider.

Cobra Voice Activity Detection

Pros:

- More accurate than alternatives - 2x better than WebRTC VAD

- Deep learning based, optimized for production

- Extremely lightweight (even runs on microcontrollers)

- Cross-platform (web, mobile, desktop, embedded, server)

- Real-time processing with minimal latency

- Enterprise support and documentation

Things to Consider:

- Requires Picovoice Console account (free tier available)

- Commercial licensing for production use

pyannote.audio

Pros:

- Deep-learning powered

- Good accuracy for research applications

- Free and open source

- Strong research community

Things to Consider:

- High resource usage (research-oriented, not optimized)

- Not designed for production deployment

- Built for speaker diarization

Silero VAD

Pros:

- Deep learning based (better accuracy than WebRTC)

- Free and open source

- Good performance

Things to Consider:

- PyTorch/ONNX dependency (limited optimization)

- Higher CPU usage - about 8.6x that of Cobra VAD

- Limited platform support (primarily Python)

- Less mature ecosystem than commercial solutions

Ten VAD

Pros:

- Deep learning based (better accuracy than WebRTC)

- Free and open source

- Good performance

- 48% faster than Silero VAD

Things to Consider:

- PyTorch/ONNX dependency (limited optimization)

- Larger footprint

- Less mature ecosystem than established solutions

- Primarily focused on C/Python

WebRTC VAD

Pros:

- Free and open source

- Lightweight

- Easy to integrate

- Well-documented

Things to Consider:

- Lower accuracy, especially in noise

- Traditional VAD - uses outdated Gaussian Mixture Models

- Limited customization

- Not suitable for challenging acoustic conditions

- Primarily web-focused, with C/C++ source code available

Implementation Guide

Ready to add voice activity detection to your application? Here's a step-by-step guide.

Step 1: Choose Your VAD Engine

Select the VAD solution that fits your requirements. We prepared a detailed comparison of popular VAD alternatives: WebRTC VAD, Silero VAD, and Cobra VAD.

For enterprise applications, we recommend Cobra VAD for its combination of accuracy, efficiency, and cross-platform support.

Step 2: Integrate the VAD into Your Application

Follow the documentation for the VAD engine of your choice.

Cobra Voice Activity Detection provides SDKs for all major platforms:

- Web Browsers: Chrome, Safari, Firefox, and Edge

- Mobile Devices: Android and iOS

- Desktop and Servers: Linux, macOS, and Windows

- Single Board Computers: Raspberry Pi

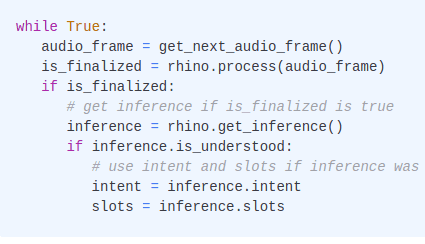

For Python, Cobra VAD is used through the pvcobra package.
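
A minimal sketch of the Python integration follows. It assumes `pvcobra` is installed (`pip install pvcobra`), that you have an AccessKey from Picovoice Console, and that you already have audio split into frames of `cobra.frame_length` 16-bit samples at `cobra.sample_rate`. The helper names `voice_probabilities` and `run_with_cobra` are illustrative and not part of the SDK. Check the official Python quick start for the authoritative usage.

```python
def voice_probabilities(engine, frames):
    """Yield one voice probability per frame.

    `engine` is any object exposing process(frame) -> float, such as a Cobra handle.
    """
    for frame in frames:
        yield engine.process(frame)

def run_with_cobra(access_key, frames):
    """Score pre-framed 16-bit PCM audio with Cobra and return the probabilities."""
    import pvcobra  # imported lazily so the helper above works without the SDK

    cobra = pvcobra.create(access_key=access_key)
    try:
        # Each frame must contain exactly cobra.frame_length samples
        return list(voice_probabilities(cobra, frames))
    finally:
        cobra.delete()  # release the engine's native resources
```

Keeping the scoring loop separate from engine creation makes it easy to test the surrounding logic with a stand-in engine before wiring in real audio capture.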

Step 3: Determine the Threshold for your Application

Cobra VAD outputs a floating-point value between 0 and 1 representing the probability of voice activity—where 0 indicates no human speech and 1 indicates definite human speech. Developers can set their own threshold and define custom logic based on their application's needs.

Setting the threshold low: If you're building a QSR drive-thru app, you can trigger at a lower probability, because false alarms are less costly than missing an order.

Setting the threshold high: If you're using VAD for robocalls, you can trigger at a higher probability, because starting the conversation before someone picks up is more costly than missing the first "hello".
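
If you have labeled recordings from your own environment, the threshold can be chosen from data rather than by feel. The sketch below picks the lowest threshold whose false positive rate stays within a budget, which keeps detection as sensitive as that budget allows. The function name and toy data are assumptions for illustration.

```python
def threshold_for_max_fpr(labels, scores, max_fpr):
    """Return the lowest observed score usable as a threshold with FPR <= max_fpr.

    Frames scoring >= threshold count as speech. Returns None if no
    candidate threshold meets the budget.
    """
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    for threshold in sorted(set(scores)):
        false_alarms = sum(1 for s in negatives if s >= threshold)
        if false_alarms / len(negatives) <= max_fpr:
            return threshold
    return None

# Toy data: 1 = speech frame, 0 = non-speech frame
labels = [1, 1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]

tolerant = threshold_for_max_fpr(labels, scores, max_fpr=0.25)  # drive-thru style
strict = threshold_for_max_fpr(labels, scores, max_fpr=0.0)     # robocall style
```

A looser false-alarm budget yields a lower threshold, and a zero-tolerance budget pushes it higher.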

Step 4: Test and Optimize

- Test in realistic noise conditions

- Try edge cases (whispering, shouting, coughing)

- Test with a variety of speakers and accents

- Adjust sensitivity threshold as needed

- Monitor the false rejection rate (FRR, missed speech) and false acceptance rate (FAR, false alarms) in production

Platform-Specific Tutorials

Choose your platform to get started with voice activity detection:

Voice Activity Detection for Web Applications

Mobile

Desktop & Server

Production Best Practices

Performance Optimization

- Process audio at the sample rate the engine expects (Cobra VAD accepts 16 kHz audio)

- Minimize memory allocations

- Use efficient audio capture

Track Key Metrics

- Average voice probability during speech

- False positive rate (non-speech detected as speech)

- False negative rate (speech missed)

- VAD processing latency

- CPU/memory usage
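
Some of these metrics can be collected with a thin wrapper around the VAD call. The class below is a hypothetical sketch: it tracks processing latency and the mean probability over frames at or above the threshold, which serves as a stand-in for "during speech". False positive and false negative rates still need labeled audio and cannot be derived this way.

```python
import time

class VadMonitor:
    """Collect simple runtime statistics around a VAD process function."""

    def __init__(self, threshold=0.5):
        self.threshold = threshold
        self.frames = 0
        self.voiced_frames = 0
        self.voiced_probability_sum = 0.0
        self.processing_seconds = 0.0

    def timed_process(self, process, frame):
        """Call process(frame), record its latency, and return the probability."""
        started = time.perf_counter()
        probability = process(frame)
        self.processing_seconds += time.perf_counter() - started
        self.frames += 1
        if probability >= self.threshold:
            self.voiced_frames += 1
            self.voiced_probability_sum += probability
        return probability

    def summary(self):
        """Return aggregate metrics; averages are 0.0 when there is no data."""
        return {
            "frames": self.frames,
            "voiced_frames": self.voiced_frames,
            "mean_latency_seconds": (
                self.processing_seconds / self.frames if self.frames else 0.0),
            "mean_voiced_probability": (
                self.voiced_probability_sum / self.voiced_frames
                if self.voiced_frames else 0.0),
        }
```

In use, `process` would be the engine's own method, for example a Cobra handle's `process`.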

Common Mistakes to Avoid

- Assuming lab accuracy matches production performance

- Choosing VAD based on cost alone without considering accuracy or efficiency

- Not testing with diverse speakers and acoustic conditions

- Ignoring latency requirements for real-time applications

Learn more about common pitfalls in Voice AI projects and considerations for build vs. buy decisions.

Frequently Asked Questions

What's the difference between VAD and wake word detection?

Voice Activity Detection (VAD) determines whether anyone is speaking. Wake Word Detection focuses on whether anyone is uttering a specific phrase (like "Hey Siri").

Learn more about wake word detection →

What's the difference between VAD and speaker recognition?

VAD answers: "Is someone speaking?" Speaker Recognition answers: "Who is speaking?" VAD doesn't identify individuals, only detects the presence of speech. To identify who's speaking, see Eagle Speaker Recognition.

Does Cobra VAD work in real-time?

Yes. Cobra Voice Activity Detection processes audio in small frame sizes with minimal algorithmic delay, enabling real-time applications such as meeting transcription, voice assistants, and real-time agent coaching.

Getting Started with Cobra Voice Activity Detection

Ready to add voice activity detection to your application? Try the Cobra Voice Activity Detection Web Demo.

- Lightweight on-device voice activity detection

- Industry-leading accuracy

- Cross-platform support (mobile, web, desktop, embedded)

- Free plan and trial available

Quick Start Guides:

- Cobra Voice Activity Detection Python Quick Start

- Cobra Voice Activity Detection Node.js Quick Start

- Cobra Voice Activity Detection Web Quick Start

- Cobra Voice Activity Detection Android Quick Start

- Cobra Voice Activity Detection C Quick Start

Additional Resources

- Cobra VAD Platform Page

- Voice Activity Detection Benchmark

- Open-Source Benchmark Repository

- What is Voice Activity Detection?

- Cobra VAD Announcement

- Cobra VAD Accuracy Improvements

Conclusion

Voice Activity Detection is the foundation of modern speech applications, yet it's often overlooked until poor performance creates user frustration. The difference between good and great VAD directly impacts user experience—from smooth voice assistant interactions to reliable video conferencing and accurate speech transcription.

In recent years, the VAD landscape has evolved significantly. Traditional signal processing approaches like WebRTC VAD served well for years, but they are no longer state-of-the-art. Modern deep learning solutions achieve dramatically better accuracy, and production-optimized engines like Cobra VAD prove that accuracy doesn't require sacrificing efficiency.

For production applications, the choice is clear: invest in accurate, production-ready VAD from the start. The cost of poor VAD—in user frustration, support tickets, and lost customers—far exceeds any licensing fees. Whether you choose a commercial solution with enterprise support or an open-source option depends on your specific needs, but accuracy and reliability should never be compromised.

Key Takeaways

- VAD is a binary classifier that detects speech vs non-speech in audio streams

- Measure accuracy using the ROC Curve

- Set the detection threshold based on your use case (prioritize TPR or FPR)

- Don't forget to test latency for real-time applications

- For most modern applications, deep learning-based VAD is the right choice—the question is whether you need commercial support or can work with open-source options.
