Researchers recently experimented with the wake words of Amazon Echo, Google Home, and Jibo Robot to study how branding affects voice user interfaces. They changed the wake word "Alexa" to "Hey Amazon" and observed that users perceived the device addressed as "Alexa" as more trustworthy, more competent, and more like a companion than the one addressed as "Hey Amazon." In the same spirit, when the Picovoice team is asked for guidance on choosing a wake word, we share technical best practices and encourage organizations to train several alternatives and A/B test them.

What is a voice user interface?

A voice user interface (VUI) lets people interact with machines, such as web or mobile applications, by speaking or conversing. Typical examples include voice-controlled warehouse applications, smart speakers, elevators, voice-enabled mobile and web applications, and voice search.

Voice UIs rely on spoken language understanding, which combines automatic speech recognition and natural language understanding to turn user speech into meaning. Speech is the most natural and intuitive way for people to communicate with each other, and voice user interfaces extend it to machines. Speaking is significantly faster than typing, and voice interfaces are easier to use and more accessible.

Many digital-first enterprises follow human-centred design principles and iterate on their graphical user interfaces, but rarely on their voice user interfaces. This is not surprising, given that many enterprises cannot afford to experiment with multiple wake words as the researchers did. Picovoice makes it possible to train multiple voice AI models. Here is our simple guide, with five tips for designing user-experience-driven voice interfaces.

How do you design a user-centric voice user interface?

1. Identify Sample Interactions

Defining sample interactions is the first and most crucial step. It clarifies what problem the user has and how the product will solve it. Picovoice always asks customers for a few sample voice interactions and their goals to help them find the best solution. A voice product that doesn't address users' needs and behavioural patterns will most likely fail, regardless of how accurate its engines are.

Sample interactions can be drafted in a notebook, on a whiteboard, or with digital tools. For example, if IT help desk specialists are overwhelmed by the number of queries, listing sample interactions such as "reset my password" or "activate my device" clearly shows what the product should do.

Identifying sample interactions also helps secure buy-in from internal and external stakeholders, especially when supported by data or user feedback. For example, knowing that 57% of employees had to reset their passwords in the last 90 days makes the need and value easy to demonstrate.

2. Create Low-Fidelity Mock-ups for Multimodal Applications

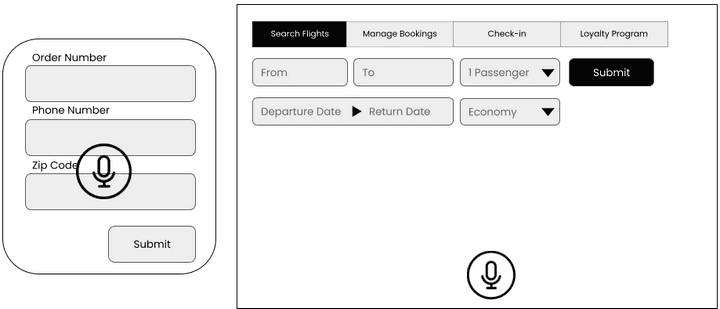

Mobile and web applications are the first things one thinks of when multimodality is discussed. However, screens are everywhere: smartwatches, in-car entertainment (ICE) systems, kiosks, handheld readers… While designing multimodal applications, voice user interface design and graphic user interface design should go hand-in-hand. If the goal is to add voice typing capability to your website in order to help users fill out a form, users need to be able to see what they need to provide. Depending on the use case, low-fidelity mock-ups could be shared with users to gather their feedback. Figure 1: Poor attempts to design multi-modal experiences. Most likely voice is added as a feature after the graphic user interfaces are designed. On the left, the push-to-talk button overlaps with text boxes. On the right, the push-to-talk button is far from the other elements, doesn't clearly indicate what it does.


3. Design the Flow

Users interact with machines to achieve a goal, such as retrieving information or performing a task. Going back to the IT help desk example, if a user says "reset my password", the next options should be clear. The decision tree and its possible branches should be laid out so that users can reach their goals. Once a user says "reset my password", the flow should clearly define how authentication will be performed and how the system will respond to both successful and failed authentication attempts.
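One way to make such a flow concrete before any speech engine is involved is to write it down as a small state machine. The sketch below is hypothetical: the state names, the prompts, the `auth_success`/`auth_failure` events, and the three-attempt limit are illustrative assumptions rather than part of any Picovoice product. It shows how each branch of the "reset my password" flow, including failed authentication, gets an explicit response.

```python
from dataclasses import dataclass, field

# Hypothetical limit: escalate to a human after three failed attempts.
MAX_ATTEMPTS = 3


@dataclass
class ResetPasswordFlow:
    """Illustrative state machine for the IT help desk 'reset my password' flow."""

    state: str = "start"
    attempts: int = 0
    transcript: list[str] = field(default_factory=list)

    def say(self, text: str) -> None:
        # Record what the assistant would say; a real product would synthesize speech.
        self.transcript.append(text)

    def handle(self, event: str) -> str:
        """Advance the flow on one user utterance or system event and return the new state."""
        if self.state == "start" and event == "reset my password":
            self.state = "authenticating"
            self.say("To verify your identity, please say your employee ID.")
        elif self.state == "authenticating" and event == "auth_success":
            self.state = "done"
            self.say("Thanks. A password reset link has been sent to your email.")
        elif self.state == "authenticating" and event == "auth_failure":
            self.attempts += 1
            if self.attempts >= MAX_ATTEMPTS:
                # Failed-authentication branch: hand over instead of looping forever.
                self.state = "escalated"
                self.say("I couldn't verify you. Connecting you to a help desk specialist.")
            else:
                self.say("That didn't match our records. Please try again.")
        else:
            # Fallback for anything the flow does not expect in the current state.
            self.say("Sorry, I didn't understand. You can say 'reset my password'.")
        return self.state
```

For instance, calling `handle("reset my password")`, then `handle("auth_failure")`, then `handle("auth_success")` walks through the start, retry, and success branches in order. Writing the flow this way makes it easy to spot branches that have no defined response.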

4. Prototype

The fourth step is to build a prototype that is just viable enough to demonstrate value to users and gather their feedback, without investing too much in the product. While the term MVP (minimum viable product) became popular in Silicon Valley in the 2000s, prototyping has a long history in product development.

5. User Testing and Iteration

Prototypes should be tested with users without leading or directing them. For example, tests can be performed by prompting users with a task; how they interact with the product provides insights for further development. If users cannot be observed during testing, additional feedback can be gathered through open-ended questions or surveys. Different versions of a product can be randomly assigned to users for A/B testing, as the researchers did with "Alexa" and "Hey Amazon".
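A minimal sketch of the assignment step is shown below, assuming each test participant has a stable user ID. Instead of a fresh random draw, it hashes the ID (a common substitute for true random assignment) so users are split roughly evenly across variants while a returning user always gets the same one. The variant list, the `wake-word-test` experiment name, and both helper functions are hypothetical, and the results format (user ID, task completed) is an assumption for illustration.

```python
import hashlib
from collections import defaultdict

# Hypothetical variants under test; any product versions could go here.
VARIANTS = ["Alexa", "Hey Amazon"]


def assign_variant(user_id: str, experiment: str = "wake-word-test") -> str:
    """Deterministically map a user to one variant.

    Hashing "experiment:user_id" spreads users roughly evenly across variants
    and guarantees that the same user always sees the same variant.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return VARIANTS[int(digest, 16) % len(VARIANTS)]


def completion_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Compute, per variant, the share of users who completed the test task."""
    totals: dict[str, int] = defaultdict(int)
    completed: dict[str, int] = defaultdict(int)
    for user_id, done in results:
        variant = assign_variant(user_id)
        totals[variant] += 1
        completed[variant] += int(done)
    return {variant: completed[variant] / totals[variant] for variant in totals}
```

Comparing completion rates is only a starting point; a real study would also check whether the difference between variants is statistically significant before acting on it.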

The process doesn't end here. Product development is iterative, and user testing and feedback should always be at the centre of it.
