You have read Snow Crash by Neal Stephenson, watched Free Guy, heard about Facebook’s, Microsoft’s or NVIDIA’s plans for the Metaverse, or seen the Gucci and Roblox collaboration, so you probably do not want to see another post on the Metaverse. Promise, this one is different. If you’re not there yet, let’s catch you up quickly. The Metaverse is essentially a set of connected communities where people can interact with each other to work, socialize, shop or play. It consists of extended reality: always-on digital environments powered by AR, VR or a mix of the two. In this article, we’ll discuss the benefits of on-device voice recognition for AR, VR and MR applications so you can start building with the web, Android, iOS or Unity SDKs.

The Metaverse may seem far away when you think of waiting almost a minute for Alexa to play the next song. When you rely on cloud providers for voice recognition, the shortcomings of broadband connectivity and cloud latency hinder the experience. However, we have a solution, at least for the voice part. Let’s say you have an AR-enabled application where users can wear digital clothes, and a user wants to change the color.

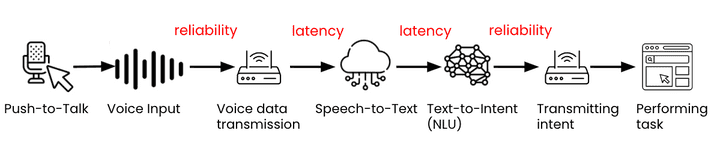

Standard Approach

The user presses a push-to-talk button before talking. Then the voice data is recorded and sent to the cloud for transcription. How fast the data is sent depends on the user’s internet service provider (ISP). Next, the text is passed to an NLU (Natural Language Understanding) service to infer the user’s intent from the text. The latency of these steps depends on the cloud service provider’s performance and the user’s proximity to the data center. Then the command is sent back to the device to carry out the task, and once again this part relies on the ISP. Finally, the user can see that the color has changed.

It may sound exhausting. The next time Alexa takes a while to play the next song, just think about this long process.
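To make the round trip described above concrete, here is a minimal sketch that lists the four stages and what each one's latency depends on, then adds up a per-stage latency budget. The stage names, the `total_latency_ms` helper and the numbers in `example_ms` are all illustrative assumptions, not measurements of any real service.

```python
from typing import Mapping

# Stages of the cloud round trip and what each stage's latency depends on.
# Stage names are hypothetical labels for the steps described in the text.
CLOUD_STAGES = {
    "upload_audio": "user's ISP",
    "speech_to_text": "cloud provider",
    "nlu_intent": "cloud provider",
    "return_command": "user's ISP",
}


def total_latency_ms(stage_ms: Mapping[str, float]) -> float:
    """Sum the per-stage latencies; raises KeyError if a stage is missing."""
    return sum(stage_ms[stage] for stage in CLOUD_STAGES)


# Placeholder values for illustration only, NOT measured numbers.
example_ms = {
    "upload_audio": 120,
    "speech_to_text": 300,
    "nlu_intent": 150,
    "return_command": 80,
}

for stage, dependency in CLOUD_STAGES.items():
    print(f"{stage}: {example_ms[stage]} ms (depends on {dependency})")
print("total:", total_latency_ms(example_ms), "ms")
```

The point of the sketch is structural: two of the four stages depend on the user's connection and two on the cloud provider, so none of them is under the application developer's control.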

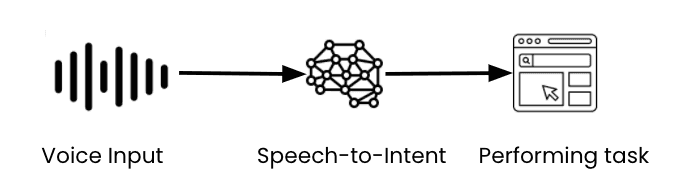

Picovoice Approach

Porcupine Wake Word eliminates the need for a push-to-talk button, so the user can start directly with a voice command. Rhino Speech-to-Intent infers the intent directly from speech without a text representation. Orca Text-to-Speech reads out machine-generated responses when needed. During the whole process, voice data is processed locally on the device. Because the voice data is never transmitted to the cloud, users do not face the reliability and latency issues that come with it.

The user says “Porcupine (or your branded wake word), change the color to black”, and voilà!
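Once the intent has been inferred on the device, the application only needs to map it to an action. The sketch below shows one way that mapping could look for the color-change command. It assumes an inference result made of an understood flag, an intent name and a dictionary of slots. The `changeColor` intent, the `color` slot, the `garment` state and the `handle_inference` function are hypothetical names chosen for this example; in a real project the intent and slot names come from the context you design.

```python
from typing import Dict, Optional

# Hypothetical application state: the color of the digital garment.
garment = {"color": "white"}


def handle_inference(
    is_understood: bool, intent: Optional[str], slots: Dict[str, str]
) -> bool:
    """Apply a voice command; return True if the garment color was changed."""
    if not is_understood:
        # The spoken command did not match any known intent.
        return False
    if intent == "changeColor" and "color" in slots:
        garment["color"] = slots["color"]
        return True
    return False


# Simulates the result of "change the color to black" after the wake word.
handle_inference(True, "changeColor", {"color": "black"})
print(garment["color"])
```

Everything here runs locally, so the time between the end of the command and the color change does not depend on the network.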

What’s more with Picovoice?

Picovoice technology is not only fast but also mindful of power consumption. For example, Porcupine, the wake word engine, uses less than 4% of the CPU on a Raspberry Pi 3 and detects multiple wake words concurrently without any additional footprint. You can develop voice products for the Metaverse by using Picovoice SDKs for web, Android, iOS or Unity.

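```python
import pvporcupine

# access_key, keyword_paths and audio_frame() are placeholders
# that your application must provide.
o = pvporcupine.create(access_key=access_key, keyword_paths=keyword_paths)

while True:
    # process() returns the index of the detected keyword, or -1 if none
    keyword_index = o.process(audio_frame())
    if keyword_index >= 0:
        # Detection callback
        pass
```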

Watch the demo!

To show how it works, we’ve built a Voice-Controlled VR Video Player with Unity.

Start Building!

Adding on-device voice recognition to AR, VR or MR applications is just a few clicks away. Explore the Metaverse with Picovoice’s Free Plan and build your prototype in minutes.

AR, VR and MR stand for Augmented Reality, Virtual Reality and Mixed Reality, respectively. Extended Reality, abbreviated as XR, is an umbrella term for AR, VR and MR.