Latency is a major drawback of LLM-based voice assistants. The awkward silence while waiting for the AI agent's response defeats the purpose of using cutting-edge GenAI to create humanlike interactions. The root cause is the combined delay of the LLM generating the response token by token and then the text-to-speech (TTS) engine synthesizing the audio. The state-of-the-art remedy is to rush the TTS to synthesize audio faster and stream it out as soon as possible. Yet it's just a remedy, not a solution, as the TTS still has to wait for the LLM's response before starting synthesis.

Today, we are introducing Orca Streaming Text-to-Speech, which plans ahead and synthesizes speech in lockstep with the LLM as it generates text, reducing latency by up to 10X.

We argue that text-to-speech engines in the LLM era should evolve into Plan Ahead, Don't Rush It (PADRI) engines.

Plan Ahead, Don't Rush It

Orca starts synthesizing audio while the LLM is still producing the response; that is, Orca can handle streaming text input. At the time of writing, we found no other TTS system with this capability. Other engines can only rush it by streaming the audio after receiving the entire LLM output.

The animation above shows this phenomenon using a real-world example that is described in more detail in the next section.

Because Orca is a PADRI engine, it can start synthesizing speech much earlier and finish reading before OpenAI TTS can even begin, reducing audio latency by 10X.
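What it means for a TTS engine to accept streaming text input can be sketched as a front end that groups LLM tokens into chunks ready for synthesis before the full response exists. This is a hypothetical illustration, not Orca's actual algorithm: the function name `stream_text_chunks`, the `min_chars` threshold, and the sentence/word-boundary rule are all assumptions chosen for clarity.

```python
from typing import Iterable, Iterator


def stream_text_chunks(tokens: Iterable[str], min_chars: int = 20) -> Iterator[str]:
    """Group a streaming LLM token sequence into text chunks ready for synthesis.

    Hypothetical rule: release the buffer at the end of a sentence, or at a word
    boundary once it holds at least `min_chars` characters.
    """
    buffer = ""
    for token in tokens:
        # A token that starts with whitespace begins a new word, so the buffer
        # currently ends on a word boundary and can be released if long enough.
        if token[:1].isspace() and len(buffer) >= min_chars:
            yield buffer
            buffer = ""
        buffer += token
        # Sentence-ending punctuation is a natural place to hand text to the synthesizer.
        if buffer.rstrip().endswith((".", "!", "?")):
            yield buffer
            buffer = ""
    # Flush any remaining text once the LLM has finished generating.
    if buffer:
        yield buffer
```

For example, feeding the tokens `["Sure", ",", " here", " is", " a", " quick", " answer", "."]` with `min_chars=12` yields `"Sure, here is"` and then `" a quick answer."`, so the first chunk can go to the synthesizer while the LLM is still producing the rest. Buffering a few words instead of forwarding every token is a design choice assumed here, on the premise that a synthesizer benefits from some context for natural-sounding speech.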

ChatGPT 10X Faster

The video above shows a real-world voice-to-voice interaction with ChatGPT. The interaction begins with Picovoice's Cheetah Streaming Speech-to-Text transcribing the user's speech in real time. The transcribed text is then sent to ChatGPT via the OpenAI Chat Completions API to generate a response. Finally, the LLM's response is synthesized into speech using either Picovoice's Orca Streaming Text-to-Speech or OpenAI TTS.

Orca begins audio synthesis within 0.2 seconds, while OpenAI TTS takes about 2 seconds to produce the first audio, making conversations with Orca feel significantly more seamless. With Orca, the main bottleneck in voice interactions becomes the LLM response time (0.5 seconds in the video above) rather than the text-to-speech system. This is a game-changer in the current technology landscape, where the TTS wait time is known to be the major limitation.

The speedup doesn't come from Orca being a faster engine than OpenAI TTS, nor from better hardware: OpenAI TTS runs on a data-center-grade NVIDIA GPU, while Orca in the demo above runs on a consumer-grade x86 AMD CPU.

Not All Streaming Is Equal

Amazon Polly, Azure Text-to-Speech, ElevenLabs, and OpenAI all offer streaming TTS. The catch is that their streaming refers only to audio output streaming, not text input streaming. Hence, all these APIs share the limitation discussed above: they must wait for the LLM to finish, while Orca Streaming Text-to-Speech can start synthesis well ahead of time and keep end-to-end latency under a second regardless of the LLM response length.
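Why output-only streaming can't close the gap can be seen in a simple model of time to first audio. The sketch below assumes the LLM emits tokens at a fixed interval and that both kinds of TTS take the same fixed time from receiving text to producing audio; the class `PipelineTiming`, its fields, and every number used with it are hypothetical, not measurements from the demo.

```python
from dataclasses import dataclass


@dataclass
class PipelineTiming:
    """Hypothetical timing parameters, in milliseconds."""
    token_interval_ms: int   # time between consecutive LLM tokens
    response_tokens: int     # total length of the LLM response
    first_chunk_tokens: int  # tokens an input-streaming TTS needs before it can start
    tts_first_audio_ms: int  # TTS delay from receiving text to emitting first audio


def first_audio_output_streaming(t: PipelineTiming) -> int:
    """Output streaming only: synthesis starts after the LLM's last token arrives."""
    return t.response_tokens * t.token_interval_ms + t.tts_first_audio_ms


def first_audio_input_streaming(t: PipelineTiming) -> int:
    """Input streaming: synthesis starts as soon as the first chunk of text arrives."""
    tokens_needed = min(t.first_chunk_tokens, t.response_tokens)
    return tokens_needed * t.token_interval_ms + t.tts_first_audio_ms


if __name__ == "__main__":
    for length in (100, 400):
        timing = PipelineTiming(token_interval_ms=20, response_tokens=length,
                                first_chunk_tokens=5, tts_first_audio_ms=100)
        print(length, first_audio_output_streaming(timing), first_audio_input_streaming(timing))
```

With these made-up numbers, output-only streaming produces first audio at 2,100 ms for a 100-token response and 8,100 ms for a 400-token one, while input streaming stays at 200 ms for both. Giving both engines the same `tts_first_audio_ms` isolates the effect of when synthesis starts, which matches the point that the gain is not about raw engine speed.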

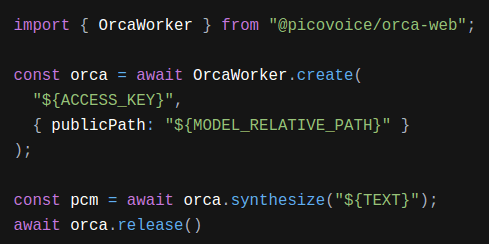

Start Building for Free

Are you technical? Start building with Orca Streaming Text-to-Speech 💯 free using Picovoice's Forever-Free Plan.

We promise you won't need to talk to a salesperson, and we never ask for your credit card information.

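```python
import pvorca

access_key = "${ACCESS_KEY}"  # your AccessKey from Picovoice Console
orca = pvorca.create(access_key)
stream = orca.stream_open()
speech = stream.synthesize(get_next_text_chunk())  # get_next_text_chunk() is a placeholder for your LLM output
```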

Applications of PADRI TTS

LLMs outperform traditional NLP techniques when it comes to use cases that require more natural, open-ended conversations and a broader understanding of context, such as:

- Customer Support: requires handling a wide range of customer inquiries across topics and follow-up questions.

- Healthcare Services: requires engaging in empathetic conversations to assist patients and healthcare providers with symptom checking or to encourage adherence to treatment plans.

- Knowledge Management: requires virtual assistants and tutors to be engaging and efficient to improve employee productivity. A 6-minute daily delay per employee in a 10,000-person company adds up to 1,000 lost hours per day, equivalent to ~145 extra employees.
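The productivity estimate is simple arithmetic: 6 minutes × 10,000 employees = 60,000 minutes, or 1,000 hours per day. Turning those hours into an employee count requires an assumption about productive hours per employee per day, which the text doesn't state; the hypothetical helpers below make that parameter explicit. About 6.9 productive hours per day reproduces the ~145 figure (1,000 / 6.9 ≈ 145), while a full 8-hour day gives 125.

```python
def hours_lost_per_day(delay_minutes_per_employee: float, headcount: int) -> float:
    """Company-wide working hours lost per day to a per-employee delay."""
    return delay_minutes_per_employee * headcount / 60


def employee_equivalents(hours_lost: float, productive_hours_per_employee: float) -> float:
    """Number of employees whose daily working time equals the hours lost."""
    return hours_lost / productive_hours_per_employee


if __name__ == "__main__":
    lost = hours_lost_per_day(6, 10_000)
    print(lost)                                    # 1000.0
    print(round(employee_equivalents(lost, 6.9)))  # 145
    print(employee_equivalents(lost, 8))           # 125.0
```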

Just as LLMs have leveled up traditional NLP techniques, PADRI TTS takes text-to-speech to new heights, unlocking humanlike spoken interactions.