Most enterprises do not train their own Machine Learning models. Instead, they buy, deploy, and run them. As a result, some model training techniques and concepts come up in business conversations, and business executives need to understand them to make sound decisions. This article explains Transfer Learning and Model Fine-Tuning, and the difference between them.

What’s Transfer Learning?

The traditional approach to training Machine Learning models was to train each model from scratch for a specific task. It’s a costly and time-consuming process that requires data collection, cleaning, labeling, framework decisions, and lots of trial and error. Transfer Learning is a training method that addresses these challenges. It refers to the ability of machines to reuse knowledge gained on one task to boost performance on a related task. For example, an image recognition model could apply what it learned about recognizing cars to recognizing trucks. Transfer Learning shortens time-to-market, allowing developers to achieve high accuracy and performance with fewer resources than training from scratch.

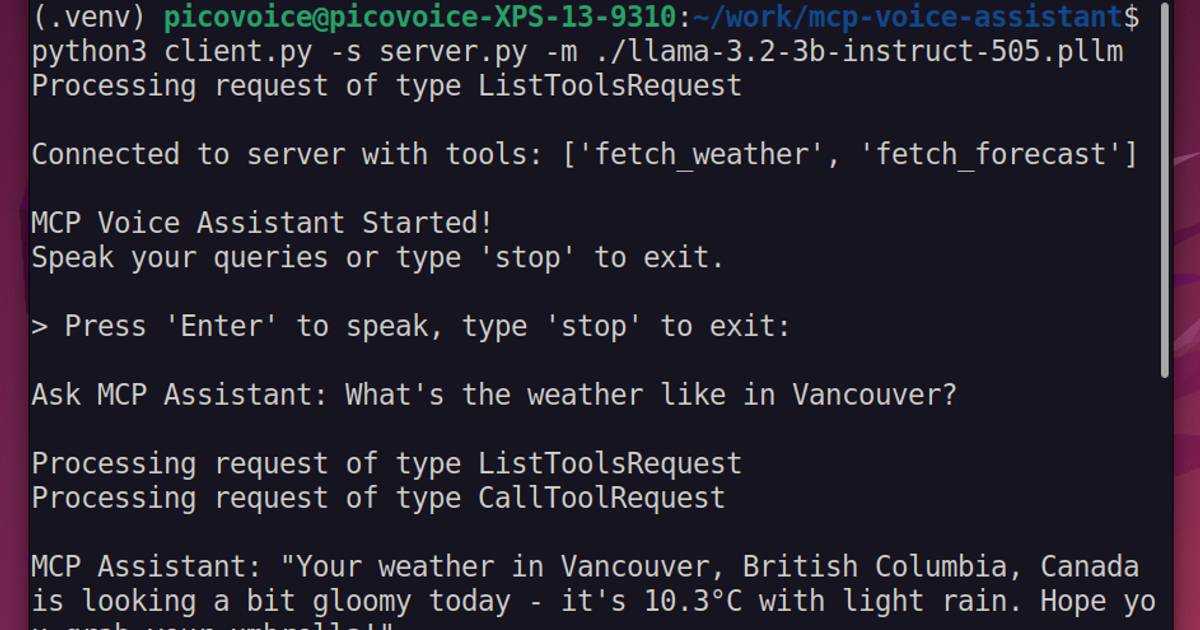

Using transfer learning, Picovoice’s first product, Porcupine Wake Word, shortened the AI model generation process from months to hours, and then to seconds, while legacy players still train models from scratch.

What’s Model Fine-Tuning?

The dictionary definition of fine-tuning is adjusting something precisely to bring it to the highest level of performance or effectiveness. The same idea applies to Machine Learning models: Fine-Tuning refers to further training a pre-trained model on task-specific data to improve its performance on that task. Instead of training their own models, many enterprises fine-tune generic, pre-trained models to improve accuracy for their use case.

What is the difference between Transfer Learning and Fine-Tuning?

Both Transfer Learning and Fine-Tuning build on a pre-trained model to learn a new task. Because they sound similar, some people use the terms interchangeably.

However, they differ in how much of the pre-trained model changes. Transfer Learning reuses the general patterns and features a pre-trained model learned from its original dataset, treating the model as a knowledge base for solving new but similar problems. Fine-Tuning goes further: it continues training the pre-trained model on a task-specific dataset and adjusts its weights on that new data. This lets the model learn task-specific details and nuances while retaining its general knowledge.
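The contrast can be made concrete with a toy example. The sketch below is a hypothetical illustration, not Picovoice’s training code. It assumes a tiny two-part model (a feature weight vector `w` followed by a scalar head `v`) and made-up synthetic linear tasks, and it draws the line the way many practitioners do: transfer learning as feature extraction freezes the pre-trained feature weights and trains only the head, while fine-tuning updates every weight on the new data. The names `predict`, `train`, `make_task`, and `update_features` are illustrative, not part of any library.

```python
import random


def predict(w, v, x):
    """Toy two-part model: a feature h = w . x, then a head y = v * h."""
    h = sum(wj * xj for wj, xj in zip(w, x))
    return h, v * h


def mse(w, v, data):
    """Mean squared error of the model over (x, target) pairs."""
    return sum((predict(w, v, x)[1] - t) ** 2 for x, t in data) / len(data)


def train(w, v, data, update_features, lr=0.05, epochs=300):
    """Per-sample gradient descent on 0.5 * (y - t)^2.

    update_features=False keeps the feature weights w frozen and trains only
    the head v (transfer learning as feature extraction).
    update_features=True updates every weight (fine-tuning).
    Returns new weights; the caller's list w is not modified.
    """
    w = list(w)
    for _ in range(epochs):
        for x, t in data:
            h, y = predict(w, v, x)
            err = y - t  # derivative of 0.5 * (y - t)^2 with respect to y
            if update_features:
                # d/dw_j = err * v * x_j, using v from before this step
                w = [wj - lr * err * v * xj for wj, xj in zip(w, x)]
            v -= lr * err * h  # d/dv = err * h
    return w, v


def make_task(coef, n=20, seed=0):
    """Hypothetical synthetic task: t = coef . x for x drawn from [-1, 1]^2."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        data.append((x, sum(c * xj for c, xj in zip(coef, x))))
    return data


# "Pre-training" from scratch on a generic source task.
source = make_task([2.0, 1.0], seed=0)
pre_w, pre_v = train([0.5, 0.5], 0.5, source, update_features=True, epochs=500)

# A related target task: similar to the source, with its own nuance.
target = make_task([2.2, 1.3], seed=1)

# Transfer learning: reuse the frozen pre-trained features, retrain the head.
tl_w, tl_v = train(pre_w, pre_v, target, update_features=False)

# Fine-tuning: keep training every pre-trained weight on the target data.
ft_w, ft_v = train(pre_w, pre_v, target, update_features=True)

print(f"pre-trained model on target: {mse(pre_w, pre_v, target):.6f}")
print(f"transfer learning:           {mse(tl_w, tl_v, target):.6f}")
print(f"fine-tuning:                 {mse(ft_w, ft_v, target):.6f}")
```

Both approaches start from the same pre-trained weights, so the only difference is which weights are allowed to change. Because the target pattern in this toy setup is not an exact rescaling of the source pattern, the frozen features cannot capture it fully and fine-tuning ends with a lower loss; in exchange, transfer learning trains fewer parameters, which is why it is cheaper.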

Human learning offers a simple analogy. Imagine you need to learn a new task. If you have no information about it, you may transfer skills from related experiences and figure out how to do it, or you may read a book about it and apply what you learn on top of your existing knowledge. In both cases, you aim to complete a new task without ignoring everything you know and starting from scratch. Which approach you choose depends on the resources available and the requirements of the situation, i.e., whether you can rely on transferable skills and knowledge or have task-specific data and information to learn from.

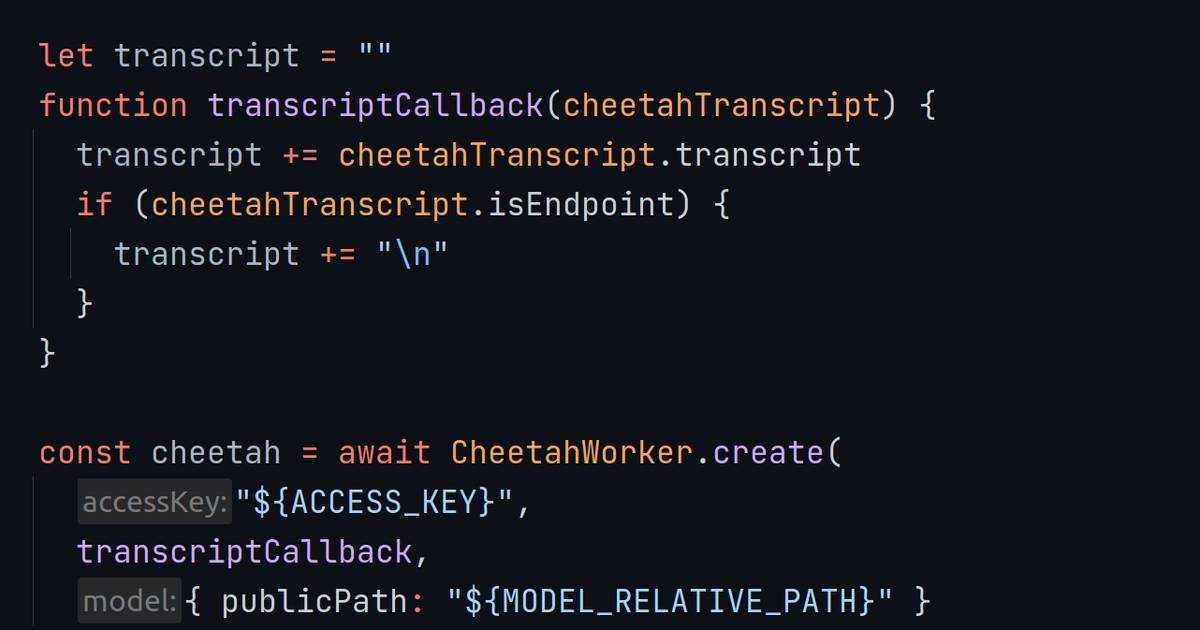

Picovoice leverages transfer learning while training its models and allows users to fine-tune them further on the Picovoice Console or via Picovoice Consulting engagements.
