Part of an enterprise voice AI project that is struggling, stalled, or already failed? You're not alone. Gartner predicts that over 40% of agentic AI projects will be canceled by 2027 due to escalating costs and unclear business value.

However, failure isn't inevitable. The enterprises that succeed learn from others' mistakes and put strategic safeguards in place from day one. This guide identifies the seven most common pitfalls that kill enterprise voice AI projects and provides actionable strategies for avoiding or recovering from each, whether you're planning your first implementation or trying to salvage a troubled deployment.

The Hidden Cost of Voice AI Failures

Before diving into solutions, understand what's at stake:

- Average Failed Project Cost: $500K - $2M+ in sunk investments.

- Timeline Impact: 12-24 months of delayed competitive advantage.

- Organizational Damage: Lost credibility for future AI initiatives, as stakeholders start to view any new AI project as risky.

- Opportunity Cost: Losing the first-mover advantage to competitors.

Pitfall #1: Lack of Understanding of the User

Problem: Organizations invest in voice AI projects driven by technology hype or competitive fear rather than genuine user needs and experience design.

Example: An oil & gas company invested in automating maintenance tasks using generic AI models, only to discover that field technicians couldn't use the system effectively because of industrial noise and specialized terminology. The project was abandoned after 14 months.

Prevention:

- Start with comprehensive user research, not technology selection

- Include end-users in the design process from day one

- Test in actual working conditions, not controlled environments

- Define success metrics based on user outcomes, not technical features promoted by vendors

Pitfall #2: Mismatched Use Cases

Problem: Choosing agentic voice AI for scenarios better suited to traditional voice agents, driven by technology excitement rather than practical business needs.

Example: A major retailer hired technology consultants to improve the accuracy of the legacy system that helps store associates with tasks such as inventory inquiries. The result was an 8-figure agentic AI project proposal, even though the actual need was simply to replace the legacy voice AI technology with a modern equivalent.

Prevention:

- Always start with the business problem, not the technology capability

- Use our decision framework: Choose voice agents for predictable workflows, agentic AI for complex reasoning

- Apply the minimum viable complexity principle: build the simplest solution that solves the business problem

- Reserve advanced AI for genuine competitive differentiation opportunities

- Choose a vendor whose goals align with yours

Pitfall #3: Underestimating Technology Limitations

Problem: Ignoring fundamental AI limitations, such as hallucinations in large language models (LLMs), which can derail enterprise deployments.

Example: A healthcare organization deployed an LLM-powered voice assistant for patient triage that occasionally provided contradictory medical advice, forcing a complete system shutdown and regulatory review.

Prevention:

- Start with low-risk use cases and gradually expand

- Implement extensive testing with real-world scenarios

- Design robust guardrails and validation systems
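As a rough illustration of a guardrail layer, the sketch below checks an LLM-generated reply before it is spoken and substitutes a safe fallback (a handoff to staff) when the request is out of scope or the draft touches a forbidden topic. It assumes an upstream intent classifier already exists; the function name `apply_guardrails`, the blocked-pattern list, the intent names, and the fallback wording are all hypothetical. Keyword matching alone is far too weak for something like medical triage, so treat this as the shape of the check, not a sufficient safeguard.

```python
import re
from dataclasses import dataclass

# Hypothetical policy: topics the assistant must never address on its own.
BLOCKED_PATTERNS = [
    re.compile(r"\b(diagnos(e|is)|prescri(be|ption)|dosage)\b", re.IGNORECASE),
]
SAFE_FALLBACK = "I can't help with that here. Let me connect you with a member of our staff."

@dataclass
class GuardrailResult:
    text: str        # what will actually be sent to text-to-speech
    escalated: bool  # True if the LLM draft was replaced by the fallback
    reason: str = ""

def apply_guardrails(draft: str, intent: str, allowed_intents: set[str]) -> GuardrailResult:
    """Validate an LLM draft reply before it is spoken (hypothetical helper)."""
    if intent not in allowed_intents:
        return GuardrailResult(SAFE_FALLBACK, True, f"out-of-scope intent: {intent}")
    if not draft.strip():
        return GuardrailResult(SAFE_FALLBACK, True, "empty draft")
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(draft):
            return GuardrailResult(SAFE_FALLBACK, True, "blocked topic")
    return GuardrailResult(draft, False)

allowed = {"clinic_hours", "appointment_scheduling"}
print(apply_guardrails("The clinic opens at 8 a.m.", "clinic_hours", allowed).escalated)      # False
print(apply_guardrails("Double your dosage tonight.", "clinic_hours", allowed).escalated)      # True
print(apply_guardrails("Sure, here's what to take.", "medication_advice", allowed).escalated)  # True
```

Production systems would likely add stronger validators (policy classifiers, checks against approved content), but the control flow stays the same: validate first, speak second, escalate when in doubt.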

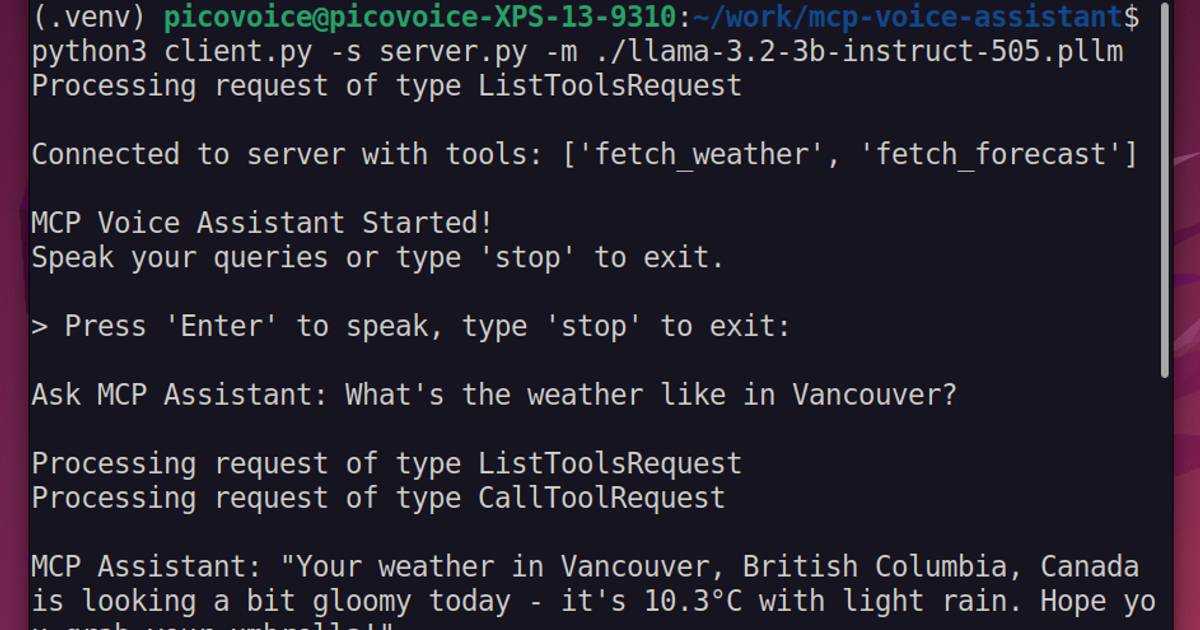

Pitfall #4: Open-Source Overconfidence (The "Free Lunch" Fallacy)

Problem: Underestimating the total cost of ownership, performance gaps, and expertise requirements when building enterprise systems on open-source foundations.

Example: A financial services firm hired a team of machine learning engineers and developers and spent 12 months trying to productionize an open-source AI agent before switching to a commercial solution to avoid slipping further past its market window.

Prevention:

- Compare the 5-year total cost of ownership, not just initial licensing costs

- Assess the availability of enterprise-grade support and SLAs

- Evaluate compliance, security, and governance capabilities

- Consider talent availability and retention costs for specialized skills

- Consider switching to an enterprise solution if hitting your market window justifies the added cost
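A 5-year TCO comparison is simple arithmetic, but writing it down forces every recurring cost onto the table, including the engineering headcount that open-source builds tend to hide. The sketch below uses a hypothetical `CostModel` with made-up figures purely for illustration; it ignores discounting and the cost of a missed market window, both of which you may want to add.

```python
from dataclasses import dataclass

@dataclass
class CostModel:
    """Hypothetical annual cost breakdown for one implementation option."""
    name: str
    upfront: float         # one-time: initial build, integration, migration
    annual_license: float  # recurring license or subscription fees
    annual_staff: float    # fully loaded cost of engineers who build and maintain it
    annual_infra: float    # hosting, compute, monitoring

    def tco(self, years: int = 5) -> float:
        """Undiscounted total cost of ownership over `years`."""
        annual = self.annual_license + self.annual_staff + self.annual_infra
        return self.upfront + years * annual

# Illustrative figures only, not benchmarks.
options = [
    CostModel("open-source build", upfront=300_000, annual_license=0,
              annual_staff=600_000, annual_infra=120_000),
    CostModel("commercial platform", upfront=100_000, annual_license=250_000,
              annual_staff=150_000, annual_infra=50_000),
]
for option in options:
    print(f"{option.name}: ${option.tco():,.0f}")
```

With these invented inputs, the open-source build totals $3,900,000 over five years versus $2,350,000 for the commercial platform, despite its $0 license fee, because staffing dominates. Your own numbers may well point the other way.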

Pitfall #5: Engineering Gold-Plating (The "Perfect Solution" Trap)

Problem: Engineering teams building overly sophisticated solutions when simpler approaches would deliver faster business value, turning complexity into a liability rather than an asset.

Example: A manufacturing company spent 8+ months developing a complex agentic system for facility scheduling, while a rule-based voice agent could have launched in weeks and met 80% of needs at a fraction of the cost.

Prevention:

- Separate "must-have" from "nice-to-have" features

- Apply the 80/20 rule: Solve 80% of use cases with 20% of complexity

- Start with minimum viable complexity and iterate based on real user feedback

- Measure business impact, not technical sophistication

- Set hard deadlines for deployment to prevent endless optimization
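One way to apply the 80/20 rule is to look at what users actually ask for before deciding how much to build. The sketch below assumes you already have a log of requests labeled by intent (the intent names and counts are hypothetical) and returns the smallest set of most frequent intents that together cover a target share of traffic; those are the candidates for a first, simple release.

```python
from collections import Counter

def pareto_intents(requests: list[str], target: float = 0.8) -> list[str]:
    """Smallest set of most frequent intents that together cover `target` of all requests."""
    counts = Counter(requests)
    total = sum(counts.values())
    selected, covered = [], 0
    for intent, n in counts.most_common():  # most frequent first
        if covered / total >= target:
            break
        selected.append(intent)
        covered += n
    return selected

# Hypothetical request log for a facility-scheduling assistant.
log = (["check_schedule"] * 50 + ["book_room"] * 30 + ["cancel_booking"] * 10
       + ["report_issue"] * 6 + ["other"] * 4)
print(pareto_intents(log))  # ['check_schedule', 'book_room']
```

Here two of five intents cover 80% of requests, which suggests a narrowly scoped voice agent could launch first while the long tail is routed to a human or handled in a later iteration.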

Pitfall #6: Demo-to-Production Delusion (The "95% Accuracy" Myth)

Problem: Massive gap between proof-of-concept success and production deployment performance, often caused by oversimplified testing environments and vendor demo bias.

Example: A telecommunications company purchased an AI system based on vendor claims of 95% accuracy. In production, accuracy dropped to 67% due to real-world variability.

Prevention:

- Use actual enterprise data (noise, accents, technical jargon), not cleaned demo datasets

- Run your own tests rather than relying on vendor benchmarks, which may be overfit to the vendor's own test data

- Design pilots with production constraints from day one

Check out our articles on how different benchmarking methods can affect the results: Benchmarking a Wake Word, Things to Know About WER, Evaluating Large Language Models
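Running your own tests can start with something as simple as measuring word error rate (WER) on recordings from your real environment, split by recording condition, so any gap between clean demo audio and field audio shows up directly. The sketch below is a minimal version under stated assumptions: it only lowercases and splits on whitespace (no further text normalization), the helper names are hypothetical, and the sample transcripts are invented. WER covers speech recognition only; intent or task accuracy would need separate measurement.

```python
from collections import defaultdict

def edit_distance(ref: list[str], hyp: list[str]) -> int:
    """Word-level Levenshtein distance: substitutions + deletions + insertions."""
    prev = list(range(len(hyp) + 1))  # distance from an empty reference to hyp[:j]
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution (or match)
        prev = curr
    return prev[-1]

def wer_by_condition(samples):
    """Corpus-level WER per recording condition.

    samples: iterable of (condition, reference_transcript, asr_output) tuples.
    """
    errors, words = defaultdict(int), defaultdict(int)
    for condition, reference, hypothesis in samples:
        ref, hyp = reference.lower().split(), hypothesis.lower().split()
        errors[condition] += edit_distance(ref, hyp)
        words[condition] += len(ref)
    return {c: errors[c] / max(words[c], 1) for c in errors}

# Hypothetical test set: similar requests recorded in two conditions.
samples = [
    ("quiet office", "check stock for aisle five", "check stock for aisle five"),
    ("plant floor", "torque the flange bolts to spec", "talk the flange boats to spec"),
]
print(wer_by_condition(samples))
```

In this toy data the plant-floor sample has two substituted words out of six, a WER of about 0.33, while the quiet-office sample scores 0.0. Errors are summed per condition before dividing, so long utterances are weighted by their word count.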

Pitfall #7: Lack of Learning Loop (Deploy-and-Pray Syndrome)

Problem: Attempting large-scale deployments without feedback mechanisms, performance monitoring, or iterative improvement processes, treating AI like traditional software rather than as a system that must keep learning and improving.

Example: A retail bank deployed an enterprise-wide agentic voice system without piloting it, resulting in customer complaints, regulatory scrutiny, and a forced rollback.

Prevention:

- Start with pilot deployments (single department/use case)

- Scale based on validated learning

- Implement real-time performance monitoring and user feedback loops

- Maintain the ability to quickly roll back or adjust based on performance data

- If your voice AI project is struggling or hasn’t started because of previous failures, consult an expert.
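Real-time monitoring with a rollback trigger can be as small as a rolling success rate per deployment. The sketch below defines a hypothetical `RollingMonitor`; what counts as a "success" (for example, a task completed without escalation to a human), the window size, and the thresholds are assumptions you would set from your own pilot data.

```python
from collections import deque

class RollingMonitor:
    """Track outcomes over the last `window` interactions and flag when to roll back."""

    def __init__(self, window: int = 200, min_success_rate: float = 0.85, min_samples: int = 50):
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically
        self.min_success_rate = min_success_rate
        self.min_samples = min_samples

    def record(self, success: bool) -> None:
        self.outcomes.append(success)

    @property
    def success_rate(self) -> float:
        # Report 1.0 before any data arrives, so an empty monitor never looks unhealthy.
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def should_roll_back(self) -> bool:
        # Require enough samples before acting, to avoid reacting to a handful of calls.
        return len(self.outcomes) >= self.min_samples and self.success_rate < self.min_success_rate

monitor = RollingMonitor(window=100, min_success_rate=0.85, min_samples=20)
for outcome in [True] * 20 + [False] * 10:
    monitor.record(outcome)
print(f"{monitor.success_rate:.2f}", monitor.should_roll_back())  # 0.67 True
```

The minimum-sample guard keeps a few bad calls right after launch from triggering a rollback on their own, while the bounded window lets the rate recover once fixes are deployed.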