Emotion Recognition deals with automatically detecting human emotions. Speech Emotion Recognition is a subfield of Emotion Recognition that focuses on spoken signals. For example, Speech Emotion Recognition can decipher an audio file to determine if the speaker is angry, neutral, or happy.

Connection to Sentiment Analysis

Speech Emotion Recognition is related to Sentiment Analysis, a subtopic of Natural Language Processing (NLP) that focuses on interpreting opinions from a piece of text. Speech Emotion Recognition differs in that it infers emotion from the speech signal in audio data rather than from written text. Together, Speech Emotion Recognition and Sentiment Analysis can capture both semantic and vocal emotion.

Use Cases

Speech Emotion Recognition can help in areas including call centers, customer service, and voice assistants. It can provide real-time feedback (e.g., as a sales coach to a salesperson) or large-scale analytics (e.g., by finding the percentage of frustrated customers).

Challenges

There is an abundance of research related to Speech Emotion Recognition. However, at the time of this writing, there are no readily available commercial offerings. Below, the Picovoice team has compiled the challenges we foresee in building an enterprise-grade Speech Emotion Recognition system:

Existing Datasets

There are several free and paid datasets available for Speech Emotion Recognition, but there are shortcomings associated with all of them.

- These datasets are extremely limited in their coverage of languages, speakers, genders, ages, dialects, etc. Training, validating, and testing models on such a limited dataset yields overconfident (overfitted) models that fail badly on real-world data.

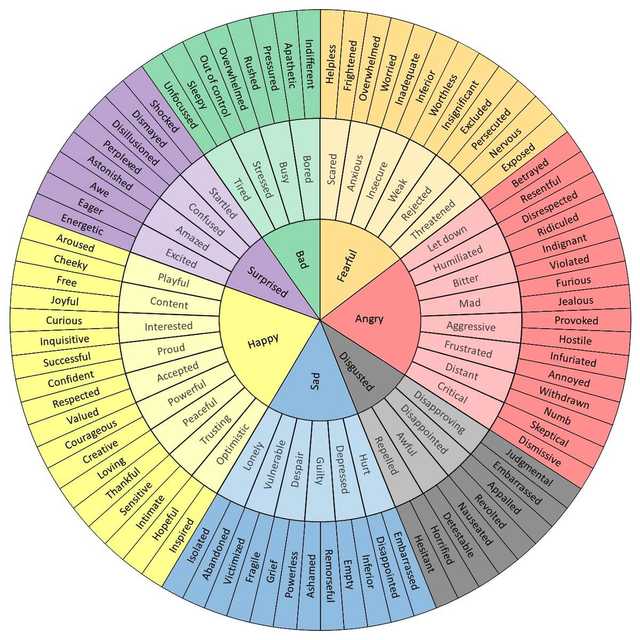

- There is no standard set of labels for human emotions! These datasets all use a slightly different set of emotions.

- Being angry in an online game differs from being angry with a customer service agent. A global definition of what an emotion looks like seems somewhat futile. Check the image of the Feelings Wheel for a visual explanation.
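To make the label mismatch concrete, here is a minimal sketch of how one might merge two datasets that use different emotion vocabularies. The label names, the mapping, and the `harmonize` helper are all hypothetical and chosen only for illustration; real datasets define their own label sets.

```python
from collections import Counter

# Hypothetical mapping from dataset-specific labels to a shared label set.
# Any such mapping is a judgment call and is not taken from a real dataset.
LABEL_MAP = {
    "angry": "angry",
    "anger": "angry",
    "frustrated": "angry",
    "happy": "happy",
    "joy": "happy",
    "excited": "happy",
    "neutral": "neutral",
    "calm": "neutral",
}


def harmonize(labels, label_map=LABEL_MAP, unknown="other"):
    """Map dataset-specific labels onto the shared label set."""
    return [label_map.get(label.strip().lower(), unknown) for label in labels]


# Two toy datasets with slightly different vocabularies.
dataset_a = ["Anger", "joy", "calm"]
dataset_b = ["frustrated", "excited", "neutral", "bored"]

merged = harmonize(dataset_a) + harmonize(dataset_b)
counts = Counter(merged)
print(counts)
```

Note that folding "frustrated" into "angry" discards a distinction that may matter in a customer service setting, which is the kind of information loss the points above warn about.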

Curating a Dataset

The direct approach is to collect and label the training examples yourself. It is best if you have a way to keep collecting data beyond the first version of the dataset. Why? You will almost certainly need more data after your first training run. You might also want to invest in building a learning loop so you can keep gathering and labelling data from models in production. This approach is expensive and time-consuming.

If you don't have the means to collect a dataset, you need to get creative! You can use crowdsourcing, which is much cheaper than building an in-house labelling team, but you still pay per label. A last resort is to use an already-trained model to create labels for you. For example, given a computer vision system that understands emotions from facial expressions, you can snip the audio of what the user is saying at that moment and attach the predicted label to it.
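The labelling shortcut described above can be sketched as follows. This assumes a hypothetical vision model that emits time-stamped predictions as `(start_sec, end_sec, label, confidence)` tuples; the `snip_labeled_segments` helper and the confidence threshold are illustrative assumptions, not part of any existing tool.

```python
def snip_labeled_segments(samples, sample_rate, predictions, min_confidence=0.8):
    """Cut audio into (segment, label) pairs using labels from another model.

    predictions: iterable of (start_sec, end_sec, label, confidence) tuples,
    assumed to come from a hypothetical facial-expression model.
    """
    examples = []
    for start_sec, end_sec, label, confidence in predictions:
        if confidence < min_confidence:
            continue  # skip labels the other model is unsure about
        # Convert seconds to sample indices and clamp to the audio length.
        start = max(0, int(start_sec * sample_rate))
        end = min(len(samples), int(end_sec * sample_rate))
        if end > start:
            examples.append((samples[start:end], label))
    return examples


sample_rate = 16000
audio = [0.0] * (sample_rate * 5)  # 5 seconds of placeholder audio

# Made-up predictions for illustration only.
face_predictions = [
    (0.5, 2.0, "happy", 0.93),
    (2.0, 3.5, "angry", 0.55),    # dropped: below the confidence threshold
    (4.0, 6.0, "neutral", 0.90),  # clamped: runs past the end of the audio
]

examples = snip_labeled_segments(audio, sample_rate, face_predictions)
for segment, label in examples:
    print(label, len(segment) / sample_rate, "seconds")
```

Labels produced this way inherit the errors of the model that generated them, so filtering by confidence and spot-checking a sample by hand is a reasonable precaution.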

Training a Model

Assuming you have enough data, you can train a classifier end-to-end that maps from a speech signal to one of the labels. In practice, we rarely have enough data, so the model will likely overfit the training set and fail to generalize. Things we can try:

- Data augmentation: Add noise, reverberation, speech perturbation, and any other valid audio manipulation technique.

- Dropout

- Label Smoothing

- Multi-task learning: Auxiliary tasks help the network learn helpful hidden states. For example, you can also ask the model to transcribe what the user is saying.

- Transfer learning: Using a feature embedder such as Wav2vec to preprocess the input reduces the number of free parameters needed for training.

- Feature Engineering: Instead of feeding raw audio or low-level features such as a spectrogram, use lower-dimensional features such as pitch.
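Two of the techniques above, noise augmentation and label smoothing, are simple enough to sketch in plain Python. This is a minimal illustration under stated assumptions: the function names, the white Gaussian noise model, the 10 dB SNR, and the smoothing factor of 0.1 are all choices made for this example, and a real pipeline would typically use an array library and recorded noise.

```python
import math
import random


def add_noise(samples, snr_db, rng=random):
    """Add white Gaussian noise at an approximate signal-to-noise ratio (dB)."""
    signal_power = sum(s * s for s in samples) / len(samples)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in samples]


def smooth_labels(class_index, num_classes, epsilon=0.1):
    """Label smoothing: spread epsilon of the probability mass uniformly."""
    uniform = epsilon / num_classes
    target = [uniform] * num_classes
    target[class_index] += 1.0 - epsilon
    return target


rng = random.Random(0)  # seeded for reproducibility

# One second of a 440 Hz tone at 16 kHz stands in for a speech clip.
tone = [math.sin(2 * math.pi * 440 * n / 16000) for n in range(16000)]
noisy = add_noise(tone, snr_db=10, rng=rng)

# Soft target for class 0 out of three classes (e.g. angry, neutral, happy).
target = smooth_labels(class_index=0, num_classes=3)
print(target)
```

Applying a fresh random augmentation each time a clip is drawn during training gives the model a different version of the same example on every pass, which is what makes augmentation useful against overfitting.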

Experts at Picovoice Consulting work with Enterprise customers to create private AI algorithms, such as Language Detection or Sound Detection, specific to their use cases.
