llama.cpp is an efficient C++ implementation of Meta’s LLaMA language models, and ollama is a tool built on top of it. Together they allow developers to run large language models on consumer-grade hardware, making these models more accessible, cost-effective, and easier to integrate into applications and research projects.

What’s llama.cpp?

llama.cpp is an open-source, lightweight, and efficient implementation of Meta’s LLaMA language model.

Key points about llama.cpp

- llama.cpp is a port of the original LLaMA model to C++, aiming to provide faster inference and lower memory usage than the original Python implementation.

- llama.cpp was created by Georgi Gerganov in March 2023 and has since grown through contributions from hundreds of developers.

- llama.cpp can run LLaMA models on consumer-grade hardware, such as personal computers and laptops, without requiring high-end GPUs or specialized hardware.

- llama.cpp uses various quantization techniques to reduce model size and memory footprint while maintaining acceptable accuracy.
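To make the quantization point concrete, here is a minimal Python sketch of symmetric 8-bit block quantization, loosely following the idea behind llama.cpp’s 8-bit format of storing one scale per block of 32 weights. It is a simplified illustration under that assumption, not llama.cpp’s actual code or file layout; the function names and the bits-per-weight figures used in the size estimate are illustrative.

```python
import random

BLOCK_SIZE = 32  # weights per block; one scale per block (assumption for illustration)


def quantize_block(block: list[float]) -> tuple[float, list[int]]:
    """Symmetric 8-bit quantization: map [-amax, amax] onto integers in [-127, 127]."""
    amax = max(abs(x) for x in block)
    scale = amax / 127 if amax > 0 else 1.0
    return scale, [round(x / scale) for x in block]


def dequantize_block(scale: float, q: list[int]) -> list[float]:
    """Recover approximate weights from the block scale and the 8-bit integers."""
    return [scale * v for v in q]


def quantize(weights: list[float]) -> list[tuple[float, list[int]]]:
    """Split the weights into fixed-size blocks and quantize each block separately."""
    return [quantize_block(weights[i:i + BLOCK_SIZE])
            for i in range(0, len(weights), BLOCK_SIZE)]


def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), ignoring metadata."""
    return n_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    random.seed(0)
    weights = [random.gauss(0.0, 0.02) for _ in range(1024)]
    blocks = quantize(weights)
    restored = [x for s, q in blocks for x in dequantize_block(s, q)]
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"max abs reconstruction error: {max_err:.6f}")
    # 8 bits per weight plus a 16-bit scale per 32 weights = 8.5 bits/weight;
    # a 4-bit variant with the same scale overhead comes to 4.5 bits/weight.
    for name, bpw in [("fp16", 16.0), ("8-bit blocks", 8.5), ("4-bit blocks", 4.5)]:
        print(f"7B model, {name}: {model_size_gb(7e9, bpw):.2f} GB")
```

Because each block gets its own scale, the rounding error of any weight is at most half that block’s scale. The size estimate shows why this matters on consumer hardware: a 7-billion-parameter model needs about 14.00 GB at 16 bits per weight, but roughly 7.44 GB or 3.94 GB with 8-bit or 4-bit blocks. Real model files differ somewhat because they also carry metadata and quantization formats vary.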

llama.cpp has gained popularity among developers and researchers who want to experiment with large language models on resource-constrained devices or integrate them into their applications without expensive or specialized hardware. Although llama.cpp initially started with Meta’s LLaMA, at the time of writing it supports 37 models. llama.cpp has also enabled a number of downstream tools: Google’s localllm, LM Studio, and ollama are all built with llama.cpp.

What’s ollama?

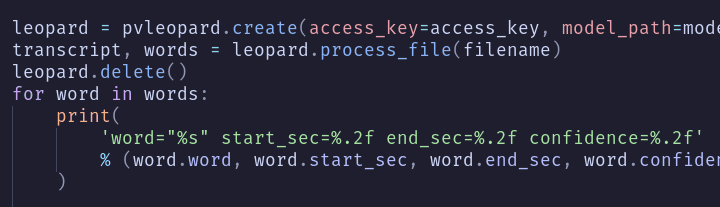

ollama, short for "Optimized LLaMA," was started by Jeffrey Morgan in July 2023 and built on llama.cpp. ollama aims to further optimize the performance and efficiency of llama.cpp by introducing additional optimizations and improvements to the codebase.

ollama focuses on enhancing the inference speed and reducing the memory usage of language models, making them even more accessible on consumer-grade hardware. ollama automatically formats chat requests with the prompt template each model expects, and it loads and unloads models on demand based on which model an API client requests. Some of the further optimizations in ollama include:

- Improved matrix multiplication routines

- Better caching and memory management

- Optimized data structures and algorithms

- Utilization of modern CPU instruction sets (e.g., AVX, AVX2)
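To show what on-demand loading and templating mean from a client’s point of view, here is a minimal Python sketch that builds a request for ollama’s `/api/chat` endpoint using only the standard library. It assumes ollama is listening on its default local address (`http://localhost:11434`); the helper names `build_chat_request` and `chat` are hypothetical. The client sends plain role/content messages, and ollama is responsible for applying the model’s prompt template and loading the requested model if it is not already in memory.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # ollama's default local address; adjust if needed


def build_chat_request(model: str, messages: list[dict],
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for ollama's /api/chat endpoint.

    Only the model name and role/content messages are sent; no model-specific
    prompt formatting happens on the client side.
    """
    payload = {"model": model, "messages": messages, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, messages: list[dict]) -> str:
    """Send the request and return the assistant's reply (needs a running ollama server)."""
    with urllib.request.urlopen(build_chat_request(model, messages)) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

For example, `chat("llama3", [{"role": "user", "content": "Why is the sky blue?"}])` would return the reply as a string, assuming a running server and a model pulled beforehand with `ollama pull llama3`. Setting `"stream": False` asks for a single JSON response instead of a stream of partial chunks.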

Similar to Dockerfiles, ollama offers Modelfiles that you can use to customize models from the existing library (for example, their parameters), or to import GGUF files directly if you find a model that isn’t in the library. ollama maintains compatibility with the original llama.cpp project, allowing users to easily switch between the two implementations or integrate ollama into their existing projects.
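As an illustration of the Modelfile idea, the helper below renders a minimal Modelfile as text. `FROM`, `PARAMETER`, and `SYSTEM` are Modelfile instructions, and `temperature` and `num_ctx` are ollama parameters; the helper name `render_modelfile`, the path `./my-model.gguf`, and the chosen values are hypothetical examples, so this is a sketch rather than a complete reference.

```python
from typing import Optional


def render_modelfile(base: str,
                     parameters: Optional[dict] = None,
                     system: Optional[str] = None) -> str:
    """Render a minimal ollama Modelfile as text.

    `base` is either a model from the ollama library (e.g. "llama3") or a
    path to a local GGUF file (e.g. "./my-model.gguf").
    """
    lines = [f"FROM {base}"]
    # Each PARAMETER line overrides one runtime setting, such as temperature.
    for name, value in (parameters or {}).items():
        lines.append(f"PARAMETER {name} {value}")
    # SYSTEM sets the system message used when chatting with the new model.
    if system:
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_modelfile(
        "./my-model.gguf",
        {"temperature": 0.2, "num_ctx": 4096},
        "You are a concise assistant for internal IT questions.",
    ))
```

Saving the output as `Modelfile` and running `ollama create my-assistant -f Modelfile` should register the customized model locally, after which `ollama run my-assistant` starts it.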

What should enterprises consider while using llama.cpp and ollama?

llama.cpp and ollama offer many benefits. However, there are some potential downsides to consider, especially when using them in enterprise applications:

- Legal and licensing considerations: Both llama.cpp and ollama are available on GitHub under the MIT license. Still, enterprises must ensure that their use complies with the projects' licensing terms and other legal requirements.

- Lack of official support: As open-source projects, llama.cpp and ollama do not come with official support or guarantees. Enterprises may need to rely on community support, reach out to the individuals who started the projects, or invest in in-house expertise to troubleshoot issues and ensure smooth integration and maintenance.

- Limited documentation: ollama is easier to use than llama.cpp. Yet, compared to commercial solutions, the documentation for llama.cpp and ollama may seem less comprehensive, especially for those without machine learning expertise. This can make it harder for developers to resolve issues, particularly in enterprise settings where time-to-market and reliability are critical.

- Potential performance limitations: Although llama.cpp and ollama are designed to be efficient, techniques such as quantization trade some accuracy for speed and lower memory usage. This trade-off between efficiency and performance (accuracy) should be evaluated thoroughly for each use case.

- Security and privacy concerns: As with any open-source project, the community contributes to llama.cpp and ollama. Thus, enterprises should carefully review the codebase and any dependencies for potential vulnerabilities or risks. For example, in March 2024 a backdoor in the upstream xz/liblzma library that could lead to SSH server compromise became public.

- Integration challenges: Integrating llama.cpp or ollama into existing enterprise systems and workflows may require significant development effort and customization. In other words, working with llama.cpp or ollama may require custom bindings, wrappers, or APIs to enable communication between these tools and existing systems.

- Maintenance and updates: As community-driven projects, llama.cpp and ollama may not follow a predictable development and maintenance schedule. Enterprises should be prepared to manage updates, bug fixes, and potential breaking changes in the applications that rely on these projects. Moreover, enterprises that build their own solutions on llama.cpp or ollama have to track new releases closely to keep their libraries up to date; otherwise, their versions will diverge from the upstream project. This can be challenging, as llama.cpp has close to 2,000 releases at the time of writing.
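One common way to contain the integration effort is a thin adapter: application code depends on a small internal interface, and only the adapter knows how to talk to ollama. The sketch below assumes ollama’s `/api/generate` endpoint on the default local port, which returns the generated text in a `response` field when streaming is off. The `TextGenerator` interface, the `OllamaGenerator` class, and the pluggable transport are hypothetical names chosen for illustration, not part of either project.

```python
import json
import urllib.request
from typing import Callable, Protocol

# A transport takes (url, payload) and returns the decoded JSON reply.
Transport = Callable[[str, dict], dict]


def http_post_json(url: str, payload: dict) -> dict:
    """Default transport: POST JSON with the standard library and decode the reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.load(resp)


class TextGenerator(Protocol):
    """Hypothetical internal interface the rest of the codebase depends on."""

    def complete(self, prompt: str) -> str: ...


class OllamaGenerator:
    """Adapter from the internal TextGenerator interface to ollama's /api/generate."""

    def __init__(self, model: str, base_url: str = "http://localhost:11434",
                 transport: Transport = http_post_json) -> None:
        self.model = model
        self.url = f"{base_url}/api/generate"
        self.transport = transport

    def complete(self, prompt: str) -> str:
        payload = {"model": self.model, "prompt": prompt, "stream": False}
        reply = self.transport(self.url, payload)
        # Surface unexpected replies (e.g. an "error" object) instead of a KeyError.
        if "response" not in reply:
            raise RuntimeError(f"unexpected reply from {self.url}: {reply!r}")
        return reply["response"]
```

Because the transport is injectable, the adapter can be unit-tested without a running server, and a second adapter (for example, one targeting llama.cpp’s own HTTP server) could be added later without changing the callers that depend on `TextGenerator`.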

Choosing the right AI algorithms can be challenging. Picovoice Consulting helps enterprises choose the best AI models for their needs.
