Intent inference (detection) from spoken commands is at the core of any modern voice user interface (VUI). Typically, the spoken commands fall within a well-defined domain of interest, i.e., a context. For example:

- Play Tom Sawyer album by Rush [Music]
- Search for sandals that are under $40 [Retail]
- Call John Smith on his cell phone [Conferencing]

Why End-to-End?

Current solutions work in two steps. First, a speech-to-text (STT) engine transcribes speech to text. Second, a natural language understanding (NLU) engine detects the user's intent by analyzing the transcribed text. NLU implementations range from grammar-based regular expressions to complex probabilistic (statistical) models, or a mix of the two. This approach requires significant compute resources because the STT engine must handle an inherently large vocabulary. Moreover, its accuracy is suboptimal, since errors introduced by the STT step impair the NLU step.
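To make the error-propagation point concrete, here is a toy sketch of the two-step pipeline. Everything in it is a hypothetical stand-in rather than any vendor's API: the STT step is simulated by a lookup table containing one transcription error, and the regular expression and the intent name `orderBeverage` are invented for illustration.

```python
import re
from typing import Optional

# Hypothetical grammar-based NLU: a single regular expression for one intent.
ORDER_PATTERN = re.compile(
    r"^(?:make|brew) me an? (?P<size>small|medium|large) (?P<beverage>coffee|espresso)$"
)


def nlu(transcript: str) -> Optional[dict]:
    """Step 2: detect intent and slots from the transcribed text alone."""
    match = ORDER_PATTERN.match(transcript.lower())
    if match is None:
        return None
    return {"intent": "orderBeverage", "slots": match.groupdict()}


# Step 1 is simulated: each key stands for an audio clip, each value for the
# text a generic STT engine might return. The "noisy" entry contains a
# plausible misrecognition ("copy" instead of "coffee").
SIMULATED_TRANSCRIPTS = {
    "clean": "make me a large coffee",
    "noisy": "make me a large copy",
}


def two_step(audio_id: str) -> Optional[dict]:
    """Run the simulated STT step, then the NLU step."""
    return nlu(SIMULATED_TRANSCRIPTS[audio_id])


print(two_step("clean"))  # intent and slots are recovered
print(two_step("noisy"))  # None: a single STT error defeats the NLU step
```

The NLU step never sees the audio, so it cannot recover from the misrecognized word even though only two beverages are valid in this domain.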

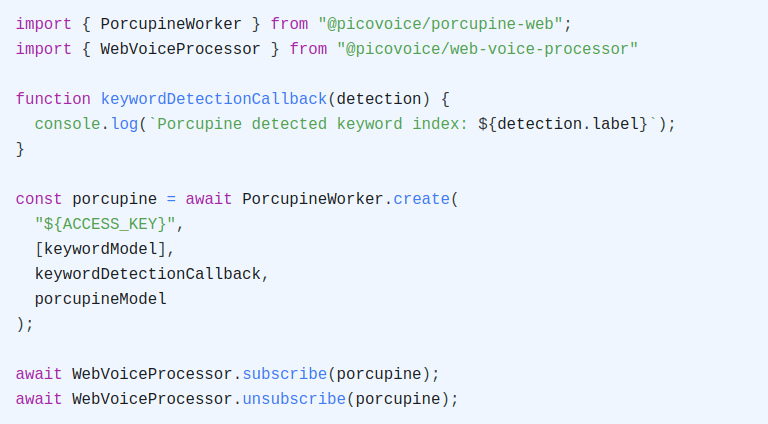

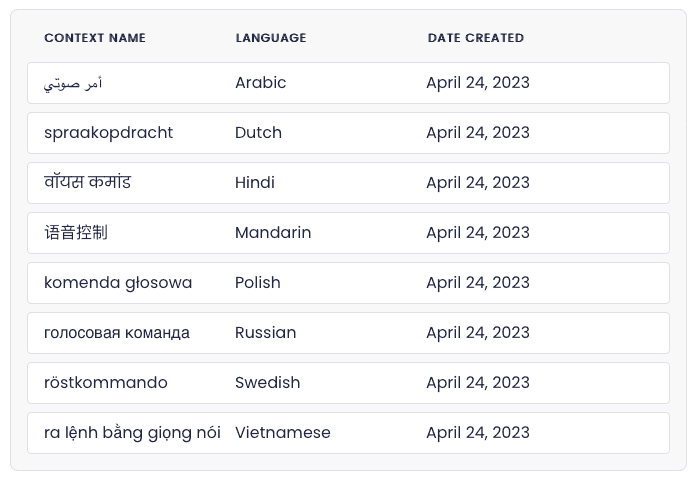

The Picovoice Rhino Speech-to-Intent engine takes advantage of contextual information to create a bespoke, jointly optimized STT and NLU engine for the domain of interest. The result is an end-to-end model that outperforms alternatives in accuracy and runtime efficiency. Additionally, it can run on-device and offline, which improves privacy, latency, and cost-effectiveness.

Accuracy

We have benchmarked the accuracy of Picovoice Speech-to-Intent against Amazon Lex, Google Dialogflow, IBM Watson, and Microsoft LUIS. The test scenario is a VUI for a voice-enabled coffee maker. Details of the benchmark are available on the Rhino benchmark page. The accuracy improvement reported there stems from the joint optimization performed when training the end-to-end intent inference model.
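The benchmark's own methodology is documented on the benchmark page. As a rough illustration only, one plausible way to score an intent engine is to count a command as correct when both the intent and all slots match the expected values. The function name `command_accuracy` and the sample data below are hypothetical and are not taken from the benchmark.

```python
from typing import Dict, List, Optional


def command_accuracy(expected: List[Dict], predicted: List[Optional[Dict]]) -> float:
    """Fraction of commands whose intent and slots were both inferred correctly.

    A prediction of None means the engine did not understand the command.
    """
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    if not expected:
        raise ValueError("at least one command is required")
    correct = sum(1 for e, p in zip(expected, predicted) if p == e)
    return correct / len(expected)


# Toy data, invented for this sketch (not benchmark results).
expected = [
    {"intent": "orderBeverage", "slots": {"size": "large", "beverage": "coffee"}},
    {"intent": "orderBeverage", "slots": {"size": "small", "beverage": "espresso"}},
    {"intent": "orderBeverage", "slots": {"size": "medium", "beverage": "coffee"}},
    {"intent": "orderBeverage", "slots": {"size": "large", "beverage": "espresso"}},
]
predicted = [
    expected[0],                                                                     # exact match
    {"intent": "orderBeverage", "slots": {"size": "small", "beverage": "coffee"}},   # wrong slot
    None,                                                                            # not understood
    expected[3],                                                                     # exact match
]

print(command_accuracy(expected, predicted))
```

Requiring an exact match on slots is a strict choice: a command with the right intent but one wrong slot counts as a failure, which mirrors what a user of a coffee maker would experience.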

Another open-source benchmark shows that Picovoice Rhino also beats Amazon Alexa. The study also evaluates other independent voice technology providers such as Houndify by SoundHound and Snips and mentions the limitations of each of these platforms.

Latency

Latency is an inherent limitation of cloud-based solutions, as the total delay is unpredictable and depends on the quality of the network connection and on server-side load. Because Rhino runs on-device, its response time when detecting the user's intent does not depend on either.

Start Building

Start building with Rhino Speech-to-Intent for free.

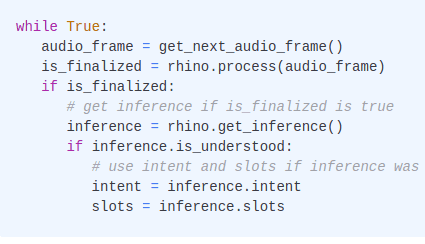

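    import pvrhino
    # access_key, context_path, and audio() are placeholders supplied by the application;
    # audio() is expected to return the next frame of audio samples.
    o = pvrhino.create(access_key, context_path)
    while not o.process(audio()):
        pass
    inference = o.get_inference()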
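Once `get_inference()` returns, the application still has to act on the result. The sketch below assumes the inference object exposes `is_understood`, `intent`, and `slots` attributes, as the Rhino Python SDK documents. The helper `describe_inference` is hypothetical, and a `SimpleNamespace` stands in for a real inference so the example runs without the SDK or an access key.

```python
from types import SimpleNamespace


def describe_inference(inference) -> str:
    """Summarize an inference as 'intent(slot=value, ...)' or a rejection message."""
    if not inference.is_understood:
        return "command not understood"
    # Sort slots so the summary is stable regardless of insertion order.
    slots = ", ".join(f"{name}={value}" for name, value in sorted(inference.slots.items()))
    return f"{inference.intent}({slots})"


# Stand-ins for real inference objects; the intent and slot names are invented.
understood = SimpleNamespace(
    is_understood=True,
    intent="orderBeverage",
    slots={"size": "large", "beverage": "coffee"},
)
rejected = SimpleNamespace(is_understood=False, intent=None, slots={})

print(describe_inference(understood))
print(describe_inference(rejected))
```

Checking `is_understood` first matters because a command that falls outside the context carries no intent or slots to act on.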