Today, there is no shortage of architectures for building a Speech-to-Text (STT) engine. There is no longer any need to follow strict and convoluted recipes. The choice of architecture has implications for product, timeline, and level of investment.

Speech-to-Text, Automatic Speech Recognition (ASR), and Large-Vocabulary Speech Recognition (LVSR) are interchangeable terms.

What is End-to-End Speech Recognition?

An end-to-end (deep learning) model accepts raw data and produces the labels directly. An end-to-end ASR system receives raw audio and produces text as output.

In practice, most "end-to-end" speech recognition architectures accept a low-level feature rather than raw PCM. Spectrograms and (biology-inspired) filterbanks are the most common. Some end-to-end systems optionally fuse an external Language Model (LM).
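
To make "low-level feature" concrete, here is a minimal sketch of a log-magnitude spectrogram computed from PCM samples with NumPy. The function name `log_spectrogram`, the 25 ms frame, and the 10 ms hop are assumptions chosen for illustration, not settings taken from any particular system.

```python
import numpy as np

def log_spectrogram(pcm, sample_rate=16000, frame_ms=25, hop_ms=10):
    """Return a (num_frames, num_bins) log-magnitude spectrogram."""
    frame_len = int(sample_rate * frame_ms / 1000)  # samples per frame
    hop_len = int(sample_rate * hop_ms / 1000)      # samples between frame starts
    num_frames = 1 + (len(pcm) - frame_len) // hop_len
    window = np.hanning(frame_len)                  # taper to reduce spectral leakage
    frames = np.stack([
        pcm[i * hop_len : i * hop_len + frame_len] * window
        for i in range(num_frames)
    ])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))  # one spectrum per frame
    return np.log(magnitude + 1e-8)                  # small offset avoids log(0)

# Synthetic input (an assumption for the demo): one second of a 440 Hz tone.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
pcm = np.sin(2 * np.pi * 440.0 * t)
features = log_spectrogram(pcm, sample_rate)
```

With these settings, one second of audio becomes a 98 x 201 matrix (frames by frequency bins), and that matrix is what the network consumes in place of the raw samples.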

What is Hybrid Speech-to-Text?

We represent spoken sounds with phonemes. The International Phonetic Alphabet (IPA) and ARPABET are examples of phonetic transcription codes.

The translation between phonemes and text (and vice versa) is not one-to-one, especially in languages such as English.
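
A tiny pronunciation lexicon shows why the mapping is not one-to-one. The entries below are a hand-picked toy set in ARPABET with stress markers omitted, and the helper name `invert` is hypothetical.

```python
from collections import defaultdict

# Toy lexicon: word -> possible pronunciations (ARPABET, stress omitted).
LEXICON = {
    "read": ["R IY D", "R EH D"],  # one spelling, two pronunciations
    "red": ["R EH D"],
    "reed": ["R IY D"],
    "two": ["T UW"],
    "too": ["T UW"],
    "to": ["T UW", "T AH"],
}

def invert(lexicon):
    """Build the reverse map: pronunciation -> set of words."""
    by_pron = defaultdict(set)
    for word, prons in lexicon.items():
        for pron in prons:
            by_pron[pron].add(word)
    return by_pron

PRON_TO_WORDS = invert(LEXICON)
print(sorted(PRON_TO_WORDS["R EH D"]))  # words that share one pronunciation
print(sorted(PRON_TO_WORDS["T UW"]))
```

One word can have several pronunciations, and one pronunciation can belong to several words, so neither direction can be resolved without context.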

Hybrid systems break the problem into two steps: recognizing sounds, and then transducing the sequence of sounds into words. A Deep Neural Network (DNN) is the tool of choice for phoneme recognition. Transducing phonemes into text requires a graph called a Weighted Finite State Transducer (WFST).
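
The second step can be illustrated with a toy example. The sketch below is not a WFST. It is a simple dynamic-programming search that stands in for one, picking the lowest-cost word sequence that covers a phoneme sequence. The lexicon, the costs (made-up numbers playing the role of language-model weights), and the function name `transduce` are all assumptions for illustration.

```python
import math

# Hypothetical weighted lexicon: phoneme tuple -> [(word, cost)].
# Lower cost means the word is considered more likely.
LEXICON = {
    ("R", "EH", "D"): [("red", 1.0), ("read", 2.0)],
    ("R", "IY", "D"): [("read", 1.0), ("reed", 3.0)],
    ("DH", "AH"): [("the", 0.5)],
    ("B", "UH", "K"): [("book", 1.5)],
}

def transduce(phonemes):
    """Return the lowest-cost word list covering all phonemes, or None."""
    n = len(phonemes)
    # best[i] = (cost of best path covering the first i phonemes, backpointer)
    best = [(math.inf, None)] * (n + 1)
    best[0] = (0.0, None)
    for end in range(1, n + 1):
        for start in range(end):
            prev_cost = best[start][0]
            if prev_cost == math.inf:
                continue  # no valid segmentation reaches `start`
            for word, cost in LEXICON.get(tuple(phonemes[start:end]), []):
                total = prev_cost + cost
                if total < best[end][0]:
                    best[end] = (total, (start, word))
    if best[n][0] == math.inf:
        return None
    words, i = [], n
    while i > 0:  # follow backpointers from the end to the start
        i, word = best[i][1]
        words.append(word)
    return words[::-1]

print(transduce("R IY D DH AH B UH K".split()))
```

A real hybrid decoder searches a much larger weighted graph and also scores the acoustic evidence, but the idea of choosing the cheapest path through competing word hypotheses is the same.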

State of Play

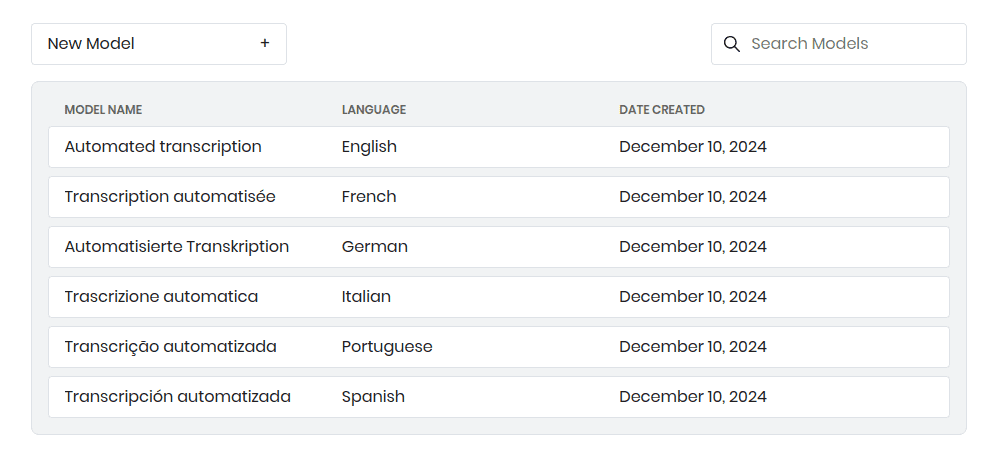

Commercially available systems are hybrid systems. Why? Legacy reasons and (or) that it is much easier to get the information

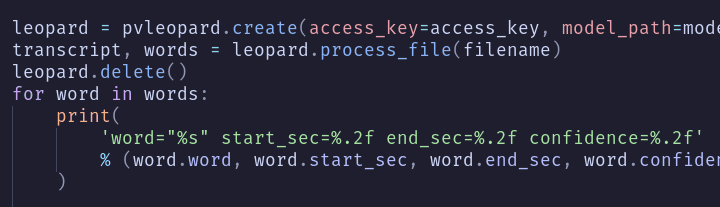

a production system requires from hybrid models. Practical applications of ASR need more than just transcription. They need

Word Timestamps, Word-Level Confidence, and Alternative Transcriptions. Last but not least, they require a method

for rapid and economic adaptation.
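
As a rough picture of what "more than just transcription" means, here is a sketch of a result structure carrying word timestamps, word-level confidence, and alternative transcriptions. All class names, field names, and values are hypothetical and do not correspond to any specific engine's API.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Word:
    text: str
    start_s: float     # word start time in seconds
    end_s: float       # word end time in seconds
    confidence: float  # value in [0, 1]

@dataclass
class Hypothesis:
    words: List[Word]

    @property
    def text(self) -> str:
        return " ".join(w.text for w in self.words)

@dataclass
class RecognitionResult:
    # Alternatives are assumed to be ordered from most to least likely.
    alternatives: List[Hypothesis] = field(default_factory=list)

    def best(self) -> Hypothesis:
        return self.alternatives[0]

    def low_confidence_words(self, threshold: float = 0.5) -> List[Word]:
        """Words in the best hypothesis that may need human review."""
        return [w for w in self.best().words if w.confidence < threshold]

# Made-up example values, for illustration only.
result = RecognitionResult(alternatives=[
    Hypothesis([Word("read", 0.00, 0.30, 0.45),
                Word("the", 0.30, 0.42, 0.98),
                Word("book", 0.42, 0.80, 0.95)]),
    Hypothesis([Word("red", 0.00, 0.30, 0.40),
                Word("the", 0.30, 0.42, 0.98),
                Word("book", 0.42, 0.80, 0.95)]),
])
print(result.best().text)
print([w.text for w in result.low_confidence_words()])
```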

The Trend

The trend is towards end-to-end models.

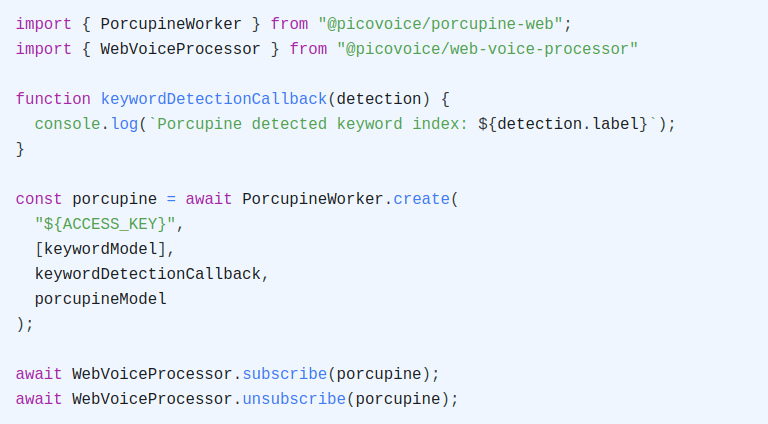

- They are much easier to build. Kaldi is the crown jewel of hybrid systems, and it is hard to understand. People know how to use it as a black box, but only a handful understand the algorithms and their implementations.

- Numerous research papers from Big Tech report remarkably strong results using end-to-end models.

- The math of hybrid systems is much harder to grasp.

The Decision

There is no straightforward answer when choosing between hybrid and end-to-end architectures. The decision depends on your goals and resources.

- How much data do you have? End-to-end systems work great given many thousands of hours of labelled audio.

- How fast does your data change (e.g., new words showing up)? If it changes fast, you need to keep retraining; otherwise, you run into a data mismatch problem. Hybrid systems are easier to retrain, as you can adapt the acoustic and language models separately.

- Do you have in-house speech recognition expertise? If not, building a hybrid system can be impractical. End-to-end systems reduce development time significantly.