This article is a bit late to the party! Why? The seminal paper Hello Edge: Keyword Spotting on Microcontrollers is a few years old. But what we'd like to discuss about voice recognition on microcontrollers is fundamentally different: bridging the gap between academic research and real-world commercialization. There is no shortage of papers or commercial demos showing that it is possible to detect a handful of keywords on MCUs using the Google Speech Commands Dataset. But commercial development and deployment are much more involved.

How many commercial products do you see in the market performing local keyword spotting and voice recognition on microcontrollers? If none or just a few, then why? The rest of the article discusses this question and presents Picovoice's solution.

Why Microcontrollers?

Today, we have embedded Linux on cheap microprocessors (low-end Arm Cortex-A, MIPS, RISC-V, etc.). Microcontrollers are beneficial in two cases. The first is the ultra-low power consumption needed in wearable or hearable use cases. The second is cost: if cost is a hard target, MCUs become very competitive as they do not require external RAM or flash. The latter applies to high-volume use cases such as consumer electronics and appliances.

Why Picovoice?

Picovoice Porcupine Wake Word Engine enables training Keyword Spotting models without gathering data. Type the phrase you want and receive a model for on-device inference. This flexibility is a game changer for shortening the development timeline and eliminating the risks involved in user testing.

Porcupine supports many languages, including English, French, German, Italian, Japanese, Korean, Portuguese, Spanish, and many more. Porcupine runs on many Arm Cortex-M4 MCUs from ST and Arduino. Hence it doesn't lock you in to a specific silicon provider, and it supports global product expansion.

Building with Picovoice

Picovoice Console

Sign up for Picovoice Console for free and copy your AccessKey.

Often you want to use Custom Wake Word Models with your project. Branded Wake Word Models are essential for enterprise products. Otherwise, you are pushing Amazon, Google, and Apple's brand, not yours! You can create Custom Wake Word Models using Picovoice Console in seconds:

- Log in to Picovoice Console

- Go to the Porcupine Page

- Select the target language (e.g. English, Japanese, Spanish, etc.)

- Select Arm Cortex-M as the platform to optimize the model.

- Type in the wake phrase. A good wake phrase should have a few linguistic properties.

- Enter your board type and the UUID corresponding to the MCU.

- Click the train button. Your model will be ready momentarily.

- Unzip the downloaded file and find pv_porcupine_params.h. Below is a snippet:

Porcupine SDK

Porcupine SDK is on GitHub. You can find libraries for supported microcontrollers on the Porcupine GitHub repository. Arduino libraries are available via a specialized package manager offered by Arduino.

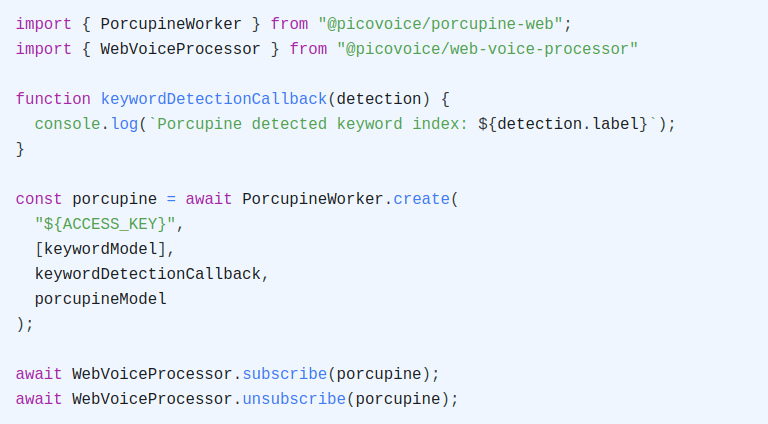

Initialization

Link the appropriate library (.a) and the parameter file(s) downloaded from Picovoice Console into your project. Include the Porcupine header file in your project and make sure it is on the include path. Initialize the engine:

ACCESS_KEY is the AccessKey from your Picovoice Console account. memory_buffer is a buffer in RAM that the engine uses internally, num_keywords is the number of keywords you want to spot, KEYWORD_MODELS are the models in the parameter file downloaded from Picovoice Console, and SENSITIVITIES is the array of the engine's sensitivities, one for each keyword.
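Before handing these arguments to the engine, it can help to check them once at startup. The following is a minimal sketch of that idea, not part of the Porcupine SDK: kws_config_t, kws_default_config, and kws_config_is_valid are hypothetical names, and the 50 KB buffer size and 16-byte alignment are assumptions that should be replaced with the values documented for your library version. The only Porcupine-specific fact it relies on is that each sensitivity is a value in [0, 1].

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: placeholder size; the real requirement depends on the
 * library version and on how many keywords are loaded. */
#define MEMORY_BUFFER_SIZE (50 * 1024)

/* Assumption: 16-byte alignment. Static allocation avoids using the heap. */
#define MEMORY_BUFFER_ALIGNMENT 16

static _Alignas(MEMORY_BUFFER_ALIGNMENT) int8_t memory_buffer[MEMORY_BUFFER_SIZE];

/* Hypothetical helper type: groups the arguments described above. */
typedef struct {
    void *memory_buffer;
    int32_t memory_size;
    int32_t num_keywords;
    const float *sensitivities; /* one entry per keyword, each in [0, 1] */
} kws_config_t;

/* Builds a config that points at the statically allocated buffer. */
static kws_config_t kws_default_config(int32_t num_keywords, const float *sensitivities) {
    kws_config_t config;
    config.memory_buffer = memory_buffer;
    config.memory_size = MEMORY_BUFFER_SIZE;
    config.num_keywords = num_keywords;
    config.sensitivities = sensitivities;
    return config;
}

/* Returns true when the config is plausible enough to pass to the engine. */
static bool kws_config_is_valid(const kws_config_t *config) {
    if (config == NULL || config->memory_buffer == NULL || config->memory_size <= 0) {
        return false;
    }
    if (((uintptr_t) config->memory_buffer % MEMORY_BUFFER_ALIGNMENT) != 0) {
        return false;
    }
    if (config->num_keywords <= 0 || config->sensitivities == NULL) {
        return false;
    }
    for (int32_t i = 0; i < config->num_keywords; i++) {
        const float s = config->sensitivities[i];
        /* Written this way so that NaN is rejected as well. */
        if (!(s >= 0.0f && s <= 1.0f)) {
            return false;
        }
    }
    return true;
}
```

A higher sensitivity lowers the miss rate at the cost of more false alarms, so a check like this mainly catches out-of-range values and mismatched array lengths early, before they turn into an opaque initialization error on the target.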

Processing

Pass audio to Porcupine in frames (chunks). Porcupine processes 16-bit PCM audio sampled at 16 kHz. Each frame should have pv_porcupine_frame_length() samples.
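Microphone drivers rarely deliver audio in blocks that match the engine's frame length, so some re-chunking is usually needed. Here is a minimal sketch of one way to do it, assuming a fixed FRAME_LENGTH of 512 samples (real code should use the value returned by pv_porcupine_frame_length()). The frame_buffer_t type, its functions, and the count_frame callback are hypothetical names for illustration; in a real project the callback is where the frame would be passed to the engine. Under that assumption, one frame at 16 kHz covers 32 ms of audio and occupies 1024 bytes.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Assumption: fixed frame length for the sketch. Real code should query
 * pv_porcupine_frame_length() instead of hard-coding it. */
#define FRAME_LENGTH 512

/* Called once for every complete frame of 16-bit PCM samples. */
typedef void (*frame_callback_t)(const int16_t *frame, int32_t frame_length, void *context);

/* Hypothetical accumulator that collects samples until a frame is full. */
typedef struct {
    int16_t samples[FRAME_LENGTH];
    int32_t count; /* number of samples currently buffered */
} frame_buffer_t;

static void frame_buffer_init(frame_buffer_t *fb) {
    fb->count = 0;
}

/* Appends num_samples samples and invokes on_frame for each frame that
 * fills up. Returns the number of complete frames delivered. */
static int32_t frame_buffer_push(
        frame_buffer_t *fb,
        const int16_t *pcm,
        int32_t num_samples,
        frame_callback_t on_frame,
        void *context) {
    int32_t frames = 0;
    while (num_samples > 0) {
        const int32_t space = FRAME_LENGTH - fb->count;
        const int32_t n = (num_samples < space) ? num_samples : space;
        memcpy(&fb->samples[fb->count], pcm, (size_t) n * sizeof(int16_t));
        fb->count += n;
        pcm += n;
        num_samples -= n;
        if (fb->count == FRAME_LENGTH) {
            on_frame(fb->samples, FRAME_LENGTH, context);
            fb->count = 0;
            frames++;
        }
    }
    return frames;
}

/* Stand-in callback that only counts frames; context points to an int32_t. */
static void count_frame(const int16_t *frame, int32_t frame_length, void *context) {
    (void) frame;
    (void) frame_length;
    (*(int32_t *) context)++;
}
```

Leftover samples stay in the buffer between calls, so frame_buffer_push can be called from a DMA or I2S handler with whatever block size the driver produces. If the callback does heavy work, it is generally safer to hand frames to the main loop than to process them inside the interrupt.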

Cleanup

Release resources upon completion:

NLU on MCU?

It is nice to be able to detect static phrases on an MCU. But wouldn't it be more pragmatic to match what a voice assistant like Alexa can do for a smart switch or a toaster? After all, we need to understand complex voice commands in a well-defined domain. Rhino Speech-to-Intent and Picovoice SDK (wrapping Porcupine and Rhino together) accomplish this task. With Picovoice SDK, you can match or even exceed what Alexa can do for you on an MCU without any strings attached.

Watch the demo below, running offline on an Arm Cortex-M4: