Microcontrollers (MCUs) are still very attractive when cost and power consumption are top requirements. Recently, there has been a lot of activity from Amazon and Google to put Alexa and Google Assistant on microcontrollers. Semiconductor companies celebrated this move in hopes of gaining volume. Alas, the goal was limited to offloading wake word detection to the MCU and then using connectivity (BLE, Wi-Fi, or LoRa) to do the heavy lifting in the cloud. This approach defeats the purpose, as API-based pricing makes the low cost of the MCU irrelevant. Also, wireless connectivity typically draws more power than on-device computation! On top of that, Amazon and Google are reportedly curtailing their support for third parties, leaving everyone, including silicon companies, high and dry!

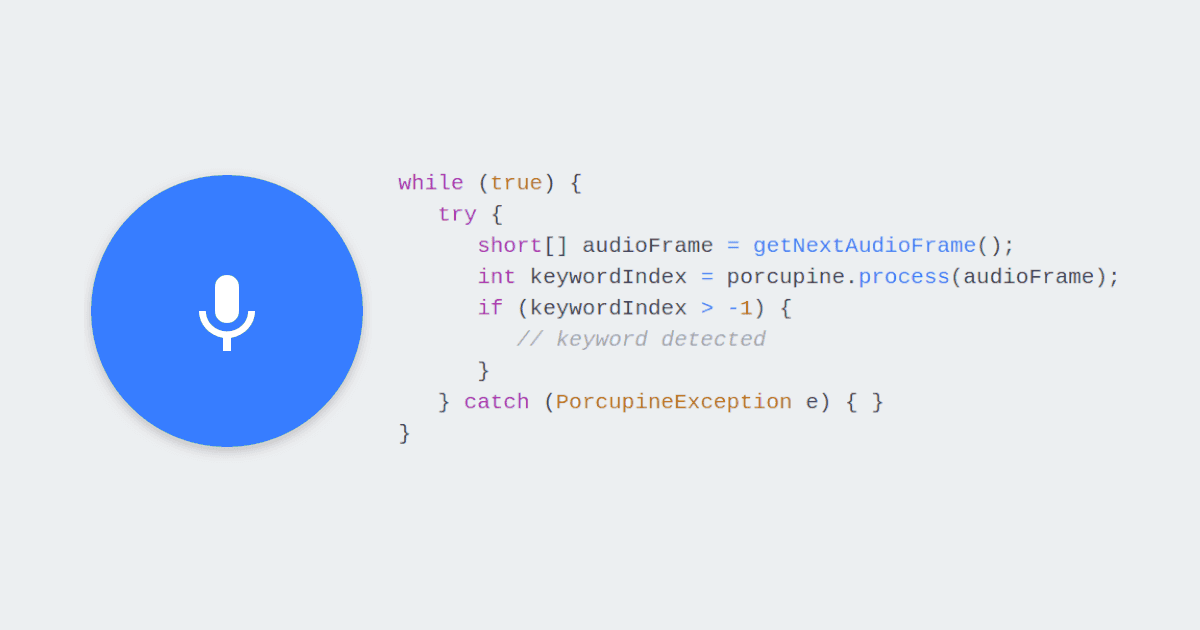

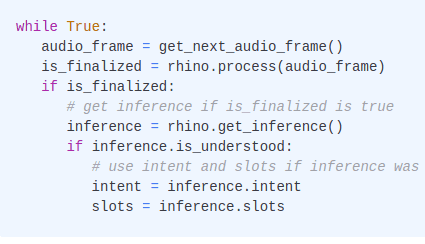

This is where Picovoice comes in! Picovoice offers the Porcupine Wake Word and Rhino Speech-to-Intent engines. Together, they can match what a voice assistant like Alexa does for a device like a smart thermostat. With them, your device can understand complex phrases like:

Oddly enough, according to Amazon's report, commands like these made up the majority of active Alexa use cases. People don't want to banter with their voice assistants! They want to get things done. Picovoice can deliver this experience on-device and offline, with higher accuracy and while respecting privacy. Below, you will learn how.
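Under the hood, the two engines form a two-stage loop: Porcupine listens for the wake word, and once it fires, Rhino listens for one follow-on command and infers its intent. A minimal sketch of that control flow as a state machine is below. The `stage_t` type and `next_stage()` function are hypothetical, the boolean inputs stand in for whatever each engine reports per audio frame, and no Picovoice API is called.

```c
#include <stdbool.h>

/* Two listening stages (hypothetical names, not the Picovoice API). */
typedef enum {
    STAGE_WAKE_WORD, /* audio frames go to the wake word engine (Porcupine) */
    STAGE_COMMAND    /* audio frames go to the speech-to-intent engine (Rhino) */
} stage_t;

/* Advance the pipeline by one audio frame. The two booleans are stand-ins
 * for what the engines would report for that frame. */
static stage_t next_stage(stage_t stage, bool wake_word_detected,
                          bool inference_finalized) {
    switch (stage) {
    case STAGE_WAKE_WORD:
        return wake_word_detected ? STAGE_COMMAND : STAGE_WAKE_WORD;
    case STAGE_COMMAND:
        /* Understood or not, return to waiting for the wake word once the
         * speech-to-intent engine has finalized its inference. */
        return inference_finalized ? STAGE_WAKE_WORD : STAGE_COMMAND;
    }
    return stage;
}
```

In this sketch, speech that is not preceded by the wake word never reaches the intent stage, which is what keeps the device from acting on conversation that was not addressed to it.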

For this tutorial, we use the STM32F407 Discovery board. We picked it because it sits at the low end of what is available, which showcases how efficient Picovoice's voice AI is. It also costs only $20.

Run the demo

You will need the dev board and STM32CubeIDE installed.

- Clone the Picovoice repository.

Open the project under

/demo/mcu/stm32f407/using STM32CubeIDE.You need to download the audio middleware. For licensing purposes, we can not have it on GitHub. You can download it from here. Once downloaded, unzip it. Find the

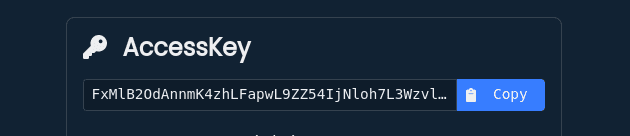

Middlewaresfolder and replace the emptyMiddlewaresfolder in the project with that.Sign up for Picovoice Console, go to dashboard, and copy your

AccessKey.

- In

main.c, insert yourAccessKeystring in the line:

Build and load the project into your board. The demo uses Serial Wire Viewer (SWV) to return log messages. Assure this works, and you can monitor it.
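A common STM32CubeIDE pattern for routing `printf` output over SWV is to override newlib's `_write()` so that each byte goes through the Cortex-M4 ITM via the CMSIS function `ITM_SendChar()`. The sketch below shows that pattern; it is not necessarily how the Picovoice demo does its logging. The host-side stub, `host_log`, and the `swv_write_host` name are hypothetical and exist only so the logic can be compiled and checked off-target.

```c
#include <stdint.h>

#if defined(__ARM_ARCH_7EM__)
/* Target build (Cortex-M4): the device header pulls in CMSIS core_cm4.h,
 * which provides ITM_SendChar(). */
#include "stm32f4xx.h"
/* newlib's printf() ends up calling _write(); defining it here overrides
 * the weak version that STM32CubeIDE typically generates in syscalls.c. */
#define SWV_WRITE _write
#else
/* Host build (hypothetical, for testing only): record characters instead
 * of sending them to the ITM. */
static char host_log[64];
static int host_log_len = 0;

static uint32_t ITM_SendChar(uint32_t ch) {
    if (host_log_len < (int)sizeof(host_log)) {
        host_log[host_log_len++] = (char)ch;
    }
    return ch;
}
#define SWV_WRITE swv_write_host
#endif

/* Send every byte of a write() call to ITM stimulus port 0, which is the
 * port ITM_SendChar() uses. */
int SWV_WRITE(int file, char *ptr, int len) {
    (void)file; /* stdout and stderr both go to the same port */
    for (int i = 0; i < len; i++) {
        ITM_SendChar((uint32_t)(uint8_t)ptr[i]);
    }
    return len;
}
```

On the board, SWV also has to be enabled in the debug configuration with the correct core clock, and port 0 enabled in the SWV ITM Data Console, before any output appears.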

Say "Picovoice, turn on the light in the living room."

You should see the following in the debug console:

The UUID is the unique identifier of the ST MCU on the board; you will need it to train custom models. The rest of the output shows that the firmware detected the wake phrase "Picovoice" using the Porcupine Wake Word engine and understood the follow-on voice command "turn on the light in the living room" using the Rhino Speech-to-Intent engine.
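The UUID printed by the demo comes from the MCU itself: STM32F4 parts carry a factory-programmed 96-bit unique device ID starting at address `0x1FFF7A10` (documented in ST's RM0090 reference manual). As a sketch, those 12 bytes could be read and formatted as hex as shown below. The helper names `uid_read` and `uid_to_hex` are hypothetical, and whether the demo prints the bytes in this order and format is an assumption, so always copy the UUID from the demo's own log output.

```c
#include <stdint.h>

#define UID_SIZE_BYTES 12 /* 96-bit unique device ID */

/* Base address of the STM32F4 unique device ID (RM0090); macro name is ours. */
#define STM32F4_UID_ADDR 0x1FFF7A10UL

/* Format the ID as 24 lowercase hex characters plus a terminating NUL. */
static void uid_to_hex(const uint8_t uid[UID_SIZE_BYTES],
                       char out[2 * UID_SIZE_BYTES + 1]) {
    static const char digits[] = "0123456789abcdef";
    for (int i = 0; i < UID_SIZE_BYTES; i++) {
        out[2 * i] = digits[uid[i] >> 4];       /* high nibble */
        out[2 * i + 1] = digits[uid[i] & 0x0F]; /* low nibble */
    }
    out[2 * UID_SIZE_BYTES] = '\0';
}

#if defined(__ARM_ARCH_7EM__)
/* On the board: copy the 12 factory-programmed bytes out of system memory. */
static void uid_read(uint8_t uid[UID_SIZE_BYTES]) {
    const volatile uint8_t *src = (const volatile uint8_t *)STM32F4_UID_ADDR;
    for (int i = 0; i < UID_SIZE_BYTES; i++) {
        uid[i] = src[i];
    }
}
#endif
```

On the board, calling `uid_read()` and then `uid_to_hex()` yields a printable string. Off-target, `uid_to_hex()` can be exercised on any 12-byte buffer.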

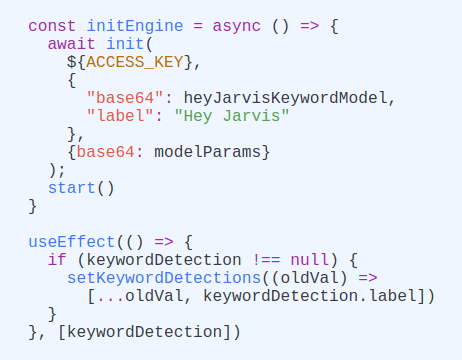

Train Custom Wake Word

- Sign up for Picovoice Console.

- Go to the

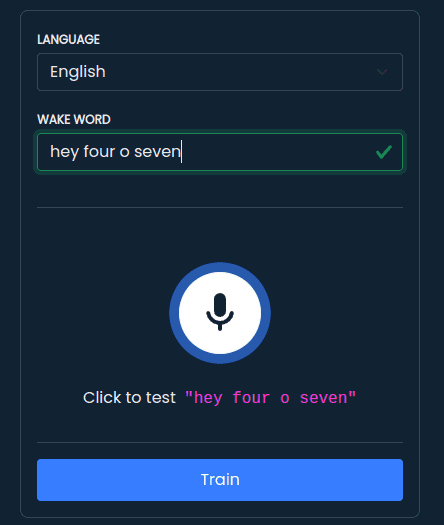

Porcupine Page - Select English as the language for your model

- Type in the phrase you want to build the model for. I choose

Hey Four O Sevenas an example. - Optionally, you can try it within the browser

- Once you are happy, click on the train button.

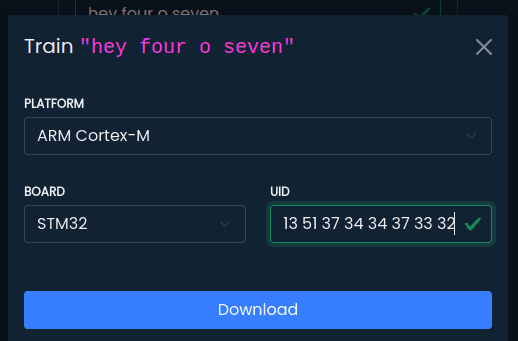

- Pick

Arm Cortex-Mas the platform - Select

STM32as your board type. - Enter the

UUIDfor your board. - Click on the Download button. You should have a

.zipfile in your download folder now.

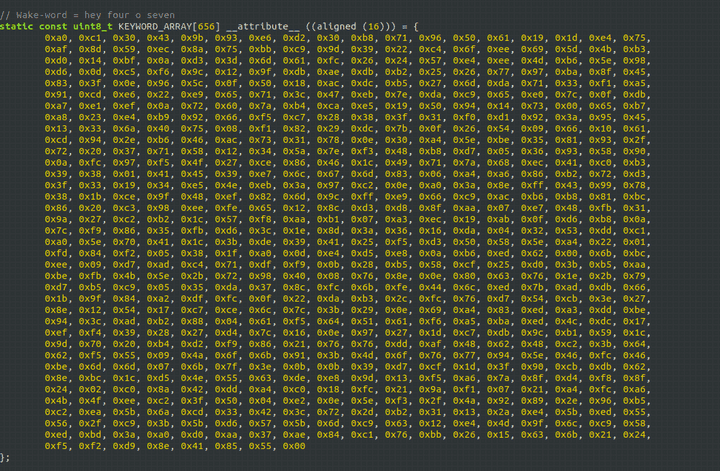

- Unzip it and open `pv_porcupine_params.h` in a text editor. Its `KEYWORD_ARRAY[]` contains your newly trained model!
- Copy the contents of this array into the `KEYWORD_ARRAY[]` variable inside `Inc/pv_params.h`.
- Build the project and load it on the board. Now you can say:

Train Custom Follow-on Voice Commands

Let's make a voice-enabled coffee maker!
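Rhino reports an intent plus slot values rather than a transcript, and the firmware decides what to do with them. The sketch below shows one way a coffee maker's firmware might turn such a result into an order. It is a minimal sketch under assumptions: the `slot_t` and `order_t` types, the `orderBeverage` intent name, the `beverage` and `size` slot names, and the default size are all hypothetical, not taken from the Coffee maker template or the Picovoice API.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical slot pair, mirroring the key/value shape of an inference. */
typedef struct {
    const char *key;
    const char *value;
} slot_t;

/* Hypothetical order the coffee maker would act on. */
typedef struct {
    const char *beverage;
    const char *size;
} order_t;

/* Look up a slot value by key; returns NULL when the user did not say it. */
static const char *find_slot(const slot_t *slots, size_t n, const char *key) {
    for (size_t i = 0; i < n; i++) {
        if (strcmp(slots[i].key, key) == 0) {
            return slots[i].value;
        }
    }
    return NULL;
}

/* Turn an understood "orderBeverage" inference (assumed intent name) into an
 * order. Returns false if the command should be ignored. */
static bool handle_inference(bool is_understood, const char *intent,
                             const slot_t *slots, size_t n, order_t *out) {
    if (!is_understood || strcmp(intent, "orderBeverage") != 0) {
        return false;
    }
    const char *beverage = find_slot(slots, n, "beverage");
    if (beverage == NULL) {
        return false; /* required slot missing: nothing to brew */
    }
    const char *size = find_slot(slots, n, "size");
    out->beverage = beverage;
    out->size = (size != NULL) ? size : "medium"; /* hypothetical default */
    return true;
}
```

Treating a missing optional slot as a default and a missing required slot as a failed command keeps the handler predictable when users leave details out.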

- On Picovoice Console, go to the Rhino page.
- Click New Context.
- Give the context a name of your choice, select English as the language, and choose Coffee maker as the template.
- Click Create Context.
- Once in the Rhino context editor, click the Download button. Follow steps similar to those for the custom wake word to download the model.
- Unzip the downloaded model. Find the header file and copy the contents of `CONTEXT_ARRAY[]` into the `CONTEXT_ARRAY[]` variable inside `Inc/pv_params.h`.
- Build the project and load it on the board. Now you can say:

You should see the following in the debug console:

Non-English models?

The demo project also supports French, German, Italian, Japanese, Korean, Chinese (Mandarin), Portuguese, and Spanish. Check the Porcupine Wake Word and Rhino Speech-to-Intent documentation for the full list of available languages.

Additional HW Support

We support four Arm Cortex-M microcontrollers (boards), selected for their popularity and to cover a range of compute and memory resources.

Adding support for a new microcontroller is expensive and entails ongoing costs, so we follow these rules:

- Prospects: Please contact the Picovoice Consulting Team. If the opportunity is a good fit, we will support your HW.

- Researchers and Hobbyists: Open a GitHub issue on the Picovoice repository. We will consider it if the issue gains significant traction.

- Semiconductor Companies: If you have a customer, we are happy to engage with them and support your HW as part of that engagement.