This article is a guide to adding on-device Speech Recognition to a Unity project.

When used casually, Speech Recognition usually refers solely to Speech-to-Text. However, Speech-to-Text is only one facet of Speech Recognition technology. The term also covers features such as Wake Word Detection, Voice Command Recognition, and Voice Activity Detection (VAD). In the context of Unity projects, Speech Recognition can be used to implement a Voice Interface.

Fortunately, Picovoice offers a few tools to help implement Voice Interfaces. If all you need is to recognize when specific words or phrases are said, use Porcupine Wake Word. If Voice Commands need to be understood, with the intent and its details (i.e., slot values) extracted, Rhino Speech-to-Intent is more suitable. Keep reading to see how to get started quickly with both.

Picovoice Unity SDKs have cross-platform support for Linux, macOS, Windows, Android and iOS!

Porcupine Wake Word

To integrate the

Porcupine Wake WordSDK into your Unity project, download and import the latest Porcupine Unity package.Sign up for a free Picovoice Console account and obtain your

AccessKey. TheAccessKeyis only required for authentication and authorization.Create a custom wake word model using Picovoice Console.

Download the

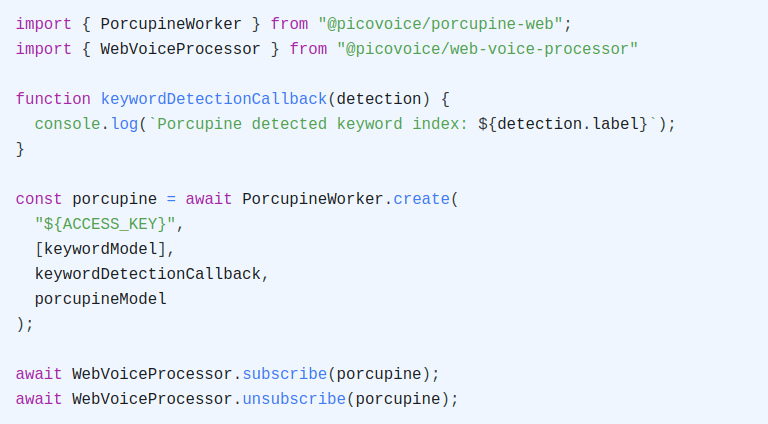

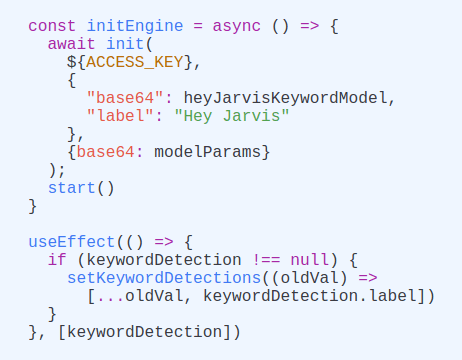

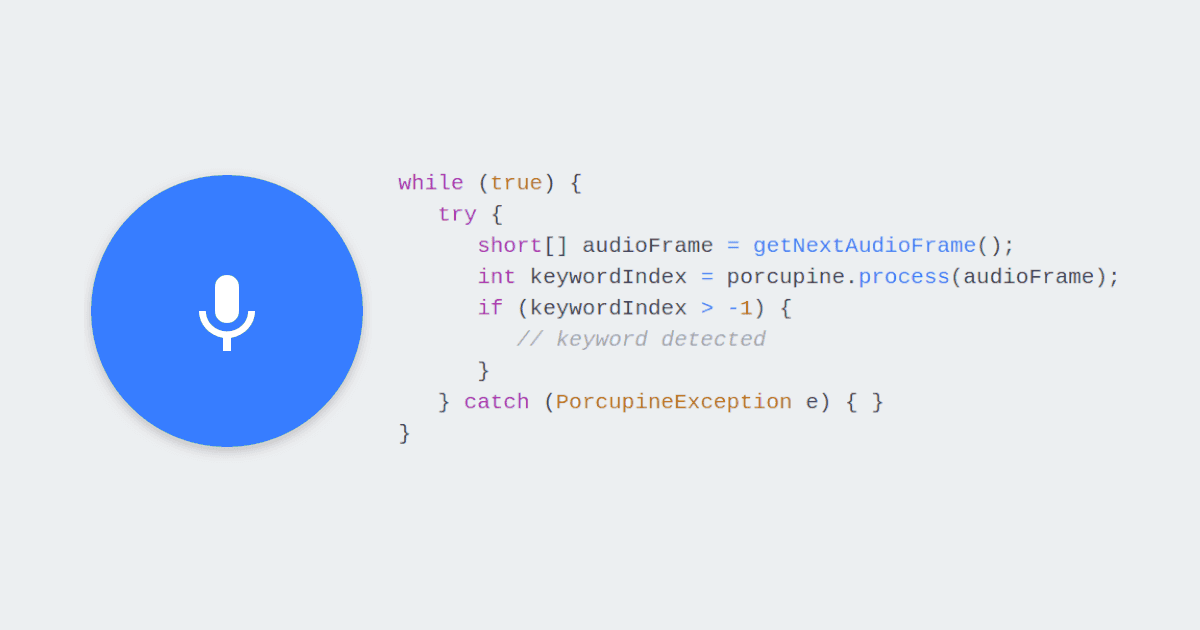

.ppnmodel file and copy it into your project'sStreamingAssetsfolder.Write a callback that takes action when a keyword is detected:

- Initialize the

Porcupine Wake Wordengine with the callback and the.ppnfile name (or path relative to theStreamingAssetsfolder):

- Start detecting:
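The callback, initialization, and start steps above can be sketched in a single Unity script. This is a minimal sketch rather than the official sample: it assumes the `Pv.Unity` namespace and the `PorcupineManager.FromKeywordPaths`, `Start`, and `Delete` members as documented for the Porcupine Unity SDK, so check the quick start guide for the exact signatures in your version. The class name `WakeWordListener`, the file name `my_wake_word.ppn`, and the `${ACCESS_KEY}` placeholder are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using UnityEngine;
using Pv.Unity; // assumed namespace of the imported Porcupine Unity package

public class WakeWordListener : MonoBehaviour
{
    // Placeholder: replace with the AccessKey from Picovoice Console.
    private const string AccessKey = "${ACCESS_KEY}";

    // Hypothetical file name, relative to the StreamingAssets folder.
    private const string KeywordFile = "my_wake_word.ppn";

    private PorcupineManager _porcupineManager;

    private void Start()
    {
        try
        {
            // Initialize the engine with the callback and the .ppn file.
            _porcupineManager = PorcupineManager.FromKeywordPaths(
                AccessKey,
                new List<string> { KeywordFile },
                OnWakeWordDetected);

            // Start capturing audio and detecting the wake word.
            _porcupineManager.Start();
        }
        catch (Exception ex)
        {
            Debug.LogError($"Porcupine failed to start: {ex.Message}");
        }
    }

    // Called by the SDK with the index of the detected keyword
    // (0 here, because only one keyword file was passed in).
    private void OnWakeWordDetected(int keywordIndex)
    {
        if (keywordIndex == 0)
        {
            Debug.Log("Wake word detected");
        }
    }

    private void OnDestroy()
    {
        // Release the resources held by the manager, if it was created.
        _porcupineManager?.Delete();
    }
}
```

Attaching a script like this to any active GameObject should be enough to try it out. The `keywordIndex` check matters once several `.ppn` files are passed in, since the index identifies which keyword was heard.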

For further details, visit the Porcupine Wake Word product page or refer to Porcupine's Unity SDK quick start guide.

Rhino Speech-to-Intent

To integrate the

Rhino Speech-to-IntentSDK into your Unity project, download and import the latest Rhino Unity package.Sign up for a free Picovoice Console account and obtain your

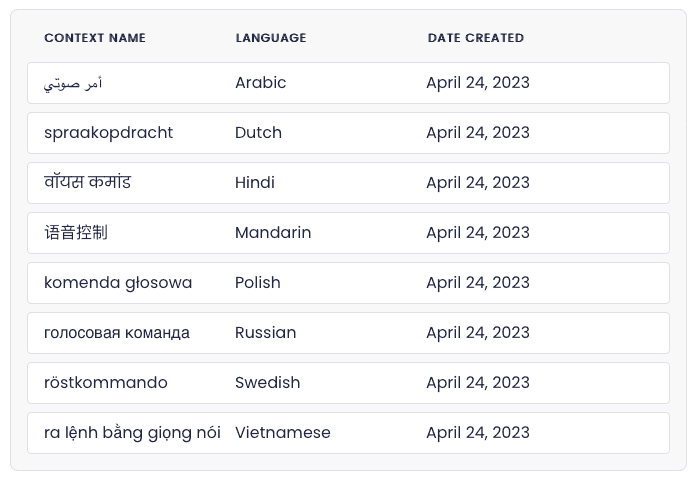

AccessKey. TheAccessKeyis only required for authentication and authorization.Create a custom context model using Picovoice Console.

Download the

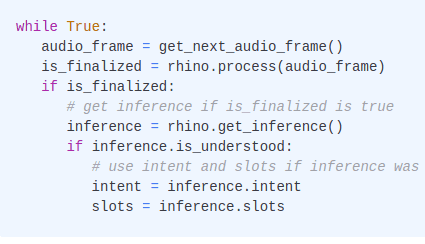

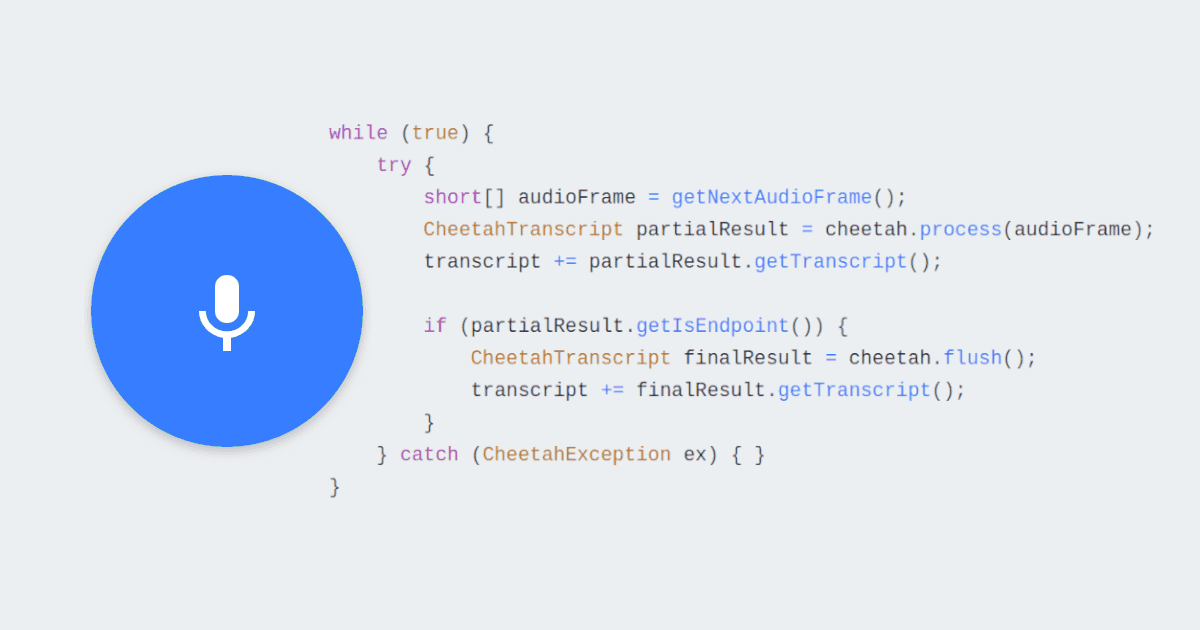

.rhnmodel file and copy it into your project'sStreamingAssetsfolder.Write a callback that takes action when a user's intent is inferred:

- Initialize the

Rhino Speech-to-Intentengine with the callback and the.rhnfile name (or path relative to theStreamingAssetsfolder):

- Start inferring:
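The Rhino steps above follow the same shape and can be sketched as one Unity script. Again, this is a minimal sketch under stated assumptions: it relies on the `Pv.Unity` namespace, `RhinoManager.Create`, `Process`, `Delete`, and the `Inference` members `IsUnderstood`, `Intent`, and `Slots` as documented for the Rhino Unity SDK, so verify them against the quick start guide for your version. The class name `VoiceCommandListener`, the file name `my_context.rhn`, and the `${ACCESS_KEY}` placeholder are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using UnityEngine;
using Pv.Unity; // assumed namespace of the imported Rhino Unity package

public class VoiceCommandListener : MonoBehaviour
{
    // Placeholder: replace with the AccessKey from Picovoice Console.
    private const string AccessKey = "${ACCESS_KEY}";

    // Hypothetical file name, relative to the StreamingAssets folder.
    private const string ContextFile = "my_context.rhn";

    private RhinoManager _rhinoManager;

    private void Start()
    {
        try
        {
            // Initialize the engine with the callback and the .rhn file.
            _rhinoManager = RhinoManager.Create(
                AccessKey,
                ContextFile,
                OnInferenceResult);

            // Start capturing audio and inferring intent.
            _rhinoManager.Process();
        }
        catch (Exception ex)
        {
            Debug.LogError($"Rhino failed to start: {ex.Message}");
        }
    }

    // Called by the SDK once an inference has been made.
    private void OnInferenceResult(Inference inference)
    {
        if (!inference.IsUnderstood)
        {
            Debug.Log("Command not understood");
            return;
        }

        Debug.Log($"Intent: {inference.Intent}");
        foreach (KeyValuePair<string, string> slot in inference.Slots)
        {
            Debug.Log($"Slot {slot.Key} = {slot.Value}");
        }
    }

    private void OnDestroy()
    {
        // Release the resources held by the manager, if it was created.
        _rhinoManager?.Delete();
    }
}
```

As far as the SDK documentation describes it, audio capture stops once an inference is delivered, so a game that listens for repeated commands would call `Process()` again, for example at the end of the callback or after a wake word is detected.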

For further details, visit the Rhino Speech-to-Intent product page or refer to Rhino's Unity SDK quick start guide.