In this article, we will learn how to perform Wake Word Detection and Voice Command Detection in React.

Porcupine Wake Word recognizes specific words or phrases, and Rhino Speech-to-Intent understands voice commands and extracts the intent along with its details.

Porcupine Wake Word

- Install the Porcupine Wake Word React SDK using npm: `npm install @picovoice/porcupine-react @picovoice/web-voice-processor`

- Sign up for a free Picovoice Console account and copy your `AccessKey`, which handles authentication and authorization.

- Create your custom wake word model using Picovoice Console.

- Add the Porcupine model and the Wake Word model to the project by either:

  - copying the model files to the project's public directory, or
  - creating a base64 string of each model using the `pvbase64` script included in the package.

- Create an object containing the Porcupine model and Wake Word engine model options:

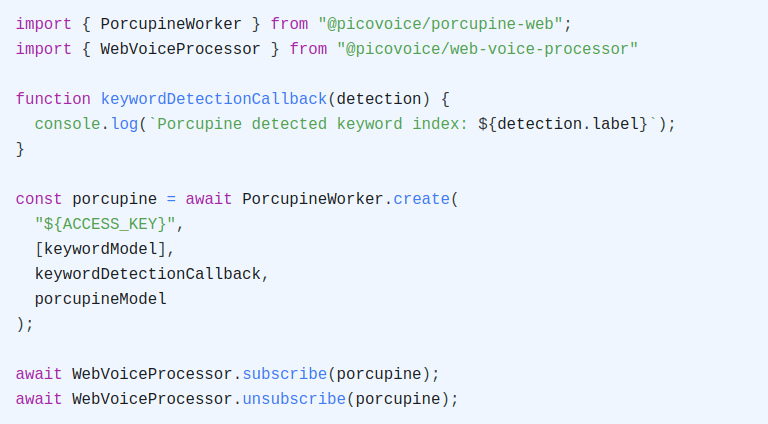
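As a rough sketch of what such an options object can look like when the model files sit in the public directory: the type names, file names, and label below are placeholders, and the `publicPath`, `base64`, and `label` field names are assumed to match the SDK's quick start, so check them against the version you install. The `hasSingleSource` helper is not part of the SDK; it only illustrates the "path or base64" choice from the previous step.

```typescript
// Local stand-in types, assumed to mirror the option shapes described in the
// Porcupine React quick start. Verify field names against the installed SDK.
interface ModelSource {
  publicPath?: string; // path relative to the app's public directory
  base64?: string; // alternative: model embedded as a base64 string
}

interface WakeWordOptions extends ModelSource {
  label: string; // name reported back when the wake word is detected
}

// Hypothetical file names; use the files downloaded from Picovoice Console.
const porcupineKeyword: WakeWordOptions = {
  publicPath: "/hey_app.ppn",
  label: "Hey App",
};

const porcupineModel: ModelSource = {
  publicPath: "/porcupine_params.pv",
};

// Illustrative check (not an SDK function): a model should come from
// exactly one place, either a public path or a base64 string.
function hasSingleSource(model: ModelSource): boolean {
  return (model.publicPath !== undefined) !== (model.base64 !== undefined);
}
```

Running `hasSingleSource` on both objects before initialization is a cheap way to catch a missing or duplicated model source early.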

- Create an instance of the Wake Word engine using the model options in the previous step:

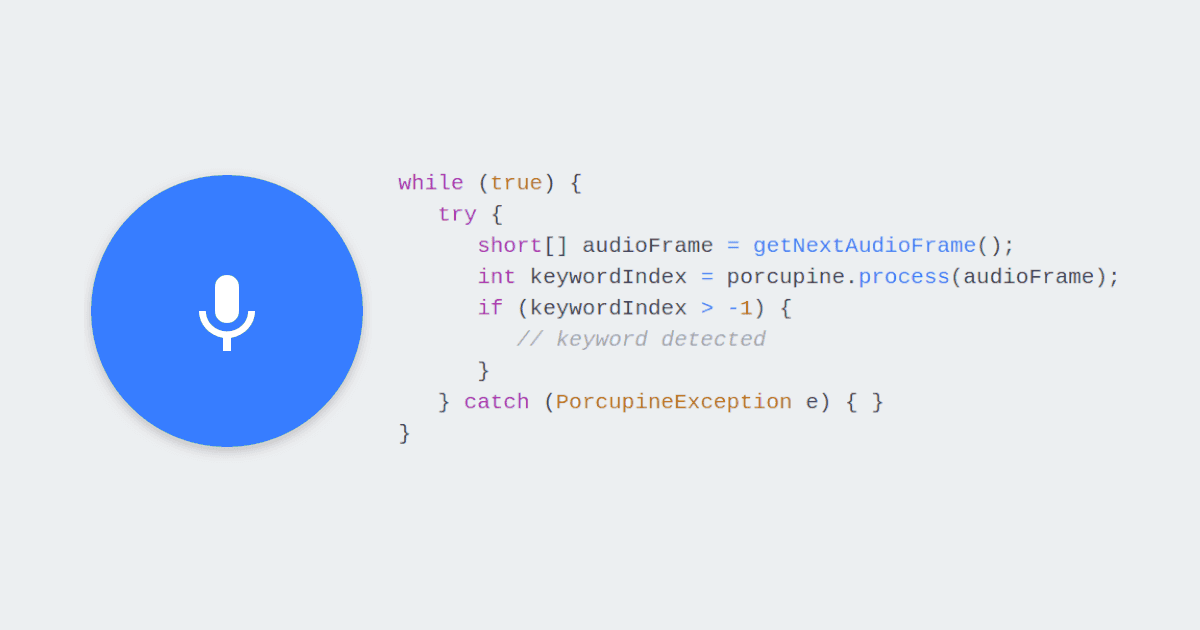
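The initialization step can be sketched without React by passing the engine in as a parameter. The `WakeWordEngine` interface is an assumption: it models only the `init` function that the SDK's `usePorcupine()` hook is understood to return, and `setUpWakeWord` is a hypothetical helper, not an SDK call.

```typescript
// Assumed subset of the values returned by the SDK's usePorcupine() hook.
// In a component these would come from the hook rather than a parameter.
interface WakeWordEngine {
  init(accessKey: string, keyword: object, model: object): Promise<void>;
}

// Hypothetical helper: initializes the engine and returns an error message
// instead of throwing, so a component can render it. Returns null on success.
async function setUpWakeWord(
  engine: WakeWordEngine,
  accessKey: string,
  keyword: object,
  model: object,
): Promise<string | null> {
  if (accessKey.trim() === "") {
    return "AccessKey is missing";
  }
  try {
    await engine.init(accessKey, keyword, model);
    return null;
  } catch (e) {
    // The real hook may surface failures through its own error state instead.
    return e instanceof Error ? e.message : String(e);
  }
}
```

Keeping the `AccessKey` check outside the SDK call means an empty key is reported without touching the engine at all.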

- Start processing audio frames by calling the `start` method.

Once started, the `isListening` state is set to `true`, and the `keywordDetection` state is updated whenever the wake word is detected.
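To make the state flow concrete, here is a small sketch that turns those state values into a status message a component could render. The `WakeWordState` shape is an assumption based on the state names mentioned above (`isListening`, `keywordDetection`) plus an `isLoaded` flag, and `wakeWordStatus` is a hypothetical helper.

```typescript
// Assumed shape of the state exposed by the hook; the detection object is
// assumed to carry the label configured for the wake word.
interface WakeWordState {
  isLoaded: boolean;
  isListening: boolean;
  keywordDetection: { index: number; label: string } | null;
}

// Hypothetical helper: maps the current state to a message for the UI.
function wakeWordStatus(state: WakeWordState): string {
  if (!state.isLoaded) {
    return "Engine not initialized";
  }
  if (!state.isListening) {
    return "Not listening";
  }
  if (state.keywordDetection !== null) {
    return `Detected "${state.keywordDetection.label}"`;
  }
  return "Listening for the wake word";
}
```

In a component, the same function could be called on every render, since the hook's state changes trigger a re-render on their own.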

For more information, check Porcupine Wake Word's product page or refer to Porcupine's React SDK quick start guide.

Rhino Speech-to-Intent

- Install the Rhino Speech-to-Intent React SDK using npm: `npm install @picovoice/rhino-react @picovoice/web-voice-processor`

- Sign up for a free Picovoice Console account and copy your `AccessKey`, which handles authentication and authorization.

- Create your Context using Picovoice Console.

- Add the Rhino model and the Context model to the project by either:

  - copying the model files to the project's public directory, or
  - creating a base64 string of each model using the `pvbase64` script included in the package.

- Create an object containing the Rhino model and Context model options:
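A possible shape for these options, this time mixing the two loading styles: the Context is served from the public directory and the Rhino model is embedded as base64. The type name, file name, and placeholder string are illustrative, and the `publicPath` and `base64` field names are assumed to match the SDK's quick start.

```typescript
// Local stand-in type, assumed to match the model option shape in the Rhino
// React quick start. Check the field names against the SDK version you use.
interface RhinoModelSource {
  publicPath?: string; // file served from the public directory
  base64?: string; // or the model embedded as a base64 string
}

// Placeholder for the string exported by the file that pvbase64 generates.
const rhinoParamsBase64 = "<base64 output of pvbase64>";

// Hypothetical Context file name; use the .rhn file from Picovoice Console.
const rhinoContext: RhinoModelSource = { publicPath: "/coffee_maker.rhn" };

// The engine model is loaded from the embedded string instead of a file.
const rhinoModel: RhinoModelSource = { base64: rhinoParamsBase64 };
```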

- Create an instance of the Speech-to-Intent engine using the model options in the previous step:

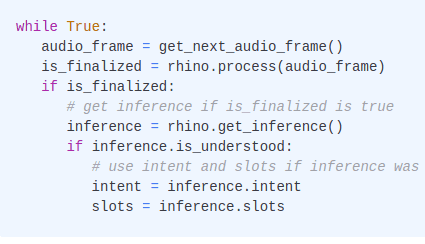
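React effects can run more than once, so it can help to guard initialization. The sketch below assumes the SDK's `useRhino()` hook exposes an `isLoaded` flag and an `init` function with this argument order; the `IntentEngine` interface and `ensureRhinoLoaded` helper are hypothetical names used only for illustration.

```typescript
// Assumed subset of the values returned by the SDK's useRhino() hook.
interface IntentEngine {
  isLoaded: boolean;
  init(accessKey: string, context: object, model: object): Promise<void>;
}

// Hypothetical helper: calls init() only when the engine is not loaded yet.
// Returns true if init() was called, false if it was skipped.
async function ensureRhinoLoaded(
  engine: IntentEngine,
  accessKey: string,
  context: object,
  model: object,
): Promise<boolean> {
  if (engine.isLoaded) {
    return false; // already initialized, nothing to do
  }
  await engine.init(accessKey, context, model);
  return true;
}
```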

- Process audio frames by calling the `process` method.

Rhino will listen to and process frames of microphone audio until it has finalized an inference, which it returns via the `inference` variable. Rhino enters a paused state once the inference is finalized. From the paused state, call `process` again to start another inference.
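Once an inference arrives, the application still has to decide what to do with it. The following sketch formats an inference for display; the `IntentInference` fields (`isFinalized`, `isUnderstood`, `intent`, `slots`) are assumed from the SDK documentation, and the intent and slot names in the usage note are made up.

```typescript
// Assumed shape of the inference value; verify against the SDK's own types.
interface IntentInference {
  isFinalized: boolean;
  isUnderstood?: boolean;
  intent?: string;
  slots?: Record<string, string>;
}

// Hypothetical helper: turns an inference into a short, readable summary.
function summarizeInference(inference: IntentInference | null): string {
  if (inference === null || !inference.isFinalized) {
    return "Listening...";
  }
  if (!inference.isUnderstood) {
    // Rhino is paused here; calling process() again starts a new inference.
    return "Command not understood";
  }
  const slots = inference.slots ?? {};
  const details = Object.keys(slots)
    .map((key) => `${key}=${slots[key]}`)
    .join(", ");
  const intent = inference.intent ?? "unknown";
  return details === "" ? intent : `${intent} (${details})`;
}
```

For example, an understood inference with intent `orderDrink` and slots `size: "large"` and `drink: "latte"` is summarized as `orderDrink (size=large, drink=latte)`.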

For more information, check Rhino Speech-to-Intent's product page or refer to Rhino's React SDK quick start guide.