AI Assistants and AI Agents are changing our lives. A Large Language Model (LLM) is the standard component of modern GenAI assistants. A voice-based LLM assistant can provide a more natural, efficient, and convenient user experience. Voice Agents also unlock use cases in call centers and customer support. However, Voice Assistants require additional voice AI models to work harmoniously with the LLM brain to create a satisfactory end-to-end user experience.

The recent GPT-4o audio demo showed that an LLM-powered voice assistant done correctly can create an awe-inspiring experience. Unfortunately, GPT-4o's audio feature is not publicly available. Instead of busy waiting, we will show you how to build a voice assistant of the same caliber right now in fewer than 400 lines of Python using Picovoice's on-device voice AI and local LLM stacks.

Why use Picovoice's tech stack instead of OpenAI? Picovoice is on-device, meaning voice processing and LLM inference are performed locally without the user's data traveling to a third-party API. Products built with Picovoice are private by design, compliant (GDPR, HIPAA, ...), and real-time, with no unreliable network (API) latency.

First, Let's Play!

Before building an LLM-powered voice assistant, let's check out the experience. Picovoice Python SDKs are cross-platform and can run on CPU and GPU across Linux, macOS, Windows, and Raspberry Pi.

Picovoice also supports mobile devices (Android and iOS) and all modern web browsers (Chrome, Safari, Edge, and Firefox).

Phi-2 on Raspberry Pi

The video below shows Picovoice's LLM-powered voice assistant running Microsoft's Phi-2 model on a Raspberry Pi 5. This enables use cases that can't rely on connectivity, such as mission-critical automotive or industrial applications.

Llama-3-8B-Instruct on CPU

The video below shows Picovoice's LLM assistant running Meta's Llama-3-8B-Instruct model on a consumer-grade CPU (AMD Ryzen 7 5700X 8-Core), enabling enterprise use cases that call for compliance and security to run on laptops, workstations, or on-premises.

Mixtral-8x7B-Instruct-v0.1 on CPU

The video below shows Picovoice's LLM assistant running Mistral AI's Mixtral-8x7B-Instruct-v0.1 model on a consumer-grade CPU (AMD Ryzen 7 5700X 8-Core). This enables enterprise use cases in healthcare, finance, and legal, where privacy and compliance are paramount.

Llama-3-70B-Instruct on GPU

Pinnacle open-weight models like Meta's Llama-3-70B-Instruct approach (and in some cases surpass) API alternatives in performance but typically require a cluster of GPUs to run. Picovoice can run Llama-3-70B-Instruct on a single consumer-grade RTX 4090 GPU.

Anatomy of an LLM Voice Assistant

There are four things an LLM-powered AI assistant needs to do:

- Listen for the user to utter the wake word.

- Recognize the request (question or command) the user utters.

- Generate a response to the request using an LLM.

- Synthesize speech from the LLM's text response.
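The four jobs can be wired together as one loop per conversational turn. The sketch below is a hypothetical, SDK-agnostic skeleton: `run_turn` and its four callables are illustrative names, not Picovoice APIs, and the concrete engines appear in Soup to Nuts. It shows the ordering and the fact that the LLM output is handed to speech synthesis piece by piece.

```python
from typing import Callable, Iterable, Iterator, List


def run_turn(
    frames: Iterator[List[int]],
    detect_wake_word: Callable[[List[int]], bool],
    transcribe: Callable[[Iterator[List[int]]], str],
    generate: Callable[[str], Iterable[str]],
    speak: Callable[[str], None],
) -> str:
    """Run one wake word -> request -> response cycle and return the transcribed request."""
    # 1. Wake word: consume audio frames until the wake word is heard.
    for frame in frames:
        if detect_wake_word(frame):
            break
    else:
        return ""  # audio ran out without an activation
    # 2. Speech-to-text: keep reading the same audio stream for the request.
    request = transcribe(frames)
    # 3. + 4. Hand each LLM token to text-to-speech as soon as it is produced.
    for token in generate(request):
        speak(token)
    return request
```

Because `frames` is a single iterator, speech-to-text picks up exactly where wake word detection stopped, so no audio between the two stages is lost.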

1. Wake Word

A Wake Word Engine is voice AI software that listens for a single phrase. Every time you say "Alexa," "Hey Siri," or "Hey Google," you activate a wake word engine.

Wake Word Detection is also known as Keyword Spotting, Hotword Detection, or Voice Activation.

2. Streaming Speech-to-Text

Once we know the user is talking to us, we must understand what they say. This is done using Speech-to-Text. For latency-sensitive applications, we use the real-time variant of speech-to-text, called Streaming Speech-to-Text. A Streaming Speech-to-Text engine transcribes speech as the user talks, whereas a batch speech-to-text engine (e.g., OpenAI's Whisper) waits for the user to finish before processing.

Speech-to-Text (STT) is also known as Automatic Speech Recognition (ASR). Streaming Speech-to-Text is also known as Real-Time Speech-to-Text.

3. LLM Inference

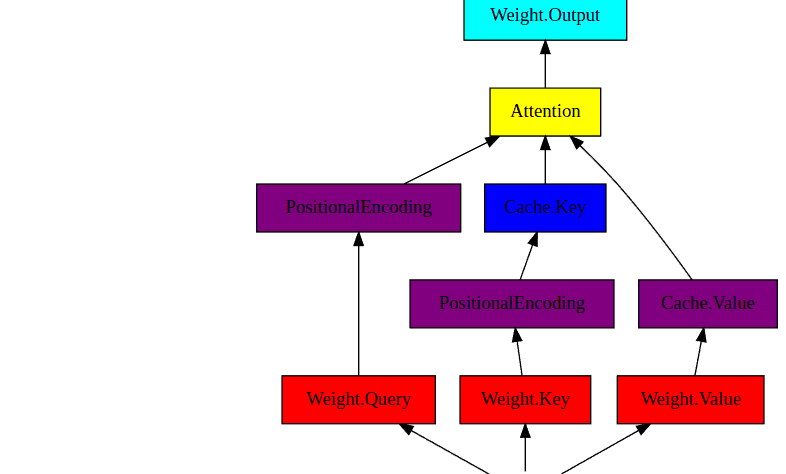

Once the user's request is available as text, we run the LLM to generate the completion. The LLM generates its response piece by piece (token by token). We use this property to run the LLM in parallel with speech synthesis and reduce latency (more on this later). LLM inference is very compute-intensive, so running it on-device requires techniques that reduce memory and compute requirements. A standard method is quantization (compression).
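To make "in parallel" concrete, here is a minimal producer/consumer sketch using Python's standard `threading` and `queue` modules. `stream_llm_to_tts` is a hypothetical helper and the token iterable stands in for an LLM; it illustrates the general pattern, not Picovoice's internal implementation. The key point is that synthesis of the first token can begin while later tokens are still being generated.

```python
import queue
import threading
from typing import Callable, Iterable

_DONE = object()  # sentinel: the LLM has finished generating


def stream_llm_to_tts(tokens: Iterable[str], synthesize: Callable[[str], None]) -> None:
    """Generate and synthesize concurrently via a thread-safe queue."""
    pending: queue.Queue = queue.Queue()

    def tts_worker() -> None:
        # Consumer: synthesize each piece of text as soon as it is queued.
        while True:
            item = pending.get()
            if item is _DONE:
                break
            synthesize(item)

    worker = threading.Thread(target=tts_worker)
    worker.start()
    # Producer: in a real assistant this loop is the LLM decoding token by token.
    for token in tokens:
        pending.put(token)
    pending.put(_DONE)  # no more text is coming
    worker.join()  # wait until the last token has been synthesized
```

A single consumer reading a FIFO queue preserves token order, which matters because speech must come out in the same order the text was generated.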

Are you a deep learning researcher? Learn how picoLLM Compression deeply quantizes LLMs while minimizing loss by optimally allocating bits across and within weights.

Are you a software engineer? Learn how picoLLM Inference Engine runs x-bit quantized Transformers on CPU and GPU across Linux, macOS, Windows, iOS, Android, Raspberry Pi, and Web.

4. Streaming Text-to-Speech

A Text-to-Speech (TTS) engine accepts text and synthesizes the corresponding speech signal. Since LLMs generate responses token by token as a stream, we prefer a TTS engine that accepts a stream of text inputs, which lowers latency. We call this Streaming Text-to-Speech.
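For contrast, a TTS engine that only accepts complete text forces the application to buffer tokens first. A common workaround is sentence-level chunking, sketched below with a hypothetical `chunk_sentences` generator. This is a swapped-in technique for illustration, not how Orca works internally; it shows why non-streaming TTS adds latency: no audio can start until a whole sentence has been generated.

```python
from typing import Iterable, Iterator

SENTENCE_ENDINGS = (".", "!", "?")


def chunk_sentences(tokens: Iterable[str]) -> Iterator[str]:
    """Group streamed LLM tokens into sentence-sized chunks for a non-streaming TTS."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Emit once the accumulated text ends a sentence.
        if buffer.rstrip().endswith(SENTENCE_ENDINGS):
            yield buffer.strip()
            buffer = ""
    if buffer.strip():
        yield buffer.strip()  # flush any trailing partial sentence
```

For example, the tokens `["Hi", " there", ".", " How", " are", " you", "?"]` yield `"Hi there."` and then `"How are you?"`; a streaming TTS could instead start speaking after `"Hi"`.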

Soup to Nuts

This section explains how to code a local LLM-powered voice assistant in Python. The entire script is available as the LLM-powered voice assistant recipe in the Picovoice Cookbook GitHub repository.

1. Voice Activation

Install Picovoice Porcupine Wake Word Engine with `pip3 install pvporcupine`.

Import the module, initialize an instance of the wake word engine, and start processing audio in real time:
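Below is a minimal sketch of this step, assuming the `pvporcupine` package and Picovoice's `pvrecorder` package for microphone capture (`pip3 install pvrecorder`). `wait_for_wake_word` is a hypothetical helper; the SDK imports live inside `main()` so the helper can be exercised without a microphone. Check the Porcupine API reference for the exact parameters of your SDK version.

```python
from typing import Callable, List


def wait_for_wake_word(
    read_frame: Callable[[], List[int]],
    process: Callable[[List[int]], int],
) -> None:
    """Block until the wake word engine reports a detection."""
    while True:
        # process() returns the index of the detected keyword, or -1 if none was heard
        if process(read_frame()) >= 0:
            return


def main() -> None:
    # SDK imports live here so the helper above runs without Porcupine installed.
    import pvporcupine
    from pvrecorder import PvRecorder

    porcupine = pvporcupine.create(
        access_key="$ACCESS_KEY",
        keyword_paths=["$KEYWORD_PATH"],
    )
    # Porcupine consumes fixed-size frames of 16-bit PCM audio.
    recorder = PvRecorder(frame_length=porcupine.frame_length)
    recorder.start()
    try:
        wait_for_wake_word(recorder.read, porcupine.process)
        print("[wake word detected]")
    finally:
        recorder.stop()
        recorder.delete()
        porcupine.delete()
```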

Replace $ACCESS_KEY with your AccessKey from Picovoice Console and $KEYWORD_PATH with the absolute path to the file containing the parameters of the keyword model you trained on Picovoice Console.

A remarkable feature of Porcupine is that it lets you train your model by just providing the text!

2. Speech Recognition

Install Picovoice Cheetah Streaming Speech-to-Text Engine with `pip3 install pvcheetah`.

Import the module, initialize an instance of the streaming speech-to-text engine, and start transcribing audio in real time:
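A minimal sketch of this step, assuming `pvcheetah`'s `create(access_key=..., endpoint_duration_sec=...)`, a `process(pcm)` that returns a partial transcript plus an endpoint flag, and a `flush()` that returns any remaining text. `transcribe_until_endpoint` is a hypothetical helper, and the 1.0 s endpoint value is only an example.

```python
from typing import Callable, List, Tuple


def transcribe_until_endpoint(
    read_frame: Callable[[], List[int]],
    process: Callable[[List[int]], Tuple[str, bool]],
    flush: Callable[[], str],
) -> str:
    """Stream audio frames into the STT engine until it detects the end of the utterance."""
    transcript = ""
    while True:
        # process() returns the newly transcribed text and whether an endpoint was reached
        partial, is_endpoint = process(read_frame())
        transcript += partial
        if is_endpoint:
            # flush() returns any text still buffered inside the engine
            return transcript + flush()


def main() -> None:
    # SDK imports live here so the helper above runs without Cheetah installed.
    import pvcheetah
    from pvrecorder import PvRecorder

    cheetah = pvcheetah.create(
        access_key="$ACCESS_KEY",
        endpoint_duration_sec=1.0,  # $ENDPOINT_DURATION_SEC; 1.0 s is only an example
    )
    recorder = PvRecorder(frame_length=cheetah.frame_length)
    recorder.start()
    try:
        request = transcribe_until_endpoint(recorder.read, cheetah.process, cheetah.flush)
        print(request)
    finally:
        recorder.stop()
        recorder.delete()
        cheetah.delete()
```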

Replace $ACCESS_KEY with your AccessKey from Picovoice Console and $ENDPOINT_DURATION_SEC with the duration of silence, in seconds, that marks the end of the user's utterance. A longer duration gives the user more time to pause or think in the middle of their request, but it also increases the perceived delay.

3. Response Generation

Install picoLLM Inference Engine with `pip3 install picollm`.

Import the module, initialize an instance of the LLM inference engine, create a dialog helper object, and start responding to the user's prompts:
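A minimal sketch of this step, assuming the `picollm` package's `create`, `get_dialog`, and `generate(prompt=..., stream_callback=...)` interface, with the dialog object's `add_human_request`, `prompt`, and `add_llm_response` methods. `respond` is a hypothetical helper, and typed `input()` prompts stand in for Cheetah transcripts so the example runs on its own.

```python
from typing import Callable


def respond(pllm, dialog, prompt: str, on_token: Callable[[str], None]) -> str:
    """Add the user's prompt to the dialog, stream the reply piece by piece, and record it."""
    dialog.add_human_request(prompt)
    # stream_callback is invoked with each new piece of text as the LLM produces it
    res = pllm.generate(prompt=dialog.prompt(), stream_callback=on_token)
    dialog.add_llm_response(res.completion)  # keep history for multi-turn context
    return res.completion


def main() -> None:
    # SDK import lives here so the helper above runs without picoLLM installed.
    import picollm

    pllm = picollm.create(access_key="$ACCESS_KEY", model_path="$LLM_MODEL_PATH")
    dialog = pllm.get_dialog()  # formats the conversation history for the model
    try:
        while True:
            prompt = input("> ")
            respond(pllm, dialog, prompt, on_token=lambda t: print(t, end="", flush=True))
            print()
    finally:
        pllm.release()
```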

Replace $ACCESS_KEY with your AccessKey from Picovoice Console and $LLM_MODEL_PATH with the absolute path to the picoLLM model downloaded from Picovoice Console.

Note that the LLM's .generate function delivers the response in pieces (i.e., token by token) through its stream_callback input argument. We pass each token to the Streaming Text-to-Speech engine as soon as it becomes available, and when .generate returns, we notify the Streaming Text-to-Speech engine that there is no more text so it can flush any remaining synthesized speech.

4. Speech Synthesis

Install Picovoice Orca Streaming Text-to-Speech Engine with `pip3 install pvorca`.

Import the module, initialize an instance of Orca, and start synthesizing audio in real time:
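A minimal sketch, assuming `pvorca`'s streaming interface: `stream_open()` returns a stream whose `synthesize(text)` returns a chunk of 16-bit PCM samples or `None` (when it needs more text), and whose `flush()` returns any remaining audio. `speak_stream` and its `play` callback are hypothetical; audio playback itself is left out, and the hard-coded tokens stand in for picoLLM's `stream_callback` output.

```python
from typing import Callable, Iterable, List, Optional, Sequence


def speak_stream(
    tokens: Iterable[str],
    synthesize: Callable[[str], Optional[Sequence[int]]],
    flush: Callable[[], Optional[Sequence[int]]],
    play: Callable[[Sequence[int]], None],
) -> None:
    """Feed text pieces to a streaming TTS and play audio as soon as it is ready."""
    for token in tokens:
        pcm = synthesize(token)  # may be None until enough text has accumulated
        if pcm:
            play(pcm)
    pcm = flush()  # no more text: synthesize whatever is still buffered
    if pcm:
        play(pcm)


def main() -> None:
    # SDK import lives here so the helper above runs without Orca installed.
    import pvorca

    orca = pvorca.create(access_key="$ACCESS_KEY")
    stream = orca.stream_open()
    chunks: List[Sequence[int]] = []
    try:
        speak_stream(["Hello", " from", " Orca", "."], stream.synthesize, stream.flush, chunks.append)
        print(f"synthesized {sum(len(c) for c in chunks)} samples at {orca.sample_rate} Hz")
    finally:
        stream.close()
        orca.delete()
```

In the full assistant, `play` would write each chunk to a speaker, so audio starts while the LLM is still generating.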

Replace $ACCESS_KEY with your AccessKey from Picovoice Console.

What's Next?

The voice assistant we've created above is sufficient but basic. The Picovoice platform allows you to add many more features to it. Below is a wish list that we would work on if we had spare engineering capacity.

Personalization

When interacting with the world, humans simultaneously use information about what is said and who said it. A voice-based LLM is not exempt if we want it to mimic intelligence, even artificially! Personalization can be achieved using the Picovoice Eagle Speaker Recognition Engine.
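A minimal sketch of how speaker identity could feed the LLM, assuming a speaker recognizer (such as Eagle) produces one similarity score per enrolled speaker. `identify_speaker`, `personalize_prompt`, the 0.5 threshold, and the prompt wording are hypothetical illustrations; the Eagle SDK calls themselves are deliberately not shown.

```python
from typing import Optional, Sequence


def identify_speaker(
    scores: Sequence[float],
    names: Sequence[str],
    threshold: float = 0.5,  # hypothetical cut-off; tune on real data
) -> Optional[str]:
    """Return the enrolled speaker with the highest score, or None if nobody clears the threshold."""
    if not scores:
        return None
    best = max(range(len(scores)), key=lambda i: scores[i])
    return names[best] if scores[best] >= threshold else None


def personalize_prompt(request: str, speaker: Optional[str]) -> str:
    """Tell the LLM who is talking so it can tailor the response."""
    who = speaker if speaker is not None else "an unrecognized user"
    return f"The following request is from {who}: {request}"
```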

Multi-Turn Conversations

The voice assistant above requires the user to utter the wake word for each turn of interaction, which can become cumbersome for long-running multi-turn conversations. We can use Picovoice Cobra Voice Activity Detection Engine to remove the need to utter the wake word after the initial activation and speed up follow-up interactions.
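A sketch of the follow-up check, assuming `pvcobra`, whose `process(pcm)` returns the probability that a frame contains voice. After the assistant finishes speaking, it listens for a short window; if the user starts talking, it can skip the wake word and go straight to speech-to-text. `follow_up_started`, the 0.7 threshold, the three-frame run, and the 5-second window are hypothetical choices.

```python
from typing import Iterable


def follow_up_started(
    voice_probabilities: Iterable[float],
    threshold: float = 0.7,
    min_voiced_frames: int = 3,
) -> bool:
    """Decide whether the user started speaking during the follow-up window.

    Requires several consecutive voiced frames so a single noisy frame
    does not reopen the conversation.
    """
    run = 0
    for p in voice_probabilities:
        run = run + 1 if p >= threshold else 0
        if run >= min_voiced_frames:
            return True
    return False


def main() -> None:
    # SDK imports live here so the helper above runs without Cobra installed.
    import pvcobra
    from pvrecorder import PvRecorder

    cobra = pvcobra.create(access_key="$ACCESS_KEY")
    recorder = PvRecorder(frame_length=cobra.frame_length)
    # Each frame lasts frame_length / sample_rate seconds; listen for about 5 s.
    window_frames = int(5.0 * cobra.sample_rate / cobra.frame_length)
    recorder.start()
    try:
        # A lazy generator: stop reading audio as soon as a follow-up is detected.
        probs = (cobra.process(recorder.read()) for _ in range(window_frames))
        print("follow-up detected" if follow_up_started(probs) else "back to wake word")
    finally:
        recorder.stop()
        recorder.delete()
        cobra.delete()
```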