We are excited to announce the release of Porcupine version 1.8, which delivers major improvements in accuracy and runtime efficiency. It is the culmination of intensive R&D efforts by our research and engineering teams at Picovoice.

Improved Accuracy and Runtime Efficiency

The standard wake word model in the Porcupine 1.8 release is 1.6 times more accurate and 1.7 times faster than the standard model in the previous release, 1.7. This improvement is even more significant considering that the 1.7 release was already 3.6 times more accurate and 3.8 times faster than commercial alternatives on the market.

Figure 1 compares the miss rate of the current and previous versions of the Picovoice wake word engine with alternative solutions. Figure 2 compares the runtime efficiency (CPU usage on a Raspberry Pi 3) of these engines. The benchmark, including its code and data, is open-sourced to enable independent benchmarking; the details are available in the Picovoice docs.
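For readers who want to reproduce numbers of this kind, here is a minimal sketch of the two quantities a wake word benchmark typically reports: the miss rate and the false alarm rate. The function names and the example counts are hypothetical and are not taken from the Picovoice benchmark code or results.

```python
def miss_rate(num_missed: int, num_utterances: int) -> float:
    """Fraction of wake word utterances that the engine failed to detect."""
    if num_utterances <= 0:
        raise ValueError("num_utterances must be positive")
    return num_missed / num_utterances


def false_alarms_per_hour(num_false_alarms: int, audio_hours: float) -> float:
    """Number of spurious detections per hour of audio without the wake word."""
    if audio_hours <= 0:
        raise ValueError("audio_hours must be positive")
    return num_false_alarms / audio_hours


# Hypothetical counts, for illustration only.
example_miss_rate = miss_rate(num_missed=6, num_utterances=100)
example_false_alarm_rate = false_alarms_per_hour(num_false_alarms=5, audio_hours=50.0)

print(f"miss rate: {example_miss_rate:.1%}")
print(f"false alarms per hour: {example_false_alarm_rate:.2f}")
```

Comparing engines fairly usually means tuning each one to the same false alarm rate first and then comparing miss rates at that operating point.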

Compressed and Uber Models

The standard model in v1.8 is 1 MB in size and consumes 3.8% of CPU cycles on a single core of a Raspberry Pi 3 (ARM Cortex-A53). This is the recommended trim for the majority of our customers. The standard model can run on a variety of platforms such as microcontrollers (e.g. ARM Cortex-M7), embedded microprocessors (e.g. all variants of Raspberry Pi), Android, iOS, and even modern web browsers.
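To put the CPU figure in more concrete terms, the sketch below converts a single-core CPU usage percentage into CPU time spent per second of real-time audio. It assumes the engine processes audio continuously in real time; the helper name is hypothetical.

```python
def cpu_seconds_per_audio_second(cpu_usage_percent: float) -> float:
    """CPU time (in seconds, on one core) spent per second of real-time audio."""
    if not 0.0 <= cpu_usage_percent <= 100.0:
        raise ValueError("cpu_usage_percent must be between 0 and 100")
    return cpu_usage_percent / 100.0


# 3.8% is the single-core figure quoted above for the standard model.
standard_cost = cpu_seconds_per_audio_second(3.8)
print(f"about {standard_cost * 1000:.0f} ms of CPU time per second of audio")
```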

For low-power applications (e.g. wearables, hearables) with stringent power consumption requirements, we provide a compressed model. The compressed model is 4 times smaller than the standard model, while delivering 90+% accuracy (at 1 false alarm per 10 hours) in noisy environments. The model is well suited to run on power-efficient ARM Cortex-M4 microcontrollers that have a lower clock rate (typically 48 MHz) in their power-efficient mode.
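As a back-of-the-envelope check on the footprint, the sketch below derives the approximate size of the compressed model from the figures quoted in this post. It assumes that "4 times smaller" means one quarter of the standard model's size and that 1 MB is 1024 KB; the variable names are illustrative.

```python
STANDARD_MODEL_SIZE_KB = 1024  # 1 MB standard model, assuming 1 MB = 1024 KB
COMPRESSION_FACTOR = 4         # "4 times smaller", read as one quarter the size

compressed_model_size_kb = STANDARD_MODEL_SIZE_KB / COMPRESSION_FACTOR
print(f"compressed model: roughly {compressed_model_size_kb:.0f} KB")

# An accuracy above 90% corresponds to a miss rate below 10%
# at the stated operating point of 1 false alarm per 10 hours.
max_miss_rate = 1.0 - 0.90
print(f"miss rate below {max_miss_rate:.0%}")
```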

For applications requiring the highest accuracy, we provide an uber model, which is 1.6 times more accurate than the standard model. The model is well suited for use on ARM Cortex-A microprocessors or x86_64 processors (e.g. desktops and servers). Figure 3 compares the accuracy of different trims, while Figure 4 compares their CPU usage on a Raspberry Pi 3 (ARM Cortex-A53).

Autonomous Model Training Platform: Picovoice Console

Building speech models was previously a cumbersome, time-consuming, and expensive undertaking. The process often involved large-scale data gathering, bespoke model training, and testing. It took many weeks and came at significant cost because it involved humans in the loop.

Picovoice has revolutionized speech model training by inventing a proprietary transfer learning technology that enables zero-shot learning. This eliminates the need for human coordination and oversight, which in turn speeds up the process by orders of magnitude, shortening it to a few hours.

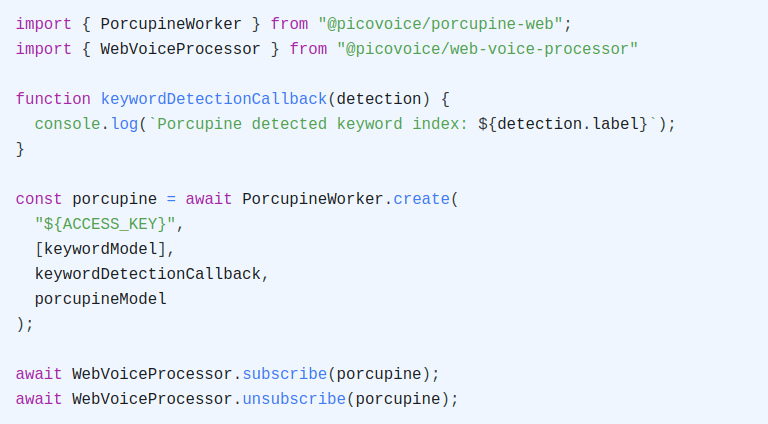

The power of the Picovoice transfer learning technology is combined with our scalable web platform: Picovoice Console. With the Console, you can simply type in the wake phrase, click a button to train a model for it, and have a downloadable result instantly. Before training a model, you can consult our tips for choosing a wake word. The trained model, as evidenced by our open-source benchmark, provides significantly higher accuracy than alternatives that require manual labor.