Five years ago, in his 2018 testimony before Congress, Mark Zuckerberg said AI would take a primary role in automatically detecting hate speech on Facebook within five to ten years. Last year, Meta published a post on the challenges of detecting hate speech, because most hate speech on its platforms was not in text but in other unstructured formats. Like Meta, most enterprises fail to use their unstructured data, including audio and video, to achieve their goals. That’s why such data is called dark data.

Voice content moderation has become a hot topic for social media and online gaming platforms, but it has been on call centers’ radar for decades. Customer service teams are mandated to provide high-quality, respectful service that complies with regulations while protecting employees from harassment. Yet many have relied on customer complaints and human content moderators to address this problem. Now, advances in AI allow all enterprises to process massive amounts of data in real time, regardless of when they started working on the problem. However, not every enterprise has machine learning experts the way Meta does, so enterprises should learn the nuances before investing in AI models to moderate their content.

What is Content Moderation?

Content moderation aims to detect sensitive content such as hate speech, threats, violence, profanity, gambling, adultery, terrorism, and weapons, as well as business-sensitive topics such as company financials, competitors, and customer information.

A lack of content moderation can significantly hurt user engagement and retention, causing direct and indirect revenue losses. No advertiser wants its ad shown in a video about terrorism, no customer wants to be treated terribly by agents, and no parent would pay for a game where their kid gets bullied.

How do you choose an AI model for content moderation?

1. Business Priorities

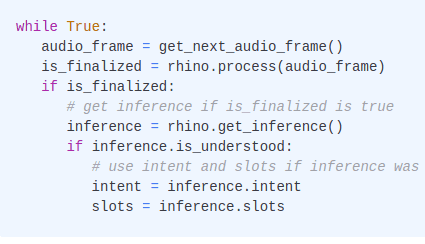

There are many out-of-the-box content moderation solutions, including offerings from Big Tech such as Microsoft Azure AI Content Moderator and Amazon Rekognition. Enterprises can buy off-the-shelf models or build their own solutions using voice AI models such as Porcupine Wake Word, Cheetah Speech-to-Text, Leopard Speech-to-Text, Rhino Speech-to-Intent, emotion detection, NLP, and large language models.

First, enterprises should define their needs and priorities, then choose the technology that addresses them. Every use case and business may have different requirements.

Some applications, such as broadcasting, cannot afford unpredictable delays. In radio and television, a “broadcast delay,” “seven-second delay,” or “profanity delay” is an intentional delay that prevents unacceptable content from being broadcast. A cloud-dependent content moderation API can cause unpleasant disruptions because of network latency. While latency is critical for real-time conversations and applications, it matters little when scanning an existing archive.

Define priorities before looking for alternatives.

2. Customization

Content moderation, as the name suggests, moderates the content. Each enterprise has its own content using specific abbreviations, terms, and proper nouns, such as brand and product names. These may not exist in generalist models. Thus, a content moderation tool should adapt the unique jargon and terms. When your content moderation tool doesn’t know your competitor’s name, how can you know whether your agent is not violating antitrust laws while making comments about the competition?

Choose a content moderation tool that is customizable to capture everything in your playbook.

3. Coverage

The cost of processing data in the cloud is not crucial for use cases with low data volumes; those enterprises can afford to cover and moderate 100% of their content. However, most enterprises that need content moderation, such as social media platforms, games, and call centers, deal with massive amounts of data. Last year, more than 500 hours of video were uploaded to YouTube every minute. Even before any audio is processed, the cost of sending that much video to a third-party remote server would be a deal-breaker. Hence, enterprises with large volumes can cover only a certain percentage of their content when they use a third-party, cloud-dependent content moderation solution.

On the other hand, on-device speech processing can be a game changer for these enterprises: it is cost-effective at scale and eliminates cloud-related costs by default, allowing enterprises to cover all of their content. Plus, on-device processing mitigates third-party data privacy, security, and compliance risks, as no data leaves the premises.
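The coverage argument comes down to simple arithmetic. The Python sketch below converts YouTube’s upload rate into daily hours and estimates what fraction of that content a fixed budget could send through a per-hour cloud service. The price and budget are made-up placeholders, not quotes from any vendor, and the model deliberately ignores on-device compute and hardware costs, which are not zero.

```python
MINUTES_PER_DAY = 60 * 24


def hours_per_day(upload_hours_per_minute: float) -> float:
    """Convert an upload rate (hours of content per minute) into hours per day."""
    return upload_hours_per_minute * MINUTES_PER_DAY


def coverage(daily_budget: float, price_per_hour: float, daily_hours: float) -> float:
    """Fraction of daily content a fixed budget can moderate, capped at 100%.

    A price of zero models only the absence of per-hour cloud fees; it does
    not account for on-device compute or hardware costs.
    """
    if price_per_hour <= 0:
        return 1.0
    return min(1.0, daily_budget / (price_per_hour * daily_hours))


if __name__ == "__main__":
    daily = hours_per_day(500)  # "more than 500 hours uploaded every minute"
    print(f"{daily:,.0f} hours of video per day")  # 720,000 hours of video per day
    # Hypothetical: $0.50 per hour of audio and $36,000 per day to spend.
    share = coverage(daily_budget=36_000, price_per_hour=0.50, daily_hours=daily)
    print(f"cloud coverage: {share:.0%}")  # cloud coverage: 10%
```

At those placeholder numbers, a per-hour cloud service would reach about a tenth of a single day’s uploads, and covering everything would take ten times the budget. Since 500 hours per minute is a lower bound, the real shortfall would be larger.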

If you’re ready to build your own voice content moderation tool using Picovoice, start building. If you need help choosing the right tools, consult our experts!
