Maintaining the accuracy of Speech-to-Text (STT) in custom domains is a challenge. Automatic Speech Recognition (ASR) engines that achieve a low Word Error Rate (WER) on general speech often struggle in specialized contexts like medical transcription, sales coaching, and customer service. Bringing the WER back down requires engineering effort, and the investment, depending on the strategy, ranges from hours to months of R&D. Below is a pragmatic strategy that starts with the lowest-cost tasks.
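WER is the metric every step below tries to lower. It is the word-level edit distance between a reference transcript and the engine's output, divided by the number of reference words: WER = (S + D + I) / N, where S, D, and I count substitutions, deletions, and insertions. A minimal Python sketch (the function name and example sentences are illustrative and not part of any SDK):

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Compute WER = (S + D + I) / N using word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dp[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution (or match when cost == 0)
            )
    return dp[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("the patient has tachycardia", "the patient has tacky cardia")` returns `0.5`: one substitution plus one insertion over four reference words, the kind of error an out-of-vocabulary medical term produces.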

Add Custom Words

Every Speech-to-Text engine understands a large but limited set of words known as the Lexicon. A word not in the Lexicon is called Out-of-Vocabulary (OOV). When a speaker utters an OOV word, the STT engine transcribes it as one or more similar-sounding words from its Lexicon, increasing WER.

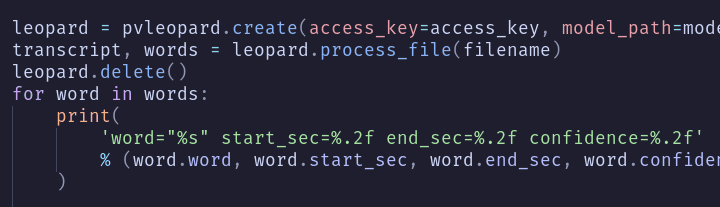

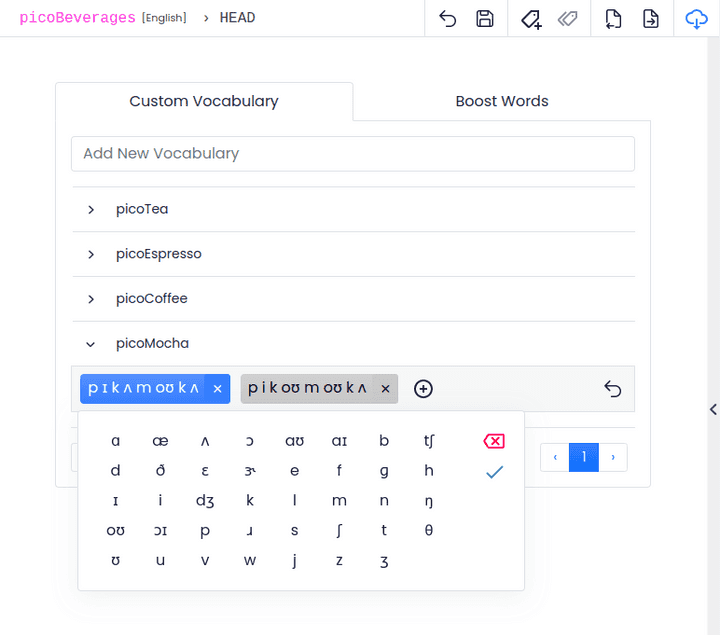
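To find which words in your domain are likely OOV, you can compare a sample of domain text against the engine's Lexicon. The sketch below assumes you can export that Lexicon as a set of lowercase words, which is typically only possible with an in-house engine; the lexicon and corpus in the usage example are hypothetical.

```python
import re
from collections import Counter


def find_oov_words(corpus: str, lexicon: set[str]) -> Counter:
    """Count the words in `corpus` that are missing from `lexicon`.

    Assumes `lexicon` holds lowercase words (hypothetical export format).
    """
    # Lowercase and treat runs of letters, digits, and apostrophes as words.
    words = re.findall(r"[a-z0-9']+", corpus.lower())
    return Counter(w for w in words if w not in lexicon)
```

With the toy lexicon `{"the", "patient", "was", "given", "and", "were"}`, the corpus `"The patient was given Eliquis. Eliquis and metoprolol were given."` yields `eliquis` twice and `metoprolol` once. Sorting by count (for example with `Counter.most_common`) suggests which custom words to add first.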

If you have an in-house STT engine, add OOVs to your Language Model (LM) pipeline. If you use a third-party vendor, ask whether they offer an API for adding OOVs. For example, developers can add custom words (OOVs) to the Picovoice Leopard Speech-to-Text and Cheetah Streaming Speech-to-Text engines via the Picovoice Console.

Boost Phrases

Some phrases are frequent in a given specialized domain but rare otherwise. For example, a keynote speaker at Interspeech uses terms like Word Error Rate, Acoustic Model, Language Model, and End-to-End Speech Recognition, but these word sequences are not frequent in daily conversations.
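One way to surface such phrases is to compare how often each n-gram appears in domain text versus general text. The sketch below scores n-grams by the log-ratio of their add-one-smoothed relative frequencies. This is a generic heuristic chosen for illustration, not a Picovoice feature, and both corpora are placeholders.

```python
import math
import re
from collections import Counter


def ngrams(text: str, n: int) -> Counter:
    """Count word n-grams, never letting an n-gram span two sentences."""
    counts = Counter()
    for sentence in re.split(r"[.!?]", text.lower()):
        words = re.findall(r"[a-z0-9']+", sentence)
        counts.update(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return counts


def boost_candidates(domain_text: str, general_text: str, n: int = 2,
                     top_k: int = 5) -> list[tuple[str, float]]:
    """Rank domain n-grams by how much more frequent they are than in general text."""
    domain = ngrams(domain_text, n)
    general = ngrams(general_text, n)
    d_total = sum(domain.values())
    g_total = sum(general.values())
    vocab = len(set(domain) | set(general))

    scores = {}
    for phrase, count in domain.items():
        # Add-one smoothing keeps phrases unseen in general text from dividing by zero.
        p_domain = (count + 1) / (d_total + vocab)
        p_general = (general[phrase] + 1) / (g_total + vocab)
        scores[phrase] = math.log(p_domain / p_general)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
```

On a toy domain text such as `"word error rate is high. the word error rate dropped. language model helps."` compared with everyday sentences, the top-ranked bigrams are `word error` and `error rate`, the kind of phrases worth boosting. Real use calls for much larger corpora than this example.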

You can provide these specialized terms (phrases) to the ASR model so that it pays closer attention to them. If you are building your LM in-house, make sure these terms are frequent in your Text Corpus. If you are using a third-party vendor, ask about their API for Keyword Boosting. For example, developers can boost keywords for the Picovoice Leopard Speech-to-Text and Cheetah Streaming Speech-to-Text engines via the Picovoice Console.
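Engines typically apply keyword boosting inside the decoder, and the details differ between vendors. To illustrate the idea only, the sketch below applies a simplified version after decoding: it re-ranks an n-best list of (hypothesis, log-score) pairs by adding a fixed bonus for every match of a boosted phrase. The n-best list, scores, and `boost` value are hypothetical, and this is not how any particular engine implements boosting.

```python
def count_phrase(tokens: list[str], phrase: list[str]) -> int:
    """Count (possibly overlapping) occurrences of `phrase` as a contiguous token run."""
    n = len(phrase)
    return sum(1 for i in range(len(tokens) - n + 1) if tokens[i:i + n] == phrase)


def rescore_with_boost(
    nbest: list[tuple[str, float]],
    boosted_phrases: list[str],
    boost: float = 2.0,
) -> list[tuple[str, float]]:
    """Re-rank n-best hypotheses, adding `boost` to the log-score per boosted-phrase match."""
    # Tokenize boosted phrases once; skip empty strings, which would match everywhere.
    phrases = [p.lower().split() for p in boosted_phrases if p.strip()]

    def boosted_score(item: tuple[str, float]) -> float:
        text, log_score = item
        tokens = text.lower().split()
        hits = sum(count_phrase(tokens, p) for p in phrases)
        return log_score + boost * hits

    # Highest adjusted score first; the original (hypothesis, score) pairs are returned unchanged.
    return sorted(nbest, key=boosted_score, reverse=True)
```

Given the hypothetical n-best list `[("the word era rate is low", -4.1), ("the word error rate is low", -4.3)]` and the boosted phrase `"word error rate"`, the second hypothesis moves to the top. With `boost=0.0` the original ranking is kept. Too large a bonus causes false positives, which is why boosting is usually reserved for a short list of high-value terms.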

Language Model Adaptation

If adding OOVs and boosting keywords doesn't suffice, adapting (training) the Language Model (LM) is the next logical step. It requires a large text corpus (think millions of words) representing the expected utterances. LM Adaptation is feasible if you have an in-house solution or a strong partnership with your ASR vendor.
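One common way to adapt an n-gram LM is linear interpolation of a general-domain model with a model trained on the in-domain corpus: P_adapted(w | h) = λ · P_domain(w | h) + (1 - λ) · P_general(w | h), where h is the preceding word and λ is usually tuned on held-out in-domain text. The toy sketch below uses unsmoothed bigram counts on a few hypothetical sentences; a production LM would use proper smoothing (e.g., Kneser-Ney) and far more data.

```python
from collections import Counter, defaultdict
from typing import Callable

BigramLM = Callable[[str, str], float]  # prob(word, history)


def train_bigram(sentences: list[str]) -> BigramLM:
    """Maximum-likelihood bigram model: P(w | h) = count(h, w) / count(h)."""
    pair_counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for h, w in zip(tokens, tokens[1:]):
            pair_counts[h][w] += 1

    def prob(w: str, h: str) -> float:
        counts = pair_counts.get(h)  # .get avoids inserting unseen histories
        if not counts:
            return 0.0  # unseen history; real LMs would back off or smooth here
        return counts[w] / sum(counts.values())

    return prob


def interpolate(p_domain: BigramLM, p_general: BigramLM, lam: float) -> BigramLM:
    """Linearly interpolated LM: lam * P_domain + (1 - lam) * P_general."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be in [0, 1]")
    return lambda w, h: lam * p_domain(w, h) + (1 - lam) * p_general(w, h)
```

With `domain = ["the word error rate dropped", "word error rate is key"]`, `general = ["the error was small", "the rate was high"]`, and `lam=0.3`, the adapted model gives P(error | word) = 0.3, whereas the general model alone assigns it zero.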

Picovoice doesn't provide an automated facility for adapting or training Language Models. The decision is intentional: the steps involved in adapting an LM are complex, and a linguist needs to examine the properties of the adaptation corpus.

Acoustic Model Adaptation

Acoustic Model Adaptation is the last step. Done right, it is expensive but, thankfully, rarely required. Only a handful of scenarios call for it; ASR for children's speech is one example.

The self-service Picovoice Console is freely available to any developer for improving the accuracy of Leopard Speech-to-Text and Cheetah Streaming Speech-to-Text without writing a single line of code. If you have a domain-specific use case with unique jargon consisting of thousands of words, such as medical dictation, or need acoustic model adaptation, engage with Picovoice Consulting.

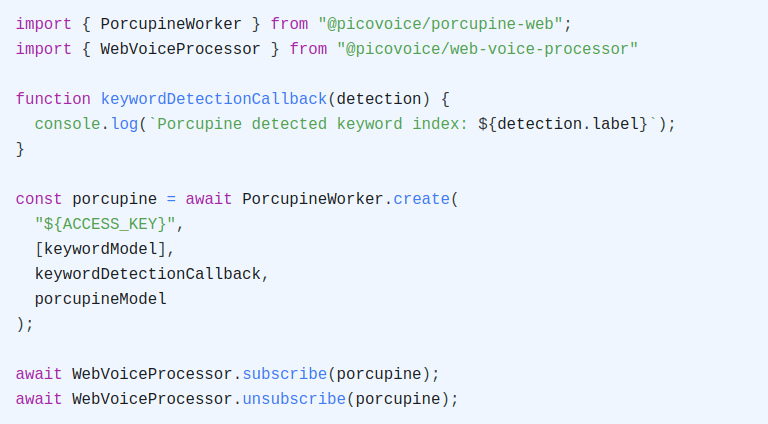
