Generative AI for Audio has made significant progress in recent years, enabling the creation of high-quality audio content. Advances from other areas of AI, such as large language models and text-to-image generation, have contributed to this progress.

Applications of Generative AI for Audio

Generative AI for Audio has numerous applications, such as music composition, remixing, accompaniment generation, and generic or personalized audio content creation for advertising and education.

Artists use AI-generated audio in various creative ways, such as creating new compositions, remixing existing songs, and generating accompaniments for live performances. Generative AI models can also provide music recommendations for personalized experiences.

Text-to-Speech applications include voiceovers for movies, audiobooks, and customer service agents. Enterprises can create custom voices for virtual assistants or targeted advertising campaigns.

How does Generative AI for Audio work?

Text-to-Audio models, which produce audio content from textual prompts, employ techniques such as Tokenization, Quantization, and Vectorization to represent and manipulate audio data.

Tokenization refers to breaking data down into tokens, i.e., discrete units that machine learning algorithms can process and analyze. Tokens can capture various aspects of the audio signal, such as pitch, timbre, and rhythm.
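In many audio pipelines, the waveform is first cut into short, fixed-length frames, each of which can later be mapped to one or more tokens. The minimal pure-Python sketch below shows only that framing step. The 16 kHz sample rate, the 20 ms frame length, and the `frame_signal` helper are illustrative assumptions, not part of any particular model.

```python
import math


def frame_signal(samples, frame_size, hop_size):
    """Split a waveform into fixed-size frames taken every `hop_size` samples.

    Trailing samples that do not fill a complete frame are dropped.
    """
    if frame_size <= 0 or hop_size <= 0:
        raise ValueError("frame_size and hop_size must be positive")
    return [
        samples[start:start + frame_size]
        for start in range(0, len(samples) - frame_size + 1, hop_size)
    ]


# Hypothetical input: 1 second of a 440 Hz tone sampled at 16 kHz.
sample_rate = 16_000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]

# 20 ms frames (320 samples) with no overlap -> 50 frames per second.
frames = frame_signal(tone, frame_size=320, hop_size=320)
print(len(frames), len(frames[0]))  # 50 320
```

Setting `hop_size` smaller than `frame_size` produces overlapping frames, a common choice when frames are later turned into spectral features.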

Quantization represents continuous audio signals as discrete values, so audio can be generated with techniques similar to those used for Large Language Models, i.e., by predicting one discrete token after another.
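One classic way to quantize audio is μ-law companding to 256 levels, the approach WaveNet used to turn each raw sample into one of 256 integer tokens that a network can predict much like words. The sketch below assumes samples are floats in [-1, 1]. Modern Text-to-Audio models more often quantize learned features with a neural codec, so treat this as an illustration of the idea rather than how any specific product works.

```python
import math


def mu_law_encode(x, mu=255):
    """Map a sample in [-1, 1] to an integer token in [0, mu]."""
    x = max(-1.0, min(1.0, x))  # clip to the valid range
    # Logarithmic companding: finer resolution for quiet samples.
    compressed = math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)
    # Shift from [-1, 1] to [0, mu] and round to the nearest integer level.
    return int(round((compressed + 1) / 2 * mu))


def mu_law_decode(token, mu=255):
    """Map an integer token in [0, mu] back to an approximate sample in [-1, 1]."""
    compressed = 2 * token / mu - 1
    return math.copysign(math.expm1(abs(compressed) * math.log1p(mu)) / mu, compressed)


samples = [-0.9, -0.1, 0.0, 0.3, 0.75]
tokens = [mu_law_encode(s) for s in samples]      # discrete vocabulary of 256 ids
restored = [mu_law_decode(t) for t in tokens]     # lossy reconstruction
print(tokens)
print([round(r, 3) for r in restored])
```

The round trip is lossy, but the error stays small relative to the signal, which is what makes a 256-token vocabulary workable.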

Vectorization converts audio data into vectors in a high-dimensional space, where the distances between vectors capture the relationships between different audio signals. Vectorization enables machine learning algorithms to identify patterns and generate new audio content.
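Production models learn their vectors (embeddings) with neural networks. As a hand-crafted stand-in, the sketch below turns a short frame into a vector of DFT magnitudes and compares vectors with cosine similarity. Two tones of the same pitch land close together even though their raw samples differ, while a tone of a different pitch does not. The helper names, sample rate, and frame size are assumptions chosen for illustration.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Naive DFT: magnitudes of the first len(frame) // 2 + 1 frequency bins."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def tone(freq, phase=0.0, sample_rate=8000, n=256):
    """Hypothetical test signal: n samples of a sine wave."""
    return [math.sin(2 * math.pi * freq * t / sample_rate + phase) for t in range(n)]


# Same pitch, different phase: the samples differ, the spectra nearly match.
a = magnitude_spectrum(tone(500))
b = magnitude_spectrum(tone(500, phase=1.0))
c = magnitude_spectrum(tone(1500))
print(round(cosine_similarity(a, b), 3))  # close to 1.0
print(round(cosine_similarity(a, c), 3))  # close to 0.0
```

The naive DFT is quadratic in the frame length and only suitable for tiny examples; real code would use an FFT or, more likely, a learned encoder.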

In short, Generative Audio AI models generate new audio content by converting text into sequences of audio tokens, which are then decoded back into a waveform.
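Vector quantization ties these techniques together: each frame's vector is replaced by the index of its nearest entry in a codebook, producing a token sequence, and decoding looks the indices back up. The sketch uses a hypothetical, hand-written 2-D codebook. Neural audio codecs such as SoundStream and EnCodec learn their codebooks and use a residual (multi-stage) variant of this idea, so this is a simplified single-codebook illustration.

```python
import math


def nearest_code(vector, codebook):
    """Index of the codebook entry closest (Euclidean distance) to `vector`."""
    return min(range(len(codebook)), key=lambda i: math.dist(vector, codebook[i]))


def encode(vectors, codebook):
    """Frame vectors -> sequence of discrete token ids."""
    return [nearest_code(v, codebook) for v in vectors]


def decode(tokens, codebook):
    """Token ids -> approximate frame vectors, by codebook lookup."""
    return [codebook[t] for t in tokens]


# Hypothetical 2-D codebook with 4 entries (real codecs learn much larger ones).
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
frame_vectors = [(0.1, 0.2), (0.9, 0.1), (0.8, 0.9), (0.2, 0.7)]

tokens = encode(frame_vectors, codebook)
print(tokens)                    # [0, 1, 3, 2]
print(decode(tokens, codebook))  # [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
```

A generative model only has to predict the token ids; the codebook (and, in a real codec, a decoder network) turns them back into audio.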

Generative AI for Audio Powered by Deep Learning

Deep Learning architectures, such as Transformers, GANs (Generative Adversarial Networks), and VAEs (Variational Autoencoders), have been used effectively to generate realistic audio content by learning the complex relationships between different audio signals.

What’s an Autoregressive Transformer?

Autoregressive Transformers have emerged as a popular choice for audio generation because they generate audio one token at a time, conditioning each new token on the previous tokens in the sequence. This lets the model capture how audio evolves over time, which is essential for generating realistic audio content.
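The autoregressive loop itself is simple: ask the model for a distribution over the next token, sample from it, append the result, and repeat. In the sketch below, a hypothetical lookup table (`next_token_probs`) stands in for a trained Transformer and only looks at the last token, whereas a real Transformer attends to the whole prefix and to the text prompt.

```python
import random

# Hypothetical stand-in for a trained Transformer over a 4-token vocabulary.
VOCAB_SIZE = 4
TRANSITIONS = {
    0: [0.1, 0.6, 0.2, 0.1],
    1: [0.1, 0.1, 0.7, 0.1],
    2: [0.1, 0.1, 0.1, 0.7],
    3: [0.7, 0.1, 0.1, 0.1],
}


def next_token_probs(prefix):
    """Probability of each next token given the tokens so far (toy: last token only)."""
    return TRANSITIONS[prefix[-1]]


def generate(prompt_tokens, num_new_tokens, seed=0):
    """Autoregressive decoding: sample one token, append it, repeat."""
    rng = random.Random(seed)
    tokens = list(prompt_tokens)
    for _ in range(num_new_tokens):
        probs = next_token_probs(tokens)
        next_tok = rng.choices(range(VOCAB_SIZE), weights=probs, k=1)[0]
        tokens.append(next_tok)  # the new token conditions every later step
    return tokens


print(generate([0], num_new_tokens=8))
```

Because each step depends on everything generated so far, decoding is sequential, which is one reason long audio sequences are slow to generate autoregressively.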

What’s a Generative Adversarial Network (GAN)?

Generative Adversarial Networks (GANs) consist of two components: a generator network, which generates new audio content, and a discriminator network, which tries to tell generated content apart from real recordings. By training these two networks against each other, GANs learn to generate high-quality audio content that can be hard to distinguish from real audio recordings.
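The adversarial training described above is commonly written as the minimax objective from the original GAN formulation, where x is a real recording, z is random noise, G(z) is generated audio, and D(·) is the discriminator's estimated probability that its input is real. Audio GANs in practice often train with variants of this loss, so read it as the core idea rather than a recipe.

```latex
\min_G \max_D \;
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is trained to maximize this value (scoring real audio near 1 and generated audio near 0), while the generator is trained to minimize it by producing audio the discriminator scores as real.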

What’s a Variational Autoencoder (VAE)?

Variational Autoencoders (VAEs) are another promising approach for generating audio content. VAEs consist of an encoder network, which maps input audio data into a latent space, and a decoder network, which maps points in the latent space back into the audio domain. The encoder and decoder are trained together; once trained, new audio content can be generated by sampling a point from the latent space and passing it through the decoder.
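A standard way to train a VAE combines a reconstruction error with a KL-divergence term that keeps the latent space close to a standard normal distribution, and it samples latents with the reparameterization trick so gradients can flow through the sampling step. The sketch below shows only those two pieces in pure Python for a diagonal Gaussian latent. The encoder and decoder networks are omitted, and the `mu`/`log_var` values are hypothetical encoder outputs.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * sum(1 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


def vae_loss(reconstruction_error, mu, log_var):
    """Negative ELBO: reconstruction term plus KL regularizer."""
    return reconstruction_error + kl_to_standard_normal(mu, log_var)


rng = random.Random(0)
mu, log_var = [0.5, -0.2], [0.0, -1.0]  # hypothetical encoder outputs
z = reparameterize(mu, log_var, rng)    # latent sample that would feed the decoder
print(kl_to_standard_normal([1.0], [0.0]))  # 0.5
```

At generation time the encoder is no longer needed: a latent vector is drawn from N(0, I) and passed through the decoder.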

Why is Audio Generation Hard?

As with other AI models, training a Generative Audio model is not hard in itself. What's hard is training an efficient, high-quality Generative AI Model for Audio.

Audio signals are complex and multifaceted.

Audio signals consist of various frequencies and amplitudes that need to be modeled accurately to generate realistic audio. For example, we pronounce “read” and “live” differently depending on the context. Accents and dialects add further nuances, so the same text can map to many valid pronunciations (a one-to-many mapping). Moreover, audio signals are continuous and require more sophisticated mathematical models than those that generate text or images from discrete symbols such as words or pixels.
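A quick calculation shows why modeling a sampled continuous signal directly is demanding: even a short clip becomes a very long sequence. The 16 kHz and 44.1 kHz figures are common audio sample rates; the 50 frames per second codec rate is a hypothetical figure used only for comparison, and `sequence_length` is an illustrative helper.

```python
def sequence_length(duration_s, rate_per_s):
    """Number of steps a model must handle for `duration_s` seconds at `rate_per_s`."""
    return int(duration_s * rate_per_s)


duration = 10  # seconds of audio
print(sequence_length(duration, 16_000))  # 160000 raw samples at 16 kHz
print(sequence_length(duration, 44_100))  # 441000 raw samples at 44.1 kHz
print(sequence_length(duration, 50))      # 500 frames at a hypothetical 50 frames/s
```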

High-quality audio data is scarce.

Training high-quality AI models requires a large amount of high-quality audio data. Finding text or images in large quantities on the internet is much easier than obtaining high-quality audio data.

What’s next?

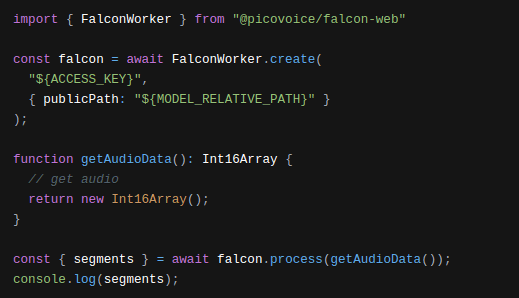

Picovoice has two offerings for those interested in Generative AI Models for Audio. Orca Text-to-Speech is an off-the-shelf voice generator that processes data locally on-device. Picovoice Consulting builds custom Generative AI Models for enterprise customers, whether they need custom voices or other audio.