TLDR: 2025 Highlights

- 5x revenue growth with 1.5x increase in developer user base

- 12 new language releases across Cheetah Streaming Speech-to-Text and Orca Streaming Text-to-Speech

- Cheetah Fast launched for ultra-low latency conversational AI applications

- Cobra v2.1 delivers superior accuracy in noisy environments with the same low footprint

- .NET support expanded for Cobra VAD and Orca TTS

What's New in 2025?

2025 was another transformative year at Picovoice. We expanded our multilingual capabilities across core engines, introduced breakthrough performance optimizations, and grew revenue by 5x while maintaining our commitment to best-in-class on-device voice AI. The user base grew by 2.5x as more developers chose privacy-first, low-latency on-device voice AI and LLM solutions. Here are the 2025 highlights from Picovoice:

Multilingual Voice AI Expansion

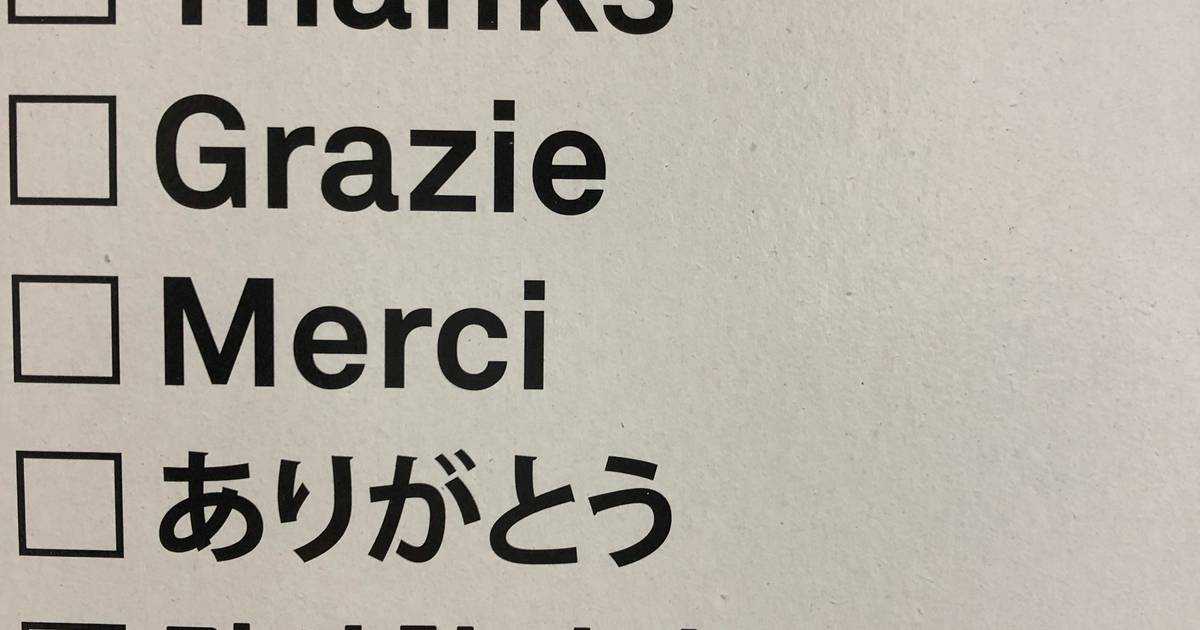

In Q1 2025, Picovoice dramatically expanded language support across two core engines, enabling developers worldwide to build private, on-device voice experiences in their native languages.

Cheetah Streaming Speech-to-Text: Five New Languages

Cheetah Streaming Speech-to-Text added support for French (Français), German (Deutsch), Italian (Italiano), Portuguese (Português), and Spanish (Español). This expansion enables real-time transcription with zero network latency for millions more users, all while maintaining Cheetah's privacy-first, cost-effective approach.

Orca Text-to-Speech: Seven New Languages

Orca Streaming Text-to-Speech expanded even further, adding French (Français), German (Deutsch), Italian (Italiano), Japanese (日本語), Korean (한국어), Portuguese (Português), and Spanish (Español). Developers can now create natural-sounding voice experiences across 8 languages with consistent quality and zero cloud dependency.

Looking for additional languages? Picovoice Consulting can train custom models for your application when you become an Enterprise Plan customer.

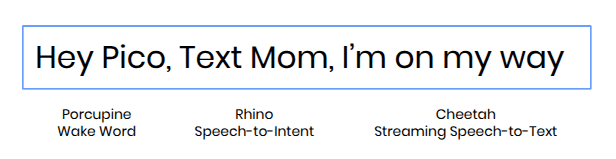

Cheetah Fast: Ultra-Low Latency Speech-to-Text

In October 2025, Picovoice introduced Cheetah Fast, a new model optimized for ultra-low latency real-time transcription. Cheetah Fast is purpose-built for conversational AI applications where speed is critical.

While accuracy decreases slightly (~1 bps) compared to the standard Cheetah model, Cheetah Fast still outperforms Google Cloud Speech-to-Text in accuracy while delivering significantly faster processing speeds. Learn more about how speech-to-text vendors measure latency and why many cloud providers' claims can be misleading.

Figure: Cheetah Fast outperforms Google Cloud Speech-to-Text Streaming and Amazon Transcribe Streaming.

Cobra v2.1: Superior Voice Activity Detection

In September 2025, Picovoice released Cobra VAD v2.1 with improved accuracy, particularly in noisy environments. The new version maintains the same low footprint while delivering superior performance that helped establish Cobra as the best voice activity detection engine in 2025.

Cobra v2.1's improvements make it ideal for real-world applications where background noise and challenging acoustic conditions are the norm rather than the exception.

Figure: Cobra VAD significantly outperforms both Silero and WebRTC VAD at all thresholds.
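
To make "at all thresholds" concrete: a VAD engine such as Cobra outputs a voice probability for each audio frame, and a threshold turns that probability into a speech or non-speech decision. The sketch below is a generic illustration of how detection rates can be computed at a given threshold. It is not Picovoice's benchmark code, and the function name `rates_at_threshold` and the sample data are hypothetical.

```python
def rates_at_threshold(probabilities, labels, threshold):
    """Return (true_positive_rate, false_positive_rate) for one threshold.

    probabilities: per-frame voice probabilities in [0, 1]
    labels: per-frame ground truth, True where the frame contains speech
    """
    tp = fp = positives = negatives = 0
    for prob, is_speech in zip(probabilities, labels):
        detected = prob >= threshold
        if is_speech:
            positives += 1
            tp += detected  # counts speech frames that were detected
        else:
            negatives += 1
            fp += detected  # counts non-speech frames flagged as speech
    tpr = tp / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return tpr, fpr


# Hypothetical per-frame outputs and labels, for illustration only.
probs = [0.9, 0.8, 0.4, 0.3, 0.1, 0.6]
truth = [True, True, True, False, False, False]

for threshold in (0.2, 0.5, 0.7):
    tpr, fpr = rates_at_threshold(probs, truth, threshold)
    print(f"threshold={threshold}: TPR={tpr:.2f}, FPR={fpr:.2f}")
```

Sweeping the threshold from 0 to 1 and plotting the resulting pairs gives a ROC curve. An engine whose curve stays above another's has a higher true positive rate at every false positive rate, which is what outperforming "at all thresholds" means.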

.NET Support Expansion

Following developer demand, Picovoice expanded .NET support in 2025 to two more engines:

- Cobra Voice Activity Detection

- Orca Streaming Text-to-Speech

This enables Windows developers and enterprise teams using the .NET ecosystem to integrate on-device voice AI directly into their applications.

Most Popular Tutorials

- On-device Text-to-Speech in Python: Build privacy-first Python applications using Orca TTS, PyTTSx3, Coqui TTS, and Mimic3 from Mycroft

- Speech Recognition on Raspberry Pi: Deploy accurate STT, wake word, VAD, and more on embedded devices

- Console Tutorial: Custom Wake Word: Train custom wake word models in seconds

- AI Voice Assistant for Android Powered by Local LLM: Build on-device LLM-powered voice assistants for Android apps, leveraging ultra-lightweight wake word detection, streaming speech-to-text, any LLM and streaming text-to-speech without sacrificing performance or draining the battery of mobile devices.

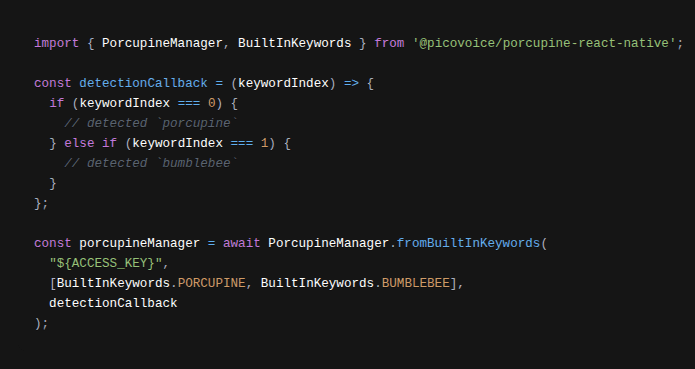

- React Native Speech Recognition: All you need to deploy accurate and lightweight voice AI for React Native apps. Learn how to add wake word, voice commands and STT for mobile devices

- How to Add Custom Wake Words to any Web App: Add multiple wake words across languages to all web browsers without hurting the website load time using Porcupine Wake Word Web SDK.

Most Popular Articles

- Complete Guide to Voice Activity Detection (VAD): Everything you need to know about VAD, from fundamental concepts to production implementation and evaluation metrics, such as Real-time Factor.

- Complete Guide to Wake Word: Everything developers need to know about wake word detection, including why it is easy to build good wake word detection but very difficult to build great wake word detection, and how it compares with other technologies and implementation methods

- Local Text-to-Speech with Cloud Quality: Things we learned while building Orca TTS, an on-device engine that achieves cloud-level voice quality and naturalness while using minimal resources

- Alternatives for Retiring Microsoft Azure AI Speaker Recognition: Options developers have after Azure AI Speaker Recognition was retired on September 30, 2025

- picoLLM Compression: Enabling Sub-4-bit Quantization Without Sacrificing Accuracy: Technical breakdown of picoLLM's advanced quantization techniques

- Whisper Speech-to-Text Alternative for Real-Time Transcription: Performance comparison of Whisper and Cheetah, a streaming STT engine purpose-built for real-time transcription

- Text-to-Speech Latency: Learn how to read vendor claims and minimize TTS latency

The Most Popular SDK

Python remained developers' first choice in 2025. If you haven't started yet, you can build voice AI applications with just a few lines of Python.
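
As a minimal sketch of what that can look like, the example below streams microphone audio into Cheetah Streaming Speech-to-Text. It assumes the `pvcheetah` and `pvrecorder` packages are installed and that you have an AccessKey from Picovoice Console. The helper names `transcribe` and `transcribe_from_microphone` and the `num_frames` parameter are illustrative, not part of the SDK.

```python
def transcribe(engine, frames):
    """Feed audio frames to a streaming STT engine and return the transcript.

    `engine` is any object with Cheetah-style methods: `process(frame)`,
    returning (partial_transcript, is_endpoint), and `flush()`.
    """
    parts = []
    for frame in frames:
        partial, is_endpoint = engine.process(frame)
        parts.append(partial)
        if is_endpoint:
            # The speaker paused: collect any text still buffered.
            parts.append(engine.flush())
    # Collect whatever remains once the audio ends.
    parts.append(engine.flush())
    return "".join(parts)


def transcribe_from_microphone(access_key, num_frames=500):
    """Transcribe a short microphone capture (illustrative helper)."""
    # Imported lazily so `transcribe` can be used without the SDKs installed.
    import pvcheetah
    from pvrecorder import PvRecorder

    cheetah = pvcheetah.create(access_key=access_key)
    recorder = PvRecorder(frame_length=cheetah.frame_length)
    recorder.start()
    try:
        frames = (recorder.read() for _ in range(num_frames))
        return transcribe(cheetah, frames)
    finally:
        # Release the microphone and the engine's native resources.
        recorder.stop()
        recorder.delete()
        cheetah.delete()
```

Calling `transcribe_from_microphone("YOUR_ACCESS_KEY")` would return the text recognized during the capture. Keeping the loop in `transcribe` separate from device setup makes it easy to feed frames from a file or a test double instead of a microphone.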

Looking Ahead to 2026

Picovoice remains a research-driven company, and we're continuing to push our technology forward. In the first half of 2026, every engine will transition to our new architecture, delivering substantial speed improvements. We also have two new products (maybe more) in the pipeline.

Stay updated on new releases and voice AI insights, or dive in and start building for free to test them out firsthand.

Frequently Asked Questions

What languages does Picovoice support?

Picovoice engines support English, French, German, Italian, Portuguese, Spanish, Japanese, and Korean. Language availability varies by engine. Please refer to the Picovoice documentation to learn how to implement multiple languages.

What is Cheetah Fast?

Cheetah Fast is an ultra-low latency speech-to-text model optimized for conversational AI applications. It delivers faster processing than standard Cheetah Streaming Speech-to-Text and cloud APIs with minimal accuracy tradeoff (~1 bps).

What's new in Cobra VAD v2.1?

Cobra VAD v2.1 offers improved voice activity detection accuracy, especially in noisy environments, while maintaining the same low footprint as previous versions.

Does Picovoice support .NET?

Yes, Picovoice added .NET support for Cobra Voice Activity Detection and Orca Streaming Text-to-Speech in 2025, enabling Windows and enterprise .NET developers to integrate on-device voice AI. picoLLM On-device LLM, Porcupine Wake Word, Rhino Speech-to-Intent, Cheetah Streaming Speech-to-Text, and Leopard Speech-to-Text also offer .NET SDKs. Visit picovoice.ai/docs for other SDKs.

How does Picovoice compare to cloud-based voice AI?

Picovoice runs entirely on-device, providing superior privacy, zero network latency, predictable costs, and offline operation while matching or exceeding the accuracy of cloud-based alternatives like Google Cloud, Azure Speech AI, or Amazon Web Services.