Everything you need to know about wake word technology — how it works, why it matters, and how to build reliable on-device voice activation.

Wake word detection has become the standard interface for voice-enabled devices—the invisible foundation that powers modern voice AI. Every time you say "Hey Siri," "Alexa," or "OK Google," a specialized algorithm monitors audio for that specific phrase. Once detected, it signals the system to begin processing your voice commands.

This guide covers everything you need to know about wake word detection: how it works, why it matters, and how to implement it in your applications.

Table of Contents

- What is Wake Word Detection?

- How does Wake Word Detection Work?

- Wake Word vs. Other Technologies

- On-Device vs. Cloud Wake Word Detection

- Choosing the Right Wake Word

- Measuring Wake Word Accuracy

- Implementation Guide

- Platform-Specific Tutorials

- Use Cases and Applications

- Best Practices

- Community Projects & Inspiration

- Frequently Asked Questions

- Getting Started with Porcupine Wake Word

- Conclusion

What is Wake Word Detection?

Wake word detection, also called hotword detection, keyword spotting, or trigger word detection, is a technology that enables software to listen for a specific phrase and transition from passive listening to active listening mode when that phrase is detected. While academic literature typically uses "keyword spotting," the industry standard term is "wake word detection," since this technology "wakes" voice assistants by monitoring for a single word or phrase.

Common wake words include: "Hey Siri," "Alexa," "OK Google," "Hey Mycroft."
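The switch from passive to active listening can be pictured as a small state machine. The following is a minimal sketch, not how any particular assistant implements it: `VoiceFrontEnd`, the `is_wake_word` callback, and the fixed-length command window (`active_frames`) are all hypothetical names chosen for illustration.

```python
from enum import Enum, auto


class Mode(Enum):
    PASSIVE = auto()  # only the wake word detector looks at audio
    ACTIVE = auto()   # audio is collected as a voice command


class VoiceFrontEnd:
    """Toy state machine: passive until the wake word fires, then active."""

    def __init__(self, is_wake_word, active_frames=3):
        self.is_wake_word = is_wake_word    # hypothetical detector callback
        self.active_frames = active_frames  # hypothetical command window length
        self.mode = Mode.PASSIVE
        self._remaining = 0
        self.command_audio = []

    def feed(self, frame):
        """Consume one audio frame and return the mode after processing it."""
        if self.mode is Mode.PASSIVE:
            if self.is_wake_word(frame):
                self.mode = Mode.ACTIVE
                self._remaining = self.active_frames
        else:
            # In active mode, frames are kept for downstream command processing.
            self.command_audio.append(frame)
            self._remaining -= 1
            if self._remaining <= 0:
                self.mode = Mode.PASSIVE
        return self.mode
```

A real product would usually end the active window with voice activity detection or an end-of-command signal rather than a fixed frame count.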

Why Wake Words Matter

Wake words enable hands-free interaction with devices and applications. Instead of pressing a button or touching a screen to activate a voice assistant, users can simply speak a phrase. Once the wake word is detected, the assistant knows to process the voice command that follows. Wake words are essential for:

- Convenience - Multitasking while interacting with devices

- Productivity - Performing tasks faster, such as surgeons using voice-activated robots

- Accessibility - Accommodating users with physical disabilities using voice control

- Safety - Letting drivers control in-car systems without taking their hands off the wheel

- Brand Recognition - Creating memorable brand associations through custom wake words

How does Wake Word Detection Work?

Wake word detection is fundamentally different from automatic speech recognition (ASR). Rather than transcribing everything you say, a wake word engine is a binary classifier designed to recognize specific phrases.

Wake Word Detection Pipeline

- Audio Capture - Continuously streams audio from a microphone

- Feature Extraction - Converts audio into features like MFCCs or mel spectrograms

- Neural Network Processing - A deep learning model analyzes the features

- Detection Decision - Outputs a confidence score to determine if the wake word is present

- Activation - Triggers an action if the wake word is detected
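The five stages above can be sketched as a single loop. This is only an illustration of the control flow under loud assumptions: `log_energy` is a stand-in for real features such as MFCCs, and `toy_score` is a stand-in for a trained neural network, so the sketch reacts to loudness rather than to any actual phrase. All function names are hypothetical.

```python
import math
from typing import Callable, Iterable, List, Sequence

Frame = Sequence[float]


def log_energy(frame: Frame) -> float:
    """Stand-in feature: log of mean squared amplitude (NOT MFCCs)."""
    power = sum(sample * sample for sample in frame) / len(frame)
    return math.log(power + 1e-10)


def toy_score(feature: float) -> float:
    """Stand-in 'model': squashes the feature into a 0..1 confidence."""
    return 1.0 / (1.0 + math.exp(-(feature + 2.0)))


def run_pipeline(
    frames: Iterable[Frame],
    threshold: float,
    on_wake: Callable[[int, float], None],
    extract: Callable[[Frame], float] = log_energy,
    score: Callable[[float], float] = toy_score,
) -> List[int]:
    """Return the indices of frames whose confidence reached the threshold."""
    detections = []
    for index, frame in enumerate(frames):  # 1. audio capture (frame by frame)
        feature = extract(frame)            # 2. feature extraction
        confidence = score(feature)         # 3. model inference
        if confidence >= threshold:         # 4. detection decision
            detections.append(index)
            on_wake(index, confidence)      # 5. activation
    return detections
```

In a real engine, the extractor and scorer would be replaced by the feature front end and the trained model, while the surrounding loop keeps the same shape.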

Modern wake word engines use deep neural networks trained on thousands of examples of the target wake word. The traditional wake word training process involves:

- Recording hundreds of speakers with different accents, speaking speeds, and noise conditions, saying the wake word

- Training the model to differentiate the wake word from similar-sounding phrases

- Optimizing for low false acceptance and false rejection rates, and for the target platform

Picovoice's wake word training approach uses:

- Transfer learning, eliminating the need to gather new data when training each wake phrase

- Pre-trained, general-purpose acoustic models that have been trained on large amounts of voice data across many phonemes, speakers, and environments

When developers type a phrase (e.g., "Hey Jarvis"), Picovoice converts the text into phonemes, then uses transfer learning to adapt the base model to the target phonetic pattern while retaining its ability to reject non-wake-word phrases. Once the adapted model is ready, it is "compiled" for the target platform (e.g., web or mobile) so it runs efficiently. Through this combination of machine learning and software engineering, the entire training process completes in seconds on Picovoice Console.

Wake Word vs. Other Technologies

Understanding the differences between wake word detection and related technologies helps you choose the right solution.

Wake Word vs. Hotword vs. Keyword Spotting

Wake Word Detection, Wake Word Spotting, Wake Word Recognition, Wake-up Word (WuW) Detection, Hotword Detection, Trigger Word Detection, and Keyword Spotting are used interchangeably to refer to the same technology. For example, Picovoice's wake word engine, Porcupine, is used by NASA for several projects. In one project, NASA calls Porcupine Hot Word Recognition, and in another, Wake Word Detection.

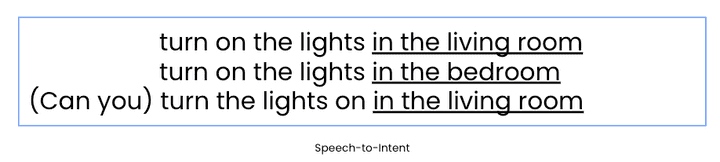

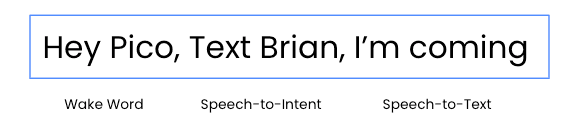

Wake Word vs. Speech-to-Intent

- Wake Word Detection - Handles simple commands

- Speech-to-Intent - Used for complex commands and combinations

Wake Word vs. Speech-to-Intent vs. Speech-to-Text

- Wake Word Detection - Listens to predetermined phrases to activate software (binary: yes/no)

- Speech-to-Intent - Infers intents and intent details (extracts meaning)

- Speech-to-Text - Transcribes speech into text without focusing on any specific word or meaning

Why Not Use ASR for Wake Words?

Some developers use Automatic Speech Recognition (ASR) to detect wake words. This approach has several significant drawbacks:

- Resource Intensive - ASR requires significantly more CPU/memory than wake word detection

- Higher Latency - ASR introduces delays unacceptable for always-listening scenarios

- Privacy Concerns - ASR records and transcribes everything to "find" the wake word

- Power Consumption - ASR drains battery quickly on mobile/IoT devices

Read more about why ASR shouldn't be used for wake word recognition.

Wake Word vs. Push-to-Talk

- Wake Word: Offers an accessible, truly hands-free experience and enables more natural interaction

- Push-to-Talk: Offers simpler implementation via physical or graphical button

On-Device vs. Cloud Wake Word Detection

One of the most critical architectural decisions in building voice AI products is where speech recognition runs. For wake word detection, the answer is straightforward: it should run locally on the device.

Otherwise, audio must be continuously streamed to cloud servers in order to detect the wake word in the cloud, leading to privacy, latency, reliability, scalability, and efficiency issues.

- Privacy - No audio sent to the cloud, meeting regulatory compliance

- Low Latency - Instant activation without network round-trip

- Reliability - No disruptions due to connectivity issues

- Scalability - No orchestration required for millions of concurrent streams

- Efficiency - No continuous audio upload, which would drain the battery of mobile devices
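To get a feel for the scale of always-on cloud streaming, here is a back-of-the-envelope calculation. It assumes uncompressed 16 kHz, 16-bit mono PCM, a common format for speech; a real deployment would likely compress the audio, so treat the result as an upper bound rather than a measurement.

```python
SAMPLE_RATE_HZ = 16_000          # assumption: 16 kHz mono audio
BYTES_PER_SAMPLE = 2             # assumption: 16-bit PCM, uncompressed
SECONDS_PER_DAY = 24 * 60 * 60


def streamed_bytes_per_day(
    sample_rate_hz: int = SAMPLE_RATE_HZ,
    bytes_per_sample: int = BYTES_PER_SAMPLE,
) -> int:
    """Bytes one always-listening device would upload in 24 hours."""
    return sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY


per_device = streamed_bytes_per_day()
print(f"{per_device / 1e9:.2f} GB per device per day")  # prints 2.76 GB per device per day
```

Multiplied across a fleet of devices, that volume is what the cloud side would have to ingest just to look for a wake word.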

Read more about wake word detection in the cloud or the advantages of on-device voice AI.

Choosing the Right Wake Word

Not all wake words are created equal. The phonetic properties of your chosen phrase significantly impact detection accuracy.

Good Wake Words:

- 2-4 syllables - Long enough to be unique, short enough to be natural

- Mix of vowels and consonants - Easier to detect acoustically

- Distinctive phonemes - Avoid common words that appear in conversations

- Easy to pronounce - Users shouldn't struggle

- Brand-appropriate - Reflects your brand identity

Examples of Good Wake Words:

- "Hey Siri" (3 syllables, clear phonemes)

- "Alexa" (3 syllables, distinctive)

- "OK Google" (4 syllables, unique combination)

- "Hey Mycroft" (3 syllables, uncommon word)

Pro Tip: Test multiple wake word candidates with your target demographic. What sounds natural to you might not work for users across different regions and languages.

For detailed guidance, see Picovoice's tips for choosing a wake word.

Multi-Language Considerations

If your application serves global markets, understanding language, dialect, and accent differences is crucial.

Consider:

- How the wake word sounds in different languages

- Accent variations within the same language

- Cultural appropriateness of the phrase

- Phonetic similarities across languages

Porcupine Wake Word can run multiple wake words across languages without additional runtime overhead, whether in German, French, Spanish, Italian, Japanese, Korean or Portuguese.

Measuring Wake Word Accuracy

Wake word benchmarks assess accuracy, efficiency, and robustness across various conditions, including different speakers, noisy environments, and diverse accents. Accuracy measures the system's ability to correctly identify the wake word when spoken while avoiding false activations from non-wake-word sounds:

- False Acceptance Rate (FAR): How often the system mistakes other speech or sounds for the wake word. It is typically reported as false acceptances per hour, i.e., how many false activations occur per hour of normal conversation or background audio.

Example: The user says "Seriously" and the engine treats it as the wake word "Siri".

- False Rejection Rate (FRR): How often the system fails to detect the wake word when it is actually spoken.

Example: The user says "Siri" and the engine doesn't wake the voice assistant.

Sensitivity: Modern wake word engines come with a sensitivity setting, which determines the trade-off between FAR and FRR. A higher sensitivity value gives a lower miss rate at the expense of a higher false alarm rate. This means one can set Porcupine's sensitivity to 1 and claim 100% accuracy (i.e., detection rate) without mentioning the FAR, so a detection rate is only meaningful when reported together with the FAR.

Learn more about benchmarking wake word systems and how ROC curves illustrate the trade-off between detection rate and false alarms.
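The trade-off can be made concrete with a small sweep over sensitivity values. This is a sketch on made-up scores, not benchmark data: it assumes each clip has already been reduced to a single 0..1 confidence score, and it maps sensitivity to a decision threshold as `1 - sensitivity` purely for illustration (real engines define their own mapping).

```python
def sweep(positive_scores, negative_scores, negative_hours, sensitivities):
    """Return (sensitivity, FRR, false alarms per hour) for each sensitivity.

    positive_scores: one score per clip that contains the wake word.
    negative_scores: one score per clip that does not contain it.
    negative_hours:  total duration of the negative audio, in hours.
    """
    rows = []
    for sensitivity in sensitivities:
        threshold = 1.0 - sensitivity  # assumed mapping, for illustration only
        missed = sum(1 for score in positive_scores if score < threshold)
        false_alarms = sum(1 for score in negative_scores if score >= threshold)
        rows.append(
            (sensitivity, missed / len(positive_scores), false_alarms / negative_hours)
        )
    return rows


# Made-up scores: four wake word clips and four non-wake-word clips (2 hours).
toy_positives = [0.9, 0.8, 0.6, 0.3]
toy_negatives = [0.1, 0.2, 0.45, 0.7]

for sensitivity, frr, fa_per_hour in sweep(toy_positives, toy_negatives, 2.0, [0.0, 0.5, 1.0]):
    print(f"sensitivity={sensitivity:.1f}  FRR={frr:.2f}  false alarms/hour={fa_per_hour:.2f}")
```

On this toy data, raising the sensitivity from 0.5 to 1.0 drops the miss rate from 25% to 0% while false alarms climb from 0.5 to 2 per hour. Plotting such pairs for many sensitivity values gives the ROC-style curve.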

Latency: Measures how fast the system can detect the wake word after the user finishes uttering the wake word.

Efficiency: Measures runtime utilization, such as CPU usage. Utilization depends on the platform and implementation.

Robustness: Measures the ability to detect the wake word in challenging conditions, such as noisy backgrounds and multi-speaker environments.

Picovoice's open-source wake word benchmark includes a test dataset with noise data representing 18 different environments.
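One common way to build noisy test clips is to mix clean speech with recorded noise at a chosen signal-to-noise ratio (SNR). The sketch below shows that general technique on plain Python lists of samples. It is not taken from the Picovoice benchmark code, and `mix_at_snr` is a hypothetical helper name.

```python
import math


def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so the speech-to-noise power ratio equals `snr_db`, then add it."""
    if len(noise) < len(speech):
        raise ValueError("noise must be at least as long as speech")
    noise = noise[: len(speech)]

    speech_power = sum(s * s for s in speech) / len(speech)
    noise_power = sum(n * n for n in noise) / len(noise)
    if noise_power == 0:
        raise ValueError("noise is silent; cannot scale it to a target SNR")

    # SNR(dB) = 10 * log10(speech_power / noise_power), solved for the noise power.
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    gain = math.sqrt(target_noise_power / noise_power)
    return [s + gain * n for s, n in zip(speech, noise)]
```

Running the same wake word clips through several SNR levels (for example 20, 10, and 0 dB) shows how quickly the miss rate degrades as the environment gets noisier.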

Test data is an important factor in measured accuracy. Benchmarking against data the model was trained on hides overfitting, and the diversity of the test data (for example, whether it includes words that sound similar to the wake word) significantly affects the results.

Picovoice crowdsourced its wake word benchmark test data, open-sourced it, and curated the most well-known open-source keyword spotting speech corpora.

Benchmarking Wake Words

When evaluating wake word solutions, insist on:

- Transparent methodology - How was the data collected?

- Real-world test conditions - Noise, reverberation, accents

- Independent verification - Can you reproduce the results?

- Fair comparisons - Same test data for all engines
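A fair comparison mostly comes down to feeding every engine the exact same labeled clips and counting errors the same way. A minimal harness might look like the sketch below. The `benchmark` function and the `engines` mapping of names to detector callables are hypothetical; wiring real engines into that callable interface is left out.

```python
def benchmark(engines, clips):
    """Run every engine over the same labeled clips.

    engines: dict mapping an engine name to a callable(clip) -> bool.
    clips:   list of (clip, has_wake_word) pairs shared by all engines.
    """
    positives = [clip for clip, has_wake_word in clips if has_wake_word]
    negatives = [clip for clip, has_wake_word in clips if not has_wake_word]

    report = {}
    for name, detect in engines.items():
        misses = sum(1 for clip in positives if not detect(clip))
        false_alarms = sum(1 for clip in negatives if detect(clip))
        report[name] = {
            "frr": misses / len(positives),
            "false_alarms": false_alarms,
        }
    return report
```

Because the clip list is built once and shared, any difference in the report comes from the engines rather than from the data each one happened to see.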

Read more about Wake Word Benchmarks: How to Verify Vendor Claims.

Implementation Guide

Ready to add wake word detection to your application? Here's how to get started.

Step 1: Choose Your Wake Word Engine

Commercial Options:

- Porcupine Wake Word - Enterprise solution, robust to noise and ready to deploy in seconds across platforms

- Sensory TrulyHandsfree - Enterprise solution with a long track record

- SoundHound Houndify - Enterprise solution offering two wake word tiers: proof-of-concept (low-cost version, delivered in weeks) and production-grade

Open Source Options:

- openWakeWord - Actively maintained open-source solution with good accuracy; requires ML knowledge to train custom wake words

- Snowboy (deprecated) - No longer maintained

- PocketSphinx - Legacy CMU solution

Recommendation: Porcupine Wake Word offers the best balance of accuracy, ease of use, and cross-platform support.

Step 2: Create Your Custom Wake Word

If your choice is Porcupine Wake Word, you can create custom wake words in seconds:

- Log in to Picovoice Console

- Navigate to Porcupine Wake Word

- Type your desired wake word phrase

- Click "Train" - model is generated in seconds

- Download the model file for your target platform

No data collection, no ML expertise required. See this tutorial or watch this video for a detailed walkthrough.

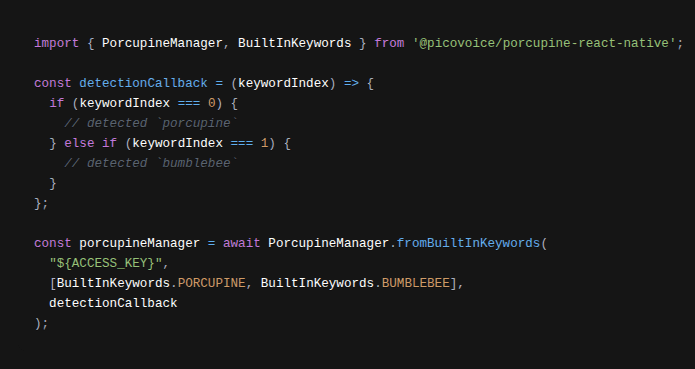

Step 3: Integrate into Your Application

Porcupine provides SDKs for every major platform:

- Mobile: iOS, Android, React Native, Flutter

- Web: JavaScript, React

- Desktop: Windows, macOS, Linux

- Embedded: Raspberry Pi, Microcontrollers, Arduino

- Languages: Python, Node.js, Java, C, .NET

Basic Python Example (with pvporcupine):
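The sketch below shows one way such an integration could look, assuming the `pvporcupine` and `pvrecorder` packages are installed. The detection loop is kept separate from the SDK calls so it can be exercised without a microphone. `listen` and `main` are illustrative names, and the access key and keyword file path are placeholders you would replace with your own values from Picovoice Console.

```python
def listen(engine, read_frame, on_detect, max_frames=None):
    """Feed audio frames to `engine.process` and report detections.

    Runs until `max_frames` frames have been processed, or forever if None.
    """
    processed = 0
    while max_frames is None or processed < max_frames:
        keyword_index = engine.process(read_frame())
        if keyword_index >= 0:  # process() returns -1 when nothing is detected
            on_detect(keyword_index)
        processed += 1


def main(access_key, keyword_path):
    # Imported here so `listen` can be tested without the SDKs installed.
    import pvporcupine
    from pvrecorder import PvRecorder

    porcupine = pvporcupine.create(
        access_key=access_key,         # placeholder: your Picovoice AccessKey
        keyword_paths=[keyword_path],  # placeholder: model file downloaded in Step 2
    )
    recorder = PvRecorder(frame_length=porcupine.frame_length, device_index=-1)
    recorder.start()
    try:
        listen(porcupine, recorder.read, lambda i: print(f"Detected keyword #{i}"))
    except KeyboardInterrupt:
        pass  # Ctrl+C stops the loop
    finally:
        # Release the microphone and the engine's native resources.
        recorder.stop()
        recorder.delete()
        porcupine.delete()
```

Calling `main("YOUR_ACCESS_KEY", "path/to/your-wake-word.ppn")` starts listening on the default microphone. Check the SDK documentation for the exact parameters supported by the version you install.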

Step 4: Test and Optimize

- Test in realistic noise conditions

- Test with various speakers and accents

- Adjust sensitivity threshold as needed

- Monitor FRR and FAR in production

Platform-Specific Tutorials

Choose your platform to get started with wake word detection:

Wake Word Detection for Web Applications

- How to Add Custom Wake Words to Any Web App

- JavaScript Speech Recognition Guide

- React Speech Recognition Tutorial

- Wake Word Detection with Next.js

- Wake Word Detection with React.js

- Voice AI Browser Extension

- Offline Voice AI in Web Browsers

Wake Word Detection for Desktop Applications

- Python Wake Word Detection Tutorial

- Python Speech Recognition

- Adding Voice Controls to Chess in .NET

- Voice-Enabling .NET Desktop Applications

- Voice AI Linux Assistant with Python

Wake Word Detection for Mobile Applications

Embedded & IoT

- Speech Recognition on Raspberry Pi

- Arduino Voice Recognition in 10 Minutes

- Offline Voice Assistant on STM32 Microcontroller

- Keyword Spotting on Microcontrollers

Advanced Implementations

- On-Device LLM-Powered Voice Assistant

- Local LLM-Powered Voice Assistant for Web Browsers

- AI Voice Assistant for iOS with Local LLM

- AI Voice Assistant for Android with Local LLM

If you enjoy watching videos, check out the Porcupine playlist on YouTube for video tutorials.

Use Cases and Applications

Wake word detection enables voice interfaces across countless applications:

Smart Home & IoT

Voice-controlled lights, thermostats, appliances, and home automation systems. Wake words enable hands-free control without requiring users to reach for their phone.

Automotive

In-car voice assistants for navigation, climate control, and entertainment. Wake words allow drivers to keep their hands on the wheel and eyes on the road.

Enterprise & Productivity

- Voice Picking in Warehouses

- Voice-controlled Vending Machine

- Hands-free Elevator Control

- QSR drive-thrus

Consumer Electronics

Emerging Applications

Best Practices

Wake word detection must run locally on the device for privacy, user experience, and resource utilization. However, using on-device wake word detection is just a starting point. We curated a list of best practices.

Privacy First

- Use on-device speech technologies when possible

- Provide clear visual/audio feedback when the software is listening

- Be transparent about data handling

User Experience

- Choose wake words that are easy to pronounce and remember

- Provide visual confirmation when the wake word is detected

- Set appropriate sensitivity to balance FRR and FAR

- Test with diverse user groups

- Support multiple wake words for different users/contexts

Performance Optimization

- Ensure the engine is lightweight enough that it doesn't affect the app's overall performance

- Use appropriate audio preprocessing (noise reduction, echo cancellation)

- Monitor real-world accuracy metrics

- Regularly update models based on user feedback

- Consider battery life on mobile/IoT devices

Testing & Monitoring

- Test in realistic noise environments

- Test with various accents and speaking styles

- A/B test different wake word options

- Monitor FRR and FAR in production

- Collect user feedback systematically

Cross-Platform Development

- Use SDKs that support your target platforms

- Maintain consistent wake word behavior across platforms

- Plan for offline scenarios

- Consider resource constraints on each platform

Check out 7 Voice AI Implementation Pitfalls to avoid common mistakes in your projects.

Community Projects & Inspiration

See what developers are building with wake word detection:

- Art Generator Using Voice Prompts with DALL-E

- ChatGPT AI Virtual Assistant in Python

- Open Source Keyword Spotting Data

Frequently Asked Questions

Can I change the "Alexa" or "Hey Google" wake words?

You can only use the official wake words on Amazon's or Google's smart speakers. However, if you're building your own device, such as a smart speaker, you can use Porcupine Wake Word to create custom wake words like "Jarvis" or any phrase you choose.

How accurate is wake word detection?

Accuracy varies by engine. Industry-leading solutions like Porcupine achieve <5% false rejection rate at 1 false acceptance per 10 hours. See the open-source wake word benchmark for detailed comparisons, and read more about how to evaluate vendors' accuracy claims and the nuances of benchmarking a wake word detection engine.

Does wake word detection work offline?

Yes, on-device wake word detection engines process voice data on the device without sending data to the cloud. On-device wake word systems don't require internet connectivity to function.

How much computational power is needed?

Modern wake word engines like Porcupine can run on devices as small as microcontrollers. Keyword spotting on microcontrollers demonstrates implementations on resource-constrained devices.

Can I detect multiple wake words simultaneously?

Yes, modern wake word engines, like Porcupine, support detecting multiple wake words concurrently with negligible additional resource usage.

How do I train a custom wake word?

You can train custom wake words in seconds by simply typing the phrase on Picovoice Console for free. No data collection or ML expertise required.

What languages are supported?

Porcupine supports wake word detection in English, Spanish, French, German, Italian, Japanese, Korean, Portuguese, and Chinese (Mandarin).

Is wake word detection always listening?

Yes, wake word engines continuously monitor audio. However, on-device solutions only process audio locally and don't send anything to the cloud until the wake word is detected. This preserves privacy while enabling always-on voice activation.

How much does it cost?

Pricing varies by provider. Porcupine offers a free plan for non-commercial projects, and a free trial for commercial use.

What's the difference between wake word detection and automatic speech recognition?

Wake word detection identifies a specific trigger phrase to activate the system. Automatic speech recognition (ASR) transcribes any speech into text. Wake word detection is much more lightweight and suitable for always-on scenarios.

Getting Started with Porcupine Wake Word

Ready to add wake word detection to your application? Try the Porcupine Wake Word Web Demo.

- Create custom wake words in seconds

- Cross-platform support (mobile, web, desktop, embedded)

- Industry-leading accuracy

- Processes data completely on the device

- Free plan and trial available

Quick Start Guides:

- Porcupine Wake Word Python Quick Start

- Porcupine Wake Word Android Quick Start

- Porcupine Wake Word Node.js Quick Start

- Porcupine Wake Word Flutter Quick Start

- Porcupine Wake Word Web Quick Start

- Porcupine Wake Word React Native Quick Start

Conclusion

Wake word detection has evolved from a Big Tech exclusive to an accessible technology that any developer can implement. Modern on-device solutions provide the accuracy of cloud-based systems while offering superior privacy, lower latency, and offline functionality. Whether you're building a smart home device, automotive interface, mobile app, or enterprise voice assistant, wake word detection is the foundation of natural voice interaction.

Key Takeaways:

- Wake words enable hands-free voice activation

- On-device detection offers superior privacy, lower latency, and comparable or better performance than cloud solutions

- Select wake words with 2-4 syllables and distinctive phonemes

- Measure accuracy using FRR (false rejections) and FAR (false acceptances)

Start building your voice-enabled application today with Porcupine Wake Word Detection.
